Windows Azure and Cloud Computing Posts for 1/4/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

•• Updated 1/7/2012 1:30 PM PST with new articles marked •• by the SQL Server Team

• Updated 1/6/2012 11:30 AM PST with new articles marked • by Jim O’Neil, Avkash Chauhan, Ilan Rabinovitch, Ashlee Vance, Beth Massi, Mary Jo Foley, Maarten Balliauw, Ralph Squillace, SearchCloudComputing, Himanshu Singh, Larry Franks, Cihan Biyikoglu, Andrew Brust and Me.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, EAI Bridge and Cache

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Andrew Brust (@andrewbrust) offered Hadoop on Azure: First Impressions in his Redmond Roundup post of 1/6/2012:

In the last Roundup, I mentioned that I was admitted to the early preview CTP of Microsoft's Project "Isotope" Hadoop on Azure initiative. At the time, I had merely provisioned a Hadoop cluster. At this point, I've run queries from the browser, logged in over remote desktop to the cluster's head node, tooled around and connected to Hadoop data from four different Microsoft BI tools. So far, I'm impressed.

I've been saying for a while that Hadoop and Big Data aren't so far off in mission from enterprise BI tools. The problem is that the two technology areas have thus far existed in rather mutually-isolated states, when they should be getting mashed up. While a number of BI vendors have been pursuing that goal, it actually seems to be the entire underpinning of Microsoft's Hadoop initiative. That does lead to some anomalies. For instance, Microsoft's Hadoop distribution seems to exclude the Hbase NoSQL database, which is rather ubiquitous in the rest of the Hadoop world. But in general, the core Hadoop environment seems faithful and robust, provisioning a cluster is really simple and connectivity from Excel, PowerPivot, Analysis Services tabular mode and Reporting Services seems solid and smooth. I like what I'm seeing and am eager to see more. …

I’m a bit mystified by the missing Hbase, also. Andrew continues with observations about Nokia/Windows Phone 7 and Ultrabooks running Windows 7.

• Avkash Chauhan (@avkashchauhan) described Using Windows Azure Blob Storage (asv://) for input data and storing results in Hadoop Map/Reduce Job on Windows Azure in a 1/5/2012 post:

Microsoft[’s] distribution [of] Apache Hadoop [has] direct connectivity to cloud storage, i.e., Windows Azure Blob storage or Amazon S3. Here we will learn how to connect your Windows Azure Storage directly from your Hadoop Cluster.

To learn how to connect you[r] Hadoop Cluster to Windows Azure Storage, please read the following blog first:

After reading the above blog, please set up your Hadoop configuration to connect with Azure Storage and verify that the connection to Azure Storage is working. Before running a Hadoop job, be sure you understand the correct format for asv:// paths, shown below:

When you use Azure Storage for a job's input or output path, you must use the following format:

Input

asv://<container_name>/<symbolic_folder_name>

Example: asv://hadoop/input

Output

asv://<container_name>/<symbolic_folder_name>

Example: asv://hadoop/output

Note: If you use asv://<only_container_name>, the job will return an error.
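The path rule above can be captured in a small Python helper that splits an asv:// path into its container and folder parts and rejects container-only paths. This is a hypothetical sketch: the function name is an illustration, not part of Hadoop, and it only checks the string shape described in this post without contacting Azure Storage.

```python
from typing import Tuple


def split_asv_path(path: str) -> Tuple[str, str]:
    """Split asv://<container_name>/<symbolic_folder_name> into its parts.

    Hypothetical helper: raises ValueError for container-only paths such as
    asv://hadoop, which (per the post) make the Hadoop job fail.
    """
    prefix = "asv://"
    if not path.startswith(prefix):
        raise ValueError(f"not an asv:// path: {path!r}")
    container, sep, folder = path[len(prefix):].partition("/")
    folder = folder.strip("/")
    if not container or not sep or not folder:
        raise ValueError(f"expected asv://<container>/<folder>, got {path!r}")
    return container, folder


print(split_asv_path("asv://hadoop/input"))   # ('hadoop', 'input')
print(split_asv_path("asv://hadoop/output"))  # ('hadoop', 'output')
```

Calling split_asv_path("asv://hadoop") raises ValueError, mirroring the job error described in the note.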

Next, let’s verify in Azure Storage that the input data is in the proper location.

The contents of the file helloworldblo[b].txt are as below:

This is Hello World I like Hello World Hello Country Hello World Love World World is Love Hello World

Now let’s run a simple WordCount Map/Reduce job that uses helloworldblob.txt as its input file and also stores its results in Azure Storage.

Job Command:

call hadoop.cmd jar hadoop-examples-0.20.203.1-SNAPSHOT.jar wordcount asv://hadoop/input asv://hadoop/output

Once the job completes, its results are written to asv://hadoop/output.

Opening part-r-00000 shows the results as below:

- Country 1

- Hello 5

- I 1

- Love 2

- This 1

- World 6

- is 2

- like 1
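To see where these counts come from, here is a minimal in-memory Python sketch of the map and reduce steps WordCount performs: the mapper emits (word, 1) for each whitespace-separated token, and the reducer sums the values per word. It illustrates the technique on the file contents shown above; it is not the Java code in hadoop-examples, and it runs locally rather than against asv://.

```python
from collections import defaultdict

# Contents of helloworldblob.txt as shown in the post.
TEXT = ("This is Hello World I like Hello World Hello Country Hello World "
        "Love World World is Love Hello World")


def map_phase(text):
    # Mapper: emit (word, 1) for every whitespace-separated token.
    for word in text.split():
        yield word, 1


def reduce_phase(pairs):
    # Shuffle + reduce: group the pairs by word and sum their counts.
    totals = defaultdict(int)
    for word, count in pairs:
        totals[word] += count
    return dict(totals)


counts = reduce_phase(map_phase(TEXT))
# Plain string sorting puts uppercase before lowercase, matching the order above.
for word in sorted(counts):
    print(word, counts[word])
```

The printed pairs match the contents of part-r-00000 listed above.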

Finally, the Azure HeadNode WebApp shows the following final output for the Hadoop job: …

See the original post for the detailed output.

• Avkash Chauhan (@avkashchauhan) added yet another member to his series with Apache Hadoop on Windows Azure: Connecting to Windows Azure Storage from Hadoop Cluster on 1/5/2012:

Microsoft[’s] distribution [of] Apache Hadoop [has] direct connectivity to cloud storage, i.e., Windows Azure Blob storage or Amazon S3. Here we will learn how to connect your Windows Azure Storage directly from your Hadoop Cluster.

As you know, access to Windows Azure Storage requires the following two things:

- Azure Storage Name

- Azure Storage Access Key

Using the above information, we create the following storage connection string:

DefaultEndpointsProtocol=https;AccountName=<Your_Azure_Blob_Storage_Name>;AccountKey=<Azure_Storage_Key>

Now we just need to set up the above information inside the Hadoop cluster configuration. To do that, open C:\Apps\Dist\conf\core-site.xml and include the following two parameters related to Azure Blob Storage access from the Hadoop cluster:

<property>
  <name>fs.azure.buffer.dir</name>
  <value>/tmp</value>
</property>
<property>
  <name>fs.azure.storageConnectionString</name>
  <value>DefaultEndpointsProtocol=https;AccountName=<YourAzureBlobStoreName>;AccountKey=<YourAzurePrimaryKey></value>
</property>

The above configuration sets up Azure Blob Storage within the Hadoop installation.

The asv:// scheme maps to https://<Azure_Blob_Storage_name>.blob.core.windows.net.
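To make that mapping concrete, here is a hedged Python sketch that parses a connection string of the form configured in fs.azure.storageConnectionString and turns an asv://<container>/<path> into the corresponding Blob service URL (https://<account>.blob.core.windows.net/<container>/<blob>). The function names and the account name myhadoopstore are hypothetical; inside the cluster this translation is done by the ASV file system Hadoop loads, not by code like this.

```python
from typing import Dict


def parse_connection_string(conn: str) -> Dict[str, str]:
    # Split "Key1=Value1;Key2=Value2;..." into a dict. partition("=") splits
    # only at the first "=", so base64 account keys ending in "=" survive.
    settings: Dict[str, str] = {}
    for part in conn.split(";"):
        if part.strip():
            key, _, value = part.partition("=")
            settings[key.strip()] = value.strip()
    return settings


def asv_to_https(asv_path: str, conn: str) -> str:
    # Map asv://<container>/<blob_path> onto the storage account's blob endpoint.
    prefix = "asv://"
    if not asv_path.startswith(prefix):
        raise ValueError(f"not an asv:// path: {asv_path!r}")
    settings = parse_connection_string(conn)
    scheme = settings.get("DefaultEndpointsProtocol", "https")
    account = settings["AccountName"]
    return f"{scheme}://{account}.blob.core.windows.net/{asv_path[len(prefix):]}"


# Hypothetical account name and key, for illustration only.
conn = "DefaultEndpointsProtocol=https;AccountName=myhadoopstore;AccountKey=abc123=="
print(asv_to_https("asv://hadoop/input/helloworldblob.txt", conn))
# https://myhadoopstore.blob.core.windows.net/hadoop/input/helloworldblob.txt
```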

Now let’s try to list the blobs in a specific container:

c:\apps\dist>hadoop fs -lsr asv://hadoop/input

-rwxrwxrwx 1 107 2012-01-05 05:52 /input/helloworldblob.txt

Let’s verify in Azure Storage that the results we received above are correct:

For example, to copy a file from the Hadoop cluster to Azure Storage, use the following command:

hadoop fs -copyFromLocal <Filename> asv://<Target_Container_Name>/<Blob_Name_or_samefilename>

Example:

c:\Apps>hadoop.cmd fs -copyFromLocal helloworld.txt asv://filefromhadoop/helloworldblob.txt

This uploads helloworld.txt to the container “filefromhadoop” as the blob “helloworldblob.txt”. To copy in the other direction, use -copyToLocal:

c:\Apps>hadoop.cmd fs -copyToLocal asv://hadoop/input/helloworldblob.txt helloworldblob.txt

This command downloads the helloworldblob.txt blob from Azure Storage and makes it available locally on the Hadoop cluster.

Please see [the original article] to learn more about the “hadoop fs” command.

• Ashlee Vance (@valleyhack) asserted “The startup's competitions lure PhDs and whiz kids to solve companies' data problems” in a deck for his Kaggle's Contests: Crunching Numbers for Fame and Glory article of 1/4/2012 for Bloomberg Business Week:

A couple years ago, Netflix held a contest to improve its algorithm for recommending movies. It posted a bunch of anonymized information about how people rate films, then challenged the public to best its own Cinematch algorithm by 10 percent. About 51,000 people in 186 countries took a crack at it. (The winner was a seven-person team that included scientists from AT&T Labs.) The $1 million prize was no doubt responsible for much of the interest. But the fervor pointed to something else as well: The world is full of data junkies looking for their next fix.

In April 2010, Anthony Goldbloom, an Australian economist, decided to capitalize on that urge. He founded a company called Kaggle to help businesses of any size run Netflix-style competitions. The customer supplies a data set, tells Kaggle the question it wants answered, and decides how much prize money it’s willing to put up. Kaggle shapes these inputs into a contest for the data-crunching hordes. To date, about 25,000 people—including thousands of PhDs—have flocked to Kaggle to compete in dozens of contests backed by Ford, Deloitte, Microsoft, and other companies. The interest convinced investors, including PayPal co-founder Max Levchin, Google Chief Economist Hal Varian, and Web 2.0 kingpin Yuri Milner, to put $11 million into the company in November.

The startup’s growth corresponds to a surge in Silicon Valley’s demand for so-called data scientists, who are able to pull business and technical insights out of mounds of information. Big Web shops like Facebook and Google use these scientists to refine advertising algorithms. Elsewhere, they’re revamping how retailers promote goods and helping banks detect fraud.

Big companies have sucked up the majority of the information all-stars, leaving smaller outfits scrambling. But Goldbloom, who previously worked at the Reserve Bank of Australia and the Australian Treasury, contends there are plenty of bright data geeks willing to work on tough problems. “There is not a lack of talent,” he says. “It’s just that the people who tend to excel at this type of work aren’t always that good at communicating their talents.”

One way to find them, Goldbloom believes, is to make Kaggle into the geek equivalent of the Ultimate Fighting Championship. Every contest has a scoreboard. Math and computer science whizzes from places like IBM and the Massachusetts Institute of Technology tend to do well, but there are some atypical participants, including glaciologists, archeologists, and curious undergrads. Momchil Georgiev, for instance, is a senior software engineer at the National Oceanic and Atmospheric Administration. By day he verifies weather forecast data. At night he turns into “SirGuessalot” and goes up against more than 500 people trying to predict what day of the week people will visit a supermarket and how much they’ll spend. (The sponsor is dunnhumby, an adviser to grocery chains like Tesco.) “To be honest, it’s gotten a little bit addictive,” says Georgiev.

Eric Huls, a vice-president at Allstate, says many of his company’s math whizzes have been drawn to Kaggle. “The competition format makes Kaggle unique compared to working within the context of a traditional company,” says Huls. “There is a good deal of pride and prestige that comes with objectively having bested hundreds of other people that you just can’t find in the workplace.”

Allstate decided to piggyback on Kaggle’s appeal and last July offered a $10,000 prize to see if it could improve the way it prices automobile insurance policies. In particular, the company wanted to examine if certain characteristics of a car made it more likely to be involved in an accident that resulted in a bodily injury claim. Allstate turned over two years’ worth of data that included variables like a car’s horsepower, size, and number of cylinders, and anonymized accident histories. “This is not a new problem, but we were interested to see if the contestants would approach it differently than we have traditionally,” Huls says. “We found the best models in the competition did improve upon the models we built internally.” …

• Avkash Chauhan (@avkashchauhan) continued his series with Apache Hadoop on Windows Azure: How Hadoop cluster was setup on Windows Azure on 1/4/2012:

Once you provide the following information to set up your Hadoop cluster in Azure:

- Cluster DNS Name

- Type of Cluster

  - Small – 4 Nodes – 2 TB disk space

  - Medium – 8 Nodes – 4 TB disk space

  - Large – 16 Nodes – 8 TB disk space

  - Extra Large – 32 Nodes – 16 TB disk space

- Cluster login name and Password
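Each step up in the size list doubles both the node count and the disk space, so every tier works out to the same 0.5 TB per node. That per-node figure is derived arithmetic, not something the post states, and the actual per-role disk allocation may differ. A quick Python check using only the listed numbers:

```python
# Cluster sizes as listed in the post: name -> (node count, total disk in TB).
CLUSTER_SIZES = {
    "Small": (4, 2),
    "Medium": (8, 4),
    "Large": (16, 8),
    "Extra Large": (32, 16),
}

for name, (nodes, disk_tb) in CLUSTER_SIZES.items():
    # Derived value: total disk divided evenly across the nodes.
    print(f"{name}: {nodes} nodes, {disk_tb} TB total, {disk_tb / nodes} TB per node")
```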

The cluster setup process configure[s] your cluster depend[ing] on your settings, and finally you get your cluster ready to accept Hadoop Map/Reduce Jobs.

If you want to understand how the head node and worker nodes were set up internally, here is some information for you:

The head node is actually a running Windows Azure web role. You will find the head node details … below:

- Isotope HeadNode JobTracker: port 9010

- Isotope HeadNode JobTrackerWebApp: port 50030 (Hadoop Map/Reduce Job Tracker)

- Isotope HeadNode NameNode: port 9000

- Isotope HeadNode NameNodeWebApp: port 50070 (NameNode management)

- ODBC/HiveServer: port 10000

- FTP Server: port 2226

- IsotopeJS interactive JavaScript console: port 8443

The worker node is actually a worker role with an endpoint that communicates directly with the HeadNode web role. Here are some details that are important to you:

Isotope WorkerNode: X instances are created, depending on your cluster setup.

For example, a Small cluster uses 4 nodes; in that case the worker nodes look like this:

- IsotopeWorkerNode_In_0

- IsotopeWorkerNode_In_1

- IsotopeWorkerNode_In_2

- IsotopeWorkerNode_In_3

Each worker node gets its own IP address, and the following two ports are used for the node's own task tracker and for HDFS management:

- http://<WorkerNodeIPAddress_X>:50060/tasktracker.jsp - Task Tracker

- http://<WorkerNodeIPAddress_X>:50075/ - HDFS (DataNode)
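The naming and port pattern above is regular enough to generate. This hedged Python sketch builds the instance name and the two per-node web UI URLs for each worker, following the IsotopeWorkerNode_In_<n> names and ports 50060/50075 from the post. The IP addresses are hypothetical placeholders; on a real cluster you would read them from the NameNode summary page.

```python
from typing import Dict, List


def worker_endpoints(ip_addresses: List[str]) -> List[Dict[str, str]]:
    # One entry per worker instance, numbered from 0 as in the list above.
    endpoints = []
    for index, ip in enumerate(ip_addresses):
        endpoints.append({
            "instance": f"IsotopeWorkerNode_In_{index}",
            "tasktracker": f"http://{ip}:50060/tasktracker.jsp",
            "datanode": f"http://{ip}:50075/",
        })
    return endpoints


# Hypothetical private addresses for a Small (4-node) cluster.
for ep in worker_endpoints(["10.0.0.4", "10.0.0.5", "10.0.0.6", "10.0.0.7"]):
    print(ep["instance"], ep["tasktracker"], ep["datanode"])
```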

If you log in remotely to your cluster and check the NameNode summary at http://localhost:50070/dfshealth.jsp, you will see the exact same worker node IP addresses as described here:

http://localhost:50070/dfshealth.jsp

If you look at the C:\Resources\<GUID>.IsotopeHeadNode_IN_0.xml file, you will learn more about these details. This XML file is the same kind of file you find on any Web or Worker Role, and its configuration will help you a lot in this regard.

CloudTweaks asserted “Open source “Big Data” cloud computing platform powers millions of compute-hours to process exabytes of data for Amazon.com, AOL, Apple, eBay, Facebook, foursquare, HP, IBM, LinkedIn, Microsoft, Netflix, The New York Times, Rackspace, Twitter, Yahoo!, and more” in an introduction to an Apache Hadoop v1.0: Open Source “Big Data” Cloud Computing Platform Powers Millions… news release on 1/4/2012:

The Apache Software Foundation (ASF), the all-volunteer developers, stewards, and incubators of nearly 150 Open Source projects and initiatives, today announced Apache™ Hadoop™ v1.0, the Open Source software framework for reliable, scalable, distributed computing. The project’s latest release marks a major milestone six years in the making, and has achieved the level of stability and enterprise-readiness to earn the 1.0 designation.

A foundation of Cloud computing and at the epicenter of “big data” solutions, Apache Hadoop enables data-intensive distributed applications to work with thousands of nodes and exabytes of data. Hadoop enables organizations to more efficiently and cost-effectively store, process, manage and analyze the growing volumes of data being created and collected every day. Apache Hadoop connects thousands of servers to process and analyze data at supercomputing speed.

“This release is the culmination of a lot of hard work and cooperation from a vibrant Apache community group of dedicated software developers and committers that has brought new levels of stability and production expertise to the Hadoop project,” said Arun C. Murthy, Vice President of Apache Hadoop. “Hadoop is becoming the de facto data platform that enables organizations to store, process and query vast torrents of data, and the new release represents an important step forward in performance, stability and security.”

“Originating with technologies developed by Yahoo, Google, and other Web 2.0 pioneers in the mid-2000s, Hadoop is now central to the big data strategies of enterprises, service providers, and other organizations,” wrote James Kobielus in the independent Forrester Research, Inc. report, “Enterprise Hadoop: The Emerging Core Of Big Data” (October 2011).

Dubbed a “Swiss army knife of the 21st century” and named “Innovation of the Year” by the 2011 Media Guardian Innovation Awards, Apache Hadoop is widely deployed at organizations around the globe, including industry leaders from across the Internet and social networking landscape such as Amazon Web Services, AOL, Apple, eBay, Facebook, Foursquare, HP, LinkedIn, Netflix, The New York Times, Rackspace, Twitter, and Yahoo!. Other technology leaders such as Microsoft and IBM have integrated Apache Hadoop into their offerings. Yahoo!, an early pioneer, hosts the world’s largest known Hadoop production environment to date, spanning more than 42,000 nodes. [Microsoft emphasis added.]

“Achieving the 1.0 release status is a momentous achievement from the Apache Hadoop community and the result of hard development work and shared learnings over the years,” said Jay Rossiter, senior vice president, Cloud Platform Group at Yahoo!. “Apache Hadoop will continue to be an important area of investment for Yahoo!. Today Hadoop powers every click at Yahoo!, helping to deliver personalized content and experiences to more than 700 million consumers worldwide.”

“Apache Hadoop is in use worldwide in many of the biggest and most innovative data applications,” said Eric Baldeschwieler, CEO of Hortonworks. “The v1.0 release combines proven scalability and reliability with security and other features that make Apache Hadoop truly enterprise-ready.”

“Gartner is seeing a steady increase in interest in Apache Hadoop and related “big data” technologies, as measured by substantial growth in client inquiries, dramatic rises in attendance at industry events, increasing financial investments and the introduction of products from leading data management and data integration software vendors,” said Merv Adrian, Research Vice President at Gartner, Inc. “The 1.0 release of Apache Hadoop marks a major milestone for this open source offering as enterprises across multiple industries begin to integrate it into their technology architecture plans.”

Apache Hadoop v1.0 reflects six years of development, production experience, extensive testing, and feedback from hundreds of knowledgeable users, data scientists, and systems engineers, bringing a highly stable, enterprise-ready release of the fastest-growing big data platform.

It includes support for:

- HBase (sync and flush support for transaction logging)

- Security (strong authentication via Kerberos)

- Webhdfs (RESTful API to HDFS)

- Performance enhanced access to local files for HBase

- Other performance enhancements, bug fixes, and features

- All version 0.20.205 and prior 0.20.2xx features

“We are excited to celebrate Hadoop’s milestone achievement,” said William Lazzaro, Director of Engineering at Concurrent Computer Corporation. “Implementing Hadoop at Concurrent has enabled us to transform massive amounts of real-time data into actionable business insights, and we continue to look forward to the ever-improving iterations of Hadoop.”

“Hadoop, the first ubiquitous platform to emerge from the ongoing proliferation of Big Data and noSQL technologies, is set to make the transition from Web to Enterprise technology in 2012,” said James Governor, co-founder of RedMonk, “driven by adoption and integration by every major vendor in the commercial data analytics market. The Apache Software Foundation plays a crucial role in supporting the platform and its ecosystem.”

Availability and Oversight

As with all Apache products, Apache Hadoop software is released under the Apache License v2.0, and is overseen by a self-selected team of active contributors to the project. A Project Management Committee (PMC) guides the Project’s day-to-day operations, including community development and product releases. Apache Hadoop release notes, source code, documentation, and related resources are available at http://hadoop.apache.org/.

About The Apache Software Foundation (ASF)

Established in 1999, the all-volunteer Foundation oversees nearly one hundred fifty leading Open Source projects, including Apache HTTP Server — the world’s most popular Web server software. Through the ASF’s meritocratic process known as “The Apache Way,” more than 350 individual Members and 3,000 Committers successfully collaborate to develop freely available enterprise-grade software, benefiting millions of users worldwide: thousands of software solutions are distributed under the Apache License; and the community actively participates in ASF mailing lists, mentoring initiatives, and ApacheCon, the Foundation’s official user conference, trainings, and expo.

For more information, visit http://www.apache.org/.

Source: Apache


SQL Azure Database and Reporting

•• Christina Storm of the SQL Azure Team (@chrissto) recommended that you Get your SQL Server database ready for SQL Azure! in a 1/6/2012 post to the Data Platform Insider blog:

One of our lab project teams was pretty busy while the rest of us were taking a break between Christmas and New Year’s here in Redmond. On January 3rd, their new lab went live: Microsoft Codename "SQL Azure Compatibility Assessment". This lab is an experimental cloud service targeted at database developers and admins who are considering migrating existing SQL Server databases into SQL Azure databases and want to know how easy or hard this process is going to be. SQL Azure, as you may already know, is a highly available and scalable cloud database service delivered from Microsoft’s datacenters. This lab helps in getting your SQL Server database cloud-ready by pointing out schema objects which are not supported in SQL Azure and need to be changed prior to the migration process. So if you are thinking about the cloud again coming out of a strong holiday season where some of your on-premises databases were getting tough to manage due to increased load, this lab may be worth checking out.

There are two steps involved in this lab:

- You first need to use SQL Server Data Tools (SSDT) CTP4 to generate a .dacpac file from the database you’d like to check. SQL Server 2005, 2008, 2008 R2, and 2012 (CTP or RC0) are supported.

- Next, you upload your .dacpac to the lab cloud service, which returns an assessment report, listing the schema objects that need to change before you can move that database to SQL Azure.

You can find more information on the lab page for this project and in the online documentation. A step-by-step video tutorial walks you through the process. Of course, we would love to hear feedback from you!

And we’re always interested in suggestions or ideas for other things you’d like to see on http://www.sqlazurelabs.com. You can use the feedback buttons on that page and send us a note, or visit http://www.mygreatwindowsazureidea.com/ and enter a new idea into any of the specific voting forums that are available there. There’s a dedicated voting forum for SQL Azure and many of its subareas. You can also place a vote for ideas that were posted if you spot something that matters to you. We appreciate your input! Follow @SQLAzureLabs on Twitter for news about new labs.

• Cihan Biyikoglu updated his Introduction to Fan-out Queries: Querying Multiple Federation Members with Federations in SQL Azure post of 12/29/2011 with code fixes on 1/4/2012:

Happy 2012 to all of you! 2011 has been a great year. We now have federations live in production with SQL Azure, so let’s chat about fan-out querying.

Many applications will need to query data across all federation members. With version 1 of federations, SQL Azure does not yet provide much help on the server side for this scenario. In this post, I’d like to show you how you can implement this and what tricks you may need when post-processing the results of your fan-out queries. Let’s drill in.

Fanning-out Queries

Fan-out simply means taking the query to execute and executing that on all members. Fan-out queries can handle cases where you’d like to union the results from members or when you want to run additive aggregations across your members such as MAX or COUNT.

The mechanics of executing these queries over multiple members are fairly simple. The Federations-Utility sample application demonstrates how a simple fan-out can be done on its Fan-out Query Utility page. The application code is available for viewing here. The app fans out a given query to all members of a federation. Here is the deployed version:

http://federationsutility-scus.cloudapp.net/FanoutQueryUtility.aspx

Help on how to use it is available on the page. The action starts with the click event for the “Submit Fanout Query” button. Let’s quickly walk through the code first:

- First the connection is opened and three federation properties are initialized for constructing the USE FEDERATION statement: federation name, federation key name and the minimum value to take us to the first member.

// open connection
cn_sqlazure.Open();

// get federation properties
str_federation_name = txt_federation_name.Text.ToString();

// get distribution (federation key) name
cm_federation_key_name.Parameters["@federationname"].Value = str_federation_name;
str_federation_key_name = cm_federation_key_name.ExecuteScalar().ToString();

cm_first_federation_member_key_value.Parameters["@federationname"].Value = str_federation_name;
cm_next_federation_member_key_value.Parameters["@federationname"].Value = str_federation_name;

// start from the first member with the absolute minimum value
str_next_federation_member_key_value = cm_first_federation_member_key_value.ExecuteScalar().ToString();

- In the loop, the app constructs and executes the USE FEDERATION routing statement from the above three properties and, on each iteration, connects to the next member in line.

// construct command to route to next member
cm_routing.CommandText = string.Format("USE FEDERATION {0}({1}={2}) WITH RESET, FILTERING=OFF", str_federation_name, str_federation_key_name, str_next_federation_member_key_value);

// route to the next member
cm_routing.ExecuteNonQuery();

- Once the connection to the member is established, the query is executed through the DataAdapter.Fill method. The great thing about DataAdapter.Fill is that it automatically appends or merges the rows into the DataSet's DataTables, so as we iterate over the members there is no additional work to do in the DataSet.

// get results into dataset
da_adhocsql.Fill(ds_adhocsql);

- Once the query has finished executing in the current member, the app grabs the range_high of the current federation member. In the next iteration, the app uses this value to navigate to the next federation member. The value is discovered through “select cast(range_high as nvarchar) from sys.federation_member_distributions”.

// get the value to navigate to the next member
str_next_federation_member_key_value = cm_next_federation_member_key_value.ExecuteScalar().ToString();

- The loop condition simply keeps looping until the range_high value returns NULL; ExecuteScalar returns DBNull.Value for a NULL, and its ToString() is an empty string.

while (str_next_federation_member_key_value != String.Empty);

Fairly simple!
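The loop walked through above can be modeled without a database. In this hedged Python sketch, each federation member is an in-memory object covering a [range_low, range_high) key range; route() stands in for USE FEDERATION, extending the result list stands in for DataAdapter.Fill, and a None range_high stands in for the NULL that ends the loop. All names are hypothetical; this is not the Federations-Utility code or a SQL Azure API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Member:
    # Stand-in for one federation member database.
    range_low: int
    range_high: Optional[int]  # None plays the role of NULL on the last member
    rows: List[dict] = field(default_factory=list)


def route(members: List[Member], key: int) -> Member:
    # Like USE FEDERATION f(key = value): pick the member whose range holds the key.
    for m in members:
        if m.range_low <= key and (m.range_high is None or key < m.range_high):
            return m
    raise KeyError(key)


def fan_out(members: List[Member], query: Callable[[Member], List[dict]]) -> List[dict]:
    results: List[dict] = []
    key: Optional[int] = min(m.range_low for m in members)  # absolute minimum value
    while key is not None:
        member = route(members, key)
        results.extend(query(member))  # append this member's rows, like Fill
        key = member.range_high        # a NULL range_high ends the loop
    return results


members = [
    Member(0, 100, [{"blog_id": 1, "title": "Azure tips"}]),
    Member(100, None, [{"blog_id": 150, "title": "Hadoop on Azure"}]),
]
rows = fan_out(members, lambda m: [r for r in m.rows if "Azure" in r["title"]])
print([r["blog_id"] for r in rows])  # [1, 150]
```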

What can you do with this tool? You can use it for schema deployments to federations. To deploy a schema to all my federation members, I simply put a DDL statement in the query window, and the tool runs it in all federation members:

DROP TABLE language_code_tbl;

CREATE TABLE language_code_tbl(

id bigint primary key,

name nchar(256) not null,

code nchar(256) not null);

I can also maintain reference data in federation members with this tool: simply do the necessary CRUD to get the data into a new shape, or simply delete and reinsert the language codes:

TRUNCATE TABLE language_code_tbl

INSERT INTO language_code_tbl VALUES(1,'US English','EN-US')

INSERT INTO language_code_tbl VALUES(2,'UK English','EN-UK')

INSERT INTO language_code_tbl VALUES(3,'CA English','EN-CA')

INSERT INTO language_code_tbl VALUES(4,'SA English','EN-SA')

Here is how to get the database names and database ids for all my federation members:

SELECT db_name(), db_id()

Here is what the output looks like from the tool;

Here are more ways to gather information from all federation members. This query captures information on the connections to all my federation members:

SELECT b.member_id, a.*

FROM sys.dm_exec_sessions a CROSS APPLY sys.federation_member_distributions b

…Or I can do maintenance by kicking off stored procedures in all federation members. For example, here is how to update statistics in all my federation members:

EXEC sp_updatestats

For querying user data, I can run queries that UNION ALL the results for me, such as the following query, where I get blog_ids and blog_titles for everyone who blogged about ‘Azure’ from my blogs_federation. By the way, you can find the full schema at the bottom of the original post under the title ‘Sample Schema’.

SELECT b.blog_id, b.blog_title

FROM blogs_tbl b JOIN blog_entries_tbl be

ON b.blog_id=be.blog_id

WHERE blog_entry_title LIKE '%Azure%'

I can also do aggregations, as long as the grouping involves the federation key. That way I know all the data that belongs to each group is in only one member. For the following query, my federation key is blog_id, and I am looking for the count of blog entries about ‘Azure’ per blog.

SELECT b.blog_id, COUNT(be.blog_entry_title), MAX(be.created_date)

FROM blogs_tbl b JOIN blog_entries_tbl be

ON b.blog_id=be.blog_id

WHERE be.blog_entry_title LIKE '%Azure%'

GROUP BY b.blog_id

However, if I would like a grouping that does not align with the federation key, there is work to do. Here is an example: I’d like the count of blog entries about ‘Azure’ per month, for entries created between August (month 8) and December (month 12) of any year.

SELECT DATEPART(mm, be.created_date), COUNT(be.blog_entry_title)

FROM blogs_tbl b JOIN blog_entries_tbl be

ON b.blog_id=be.blog_id

WHERE DATEPART(mm, be.created_date) between 8 and 12

AND be.blog_entry_title LIKE '%Azure%'

GROUP BY DATEPART(mm, be.created_date)

The output contains a whole bunch of separate counts for August to October (months 8 to 10) from different members. Here is what it looks like:
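Because COUNT is additive, those partial counts can be combined on the client by summing them per month. A minimal Python sketch of that post-processing step, using made-up per-member rows (in the post's app, the real rows would be in the DataSet filled by the fan-out loop):

```python
from collections import Counter

# Hypothetical per-member results of the month query: (month, partial_count).
member_results = [
    [(8, 3), (9, 1), (10, 4)],  # member 1
    [(8, 2), (10, 1)],          # member 2
    [(9, 5), (10, 2)],          # member 3
]

totals = Counter()
for rows in member_results:
    for month, partial_count in rows:
        totals[month] += partial_count  # COUNT is additive, so partials simply sum

for month in sorted(totals):
    print(month, totals[month])
```

The same pattern works for SUM, and MAX or MIN can be combined with max() or min() instead of addition; AVG cannot, unless each member returns its SUM and COUNT separately.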

How about something like the DISTINCT count of languages used for blog comments across our entire dataset? Here is the query;

SELECT DISTINCT bec.language_id

FROM blog_entry_comments_tbl bec JOIN language_code_tbl lc ON bec.language_id=lc.language_id

Each member returns its own distinct list, so the same language_id can appear once per member. ‘Order By’ and ‘Top’ clauses have similar issues. Here are a few examples. With ‘Order By’, I don’t get a fully sorted output. The ordering issue is very common, given that most apps run fan-out queries in parallel across all members. Here is a TOP 5 query that returns many more than 5 rows:

SELECT TOP 5 COUNT(*)

FROM blog_entry_comments_tbl

GROUP BY blog_id

ORDER BY 1

I added the CROSS APPLY to sys.federation_member_distributions to show you that the results come from various members, so the second column in the output is the RANGE_LOW of the member each result comes from.

The bad news is that there is post-processing to do on this result before I can use it. The good news is that there are many options that ease this type of processing. I’ll list a few here and explore them in more detail in the future:

- LINQ to DataSet offers a great option for querying datasets. Some examples here.

- ADO.NET DataSets and DataTables offer a number of options for processing GROUP BYs and aggregates. For example, DataColumn.Expression allows you to add aggregate expressions to your DataSet for this type of processing, or you can use DataTable.Compute to calculate a rollup value.

There are other options; some people go as far as sending the resulting DataSet back to SQL Azure for post-processing with a second T-SQL statement. This is certainly costly, but they argue T-SQL is more powerful.
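As a language-neutral illustration of that post-processing (the post itself suggests LINQ to DataSet or DataTable.Compute in .NET), here is a hedged Python sketch for the DISTINCT and TOP 5 cases, using hypothetical per-member results. The TOP merge is only valid because that query groups by blog_id, the federation key, so each count is complete within one member and the global TOP 5 must be among the members' TOP 5 rows.

```python
import heapq
from itertools import islice

# Hypothetical per-member results of SELECT DISTINCT bec.language_id ...
distinct_per_member = [[1, 2], [2, 3], [1, 4]]
# The same language_id can come back from several members; a set removes repeats.
all_languages = sorted(set().union(*distinct_per_member))
print(all_languages)  # [1, 2, 3, 4]

# Hypothetical per-member results of SELECT TOP 5 COUNT(*) ... GROUP BY blog_id ORDER BY 1.
# Each list is sorted, but only within its own member.
top5_per_member = [[1, 2, 4, 7, 9], [1, 3, 3, 8, 12], [2, 5, 6, 6, 10]]
# heapq.merge lazily merges already-sorted inputs into one sorted stream,
# so the first 5 items of the merged stream are the global TOP 5.
global_top5 = list(islice(heapq.merge(*top5_per_member), 5))
print(global_top5)  # [1, 1, 2, 2, 3]
```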

So far we have seen that it is fairly easy to iterate through the members and repeat the same query: this is fan-out querying. We saw a few sample queries with ADO.Net that don’t need any additional processing. Once grouping and aggregates are involved, there is some post-processing to do.

This post wouldn’t be complete without mentioning a class of aggregations that are harder to calculate: the non-additive aggregates. Here is an example; this query calculates a distinct count of languages in blog entry comments per month:

SELECT DATEPART(mm,created_date), COUNT(DISTINCT bec.language_id)

FROM blog_entry_comments_tbl bec JOIN language_code_tbl lc

ON bec.language_id=lc.language_id

GROUP BY DATEPART(mm,created_date)

The processing of DISTINCT is the issue here. Given this query, I cannot do any post-processing to calculate the correct distinct count across all members, because the same language can appear in several members, so the per-member counts cannot simply be added. Queries of this style lend themselves to centralized processing: the language ids of all comments for each month first need to be gathered from all members, and only then can the distinct count be calculated on that result set.

You would rewrite the T-SQL portion of the query like this:

SELECT DISTINCT DATEPART(mm,created_date), bec.language_id

FROM blog_entry_comments_tbl bec JOIN language_code_tbl lc

ON bec.language_id=lc.language_id

The output looks like this:

With the results of this query, I can do a DISTINCT COUNT grouped by month. If we take the DataTable from the above query, in T-SQL terms the post-processing query would look like this:

SELECT month, COUNT(DISTINCT language_id)

FROM DataTable

GROUP BY month

So to wrap up, fan-out queries are the way to execute queries across your federation members. With fan-out queries, there are two parts to consider: the part you execute in each federation member and the final phase where you collapse the results from all members into a single result set. Does this sound familiar? If you were thinking map/reduce, you got it right! The great thing about fan-out querying is that it can be done completely in parallel: all the member queries are executed by separate databases in SQL Azure. The downside is that in certain cases you now need to consider writing two queries instead of one, so there is some added complexity for some queries. In the future, I hope we can reduce that complexity. Please let me know if you have feedback about that and everything else in this post; just comment on this blog post or contact me through the link that says ‘contact the author’.
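The client-side phase of the non-additive example (member-side SELECT DISTINCT month, language_id, then COUNT(DISTINCT language_id) per month on the combined rows) can be sketched in Python as follows. This is a minimal illustration, not the author's ADO.Net code; the function name is hypothetical and each member's rows are assumed to be already fetched as (month, language_id) pairs. With the sample data below, adding the per-member distinct counts for August would give 2 + 1 = 3, while the correct answer is 2, because language 2 appears in both members.

```python
from collections import defaultdict
from typing import Iterable

# Phase 1 output: each member returns the rows of
#   SELECT DISTINCT DATEPART(mm, created_date), bec.language_id ...
# as (month, language_id) pairs.
Pair = tuple[int, int]


def distinct_languages_per_month(member_results: Iterable[list[Pair]]) -> dict[int, int]:
    """Phase 2: COUNT(DISTINCT language_id) per month across all members."""
    languages = defaultdict(set)
    for rows in member_results:
        for month, language_id in rows:
            # The set discards language ids already seen in another member.
            languages[month].add(language_id)
    return {month: len(ids) for month, ids in languages.items()}


members = [[(8, 1), (8, 2)], [(8, 2), (9, 3)]]
per_month = distinct_languages_per_month(members)
```

Here per_month is {8: 2, 9: 1}.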

Thanks and happy 2012!

*Sample Schema

Here is the schema I used for the sample queries above.

-- Connect to BlogsRUs_DB

CREATE FEDERATION Blogs_Federation(id bigint RANGE)

GO

USE FEDERATION blogs_federation (id=-1) WITH RESET, FILTERING=OFF

GO

CREATE TABLE blogs_tbl(

blog_id bigint not null,

user_id bigint not null,

blog_title nchar(256) not null,

created_date datetimeoffset not null DEFAULT getdate(),

updated_date datetimeoffset not null DEFAULT getdate(),

language_id bigint not null default 1,

primary key (blog_id)

)

FEDERATED ON (id=blog_id)

GO

CREATE TABLE blog_entries_tbl(

blog_id bigint not null,

blog_entry_id bigint not null,

blog_entry_title nchar(256) not null,

blog_entry_text nchar(2000) not null,

created_date datetimeoffset not null DEFAULT getdate(),

updated_date datetimeoffset not null DEFAULT getdate(),

language_id bigint not null default 1,

blog_style bigint null,

primary key (blog_entry_id,blog_id)

)

FEDERATED ON (id=blog_id)

GO

CREATE TABLE blog_entry_comments_tbl(

blog_id bigint not null,

blog_entry_id bigint not null,

blog_comment_id bigint not null,

blog_comment_title nchar(256) not null,

blog_comment_text nchar(2000) not null,

user_id bigint not null,

created_date datetimeoffset not null DEFAULT getdate(),

updated_date datetimeoffset not null DEFAULT getdate(),

language_id bigint not null default 1,

primary key (blog_comment_id,blog_entry_id,blog_id)

)

FEDERATED ON (id=blog_id)

GO

CREATE TABLE language_code_tbl(

language_id bigint primary key,

name nchar(256) not null,

code nchar(256) not null

)

GO

Avkash Chauhan (@avkashchauhan) recommended that you Assess your SQL Server to SQL Azure migration using SQL Azure Compatibility Assessment Tool by SQL Azure Labs in a 1/4/2012 post:

The SQL Azure team announced today the release of a new experimental cloud service, "SQL Azure Compatibility Assessment". This tool was created by SQL Azure Labs; if you are considering moving your SQL Server databases to SQL Azure, you can use this assessment service to check whether your database schema is compatible with SQL Azure grammar. The service is very easy to use and does not require a Windows Azure account.

"SQL Azure Compatibility Assessment" is an experimental cloud service. It’s aimed at database administrators who are considering migrating their existing SQL Server databases to SQL Azure. This service is super easy to use with just a Windows Live ID. Here are the steps:

- Generate a .dacpac file from your database using SQL Server Data Tools (SSDT) CTP4. You can either run SqlPackage.exe or import the database into an SSDT project and then build it to generate a .dacpac. SQL Server 2005, 2008, 2008 R2, 2012 (CTP or RC0) are all supported.

- Upload your .dacpac to the "SQL Azure Compatibility Assessment" cloud service and receive an assessment report, which lists the schema objects that are not supported in SQL Azure and that need to be fixed prior to migration.

Learn more about Microsoft Codename "SQL Azure Compatibility Assessment" in this great video:

Learn technical details about the "SQL Azure Compatibility Assessment" tool:

SqlPackage.exe is a command line utility that automates the following database development tasks:

- Extract: creates a database snapshot (.dacpac) file from a live SQL Azure database

- Publish: incrementally updates a database schema to match the schema of a source database

- Report: creates reports of the changes that result from publishing a database schema

- Script: creates a Transact-SQL script that publishes a database schema

I intend to use this new tool after I bulk upload my 10+ GB tab-separated text file to SQL Server 2008 R2 in preparation for a move to SQL Azure federations.


Marketplace DataMarket, Social Analytics and OData

• Robin Van Steenburgh posted Decoding image blobs obtained from OData query services by Arthur Greef on 1/5/2012:

This post on the OData Query Service is by Principal Software Architect Arthur Greef.

Images retrieved using OData query services are returned as base64-encoded containers. The following code decodes the image container into a byte array that contains just the image.

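using System;

using System.IO;

using System.Linq;

string base64EncodedString = "<place your base64 encoded blob here>";

byte[] serializedContainer = Convert.FromBase64String(base64EncodedString);

// If the container header is present (first three bytes 0x07, 0xFD, 0x30), skip the 7-byte header.
// Assumption: otherwise the decoded bytes are already the image, so write them unchanged instead of passing null.

byte[] imageBytes = serializedContainer;

if (serializedContainer.Length >= 7 && serializedContainer.Take(3).SequenceEqual(new byte[] { 0x07, 0xFD, 0x30 })) { imageBytes = serializedContainer.Skip(7).ToArray(); }

File.WriteAllBytes("<image file name here>", imageBytes);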
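For readers outside .NET, the same decoding logic can be sketched in Python. This mirrors the C# snippet (check the three signature bytes, then drop a 7-byte header). Treating a blob without the signature as an already-raw image is an assumption, and the function name is hypothetical.

```python
import base64

# Signature checked by the C# snippet: the first three bytes of the decoded
# container, which is followed by four more header bytes (7 bytes in total).
CONTAINER_SIGNATURE = bytes([0x07, 0xFD, 0x30])
HEADER_LENGTH = 7


def decode_image_container(encoded: str) -> bytes:
    """Return the raw image bytes from a base64-encoded OData image container."""
    data = base64.b64decode(encoded)
    if len(data) >= HEADER_LENGTH and data[:3] == CONTAINER_SIGNATURE:
        return data[HEADER_LENGTH:]
    # Assumption: without the signature, the payload is already the raw image.
    return data
```

To save the result, write it in binary mode, e.g. `open("image.png", "wb").write(decode_image_container(blob))`.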

Joe McKendrick asserted “Growing interest in the cloud, and a desire for more vendor independence, may drive REST deeper into the enterprise BPM space” as a deck for his REST will free business process management from its shackles: prediction article of 1/3/2012 for ZDNet’s ServiceOriented blog:

The REST protocol has been known for its ability to link Web processes in its lightweight way, but at least one analyst sees a role far deeper in the enterprise. This year, we’ll see REST increasingly deployed to pull together business processes.

ZapThink’s Jason Bloomberg predicts the rise of RESTful business process management, which will enable putting together workflows on the fly, without the intervention of heavyweight BPM engines.

People haven’t really been employing REST to its full potential, Jason says. REST is more than APIs; it’s an “architectural style for building hypermedia applications,” which are more than glorified Web sites. REST is a runtime workflow, he explains. It changes the way we might look at BPM tools, which typically have been “heavyweight, integration-centric tools that typically rely upon layers of infrastructure.”

“With REST, however, hypermedia become the engine of application state, freeing us from relying upon centralized state engines, instead allowing us to take full advantage of cloud elasticity, as well as the inherent simplicity of REST.”

The shift will take time, extending beyond 2012. But growing interest in the cloud, and a desire for more vendor independence, mean it’s time for RESTful BPM.

As goes REST, so goes OData.


Windows Azure Access Control, Service Bus, EAI Bridge, and Cache

• Ralph Squillace complained about Understanding Azure Namespaces, a bleg on 1/6/2012:

I've been busy recently writing sample applications that use Windows Azure Service Bus and Windows Azure web roles and Windows Azure (damn I'm tired of typing brand names now) Access Control Service namespaces. Each time I do, I either create a new namespace or, often, reuse namespaces for general services in what used to be called AppFabric: the Service Bus, Access Control, and Caching.

If I'm creating a set of applications, and all I need is access control to that application, I'll need to use an ACS namespace to configure that application as a relying party (which I would call a relying or dependent application, but I don't write the names). But do I use an ACS namespace that is already in use? Do I create a new ACS namespace just for that application? Or are there categories of people or clients that I can group into an ACS namespace, trusted by one or more applications LIKE those used by those people or clients?

I'm curious what you do, but I'm doing more work figuring out the best way to go here from the perspective of the real world. More soon.

• Richard Seroter (@rseroter) wrote Microsoft Previews Windows Azure Application Integration Services and InfoQ published it, together with an interview with Microsoft’s Itai Raz on 1/6/2012:

In late December 2011, Microsoft announced the pre-release of a set of services labeled Windows Azure Service Bus EAI Labs. These enhancements to the existing Windows Azure Service Bus make it easier to connect (cloud) applications through the use of message routing rules, protocol bridging, message transformation services and connectivity to on-premises line of business systems.

The Windows Azure Service Bus EAI software has three major components. The first is referred to as EAI Bridges. Bridges form a messaging layer between multiple applications and support content-based routing rules for choosing a message destination. While a Bridge hosted in Windows Azure can only receive XML messages via HTTP, it can send its XML output to HTTP endpoints, Service Bus Topics, Service Bus Queues or to other Bridges. Developers can use the multiple stages of an XML Bridge to validate messages against a schema, enrich them with reference data, or transform them from one structure to another.
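To illustrate what content-based routing means, here is a minimal Python sketch. It is not the EAI Bridge API (Bridges are configured declaratively with Visual Studio tooling); the rules, element names, and destination strings are all hypothetical. The sketch uses first-match semantics, and an actual Bridge may evaluate its filters differently, for example by sending a message to every matching destination.

```python
import xml.etree.ElementTree as ET
from typing import Callable

# A routing rule pairs a predicate over the parsed message with a destination.
Rule = tuple[Callable[[ET.Element], bool], str]


def route(message_xml: str, rules: list[Rule], default: str) -> str:
    """Return the destination of the first rule whose predicate matches."""
    root = ET.fromstring(message_xml)
    for predicate, destination in rules:
        if predicate(root):
            return destination
    return default


# Hypothetical rules: large orders go to a queue, EU orders to a topic.
rules: list[Rule] = [
    (lambda r: float(r.findtext("Total", "0")) > 1000, "queue:large-orders"),
    (lambda r: r.findtext("Region") == "EU", "topic:eu-orders"),
]
```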

Transforms are the second component of the Service Bus EAI Labs release and are targeted at developers who need to change the XML structure of data as it moves between applications. To create these transformations that run in Windows Azure, Microsoft is providing a visual XSLT mapping tool that is reminiscent of a similar tool that ships with Microsoft’s BizTalk Server integration product. However, this new XSLT mapper has a richer set of canned operations that developers can use to manipulate the XML data. Besides basic string manipulation and arithmetic operators, this mapping tool also provides more advanced capabilities like storing state in user-defined lists, and performing If-Then-Else assessments when deciding how to populate the destination message. There is not yet word from Microsoft on whether they will support the creation of custom functions for these Transforms.

The final major component of this Labs release is called Service Bus Connect. This appears to build on two existing Microsoft products: the Windows Azure Service Bus Relay Service and the BizTalk Adapter Pack. Service Bus Connect is advertised as a way for cloud applications to securely communicate with onsite line of business systems like SAP, Siebel, and Oracle E-Business Suite as well as SQL Server and Oracle data repositories. Developers create what are called Line of Business Relays that make internal business data or functions readily accessible via secure Azure Service Bus endpoints.

Microsoft has released a set of tools and templates for Microsoft Visual Studio that facilitate creation of Service Bus EAI solutions. A number of Microsoft MVPs authored blog posts that showed how to build projects based on each major Service Bus EAI component. Mikael Hakansson explained how to configure a Bridge that leveraged content-based routing, Kent Weare demonstrated the new XSLT mapping tool, and Steef-Jan Wiggers showed how to use Service Bus Connect to publicly expose an Oracle database.

…

The InfoQ article concludes with the Itai Raz interview. Thanks to Rick G. Garibay for the heads-up.

• Maarten Balliauw (@maartenballiauw) asked How do you synchronize a million to-do lists? in a 1/5/2012 post:

Not this question, but a similar one, was asked by one of our customers. An interesting question, isn’t it? Wait. It gets more interesting. I’ll sketch a fake scenario that’s similar to our customer’s question. Imagine you are building mobile applications to manage a simple to-do list. This software is available on Android, iPhone, iPad, Windows Phone 7 and via a web browser. One day, the decision to share to-do lists has been made. My wife and I should be able to share one to-do list, having an up-to-date version of the list on every device we grant access to this to-do list. Now imagine there are a million of those groups, where every partner in the sync relationship has the latest version of the list on his device, often in a disconnected world.

My take: Windows Azure Service Bus Topics & Subscriptions

According to the Windows Azure Service Bus product description, it “implements a publish/subscribe pattern that delivers a highly scalable, flexible, and cost-effective way to publish messages from an application and deliver them to multiple subscribers.” Interesting. I’m not going into the specifics of it (maybe in a future post), but the Windows Azure Service Bus gave me an idea: why not put all actions (add an item, complete a to-do) on a queue, tagged with the appropriate “group” metadata? Here’s the producer side:

On the consumer side, our devices are listening as well. Every device creates its subscription on the service bus topic. These subscriptions are named per device and filtered on the SyncGroup metadata. The Windows Azure Service Bus will take care of duplicating messages to every subscription as well as keeping track of messages that have not been processed: if I’m offline, messages are queued. If I’m online, I receive messages targeted at my device:

The only limitation to this is keeping the number of topics & subscriptions below the limits of Windows Azure Service Bus. But even then: if I just make sure every sync group is on the same bus, I can scale out over multiple service buses.
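The topic/subscription idea can be simulated in memory to show the flow. This is a minimal Python sketch, not the Service Bus client API: the Topic, Subscription, and Message classes are hypothetical stand-ins, and the "SyncGroup" property name follows the post. Each device owns a named subscription filtered on its sync group; publishing copies a message into every matching subscription, where it waits until that device comes online and reads it.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    body: str
    properties: dict[str, str]


@dataclass
class Subscription:
    name: str
    sync_group: str
    pending: deque = field(default_factory=deque)

    def matches(self, message: Message) -> bool:
        # Similar in spirit to a filter such as SyncGroup = 'smith'.
        return message.properties.get("SyncGroup") == self.sync_group


class Topic:
    """In-memory stand-in for a Service Bus topic (illustration only)."""

    def __init__(self) -> None:
        self.subscriptions: dict[str, Subscription] = {}

    def subscribe(self, device: str, sync_group: str) -> Subscription:
        sub = Subscription(device, sync_group)
        self.subscriptions[device] = sub
        return sub

    def publish(self, message: Message) -> None:
        # Copy the message into every subscription whose filter matches;
        # it stays queued there while the device is offline.
        for sub in self.subscriptions.values():
            if sub.matches(message):
                sub.pending.append(message)

    def receive(self, device: str) -> list[Message]:
        sub = self.subscriptions[device]
        received = list(sub.pending)
        sub.pending.clear()
        return received
```

Note that the publishing device also receives its own action, so a real client would need to recognize and ignore, or idempotently re-apply, changes it originated.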

How would you solve the problem sketched? Comments are very welcomed!

• Jim O’Neil continued his series with Photo Mosaics Part 9: Caching Analysis on 1/5/2012:

When originally drafting the previous post in this series, I’d intended to include a short write-up comparing the performance and time-to-completion of a photo mosaic when using the default in-memory method versus Windows Azure Caching. As I got further and further into it though, I realized there’s somewhat of a spectrum of options here, and that cost, in addition to performance, is something to consider. So, at the last minute, I decided to focus a blog post on some of the tradeoffs and observations I made while trying out various options in my Azure Photo Mosaics application.

Methodology

While I’d stop short of calling my testing ‘scientific,’ I did make every attempt to carry out the tests in a consistent fashion and eliminate as many variables in the executions as possible. To abstract the type of caching (ranging from none to in-memory), I added a configuration parameter, named CachingMechanism, to the ImageProcessor Worker Role. The parameter takes one of four values, defined by the following enumeration in Tile.vb:

Public Enum CachingMechanism

None = 0

BlobStorage = 1

AppFabric = 2

InRole = 3

End Enum

The values are interpreted as follows:

- None: no caching used, every request for a tile image requires pulling the original image from blob storage and re-creating the tile in the requested size.

- BlobStorage: a ‘temporary’ blob container is created to store all tile images in the requested tile size. Once a tile has been generated, subsequent requests for that tile are drawn from the secondary container. The tile thumbnail is generated only once; however, each request for the thumbnail does still require a transaction to blob storage.

- AppFabric: Windows Azure Caching is used to store the tile thumbnails. Whether the local cache is used depends on the cache configuration within the app.config file.

- InRole: tile thumbnail images are stored in memory within each instance of the ImageProcessor Worker Role. This was the original implementation and is bounded by the amount of memory within the VM hosting the role.

To simplify the analysis, the ColorValue of each tile (a 32-bit integer representing the average color of that tile) is calculated just once and then always cached “in-role” within the collection of Tile objects owned by the ImageLibrary.

For each request, the same inputs were provided from the client application and are shown in the annotated screen shot to the right:

- Original image: Lighthouse.jpg from the Windows 7 sample images (size: 1024 x 768)

- Image library: 800 images pulled from Flickr’s ‘interestingness’ feed. Images were requested as square thumbnails (default 75 pixels square)

- Tile size: 16 x 16 (yielding output image of size 16384 x 12288)

- Original image divided into six (6) slices to be apportioned among the three ImageProcessor roles (small VMs); each slice is the same size, 1024 x 128.

These inputs were submitted four times each for the various CachingMechanism values, including twice for the AppFabric value (Windows Azure Caching), once leveraging local (VM) cache with a time-to-live value of six hours and once without using the local cache.

All image generations were completed sequentially, and each ImageProcessor Worker Role was rebooted prior to running the test series for a new CachingMechanism configuration. This approach was used to yield values for a cold-start (first execution) versus a steady-state configuration (fourth execution).

Performance Results

The existing code already records status (in Windows Azure tables) as each slice is completed, so I created two charts displaying that information. The first chart shows the time-to-completion of each slice for the “cold start” execution for each CachingMechanism configuration, and the second chart shows the “steady state” execution.

In each series, the same role VM is shown in the same relative position. For example, the first bar in each series represents the original image slice processed by the role identified by AzureImageProcessor_IN_0, the second by AzureImageProcessor_IN_1, and the third by AzureImageProcessor_IN_2. Since there were six slice requests, each role instance processes two slices of the image, so the fourth bar represents the second slice processed by AzureImageProcessor_IN_0, the fifth bar corresponds to the second slice processed by AzureImageProcessor_IN_1, and the sixth bar represents the second slice processed by AzureImageProcessor_IN_2.

There is, of course, additional processing in the application that is not accounted for by the ImageProcessor Worker Role. The splitting of the original image into slices, the dispatch of tasks to the various queues, and the stitching of the final photo mosaic are the most notable. Those activities are carried out in an identical fashion regardless of the CachingMechanism used, so to simplify the analysis their associated costs (both economic and performance) are largely factored out of the following discussion.

Costs

System at Rest

There are a number of costs for storage and compute that are consistent across the five different scenarios. The costs in the table below occur when the application is completely inactive.

Note, there are some optimizations that could be made:

- Three instances of ImageProcessor are not needed if the system is idle, so using some dynamic scaling techniques could lower the cost. That said, there is only one instance each of ClientInterface and JobController, and for this system to qualify for the Azure Compute SLA there would need to be a minimum of six instances, two of each type, bringing the compute cost up about another $87 per month.

- Although the costs to poll the queues are rather insignificant, one optimization technique is to use an exponential back-off algorithm versus polling every 10 seconds.
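An exponential back-off polling delay can be computed as in the Python sketch below. It is hypothetical and not part of the Azure Photo Mosaics code: the 10-second base matches the current polling interval, while the 300-second cap and the optional jitter are assumptions to tune. The delay doubles after each consecutive empty poll, and the caller resets the counter to zero as soon as a message arrives.

```python
import random


def next_poll_delay(empty_polls: int, base: float = 10.0, cap: float = 300.0,
                    jitter: bool = False) -> float:
    """Seconds to wait before the next queue poll.

    The delay doubles after every consecutive empty poll (base, 2*base,
    4*base, ...) up to `cap`.
    """
    exponent = min(empty_polls, 16)  # bound the exponent so the power stays small
    delay = min(cap, base * (2 ** exponent))
    if jitter:
        # Optional "full jitter" keeps many instances from polling in lockstep.
        delay = random.uniform(0, delay)
    return delay
```

In a worker loop, you would sleep for next_poll_delay(empty_polls), increment empty_polls when the queue is empty, and set it back to 0 after processing a message.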

Client Request Costs

Each request to create a photo mosaic brings in additional costs for storage and transactions. Some of the storage needs are very short term (queue messages) while others are more persistent, so the cost for one image conversion is a bit difficult to ascertain. To arrive at an average cost per request, I’d want to estimate usage on the system over a period of time, say a week or month, to determine the number of requests made, the average original image size, and the time for processing (and therefore storage retention). The chart below does list the more significant factors I would need to consider given an average image size and Windows Azure service configuration.

Note there are no bandwidth costs, since the compute and storage resources are collocated in the same Windows Azure data center (and there is never a charge for data ingress, for instance, submitting the original image for processing). The only bandwidth costs would be attributable to downloading the finished product.

I have also enabled storage analytics, so that’s an additional cost not included above, but since it’s tangential to the topic at hand, I factored that out of the discussion as well.

Image Library (Tile) Access Costs

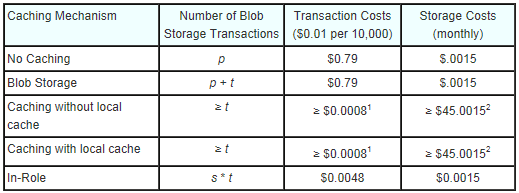

Now let’s take a deeper look at the costs for the various caching scenarios, which draws our focus to the storage of tile images and the associated transaction costs. The table below summarizes the requirements for our candidate Lighthouse image, where:

- p is the number of pixels in the original image (786,432),

- t is the number of image tiles in the library (800), and

- s is the number of slices into which the original image was partitioned (6).

1 Caching transaction costs cover the initial loading of the tile images from blob storage into the cache, which technically needs to occur only once regardless of the number of source images processed. Keep in mind though that cache expiration and eviction policies also apply, so images may need to be periodically reloaded into cache, but at nowhere near the rate when directly accessing blob storage.

2 Assumes 128MB cache, plus the minuscule amount of persistent blob container storage. While 128MB fulfills space needs, other resource constraints may necessitate a larger cache size (as discussed below).

Observations

1: Throttling is real

The storage requirements for the 800 Flickr images, each resized to 16 x 16, amount to 560KB. Even with the serialization overhead in Windows Azure Caching (empirically a factor of three in this case) there is plenty of space to store all of the sized tiles in a 128MB cache.

Consider that each original image slice (1024 x 128) yields 131,072 pixels, each of which will be replaced in the generated image with one of the 800 tiles in the cache. That’s 131,072 transactions to the cache for each slice, and a total of 786,432 (768K) transactions to the cache to generate just one photo mosaic.
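The arithmetic can be checked with a few lines of Python. The inputs are the Lighthouse parameters from this post; the 400,000-transactions-per-hour figure for a 128MB cache is the one the post uses in its scalability discussion. Ignoring the comparatively few writes that populate the cache, the hourly quota covers only three slices, which is consistent with three slices completing before the throttling exception appeared.

```python
# Inputs from the Lighthouse experiment described in this post.
SOURCE_WIDTH, SOURCE_HEIGHT = 1024, 768   # original image, pixels
SLICES = 6                                # slices handed to the ImageProcessor roles
TILE_SIZE = 16                            # each pixel becomes a 16 x 16 tile
HOURLY_LIMIT_128MB = 400_000              # cache transactions per hour, 128MB cache

slice_height = SOURCE_HEIGHT // SLICES                 # 128
reads_per_slice = SOURCE_WIDTH * slice_height          # one tile lookup per pixel
reads_per_mosaic = reads_per_slice * SLICES
output_size = (SOURCE_WIDTH * TILE_SIZE, SOURCE_HEIGHT * TILE_SIZE)

# Whole slices that fit in one hour's quota before throttling starts
# (ignoring the writes that initially populate the cache).
slices_before_throttling = HOURLY_LIMIT_128MB // reads_per_slice
```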

Now take a look at the hourly limits for each cache size, and you can probably imagine what happened when I initially tested with a 128MB cache without local cache enabled.

The first three slices generated fine, and on the other three slices (which were running concurrently on different ImageProcessor instances) I received the following exception:

ErrorCode<ERRCA0017>:SubStatus<ES0009>:There is a temporary failure. Please retry later. (The request failed, because you exceeded quota limits for this hour. If you experience this often, upgrade your subscription to a higher one). Additional Information : Throttling due to resource : Transactions.

In my last post, I mentioned this possibility and the need to code defensively, but of course I hadn’t taken my own advice! At this point, I had three options:

- reissue the request directly to the original storage location whenever the exception is caught (recall that a throttling exception can be identified by checking the DataCacheException SubStatus for the value DataCacheErrorSubStatus.QuotaExceeded [9]),

- wait until the transaction ‘odometer’ is reset the next hour (essentially bubbling the throttling up to the application level), or

- provision a bigger cache.
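The first option, falling back to the original storage when the cache is throttled, can be sketched as a cache-aside read in Python. This is a hypothetical illustration rather than the Windows Azure Caching API: QuotaExceededError stands in for a DataCacheException whose SubStatus is QuotaExceeded, and the three callables stand in for the cache get, cache put, and the blob load (including any resizing) used by the application.

```python
from typing import Callable, Optional


class QuotaExceededError(Exception):
    """Hypothetical stand-in for a throttled (QuotaExceeded) cache call."""


def get_tile(tile_id: str,
             cache_get: Callable[[str], Optional[bytes]],
             cache_put: Callable[[str, bytes], None],
             load_from_blob: Callable[[str], bytes]) -> bytes:
    """Cache-aside read that falls back to blob storage when throttled."""
    try:
        tile = cache_get(tile_id)
        if tile is not None:
            return tile
    except QuotaExceededError:
        # Hourly quota exhausted: go straight to the original storage.
        return load_from_blob(tile_id)
    tile = load_from_blob(tile_id)
    try:
        cache_put(tile_id, tile)
    except QuotaExceededError:
        pass  # Populating the cache is best effort; the tile is still returned.
    return tile
```

The trade-off is that every throttled read becomes a billable blob transaction with higher latency, so this keeps the job running but does not make the cache any cheaper.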

Well, given the goal of the experiment, the third option was what I went with – a 512MB cache, not for the space but for the concomitant transactions-per-hour allocation. That’s not free “in the real world” of course, and would result in a 66% monthly cost increase (from $45 to $75), and that’s just to process a single image! That observation alone should have you wondering whether using Windows Azure Caching in this way for this application is really viable.

Or should it??… The throttling limitation comes into play when the distributed cache itself is accessed, not if the local cache can fulfill the request. With the local cache option enabled and the current data set of 800 images of about 1.5MB total in size, each ImageProcessor role can service the requests intra-VM, from the local cache, with the result that no exceptions are logged even when using a 128MB cache. Each role instance does have to hit the distributed cache at least once in order to populate its local cache, but that’s only 800 transactions per instance, far below the throttling thresholds.

2: Local caching is pretty efficient

I was surprised at how local caching compares to the “in-role” mechanism in terms of performance; the raw values when utilizing local caching are almost always better (although perhaps not to a statistically significant level). While both are essentially in-memory caches within the same memory space, I would have expected a little overhead for the local cache, for deserialization if nothing else, when compared to direct access from within the application code.

The other bonus here of course is that hits against the local cache do not count toward the hourly transaction limit, so if you can get away with the additional staleness inherent in the local cache, it may enable you to leverage a lower tier of cache size and, therefore, save some bucks!

How much can I store in the local cache? There are no hard limits on the local cache size (other than the available memory on the VM). The size of the local cache is controlled by an objectCount property configurable in the app.config or web.config of the associated Windows Azure role. That property has a default of 10,000 objects, so you will need to do some math to determine how many objects you can cache locally within the memory available for the selected role size.

In my current application configuration, each ImageProcessor instance is a Small VM with 1.75GB of memory, and the space requirement for the serialized cache data is about 1.5MB, so there’s plenty of room to store the 800 tile images (and then some) without worrying about evictions due to space constraints.

3: Be cognizant of cache timeouts

The default time-to-live on a local cache is 300 seconds (five minutes). In my first attempts to analyze behavior, I was a bit puzzled by what seemed to be inconsistent performance. In running the series of tests that exercise the local cache feature, I had not been circumspect in the amount of time between executions, so in some cases the local cache had expired (resulting in a request back to the distributed cache) and in some cases the image tile was served directly from the VM’s memory. This all occurs transparently, of course, but you may want to experiment with the TTL property of your caches depending on the access patterns demonstrated by your applications.

Below is what I ultimately set it to for my testing: 21600 seconds = 6 hours.

<localCache isEnabled="true" ttlValue="21600" objectCount="10000"/>

Timeout policy indirectly affects throttling. A short ttlValue will tend to increase the number of transactions to the distributed cache (which count against your hourly transaction limit), so you will want to balance the need for local cache currency with the economic and programmatic complexities introduced by throttling limits.
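The effect of the TTL on distributed-cache transactions can be simulated with a small Python model. It is a simplified stand-in for the local cache, not its actual implementation: entries expire a fixed number of seconds after they are fetched, and the clock is passed in explicitly so the simulation is deterministic. Each read that falls through to the distributed cache is counted, because those are the reads that consume the hourly quota.

```python
from typing import Callable


class LocalCache:
    """Minimal TTL cache in front of a distributed cache (simulation only)."""

    def __init__(self, ttl_seconds: float, fetch_remote: Callable[[str], bytes]):
        self.ttl = ttl_seconds
        self.fetch_remote = fetch_remote
        self.entries: dict[str, tuple[float, bytes]] = {}
        self.remote_reads = 0  # reads that count against the hourly quota

    def get(self, key: str, now: float) -> bytes:
        entry = self.entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1]  # served from VM memory at no quota cost
        self.remote_reads += 1
        value = self.fetch_remote(key)
        self.entries[key] = (now, value)
        return value
```

In this model, requesting all 800 tiles every 10 minutes for an hour costs 800 distributed reads with a ttlValue of 21600 seconds, but 4,800 with the 300-second default, since every round finds the local entries expired.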

4: The second use of a role instance performs better

Recall that the role with ID 0 is responsible for both Slice 0 and Slice 3, ID 1 for Slices 1 and 4, and ID 2 for Slices 2 and 5. Looking at the graphs (see callout to the right), note that the second use of each role instance within a specific single execution almost always results in a shorter execution time. This is the case for the first run, where you might attribute the difference to a warm-up cost for each role instance (seeding the cache, for example), but it’s also found in the steady state run and also on scenarios where caching was not used and where the role processing should have been identical.

Unfortunately, I didn’t take the time to do any profiling of my Windows Azure Application while running these tests, but it may be something I revisit to solve the mystery.

5: Caching pits economics versus performance

Let’s face it, caching looks expensive; the entry level of 128MB cache is $45 per month. Furthermore, each cache level has a hard limit on number of transactions per hour, so you may find (as I did) that you need to upgrade cache sizes not because of storage needs, but to gain more transactional capacity (or bandwidth or connections, each of which may also be throttled). The graph below highlights the stair-step nature of the transaction allotments per cache size in contrast with the linear charges for blob transactions.

With blob storage, the rough throughput is about 60MB per second, and given even the largest tile blob in my example, one should conservatively get 1000 transactions per second before seeing any performance degradation (i.e., throttling). In the span of an hour, that’s about 3.6 million blob transactions – over four times as many as needed to generate a single mosaic of the Lighthouse image. While that’s more than any of the cache options would seem to support, four images an hour doesn’t provide any scale to speak of!

BUT, that’s only part of the story: local cache utilization can definitely turn the tables! For sake of discussion, assume that I've configured a local cache ttlValue of at least an hour. With the same parameters as I’ve used throughout this article, each ImageProcessor role instance will need to make only 800 requests per hour to the distributed cache. Those requests are to refresh the local cache from which all of the other 786K+ tile requests are served.

Without local caching, we couldn’t support the generation of even one mosaic image with a 128MB cache. With local caching and the same 128MB cache, we can sustain 400,000 / 800 = 500 ImageProcessor role ‘refreshes’ per hour. That essentially means (barring restarts, etc.) there can be 500 ImageProcessor instances in play without exceeding the cache transaction constraints. And by simply increasing the ttlValue and staggering the instance start times, you can eke out even more scalability (e.g., 1000 roles with a ttlValue of two hours, where 500 roles refresh their cache in the first hour, and the other 500 roles refresh in the second hour).
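The capacity arithmetic above can be written as a small Python helper. The function name is hypothetical, and it assumes each refresh reads every tile once and that instance start times are staggered evenly, so that only 1/ttl_hours of the instances refresh in any given hour.

```python
def max_instances(hourly_transaction_limit: int, tiles: int,
                  ttl_hours: float = 1.0) -> int:
    """ImageProcessor instances whose local-cache refreshes fit in the quota.

    Each refresh costs `tiles` distributed-cache reads; with a TTL of
    `ttl_hours` and staggered starts, each instance refreshes once per TTL.
    """
    refreshes_per_hour = hourly_transaction_limit // tiles
    return int(refreshes_per_hour * ttl_hours)


one_hour = max_instances(400_000, 800)               # 128MB cache, one-hour TTL
two_hours = max_instances(400_000, 800, ttl_hours=2)
```

These reproduce the 500 and 1000 instance figures given in the text.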

So, let’s see how that changes the scalability and economics. Below I’ve put together a chart that shows how much you might expect to spend monthly to provide a given throughput of images per hour, from 2 to 100. This assumes an average image size of 1024 x 768 and processing times roughly corresponding to the experiments run earlier: a per-image duration of 200 minutes when CachingMechanism = {0|1}, 75 minutes when CachingMechanism = 2 with no local cache configured, and 25 minutes when CachingMechanism = {2|3} with local cache configured.

The costs assume the minimum number of ImageProcessor Role instances required to meet the per-hour throughput and do not include the costs common across the scenarios (such as blob storage for the images, the cost of the JobController and ClientInterface roles, etc.). The costs do include blob storage transaction costs as well as cache costs.
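The "minimum number of ImageProcessor role instances" behind the chart can be approximated as ceil(images per hour × minutes per image / 60). This sketch assumes each per-image duration quoted above is single-instance work that divides evenly across instances. That simplification is specific to this sketch, and the dictionary labels are only descriptive.

```python
import math

# Per-image processing times (minutes) quoted above. Treating them as
# single-instance work that splits evenly across instances is an assumption.
MINUTES_PER_IMAGE = {
    "CachingMechanism 0|1": 200,
    "CachingMechanism 2, local cache off": 75,
    "CachingMechanism 2|3, local cache on": 25,
}


def min_instances(images_per_hour: int, minutes_per_image: float) -> int:
    """Smallest instance count whose combined minutes per hour cover the work."""
    return math.ceil(images_per_hour * minutes_per_image / 60)


if __name__ == "__main__":
    for label, minutes in MINUTES_PER_IMAGE.items():
        counts = [min_instances(n, minutes) for n in (2, 10, 100)]
        print(f"{label}: instances for 2/10/100 images per hour = {counts}")
```

Multiplying these counts by the hourly compute rate and adding the cache and blob transaction charges gives the shape of the monthly costs described here. The actual rates are not restated in this section.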

For the “Caching (Local Disabled)” scenario, the 2 images/hour goal can be met with a 512MB cache ($75), and the 10 images/hour goal can be met with a 4GB cache. Above 10 images/hour there is no viable option (because of the transaction limitations) other than provisioning additional caches and load balancing among them. For the “Caching (Local Enabled)” scenario with a ttlValue of one hour, a 128MB ($45) cache is more than enough to handle both the memory and transaction requirements for even more than 100 images/hour.

Clearly the two viable options here are Windows Azure Caching with local cache enabled or using the original in-role mechanism. Performance-wise that makes sense since they are both in-memory implementations, although Windows Azure Caching has a few more bells-and-whistles. The primary benefit of Windows Azure Caching is that the local cache can be refreshed (from the distributed cache) without incurring additional blob storage transactions and latency; the in-role mechanism requires re-initializing the “local cache” from the blob storage directly each time an instance of the ImageProcessor handles a new image slice. The number of those additional blob transactions (800) is so low though that it’s overshadowed by the compute costs. If there were a significantly larger number of images in the tile library, that could make a difference, but then we might end up having to bump up the cache or VM size, which would again bring the costs back up.

In the end, though, I’d probably give the edge to Windows Azure Caching for this application, since its in-memory implementation is more robust and likely more efficient than my home-grown version. It’s also backed by the distributed cache, so I’d be able to grow into a cache-expiration plan and additional scalability pretty much automatically. The biggest volatility factor here would be the threshold for staleness of the tile images: if they need to be refreshed more frequently than, say, hourly, the economics could be quite different, given the need to balance the cache’s high transaction requirements against the hourly limits set by the Windows Azure Caching service.

Final Words

Undoubtedly, there’s room for improvement in my analysis, so if you see something that looks amiss or doesn’t make sense, let me know. Windows Azure Caching is not a ‘one-size-fits-all’ solution, and your applications will certainly have requirements and data access patterns that may lead you to completely different conclusions. My hope is that by walking through the analysis for this specific application, you’ll be cognizant of what to consider when determining how (or if) you leverage Windows Azure Caching in your architecture.

As timing would have it, I was putting the finishing touches on this post when I saw that the Forecast: Cloudy column in the latest issue of MSDN Magazine has Windows Azure Caching Strategies as its topic. Thankfully, it’s not a pedantic deep dive like this post, and it explores some other considerations for caching implementations in the cloud. Check it out!

Bruno Terkaly (@brunoterkaly) started a Service Bus series with Part 1-Cloud Architecture Series-Durable Messages using Windows Azure (Cloud) Service Bus Queues–Establishing your service through the Portal:

Introduction

The purpose of this post is to explain and illustrate the use of Windows Azure Service Bus Queues.

This technology solves some very difficult problems. It allows developers to send durable messages among applications even when those applications sit behind network address translation (NAT) boundaries, are bound to frequently-changing, dynamically-assigned IP addresses, or both. Reaching endpoints behind these types of boundaries is extremely difficult. Windows Azure Service Bus Queues make this challenge very approachable.
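To show the queue semantics described above, here is a hypothetical in-memory model in Python. It is neither the Service Bus API nor durable. It only illustrates two properties: senders and receivers share nothing but the queue, and a received message stays locked until the receiver either completes it or abandons it. This mirrors the peek-lock receive mode that Service Bus queues offer. The class and method names are illustrative.

```python
from __future__ import annotations

import itertools
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    id: int
    body: str


class ToyQueue:
    """Hypothetical in-memory queue illustrating brokered, peek-lock delivery."""

    def __init__(self) -> None:
        self._ready: deque[Message] = deque()
        self._locked: dict[int, Message] = {}
        self._ids = itertools.count(1)

    def send(self, body: str) -> None:
        # The sender needs no address for, or connection to, any receiver.
        self._ready.append(Message(next(self._ids), body))

    def receive(self) -> Optional[Message]:
        # Lock the next message so other receivers cannot take it meanwhile.
        if not self._ready:
            return None
        msg = self._ready.popleft()
        self._locked[msg.id] = msg
        return msg

    def complete(self, msg: Message) -> None:
        # Processing succeeded: delete the message for good.
        del self._locked[msg.id]

    def abandon(self, msg: Message) -> None:
        # Processing failed: return the message to the front of the queue.
        self._ready.appendleft(self._locked.pop(msg.id))


if __name__ == "__main__":
    q = ToyQueue()
    q.send("new customer ALFKI")  # the receiver may not even be running yet
    m = q.receive()
    q.abandon(m)                  # e.g. a transient failure; the message reappears
    m = q.receive()
    q.complete(m)
    print(q.receive())            # nothing left to deliver
```

The real service adds durable storage, lock timeouts, and network access through the namespace endpoint. None of that is modeled here.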

There are many applications for this technology. We will use this pattern to implement the CQRS pattern in future posts.

Well-known pattern in computer science

These technologies reflect well-known patterns in computer science, such as the "Pub-Sub" or publish-subscribe pattern. This pattern allows senders of messages (Publishers) to send these messages to listeners (Subscribers) without knowing anything about the number or type of subscribers. Subscribers simply express an interest in receiving certain types of messages without knowing anything about the Publisher. It is a great example of loose coupling.

Publish-Subscribe Pattern: http://en.wikipedia.org/wiki/Publish/subscribe

Topics

Windows Azure also implements the concept of "Topics." In a topic-based system, messages are published to "topics" or named logical channels. Subscribers in a topic-based system will receive all messages published to the topics to which they subscribe, and all subscribers to a topic will receive the same messages. The publisher is responsible for defining the classes of messages to which subscribers can subscribe.
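The topic behavior described above can be sketched as a tiny in-memory broker. Every subscription on a topic gets its own copy of each published message, and the publisher never learns who is listening. The class below is hypothetical and illustrative only. It does not model Service Bus features such as subscription filters or durable storage.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class ToyTopicBroker:
    """Hypothetical in-memory topic broker, for illustration only."""

    def __init__(self) -> None:
        # topic name -> subscription name -> pending messages
        self._topics: Dict[str, Dict[str, Deque[str]]] = defaultdict(dict)

    def subscribe(self, topic: str, subscription: str) -> None:
        self._topics[topic].setdefault(subscription, deque())

    def publish(self, topic: str, message: str) -> None:
        # Fan out a copy to every subscription; the publisher sees none of them.
        for pending in self._topics[topic].values():
            pending.append(message)

    def receive(self, topic: str, subscription: str) -> Optional[str]:
        pending = self._topics[topic][subscription]
        return pending.popleft() if pending else None


if __name__ == "__main__":
    broker = ToyTopicBroker()
    broker.subscribe("orders", "billing")
    broker.subscribe("orders", "shipping")
    broker.publish("orders", "order 42")
    print(broker.receive("orders", "billing"), broker.receive("orders", "shipping"))
```

Contrast this with a queue, where each message is delivered to exactly one receiver.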

Getting Started at the portal

The next few screens will walk you through establishing a namespace at the portal.

Essential Download

To create a Service Bus Queue service running on Azure you’ll need to download the Azure SDK here:

Establishing a namespace for the service bus endpoint

Select “Service Bus, Access Control & Caching” as seen below.

Creating a new service bus endpoint

Click “New”

Providing a namespace and region, and selecting services

The end result

Summary of information from portal

Thirumalai Muniswamy described Implementing Azure AppFabric Service Bus - Part 2 in a 1/4/2012 post to his Dot Net Twitter blog:

In my last post, I explained what Azure App Fabric is, its types, and how Relayed Messaging works. In this post, we will walk through a real-world example of Windows Azure App Fabric Relayed Messaging.

The Windows Azure Training Kit provided by the Azure team has lots of great material for learning each Azure concept. I hope this example will also add valuable learning material for those who are starting to implement Azure App Fabric in their applications.

Implementation Requirement:

We are going to take the example of a Customer Maintenance entry screen that performs CRUD (Create, Read, Update and Delete) operations on customer data. The service will run on an on-premises server and be exposed publicly through the Service Bus. The client web application will run on the same or another local system, or in the cloud, and will consume the service to show the list of customers. The user can add a new customer, modify an existing customer by selecting it from the customer list, or delete a customer from the list directly. The functionality is simple; what we are looking at here is how to implement it with the Service Bus and how the customer data flows from the service to the cloud and on to the client.
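Before wiring anything to the Service Bus, it helps to pin down the operations the on-premises service exposes. The sketch below is a hypothetical, in-memory stand-in for that contract. The real service in this walkthrough is a .NET service backed by the Northwind database. The class and field names here are illustrative; only the 5-character CustomerID key and the CompanyName/ContactName columns come from Northwind's Customers table.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Customer:
    customer_id: str        # Northwind's CustomerID is a 5-character key, e.g. "ALFKI"
    company_name: str
    contact_name: str = ""


class CustomerService:
    """Hypothetical in-memory stand-in for the on-premises CRUD service."""

    def __init__(self) -> None:
        self._rows: Dict[str, Customer] = {}

    def create(self, customer: Customer) -> None:
        if customer.customer_id in self._rows:
            raise KeyError(f"duplicate CustomerID {customer.customer_id}")
        self._rows[customer.customer_id] = customer

    def read_all(self) -> List[Customer]:
        # The client's customer list, ordered by key.
        return sorted(self._rows.values(), key=lambda c: c.customer_id)

    def read(self, customer_id: str) -> Optional[Customer]:
        return self._rows.get(customer_id)

    def update(self, customer: Customer) -> None:
        if customer.customer_id not in self._rows:
            raise KeyError(f"unknown CustomerID {customer.customer_id}")
        self._rows[customer.customer_id] = customer

    def delete(self, customer_id: str) -> None:
        # Deleting a missing customer is treated as a no-op in this sketch.
        self._rows.pop(customer_id, None)
```

In the relayed setup, the web client calls these same operations through the Service Bus endpoint instead of directly.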

I am going to use the Northwind database configured in my on-premises SQL Server for data storage, so make sure you have the Northwind database before implementing this source code. (You can get the database script from here.)

Requirements

- You need an Azure subscription with App Fabric enabled.

- Visual Studio 2010 (for development and compilation)

- Install Windows Azure SDK for .NET (http://www.windowsazure.com/en-us/develop/net/)

Note: Starting with v1.6, the Service Bus and Caching libraries are included in the Windows Azure SDK, so there is no need to install a separate Azure App Fabric setup as we did before.

Creating a Service Bus Namespace

Please skip this step if you have already created a namespace or know how to create one.

Step 1: Open the Azure Management Portal at https://windows.azure.com and select the Service Bus, Access Control & Caching tab.

The portal opens the Service panel on the left-hand side.

Step 2: Select the Service Bus node and select the subscription under which you want to create the Service Bus namespace.

Step 3: Press the New menu button in the Service Namespace section. The portal opens the Create a New Service Namespace pop-up window.

Step 4: Make sure the Service Bus checkbox is selected and provide the input as described below.

- Enter a meaningful namespace in the Namespace text box and verify its availability with Check Availability.

Note:

- The namespace should not contain special characters such as underscore (_) or dot (.). It can contain only alphanumeric characters, and it must start with a letter.

- The Service Namespace name must be greater than 5 and less than 50 characters in length.

- Because the namespace must be unique across all Service Bus namespaces created around the world, it is a good idea to follow a basic naming standard at the organization level.

For example, create a unique namespace at the application or business level, formed as shown below:

<DomainExtension><DomainName><ApplicationName>

For example: ComDotNetTwitterSOP

The Service Bus endpoint URI would be:

http://ComDotNetTwitterSOP.servicebus.windows.net

Once the namespace is created at the application level, we can add other meaningful names hierarchically at the end of the Service Bus URI:

http://<DomainExtension><DomainName><ApplicationName>.servicebus.windows.net/<ServiceName>/<EnvironmentName>/<VersionNo>

- The Service Name will specify the name of the service

- The Environment Name will specify whether the service is deployed for Test, Staging or Prod

- The Version No will specify the version number of the release, such as V0100 (for Version 1.0), V0102 (for Version 1.2), V01021203 (for V 1.2.1203), and so on.

So the fully qualified URI for the Sales Invoice service would be:

http://ComDotNetTwitterSOP.servicebus.windows.net/SalesInvoice/Test/V0100

This is just one example of how a Service Bus endpoint could be structured; decide according to your organization's requirements.

- Select the Country / Region (it is good to select the region nearest to where most of the consumers reside, and to host the service near the selected Country / Region as well).

- Make sure the selected subscription is correct, or select the correct one from the drop-down list.

- Press the Create Namespace button.
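The naming guidance in Step 4 can be captured in a small helper. That guidance covers alphanumeric names starting with a letter, more than 5 and fewer than 50 characters, plus the hierarchical Service/Environment/Version URI convention. The helper encodes the rules exactly as the post states them, not an official specification. The post does not say how the build number in a version tag is padded, so this sketch appends it unchanged.

```python
import re
from typing import Optional

# Naming rules as the post states them (letters and digits only, starting with
# a letter, more than 5 and fewer than 50 characters); not an official spec.
_NAMESPACE_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9]*")


def is_valid_namespace(name: str) -> bool:
    return 5 < len(name) < 50 and _NAMESPACE_PATTERN.fullmatch(name) is not None


def version_tag(major: int, minor: int, build: Optional[int] = None) -> str:
    """V0100 for 1.0, V0102 for 1.2, V01021203 for 1.2.1203.

    Major and minor are zero-padded to two digits; the build number is
    appended unchanged (an assumption, since the post does not specify).
    """
    tag = f"V{major:02d}{minor:02d}"
    return tag if build is None else f"{tag}{build}"


def endpoint_uri(namespace: str, service: str, environment: str, version: str) -> str:
    """http://<namespace>.servicebus.windows.net/<Service>/<Environment>/<Version>"""
    if not is_valid_namespace(namespace):
        raise ValueError(f"invalid Service Bus namespace: {namespace!r}")
    return f"http://{namespace}.servicebus.windows.net/{service}/{environment}/{version}"


if __name__ == "__main__":
    print(endpoint_uri("ComDotNetTwitterSOP", "SalesInvoice", "Test", version_tag(1, 0)))
```

Run as a script, it prints the Sales Invoice test URI from the example above.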

The portal creates the namespace and lists it under the selected subscription. Initially the namespace status is Activating… for some time, and then it becomes Active.

Step 5: Select the newly created namespace to see its properties panel on the right-hand side. Press the View button under the Default Key heading in the properties.

The portal pops up a Default Key window with two values: Default Issuer and Default Key. These two values are needed to expose our service to the cloud, so press the Copy to Clipboard button and save them somewhere temporarily.

Note: When you press the Copy to Clipboard button, the system might pop up a Silverlight alert. Press Yes to allow copying to the clipboard.
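To see what the Default Issuer and Default Key are for, here is a sketch of how such an issuer/key pair was typically exchanged for an access token. The exchange went through the Access Control Service's WRAP v0.9 endpoint when calling the Service Bus over REST. The endpoint shape and the wrap_name, wrap_password and wrap_scope field names are assumptions based on the Service Bus REST conventions of this era; verify them before relying on them. This walkthrough's own service will pass the same two values through the .NET libraries instead. The sketch only builds the request and parses a reply, and it sends nothing over the network.

```python
from typing import Tuple
from urllib.parse import parse_qs, urlencode


def wrap_token_request(namespace: str, issuer: str, key: str) -> Tuple[str, bytes]:
    """Build (url, form body) for an ACS WRAP v0.9 token request (assumed format)."""
    url = f"https://{namespace}-sb.accesscontrol.windows.net/WRAPv0.9/"
    body = urlencode({
        "wrap_name": issuer,    # the portal's Default Issuer (usually "owner")
        "wrap_password": key,   # the portal's Default Key; keep it out of source control
        "wrap_scope": f"http://{namespace}.servicebus.windows.net/",
    }).encode("utf-8")
    return url, body


def authorization_header(token_response: str) -> str:
    """Turn ACS's form-encoded reply into a Service Bus Authorization header value."""
    token = parse_qs(token_response)["wrap_access_token"][0]  # parse_qs URL-decodes
    return f'WRAP access_token="{token}"'
```

Because the Default Key grants full control of the namespace, store it in configuration rather than hard-coding it.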

Keep in mind

- Only one on-premises service can listen on a particular Service Bus endpoint address (except with NetEventRelayBinding, which is designed to let multiple services listen).

- If you attempt to use the same address for multiple services, only the first service will succeed; the remaining services will fail.

- An endpoint address can share part of another endpoint's address, but it can't contain another endpoint's complete address.

For example, when an endpoint is defined as http://ComDotnetTwitterSOP.servicebus.windows.net/SalesInvoiceReport/Test/V0100, that complete address can't be used by another Service Bus endpoint. So defining an endpoint at http://ComDotnetTwitterSOP.servicebus.windows.net/SalesInvoiceReport/Test/V0100/01012012 is wrong, but the following is accepted:

http://ComDotnetTwitterSOP.servicebus.windows.net/SalesInvoiceReport/Test/V0102

Further steps will be published in the next post soon.
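The address rules under "Keep in mind" can be modeled with a small registry check. The check rejects an exact duplicate, and it rejects an address that begins with an already-registered address, compared path segment by path segment so that V0102 is not confused with V0100. This is a hypothetical helper, not Service Bus code. It checks only the direction the post describes, and treating path segments as case-sensitive is an assumption.

```python
from typing import List, Tuple
from urllib.parse import urlsplit


def _segments(uri: str) -> Tuple[str, ...]:
    """Split an address into (host, path segment, ...) for prefix comparison."""
    parts = urlsplit(uri)
    # Host names are case-insensitive; path segments are compared as-is here.
    return (parts.netloc.lower(),) + tuple(s for s in parts.path.split("/") if s)


class EndpointRegistry:
    """Hypothetical check mirroring the listed address rules."""

    def __init__(self) -> None:
        self._registered: List[Tuple[str, ...]] = []

    def register(self, uri: str) -> bool:
        """Return True if the address is accepted, False if it conflicts."""
        candidate = _segments(uri)
        for existing in self._registered:
            # An exact duplicate, or an address extending a registered one.
            if candidate[: len(existing)] == existing:
                return False
        self._registered.append(candidate)
        return True


if __name__ == "__main__":
    base = "http://ComDotnetTwitterSOP.servicebus.windows.net/SalesInvoiceReport/Test/"
    registry = EndpointRegistry()
    for suffix in ("V0100", "V0100", "V0100/01012012", "V0102"):
        print(suffix, registry.register(base + suffix))
```

With the addresses from the example, the first V0100 and V0102 are accepted. The duplicate V0100 and V0100/01012012 are rejected.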


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Amit Kumar Agrawal posted Remote Desktop with Windows Azure Roles on 12/30/2011 (missed when published):