Windows Azure and Cloud Computing Posts for 7/27/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

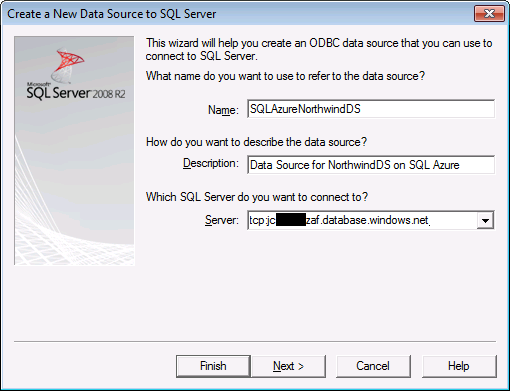

My Linking Microsoft Access 2010 Tables to a SQL Azure Database post of 7/27/2010 is an illustrated, detailed tutorial that explains how to create an Access 2010 front end with an ODBC data source that uses the SQL Server Native Client 10.0 driver to connect to a SQL Azure database (Northwind, what else?):

Ryan McMinn described how to link Microsoft Access 2010 tables to a SQL Azure database in his Access 2010 and SQL Azure post to the Microsoft Access blog of 6/7/2010. Ryan didn’t include screen captures in his narrative for creating the ODBC connection with the SQL Server Native Client 10.0 driver. I also encountered a problem that required a workaround to get links to a Northwind database working. This step-by-step tutorial adds screen captures and describes the workaround for primary key column name conflicts. It also describes an issue with adding subdatasheets to linked table datasheets.

Note: This tutorial assumes that you have a SQL Azure account with at least one populated database and have SQL Server Management Studio [Express] 2008 R2 available to edit primary key names of the linked tables.

1. Launch Access 2010, click the External Data tab and click the ODBC Database button to open the ODBC Select Data Source dialog:

2. Click the New button to open the Select a Type of Data Source dialog and select the System Data Source option:

3. Click Next to open the Select a Driver for Which You Want to Set up a Data Source dialog and select the SQL Server Native Client 10.0 driver:

4. Click Next and Finish to open the SQL Server Data Source Wizard’s first dialog, type a name and description for the data source, and type the full server name, including the tcp: prefix (tcp:servername.database.windows.net):

You’ll probably find it easier to copy the server name from SSMS’s Connect to Server dialog than to type it. …

The post continues with 14 more steps.
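For readers who prefer to see the same connection details in one place, here is a minimal sketch of a DSN-less ODBC connection string built from the values the first four steps collect. The tutorial itself creates a System DSN through the wizard, so this is an alternative illustration, not the post's method. The function name, the sample server, database and login values, the `user@servername` login form, and the `Encrypt=yes` setting are assumptions added for the example.

```python
def build_sql_azure_odbc_connection_string(server_name, database, user, password):
    """Assemble a DSN-less ODBC connection string for a SQL Azure server.

    server_name is the short server name; the full host name follows the
    tcp:servername.database.windows.net pattern quoted in step 4.
    """
    host = f"{server_name}.database.windows.net"
    parts = [
        ("Driver", "{SQL Server Native Client 10.0}"),  # driver chosen in step 3
        ("Server", f"tcp:{host}"),                      # tcp: prefix from step 4
        ("Database", database),
        ("Uid", f"{user}@{server_name}"),               # assumed login format
        ("Pwd", password),
        ("Encrypt", "yes"),                             # assumed setting
    ]
    return ";".join(f"{key}={value}" for key, value in parts) + ";"


# Hypothetical values, for illustration only.
connection_string = build_sql_azure_odbc_connection_string(
    "servername", "Northwind", "admin", "secret"
)
print(connection_string)
```

Keeping the pieces in a list of pairs makes it easy to compare each keyword with the corresponding wizard field.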

Wayne Walter Berry explains Improving Your I/O Performance for SQL Azure in this 7/27/2010 post to the SQL Azure blog:

As a DBA I have done a lot of work improving I/O performance for on-premise SQL Server installations. Usually it involves tweaking the storage system, balancing databases across RAID arrays, or increasing the number of files that tempdb uses; these are all common techniques for SQL Server DBAs. However, how do you improve your I/O performance when you are not in charge of the storage subsystem, as is the case with SQL Azure? You focus on how your queries use I/O and improve the queries. This blog post will talk about how to detect queries that use a large amount of I/O and how to increase the performance of your I/O on SQL Azure.

Detecting Excessive I/O Usage

With SQL Azure, just like SQL Server, I/O is a bottleneck in getting great query performance. Before you can make any changes, the first thing you have to be able to do is detect which queries are having trouble.

This Transact-SQL returns the top 25 slowest queries:

    SELECT TOP 25
        q.[text],
        (total_logical_reads/execution_count) AS avg_logical_reads,
        (total_logical_writes/execution_count) AS avg_logical_writes,
        (total_physical_reads/execution_count) AS avg_phys_reads,
        execution_count
    FROM sys.dm_exec_query_stats
    CROSS APPLY sys.dm_exec_sql_text(plan_handle) AS q
    ORDER BY (total_logical_reads + total_logical_writes) DESC

The output looks like this:

You can modify the ORDER BY clause in the statement above to get just the slowest queries for writes, or the slowest for reads.

Changing the Query

With SQL Azure, it is Microsoft’s job to maintain the data center and the servers, handle the storage, and optimize the performance of storage. There is nothing you can do to make the I/O faster from a storage subsystem perspective. However, if you can reduce the number of reads and writes to storage, you can increase the performance of your queries.

Reading Too Much Data

One way to overuse I/O is to read data that you are never going to use. A great example of this is:

    SELECT * FROM [Table]

This query reads all the columns and all the rows from the [Table]. Here is how you can improve that:

- Use a WHERE clause to reduce the number of rows to just the ones that you need for your scenario

- Explicitly name the columns you need from the tables, which hopefully will be less than all of them
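The two suggestions above can be sketched with Python's built-in sqlite3 module. SQLite stands in for SQL Azure here purely so the example runs anywhere; the Orders table, its columns, and the sample rows are hypothetical, and the character count is only a rough proxy for the data a query has to read and return, not a measurement of storage I/O.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Orders ("
    "OrderID INTEGER PRIMARY KEY, CustomerID TEXT, ShipCountry TEXT, Notes TEXT)"
)
# 1,000 hypothetical rows; every fourth order ships to the USA and each row
# carries a wide Notes column that most queries never need.
rows = [
    (i, f"C{i % 10}", "USA" if i % 4 == 0 else "UK", "x" * 500)
    for i in range(1, 1001)
]
conn.executemany("INSERT INTO Orders VALUES (?, ?, ?, ?)", rows)

# Every column of every row.
everything = conn.execute("SELECT * FROM Orders").fetchall()

# Only the named columns, and only the rows the scenario needs.
needed = conn.execute(
    "SELECT OrderID, CustomerID FROM Orders WHERE ShipCountry = ?", ("USA",)
).fetchall()


def payload_size(result):
    """Rough proxy for returned data: total characters across all values."""
    return sum(len(str(value)) for row in result for value in row)


wide_bytes = payload_size(everything)
narrow_bytes = payload_size(needed)
print(f"SELECT *: {len(everything)} rows, about {wide_bytes} characters")
print(f"Narrow query: {len(needed)} rows, about {narrow_bytes} characters")
```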

Create Covered Indexes

Once you have reduced the number of columns you are returning for each query, you can focus on creating non-clustered covered indexes for the queries that have the most read I/O. Covered indexes are indexes that contain all the columns in the query as part of the index; this includes the columns in the WHERE clause. Note that there might be several covered indexes involved in a single query, since the query might join many tables, each potentially with a covered index. You can determine what columns should go into the index by examining SQL Azure’s execution plan for the query. More information can be found in the MSDN article: SQL Server Optimization.

Just a note: non-clustered indexes (what you make when you create a covered index) reduce the performance of your writes. This is because the indexes need to be updated on inserts and updates. So you need to balance your covered index creation against the ratio of reads to writes in your database. Databases with a disproportionate amount of reads relative to writes gain more performance from covered indexes.
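To make the idea of a covered index concrete, here is a minimal sketch that again uses SQLite through Python's sqlite3 module as a stand-in, because its EXPLAIN QUERY PLAN output states explicitly when a query is answered from a covering index. SQL Azure reports the same information through its own execution plans, which look different. The table, index name, and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE Orders ("
    "OrderID INTEGER PRIMARY KEY, CustomerID TEXT, ShipCountry TEXT, Notes TEXT)"
)
conn.executemany(
    "INSERT INTO Orders VALUES (?, ?, ?, ?)",
    [(i, f"C{i % 10}", "USA" if i % 4 == 0 else "UK", "x" * 500)
     for i in range(1, 1001)],
)

query = "SELECT CustomerID FROM Orders WHERE ShipCountry = 'USA'"


def plan(sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


plan_before = plan(query)

# The index holds both the WHERE column and the selected column, so the
# query can be answered from the index without touching the table rows.
conn.execute(
    "CREATE INDEX IX_Orders_ShipCountry_CustomerID "
    "ON Orders (ShipCountry, CustomerID)"
)
plan_after = plan(query)

print("Before:", plan_before)
print("After: ", plan_after)
```

The trade-off described above still applies: every insert or update to Orders now also has to maintain the extra index.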

Summary

I have barely touched on the ways to reduce your I/O usage and increase your query performance by modifying your queries. As a rule of thumb, the query optimization techniques that you find on MSDN for on-premise SQL Server installations should work for SQL Azure. The point I am trying to make is that if your query I/O usage is high, focus on optimizing your queries; Microsoft is doing a great job of optimizing the storage subsystem behind SQL Azure.

The SQL Server Team posted Leading Research Firm Gartner, Inc. Publishes Two Important Notes on Microsoft PowerPivot on 7/27/2010:

If you haven’t already tried out PowerPivot, or if you need a little help educating your IT department on SQL Server 2008 R2, Office 2010 and SharePoint 2010, check out these two reports from leading research firm, Gartner, Inc. These important notes highlight the value of PowerPivot and how IT should prepare for and position PowerPivot. They also offer best practices based on real-world customer scenarios and feedback for building business intelligence (BI) and performance management architecture.

Read the full Gartner reports here:

BI is a top priority for our customers who are looking for solutions to bring better business insights to employees making decisions every day. PowerPivot introduces Microsoft’s unique ‘managed self-service’ approach that gives companies and IT departments the ability to enable and empower their business users to create reports and conduct their own analysis while still maintaining all the insight and oversight of IT. It’s this focus on users that differentiates Microsoft BI. Microsoft has long been tracking on a vision of bringing BI to more users and PowerPivot is another step in this direction to making BI truly pervasive.

Another recent note by Gartner’s John Hagerty (formerly AMR) titled: “Microsoft SQL Server PowerPivot for Excel: Unleashing the Business User” discusses the critical need for companies to embrace the business user, enabling them to get important work done and freeing up IT for more strategic projects.

For more information, see our previous posts here and here. For a free trial, visit http://powerpivot.com/.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Chris Kanaracus reported “Revenues are projected to grow from $13.1 billion in 2009 to more than $40 billion in 2014” in his IDC: SaaS momentum skyrocketing post of 7/27/2010 to InfoWorld’s Cloud Computing blog:

Interest in the SaaS (software as a service) delivery model is growing to the point that by 2012, almost 85 percent of new vendors will be focused on SaaS services, according to new research from analyst firm IDC. [URL added.]

Also by 2012, some two-thirds of new offerings from established vendors will be sold as SaaS, IDC said. …

SaaS revenue numbers will jump up accordingly in the next few years, rising from $13.1 billion in 2009 to $40.5 billion by 2014, according to the analyst firm.
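As a quick check on what those two figures imply, the following sketch computes the compound annual growth rate between IDC's 2009 and 2014 numbers. The revenue values come from the article; the function and variable names are illustrative, and the rate is derived here rather than quoted from IDC.

```python
def compound_annual_growth_rate(start_value, end_value, years):
    """Constant yearly growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


saas_revenue_2009 = 13.1  # billions of US dollars, per IDC
saas_revenue_2014 = 40.5  # billions of US dollars, per IDC

implied_cagr = compound_annual_growth_rate(
    saas_revenue_2009, saas_revenue_2014, 2014 - 2009
)
print(f"Implied compound annual growth rate: {implied_cagr:.1%}")
```

The projection works out to growth of roughly 25 percent per year, sustained for five years.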

License revenues for traditional on-premises applications will drop roughly $7 billion this year and are likely in permanent decline, since SaaS is generally sold via subscription, the report adds.

IDC's estimate includes applications, application development, deployment and infrastructure software delivered in SaaS form. Over the next several years, the latter two categories will gain more market share, according to IDC.

The Americas accounted for about 74 percent of the overall SaaS market in 2009, but by 2014 their share will drop to 54 percent. Europe, the Middle East, and Africa will account for 34 percent; and Asia-Pacific about 12 percent, IDC said.

Companies are apparently turning to SaaS for faster deployments, the lack of a need to purchase and maintain hardware, and easier upgrades.

But there are certain pitfalls to avoid as well, analyst firm Altimeter Group warned in its SaaS "bill of rights" released last year.

For one, customers should make sure they own and have unfettered access to their data, the firm said.

Unforeseen additional costs regarding data storage present another danger, that report said. "SaaS clients often find out after the fact that the storage allocations do not meet actual usage requirements. Once hooked into the product, ongoing storage costs could prove to be the largest expense item."

Other Altimeter recommendations, such as customer indemnification from intellectual-property suits filed against their vendor, and access to multiple tiers of support, are carryovers from the world of on-premises software.

Chris Kanaracus covers enterprise software and general technology breaking news for The IDG News Service.

Alex Williams asserted SaaS Market Grows Spectacularly to the Detriment of the IT Kings in this 7/26/2010 post to the ReadWriteCloud blog:

Two reports issued today by Gartner and IDC show a SaaS market exhibiting double digit growth across the enterprise and in the overall market.

This is a SaaS market dominated by vendors that sell services more than on-premise licenses. The growth is proof that the IT kings of the enterprise face the greatest potential disruption as traditional licensing models are replaced by subscription services.

Gartner is forecasting the enterprise application software market to surpass $8.5 billion in 2010. That is up 14.1% compared to 2009 when revenues hit $7.5 billion.

IDC is reporting that the SaaS market had worldwide revenues of $13.1 billion in 2009. The research firm estimates these revenues will reach $40.5 billion by 2014.

Enterprise

Enterprise SaaS services represent 10% of the overall enterprise software market. The SaaS market's share will rise to 16% by 2014.

Gartner reported that the SaaS market had some attrition in 2009 but revenues were generally up. Continued growth will be fueled by the attention on cloud computing.

What we see are markets with a lot of activity and others that are still uncertain of SaaS and its uses in the cloud. Gartner points out that security is not as much of an issue as it used to be, and that's reflected in the increasing use of collaboration technologies. But there are places in the enterprise where the cloud is a concern more for its data portability issues than anything else. In resource planning, for instance, companies have deeper concerns about using a SaaS provider.

Overall Market

IDC's report reflects how quickly the market has moved from software delivered on a compact disc.

For instance, IDC estimates that by 2012, about 85% of new software to the market will be delivered as a service. Revenues from SaaS services will account for nearly 26% of net new growth in the software market in 2014. In 2010, IDC predicts a $7 billion drop in worldwide license revenues.

What this all means is that there will be a significant shift for traditional enterprise vendors. The last 20 years have seen the rise of the IT kings like Oracle, Microsoft and a host of others. They now face a challenge to their crowns.

And that is just in one year.

Thomas Erl and his co-authors posted an excerpt from their SOA with .NET and Windows Azure book in a Cloud Services with Windows Azure - Part 1 post of 7/27/2010:

For a complete list of the co-authors and contributors, see the end of the article.

Microsoft's Software-plus-Services strategy represents a view of the world where the growing feature-set of devices and the increasing ubiquity of the Web are combined to deliver more compelling solutions. Software-plus-Services represents an evolutionary step that is based on existing best practices in IT and extends the application potential of core service-orientation design principles.

Microsoft's efforts to embrace the Software-plus-Services vision are framed by three core goals:

- User experiences should span beyond a single device

- Solution architectures should be able to intelligently leverage and integrate on-premise IT assets with cloud assets

- Tightly coupled systems should give way to federations of cooperating systems and loosely coupled compositions

The Windows Azure platform represents one of the major components of the Software-plus-Services strategy, as Microsoft's cloud computing operating environment, designed from the outset to holistically manage pools of computation, storage and networking; all encapsulated by one or more services.

Cloud Computing 101

Just like service-oriented computing, cloud computing is a term that represents many diverse perspectives and technologies. In this book, our focus is on cloud computing in relation to SOA and Windows Azure.

Cloud computing enables the delivery of scalable and available capabilities by leveraging dynamic and on-demand infrastructure. By leveraging these modern service technology advances and various pervasive Internet technologies, the "cloud" represents an abstraction of services and resources, such that the underlying complexities of the technical implementations are encapsulated and transparent from users and consumer programs interacting with the cloud.

At the most fundamental level, cloud computing impacts two aspects of how people interact with technologies today:

- How services are consumed

- How services are delivered

Although cloud computing was originally, and still often is, associated with Web-based applications that can be accessed by end-users via various devices, it is also very much about applications and services themselves being consumers of cloud-based services. This fundamental change is a result of the transformation brought about by the adoption of SOA and Web-based industry standards, allowing for service-oriented and Web-based resources to become universally accessible on the Internet as on-demand services.

One example has been an approach whereby programmatic access to popular functions on Web properties is provided by simplifying efforts at integrating public-facing services and resource-based interactions, often via RESTful interfaces. This was also termed "Web-oriented architecture" or "WOA," and was considered a subset of SOA. Architectural views such as this assisted in establishing the Web-as-a-platform concept, and helped shed light on the increasing inter-connected potential of the Web as a massive collection (or cloud) of ready-to-use and always-available capabilities.

This view can fundamentally change the way services are designed and constructed, as we reuse not only someone else's code and data, but also their infrastructure resources, and leverage them as part of our own service implementations. We do not need to understand the inner workings and technical details of these services; Service Abstraction (696), as a principle, is applied to its fullest extent by hiding implementation details behind clouds.

SOA Principles and Patterns

There are several SOA design patterns that are closely related to common cloud computing implementations, such as Decoupled Contract [735], Redundant Implementation [766], State Repository [785], and Stateful Services [786]. In this and subsequent chapters, these and other patterns will be explored as they apply specifically to the Windows Azure cloud platform.

With regard to service delivery, we are focused on the actual design, development, and implementation of cloud-based services. Let's begin by establishing high-level characteristics that a cloud computing environment can include:

- Generally accessible

- Always available and highly reliable

- Elastic and scalable

- Abstract and modular resources

- Service-oriented

- Self-service management and simplified provisioning

Fundamental topics regarding service delivery pertain to the cloud deployment model used to provide the hosting environment and the service delivery model that represents the functional nature of a given cloud-based service. The next two sections explore these two types of models.

Cloud Deployment Models

There are three primary cloud deployment models. Each can exhibit the previously listed characteristics; their differences lie primarily in the scope and access of published cloud services, as they are made available to service consumers.

Let's briefly discuss these deployment models individually.

Public Cloud

Also known as external cloud or multi-tenant cloud, this model essentially represents a cloud environment that is openly accessible. It generally provides an IT infrastructure in a third-party physical data center that can be utilized to deliver services without having to be concerned with the underlying technical complexities.

Essential characteristics of a public cloud typically include:

- Homogeneous infrastructure

- Common policies

- Shared resources and multi-tenant

- Leased or rented infrastructure; operational expenditure cost model

- Economies of scale and elastic scalability

Note that public clouds can host individual services or collections of services, allow for the deployment of service compositions, and even entire service inventories.

Private Cloud

Also referred to as internal cloud or on-premise cloud, a private cloud intentionally limits access to its resources to service consumers that belong to the same organization that owns the cloud. In other words, the infrastructure is managed and operated for one organization only, primarily to maintain a consistent level of control over security, privacy, and governance.

Essential characteristics of a private cloud typically include:

- Heterogeneous infrastructure

- Customized and tailored policies

- Dedicated resources

- In-house infrastructure (capital expenditure cost model)

- End-to-end control

Community Cloud

This deployment model typically refers to special-purpose cloud computing environments shared and managed by a number of related organizations participating in a common domain or vertical market.

Other Deployment Models

There are variations of the previously discussed deployment models that are also worth noting. The hybrid cloud, for example, refers to a model comprised of both private and public cloud environments. The dedicated cloud (also known as the hosted cloud or virtual private cloud) represents cloud computing environments hosted and managed off-premise or in public cloud environments, but dedicated resources are provisioned solely for an organization's private use.

The Intercloud (Cloud of Clouds)

The intercloud is not as much a deployment model as it is a concept based on the aggregation of deployed clouds (Figure 1). Just like the Internet, which is a network of networks, the intercloud refers to an inter-connected global cloud of clouds. Also like the World Wide Web, the intercloud represents a massive collection of services that organizations can explore and consume.

Figure 1: Examples of how vendors establish a commercial intercloud

From a services consumption perspective, we can look at the intercloud as an on-demand SOA environment where useful services managed by other organizations can be leveraged and composed. In other words, services that are outside of an organization's own boundaries and operated and managed by others can become a part of the aggregate portfolio of services of those same organizations.

Deployment Models and Windows Azure

Windows Azure exists in a public cloud. Windows Azure itself is not made available as a packaged software product for organizations to deploy into their own IT enterprises. However, Windows Azure-related features and extensions exist in Microsoft's on-premise software products, and are collectively part of Microsoft's private cloud strategy. It is important to understand that even though the software infrastructure that runs Microsoft's public cloud differs from the one that runs private clouds, layers that matter to end-user organizations, such as management, security, integration, data, and application, are increasingly consistent across private and public cloud environments.

Service Delivery Models

Many different types of services can be delivered in the various cloud deployment environments. Essentially, any IT resource or function can eventually be made available as a service. Although cloud-based ecosystems allow for a wide range of service delivery models, three have become most prominent:

Infrastructure-as-a-Service (IaaS)

This service delivery model represents a modern form of utility computing and outsourced managed hosting. IaaS environments manage and provision fundamental computing resources (networking, storage, virtualized servers, etc.). This allows consumers to deploy and manage assets on leased or rented server instances, while the service providers own and govern the underlying infrastructure.

Platform-as-a-Service (PaaS)

The PaaS model refers to an environment that provisions application platform resources to enable direct deployment of application-level assets (code, data, configurations, policies, etc.). This type of service generally operates at a higher abstraction level so that users manage and control the assets they deploy into these environments. With this arrangement, service providers maintain and govern the application environments, server instances, as well as the underlying infrastructure.

Software-as-a-Service (SaaS)

Hosted software applications or multi-tenant application services that end-users consume directly correspond to the SaaS delivery model. Consumers typically only have control over how they use the cloud-based service, while service providers maintain and govern the software, data, and underlying infrastructure.

Other Delivery Models

Cloud computing is not limited to the aforementioned delivery models. Security, governance, business process management, integration, complex event processing, information and data repository processing, and collaborative processes can all be exposed as services and consumed and utilized to create other services.

Note: Cloud deployment models and service delivery models are covered in more detail in the upcoming book SOA & Cloud Computing as part of the Prentice Hall Service-Oriented Computing Series from Thomas Erl. This book will also introduce several new design patterns related to cloud-based service, composition, and platform design.

IaaS vs. PaaS

In the context of SOA and developing cloud-based services with Windows Azure, we will focus primarily on IaaS and PaaS delivery models in this chapter. Figure 2 illustrates a helpful comparison that contrasts some primary differences. Basically, IaaS represents a separate environment to host the same assets that were traditionally hosted on-premise, whereas PaaS represents environments that can be leveraged to build and host next-generation service-oriented solutions.

Figure 2: Common Differentiations Between Delivery Models

We interact with PaaS at a higher abstraction level than with IaaS. This means we manage less of the infrastructure and assume simplified administration responsibilities. But at the same time, we have less control over this type of environment.

IaaS provides a similar infrastructure to traditional on-premise environments, but we may need to assume the responsibility to re-architect an application in order to effectively leverage platform service clouds. In the end, PaaS will generally achieve a higher level of scalability and reliability for hosted services.
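The division of responsibility that the three delivery models describe can be sketched as a small lookup table. The layer names are taken from the descriptions earlier in this excerpt. Treating the application environment and server instances as consumer-managed under IaaS is one reading of "deploy and manage assets on leased or rented server instances", not something the excerpt states outright, and all identifiers are illustrative.

```python
# Layers ordered from what the consumer touches down to the hardware.
LAYERS = [
    "usage of the service",
    "application-level assets (code, data, configurations, policies)",
    "application environment",
    "server instances",
    "underlying infrastructure",
]

# Topmost layer that the service provider maintains and governs.
# None means the organization manages every layer itself.
FIRST_PROVIDER_LAYER = {
    "on-premise": None,
    "IaaS": "underlying infrastructure",  # assumed boundary, see lead-in
    "PaaS": "application environment",
    "SaaS": "application-level assets (code, data, configurations, policies)",
}


def split_responsibilities(model):
    """Return (consumer_managed, provider_managed) layer lists for a model."""
    boundary = FIRST_PROVIDER_LAYER[model]
    if boundary is None:
        return list(LAYERS), []
    index = LAYERS.index(boundary)
    return LAYERS[:index], LAYERS[index:]


for model in FIRST_PROVIDER_LAYER:
    consumer, provider = split_responsibilities(model)
    print(f"{model}: consumer manages {len(consumer)} layers, "
          f"provider manages {len(provider)}")
```

Read this way, moving from IaaS to PaaS shifts layers to the provider, which is the "less to manage, less control" trade-off described above.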

An on-premise infrastructure is like having your own car. You have complete control over when and where you want to drive it, but you are also responsible for its operation and maintenance. IaaS is like using a car rental service. You still have control over when and where you want to go, but you don't need to be concerned with the vehicle's maintenance. PaaS is more comparable to public transportation. It is easier to use as you don't need to know how to operate it and it costs less. However, you don't have control over its operation, schedule, or routes.

Summary

- Cloud computing enables the delivery of scalable and available capabilities by leveraging dynamic and on-demand infrastructure.

- There are three common types of cloud deployment models: public cloud, private cloud, and community cloud.

- There are three common types of service delivery models: IaaS, PaaS, and SaaS.

Authors

- David Chou is a technical architect at Microsoft and is based in Los Angeles. His focus is on collaborating with enterprises and organizations in such areas as cloud computing, SOA, Web, distributed systems, and security.

- John deVadoss leads the Patterns & Practices team at Microsoft and is based in Redmond, WA.

- Thomas Erl is the world's top-selling SOA author, series editor of the Prentice Hall Service-Oriented Computing Series from Thomas Erl (www.soabooks.com), and editor of the SOA Magazine (www.soamag.com).

- Nitin Gandhi is an enterprise architect and an independent software consultant, based in Vancouver, BC.

- Hanu Kommalapati is a Principal Platform Strategy Advisor for a Microsoft Developer and Platform Evangelism team based in North America.

- Brian Loesgen is a Principal SOA Architect with Microsoft, based in San Diego. His extensive experience includes building sophisticated enterprise, ESB and SOA solutions.

- Christoph Schittko is an architect for Microsoft, based in Texas. His focus is to work with customers to build innovative solutions that combine software + services for cutting edge user experiences and the leveraging of service-oriented architecture (SOA) solutions.

- Herbjörn Wilhelmsen is a consultant at Forefront Consulting Group, based in Stockholm, Sweden. His main areas of focus are Service-Oriented Architecture, Cloud Computing and Business Architecture.

- Mickey Williams leads the Technology Platform Group at Neudesic, based in Laguna Hills,

Contributors

- Scott Golightly is currently an Enterprise Solution Strategist with Advaiya, Inc; he is also a Microsoft Regional Director with more than 15 years of experience helping clients to create solutions to business problems with various technologies.

- Darryl Hogan is an architect with more than 15 years experience in the IT industry. Darryl has gained significant practical experience during his career as a consultant, technical evangelist and architect.

- As a Senior Technical Product Manager at Microsoft, Kris works with customers, partners, and industry analysts to ensure the next generation of Microsoft technology meets customers' requirements for building distributed, service-oriented solutions.

- Jeff King has been working with the Windows Azure platform since its first announcement at PDC 2008 and works with Windows Azure early adopter customers in the Windows Azure TAP

- Scott Seely is co-founder of Tech in the Middle, www.techinthemiddle.com, and president of Friseton, LLC,

<Return to section navigation list>

Windows Azure Infrastructure

Lori MacVittie (@lmacvittie) asserted Bottles, birds, and packets: how the message is exchanged is less important than what the message is as long as it gets there as a preface to her The World Doesn’t Care About APIs post of 7/27/2010 to the F5 DevCentral blog:

I heard it said the other day, regarding the OpenStack announcement, that “the world does not care about APIs.”

Unpossible! How could the world not care about APIs? After all, it is APIs that make the Web (2.0) go around. It is APIs that drive the automation of infrastructure from static toward dynamic. It is APIs that drive self-service and thin-provisioning of compute and storage in the cloud. It is APIs that make cross-environment integration of SaaS possible. In general, without APIs we’d be very unconnected, un-integrated, un-collaborative, and in many cases, uninformed.

Now, it could be said that the world doesn’t care about APIs until they’re highly adopted, but unlike the chicken and the egg question (which may very well have been answered, in case you weren’t paying attention), it is still questionable whether the success of sites like Facebook and Twitter and the continued growth of SaaS darlings like Salesforce.com are dependent upon exactly that: their API.

The API is the new CLI in the network. The API is the web’s version of EAI (Enterprise Application Integration), without which we wouldn’t have interesting interactions between our favorite sites and applications. The API is the cloud’s version of the ATM (Automated Teller Machine) through which services are provisioned with just a few keystrokes and a valid credit card. The API is the means by which interoperability of cloud computing will be enabled because to do otherwise is to create the mother-of-all hub-and-spoke integration points. As Jen Harvey (co-founder of Voxilate, developer of voice-related mobile applications) pointed out, an API makes it possible to develop a user-interface that essentially obscures the underlying implementation. It’s the user-interface the users care about, and if you don’t have to change it as your application takes advantage of different clouds or services or technologies, you ensure that productivity and user-adoption – two frequently cited negative impacts of changes to applications in any organization – are not impacted at all. It’s a game-changer, to be sure.

So the API is the world in technology today, how could we not care about it?

WE DO. WE JUST CARE MORE ABOUT THE MODEL

Maybe the point is that we shouldn’t because the API today is just a URI and URIs are nearly interchangeable.

Face it, if interoperability between anything were simply about the API then we’d have already solved this puppy and put it to sleep. Permanently. But it’s not about the API per se, it is, as William Vambenepe is wont to say, “it’s about model, stupid”.

Unfortunately, when most people stand up and cheer “the API” what they’re really cheering is “the model.” They aren’t making the distinction between the interface and the data (or meta-data) exchanged. It’s what's inside the message that enables interoperability because it is through the message, the model, that we are able to exchange the information necessary to do whatever it is the API call is supposed to do. Without a meaningful, shared model the API is really not all that important.

The API is how, not what, and unfortunately even if everyone agreed on how, we’d still have to worry about what and that, as anyone who has ever worked with EAI systems can tell you, is the really, really, super hard part of integration. And it is integration that we’re really looking for when we talk about cloud and interoperability or portability or mobility, because what we want is to be able to share data (configuration, architectures, virtual machines, hypervisors, applications) across multiple programmatic systems in a meaningful way.

COMMODITIZATION REALLY MEANS NORMALIZATION

Here’s the rub: having an API is important, but the actual API itself is not nearly as important as what it’s used to exchange.

APIs, at least those on the web and taking advantage of HTTP, are little more than URIs. Doesn’t matter if it’s REST or SOAP, the end-point is still just a URI. The URI is often somewhat self-descriptive and in the case of true REST (which doesn’t really exist) it would be nearly completely self-documenting but it’s still just a URI. That means it is nearly a trivial exercise to map “/start/myresource” to “/myresource/start”. But when the data, the model, is expressed as the payload of that API call, then things get … ugly. Is one using JSON? Or is it XML? Is that XML OVF? Schemaless? Bob’s Homegrown Format? Does it use common descriptors? Is a load balancer in cloud A described as an application delivery controller in cloud B? Is the description of a filter required in cloud A using iptables semantics or some obscure format the developer made up on the fly because it made sense to her?

Mapping the data, the model, isn’t a trivial exercise. In fact without a common semantic model it would require not the traditional one-chicken sacrifice but probably a whole flock in order to get it working, and you’d essentially be locked-in for all the same reasons you end up locked-in today: it costs too much and takes too much effort to change. When pundits and experts talk about commoditization of cloud computing, and they do often, it’s not an attempt to minimize the importance of the model, of the infrastructure, but rather it’s a necessary step toward providing services in a consistent manner across implementations; across clouds. By defining core cloud computing services in a consistent manner and describing them in similar terms, advanced services can then be added atop those services in a like manner without impacting negatively the ability to migrate between implementations. If the underlying model is consistent, commoditized if you will, this process becomes much easier for everyone involved.
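The contrast drawn above between remapping a URI and remapping a model can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two payload formats, the field names, and the `CLOUD_B_ALGORITHMS` table are hypothetical and do not describe any real cloud provider's API.

```python
import json
import xml.etree.ElementTree as ET


def remap_uri(path: str) -> str:
    """Turn a verb-first path (/start/myresource) into a resource-first one."""
    verb, resource = path.strip("/").split("/", 1)
    return f"/{resource}/{verb}"


# Hypothetical payloads describing the same thing in two different models.
CLOUD_A_JSON = '{"load_balancer": {"name": "web", "algorithm": "round_robin"}}'
CLOUD_B_XML = (
    '<applicationDeliveryController id="web">'
    "<scheduling>rr</scheduling>"
    "</applicationDeliveryController>"
)

# Each vocabulary difference needs its own hand-written translation entry.
CLOUD_B_ALGORITHMS = {"rr": "round_robin", "lc": "least_connections"}


def normalize_cloud_a(payload: str) -> dict:
    """Map the hypothetical cloud A JSON model onto a shared model."""
    lb = json.loads(payload)["load_balancer"]
    return {"kind": "load_balancer", "name": lb["name"], "algorithm": lb["algorithm"]}


def normalize_cloud_b(payload: str) -> dict:
    """Map the hypothetical cloud B XML model onto the same shared model."""
    root = ET.fromstring(payload)
    return {
        "kind": "load_balancer",
        "name": root.get("id"),
        "algorithm": CLOUD_B_ALGORITHMS[root.findtext("scheduling")],
    }
```

The URI remapping is one line of string handling. The model mapping needs a separate parser, a separate field mapping, and a separate vocabulary table for every provider, which is the effort a shared semantic model would remove.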

Consider HTTP headers. There is a common set, a standard set of headers used to describe core functions and capabilities. They have a common model and use consistent semantics through which the name-value pairs are described. Then there’s custom headers; headers that follow the same model but which are peculiar to the service being invoked. In a cloud model these are the differentiated value-added cloud services (VACS) Randy Bias mentions in his post regarding the announcement of OpenStack and the ensuing cries of “it will be the standard! it will save the world!”. The most important aspect of custom HTTP headers that we must keep in any cloud API or stack is that if they aren’t supported, they do not negatively impact the ability to invoke the service. They are ignored by applications which do not support them. Only through commoditization and a common model can this come to fruition.
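The "ignore what you don't support" behavior described for custom HTTP headers might look like the following minimal Python sketch. The header names `X-Cloud-A-Tier` and `X-Cloud-B-Burst`, the `SUPPORTED_HEADERS` set, and both functions are assumptions made up for illustration, not part of any real service.

```python
# Headers this hypothetical service understands: a common core plus one
# provider-specific extension. Names are stored in lower case.
SUPPORTED_HEADERS = {"content-type", "accept", "x-cloud-a-tier"}


def split_headers(headers: dict) -> tuple:
    """Return (understood headers, names of headers that were ignored)."""
    understood, ignored = {}, []
    for name, value in headers.items():
        key = name.lower()  # HTTP header field names are case-insensitive
        if key in SUPPORTED_HEADERS:
            understood[key] = value
        else:
            ignored.append(name)
    return understood, ignored


def handle_request(headers: dict) -> dict:
    """Serve the request; unsupported headers never cause a failure."""
    understood, ignored = split_headers(headers)
    return {"status": 200, "applied": understood, "ignored": ignored}
```

Calling `handle_request({"Content-Type": "application/json", "X-Cloud-B-Burst": "on"})` in this sketch still succeeds: the extension header from another provider is simply set aside rather than rejected.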

Having an API is important. It’s what makes integration of applications, infrastructure, and ultimately clouds possible. But it isn’t the definition of that interface across disparate implementations of similar technology that will make or break intercloud. What will make or break intercloud is the definition of a consistent semantic model for core services and components that can be used to describe the technologies and policies and meta-data necessary to enable interoperability.

David Linthicum reported “A recent survey shows that IT leaders are more ready to adopt cloud computing, an indication they see growth coming” as he asked Will cloud computing save the economy? in this 7/27/2010 post to InfoWorld’s Cloud Computing blogs:

As CRN reported, "68 percent of respondents said cloud computing will help their businesses recover from the recession" in a survey of more than 600 IT and business decision makers in the United States, the United Kingdom, and Singapore on behalf of cloud infrastructure and hosted IT provider Savvis.

The survey found that 96 percent of IT decision makers are as confident or more confident than in 2009 that cloud computing is ready for the enterprise, and that "7 percent of IT decision makers said they use or are planning to use enterprise-class cloud computing solutions within the next two years." So will this fuel economic growth?

While this is good news, it is perhaps a stretch to focus on cloud computing as something that will "fuel economic growth." Cloud computing is very effective, but the chances that business channels in two years will cover it as an economic change engine are iffy -- it's an expectation that's unrealistic to ask cloud computing, or any technology, to live up to.

A more realistic opportunity is growth in the cloud computing technology space, as providers ramp up for a hoped-for market explosion, and more venture and public money flows into the cloud computing technology companies. Much like the explosion of the Web in the 1990s, it could be the catalyst that gets more investment dollars back into technology -- and translates into jobs and the building of wealth. Now that will fuel the tech economy at least.

At the same time, I'm sure that enterprises and government agencies will find a use for and value in cloud computing, but the savings won't be apparent until 2013, if past patterns are a meaningful guide. Moreover, it will be evolutionary rather than revolutionary. Like other hyped technologies, we won't understand the true benefits until after -- well, it's no longer being hyped, and surveys such as this are long forgotten.

<Return to section navigation list>

Windows Azure Platform Appliance

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Cloud Tweaks reported VMworld® 2010, the Leading Virtualization Event of the Year, Comes to San Francisco and Copenhagen — This Year Educating Attendees on the Virtual Road to Cloud Computing on 7/27/2010:

PALO ALTO, CA–(Marketwire – July 27, 2010) – VMware, Inc. (NYSE: VMW), the global leader in virtualization solutions from the desktop through the datacenter and to the cloud, today announced that VMworld® 2010 makes its return to San Francisco Aug. 30 through Sept. 2 at Moscone Center and will also be held Oct. 12-14 at The Bella Center in Copenhagen. With more than 19,000 attendees expected worldwide and more than 200 sponsors and exhibitors, including Global Diamond sponsors Cisco, Dell, EMC and NetApp and Global Platinum sponsors HP and Intel, VMworld 2010 will spotlight VMware and the industry’s commitment to virtualization and the transformation to IT as a Service.

The theme of VMworld 2010 is “Virtual Roads. Actual Clouds,” showcasing the journey to cloud computing through virtualization. Attendees will explore new roads that will help them discover, learn and break new ground in transforming IT.

VMworld offers attendees informative deep-dive technical sessions and hands-on labs training, plus access to a wealth of technology and cloud partners gathering in San Francisco and Copenhagen. Attendees will share and gain practical knowledge around virtualization best practices, building a private cloud, leveraging the public cloud, managing desktops as a service, virtualizing enterprise applications and more.

This year, all VMworld labs will be powered by the VMware LabCloud portal, a self-service interface custom-built to enable attendees to access lab courses and content, allowing VMware to increase the number of labs offered and provide attendees more opportunities to explore how virtualization can make a powerful impact on their organization. VMworld will stage more than 22,000 lab seats through 480 simultaneous user workstations during the four-day event. To build out this environment, VMware has committed more than 75,000 man-hours in lab creation and development to produce 30 lab topics — all of which are powered by the VMware vSphere™ cloud infrastructure platform and hardware technologies donated by more than a dozen sponsors. The labs will cover everything from virtualized desktop infrastructure, through the VMware vSphere-powered datacenter and into the VMware-powered cloud. With easy access to more than 150 VMware subject matter experts on hand to answer questions and explore options, participants will get one-on-one attention and still have the flexibility to move at their own pace.

VMworld 2010 Registration

To register to attend VMworld 2010, please visit www.vmworld.com.

For questions about press registration, please contact: vmworld10@outcastpr.com

For questions about analyst registration, please contact: vmwarear@nectarpr.com

For a current list of sponsors, please visit: http://www.vmworld.com/community/conferences/2010/sponsors-exhibitors/

To network and join VMworld discussions on Facebook, Twitter, and other social media, please visit: http://www.vmworld.com/community/discussion-document/

<Return to section navigation list>

Other Cloud Computing Platforms and Services

CSC’s Ron Knode joins the cloud standards fracas by asking Cloud Standards Now!? in this 7/27/2010 post:

Wouldn’t it be wonderful if we could simply point to cloud standards and claim that such standards could reliably lubricate government adoption of safe, dependable, accreditable cloud computing?! Sadly, we cannot. At least, not yet.

And, this fact is as true for commercial adoption of cloud computing as it is for government adoption. It is also the subject of this month’s question in the Mitre Cloud Computing Forum for Government.

Well, we don’t have the standards, but what we do have is the collective sense that such standards are needed, and the energy to try to build them. Furthermore, while the “standards” we need do not yet exist, we are not without the likely precursors to such standards, e.g., guidelines, so-called best practices, threat lists, special publications, and all manner of “advice-giving” items that try to aim us in the right direction (or at least aim us away from the very wrong direction). In fact, we have so many contributors working on cloud standards of one kind or another that we are in danger of suffering the “lesson of lists” for cloud computing.

Nevertheless, given our desire to reap some of the benefits of cloud computing, should we not try to accelerate the production, publication, and endorsement of cloud computing standards from the abundance of sources we see today?

Wait a minute! Standards can be a blessing or a curse. On the one hand, standards make possible reasonable expectations for such things as interoperability, reliability, and the assignment and recognition of authority and accountability. On the other hand, standards, especially those generated in haste and/or without widespread diligence and commentary, can bring unintended consequences that actually make things worse. Consider, for example, the Wired Equivalent Privacy (WEP) part of 802.11 or the flawed outcomes and constant revisions for the PCI DSS (remember Hannaford and Heartland!?).

Furthermore, even when standards are carefully crafted and vetted with broad and intensive review, they can still be misinterpreted and misapplied by users, leading to surprising outcomes such as the USB device flaws that showed up in products with FIPS 140-2 certified modules. Even the best standards require an informed and sensible application on the part of users.

What we seek are standards that lead us into trusted cloud computing, not just “secure” cloud computing or even “compliant” cloud computing. Ultimately, any productive stack of standards must deliver transparency to cloud computing. Otherwise, cloud consumers will remain trapped in the never-ending cycle of cloud security claims, with no easy way for individual and independent validation, and cloud vendors will remain stymied by having no regular way to demonstrate their conformance without a lot of technical mumbo-jumbo or expensive and time-consuming third party intervention.

Simply having cloud standards just to have standards does not bring any enterprise closer to the promised payoffs of the cloud.

Let’s consider the possibility that different categories of standards, or tiered standards, may be required to cover all of the important use cases for government and commercial use. Not every cloud or every cloud market need necessarily be encumbered with every cloud standard.

Finally, let’s consider also what it might take to demonstrate compliance with standards. For example, it is unlikely that “whole cloud” compliance will be effective or efficient across the board. A standard that is more trouble than it’s worth is not going to be very helpful at all.

So, let’s proceed with all deliberate speed through some of the worthy efforts ongoing, but not declare success merely for the sake of an artificial deadline or competitive advantage. The cloud definition and certification efforts sponsored by NIST and GSA, the security threat and guidance documents authored by the Cloud Security Alliance, the cloud modeling work of the OMG, the cloud provider security assertions technique proposed by Cloudaudit.org, and the CloudTrust Protocol that extends SCAP notions and techniques to reclaim transparency for cloud computing — all of these efforts certainly hold promise for accelerating the adoption of cloud computing for government and industry.

Let’s push and participate in the actions of these and other groups. Ask questions, experiment, build prototypes, consider all manner of outcomes, and seek extensive and deliberate peer review. Standards that survive such a process can be endorsed. But, like fine wines, cheeses, (and even thunderstorms), “We will accept no cloud standard before its time.”

Ron Knode is Director of Global Security Solutions for CSC and a researcher with the Leading Edge Forum.

Audrey Watters describes Facebook’s, Twitter’s and 37Signals’ plans to build their own cloud data centers in her When Startups Grow Out of the Cloud post to the ReadWriteCloud of 7/27/2010:

Cloud computing has been a boon to tech startups, allowing them to build, launch and scale without substantial up-front investment in hardware. But at what point does moving from the cloud to a data center make more sense - for both performance and cost?

Facebook announced plans earlier this year to build a custom data center in Prineville, Oregon, and Twitter announced last week that it plans to build one near Salt Lake City, Utah. And web app maker 37Signals isn't building its own data center, but it did reveal last week that it will move its infrastructure from Rackspace hosting to a colocation space in a Chicago data center.

As Jonathan Heiliger, Facebook's VP of Technical Operations said at last month's Structure 2010 conference, "For a consumer web site starting today, I would absolutely run on the cloud. It allows you to focus on building your product. But if you have 10 million users, that's a pretty big check I'm writing to someone else. How much control do I have?"

Data Centers Give More Flexibility, More Control?

37Signals Operations Manager Mark Imbriaco explains the growth of 37Signals as such: since moving to the cloud four years ago, "we've grown from around 15 physical machines to a mixture of around 150 physical and virtual machines. We've also grown from having less than 1TB of data to on the order of 80TB of data today. Our needs have evolved a great deal as we've grown and we reached the point where it made sense for us to acquire our own hardware and manage our own datacenter infrastructure. The amount of flexibility that we have with our own environment makes it much easier for us to use some specialized equipment that meets our needs better than the solutions that Rackspace generally supports."

The desire for greater flexibility and control was also given by Twitter as rationale for its new data center. According to Twitter's Engineering Blog, "Twitter will have full control over network and systems configuration, with a much larger footprint in a building designed specifically around our unique power and cooling needs. Twitter will be able to define and manage to a finer grained SLA on the service as we are managing and monitoring at all layers. The data center will house a mixed-vendor environment for servers running open source OS and applications."

Implication for the Cloud

It isn't surprising that rapidly growing companies like Twitter and Facebook have reached a point where it pencils out to have their own data center. But what are the implications for cloud computing - something that promises infinite scalability?

And more importantly perhaps, the justifications given by Twitter and 37Signals for building a data center or moving to a colocation facility are less about cost and performance than they are about sufficient controls. Are public cloud providers doing enough to offer their enterprise customers the flexibility and control they want?

Leena Rao reported Google: City Of Los Angeles Apps Delay Is Overblown in a post of 7/26/2010 to the TechCrunch blog:

Google has long been touting the deployment of its productivity suite and Microsoft Office-killer Google Apps to the City of Los Angeles. The City planned to equip its 34,000 employees with Google Apps, replacing Novell’s GroupWise system, the e-mail technology provider that LA had previously been using. But unfortunately, the process of transitioning the government entity over to the cloud-based system has seen a few speedbumps to say the least. In April, LA City administrators began questioning the move thanks to productivity, security and slowness issues with Google Apps. During that time, there was also the possibility of a delay in the full deployment of the system to employees because of these concerns.

On Friday, we learned that this delay became a reality, and Google missed its June 30 deadline to deploy Apps to all 34,000 employees. But today, at the launch of Google Apps for Government, a specialized version of the suite to meet government security needs, Google said the situation was in fact overblown.

Here’s the statement we were given by Google on the issue:

“The City’s move to the cloud is the first of its kind, and we’re very pleased with the progress to date, with more than 10,000 City employees already using Google Apps for Government and $5.5 million in expected cost savings to Los Angeles taxpayers. It’s not surprising that such a large government initiative would hit a few speed bumps along the way, and we’re working closely with CSC and the City to meet their evolving requirements in a timely manner and ensure the project is a great success for Los Angeles.”

As for the delay, Google says that they are working with the City of LA to “address requirements that were not included in the original contract.” One example of these possible requirements that came up is that the LAPD wants to conduct background checks on all Google employees that have access to Google Apps data in the cloud. Doing these checks of course adds more time to the administrative clock.

Google spokesman Andrew Kovacs declined to get into any specifics on what requirements were “unforeseen” but he did say that the delay is actually only a matter of months, and that the full deployment should take place this year. And the delay will result in no additional payments by the City of Los Angeles.

So why has this particular deployment become somewhat of a comedy of errors and an apparent communications breakdown? Washington D.C. and Orlando, FL have made a similar move to Google Apps without so much drama. But the City of LA is Google’s largest government Apps transition to date, so we could just be witnessing the growing pains of such a large deployment within a government entity.

However, as Google starts expanding apps to larger government organizations, the company is bound to face ongoing bureaucratic issues involving security concerns, outdated systems, and more. If Google is actively targeting government organizations for Apps (which seems to be apparent from today’s announcement), then this is probably not going to be the first or the last time deployment is bogged down in bureaucratic questioning.

Photo credit/Flickr/KLA4067

<Return to section navigation list>