Windows Azure and Cloud Computing Posts for 6/30/2010+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this daily series. |

Update: Items added 7/1/2010 are marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

This is a preliminary addition. More posts will be added the morning of 7/1/2010.

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in June 2010 for the January 4, 2010 commercial release.

Azure Blob, Drive, Table and Queue Services

• Panagiotis Kefalidis wrote Windows Azure: Custom persistence service for WF 4 saving information on Windows Azure Blob on 6/30/2010:

Recently, I’ve been looking for a way to persist the status of an idling Workflow on WF4. There is a way to use SQL Azure to achieve this, after modifying the scripts because they contain unsupported T-SQL commands, but it’s total overkill to use it just to persist WF information if you’re not using the RDBMS for another reason.

I decided to modify the FilePersistence.cs of the Custom Persistence Service sample in WF 4 Samples Library and make it work with Windows Azure Blob storage. I’ve created two new methods to Serialize and Deserialize information to/from Blob storage.

Here is some code:

Just make sure you’ve created [the] “workflow_persistence” blob container before using these methods.
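As a rough illustration of the serialize/deserialize pattern described above (not the author's C# code), the following Python sketch stores one blob per workflow instance. `InMemoryBlobContainer`, `save_instance`, and `load_instance` are hypothetical names, and the container class is an in-memory stand-in rather than a real Azure storage client.

```python
import json
from typing import Any, Dict


class InMemoryBlobContainer:
    """Hypothetical stand-in for a blob container; not a real Azure SDK class."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def upload(self, blob_name: str, data: bytes) -> None:
        # Overwrites any existing blob with the same name.
        self._blobs[blob_name] = data

    def download(self, blob_name: str) -> bytes:
        return self._blobs[blob_name]

    def exists(self, blob_name: str) -> bool:
        return blob_name in self._blobs


def save_instance(container: InMemoryBlobContainer, instance_id: str,
                  state: Dict[str, Any]) -> None:
    """Serialize the instance state and store it as one blob per instance."""
    container.upload(instance_id, json.dumps(state).encode("utf-8"))


def load_instance(container: InMemoryBlobContainer,
                  instance_id: str) -> Dict[str, Any]:
    """Fetch and deserialize an instance's blob; empty dict if none was saved."""
    if not container.exists(instance_id):
        return {}
    return json.loads(container.download(instance_id).decode("utf-8"))


container = InMemoryBlobContainer()
save_instance(container, "instance-1", {"bookmark": "WaitForApproval", "retries": 2})
restored = load_instance(container, "instance-1")
```

Keying each blob by the workflow instance ID keeps loading an idle instance to a single lookup; a real implementation would swap the stand-in for calls to the storage service.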


SQL Azure Database, Codename “Dallas” and OData

• Wayne Walter Berry explains Security Considerations with SQL Azure and PowerPivot in this 6/30/2010 post:

In this earlier blog post, I discussed how to enable access to SQL Azure from PowerPivot. Now that access is enabled and the data is available to you, let’s examine how PowerPivot and SQL Azure work from a security perspective.

Accessing the Data

In order to use PowerPivot against your SQL Azure data, you need to download the data into the Excel workbook. This earlier blog post covers how to give the user access through the SQL Azure firewall and how to create a user account in SQL Azure for accessing the data.

A Copy of the Data

When using PowerPivot the analysis of the data is done by PowerPivot and Excel on the user’s computer. In order to do that, the data is imported from SQL Azure to Excel by PowerPivot and stored in the Excel workbook. In fact all the data that is imported is readable by the user in Excel via the PowerPivot Window.

In other words, a copy of the data now exists in the Excel workbook and that data is outside the access control of SQL Azure and the SQL Azure Administrator. Even if the SQL Azure Administrator restricts the user’s access to SQL Azure after they downloaded the data, they can still access the data in their Excel workbook.

Encrypting the Excel Workbook

Once the data is in the Excel workbook, the workbook can be protected using the encryption feature in Excel; for more about that, click here. This allows the Excel user to password-protect the workbook and the data inside it.

Connecting to SQL Azure

When PowerPivot connects to SQL Azure to import data, it is done through a secure connection. All traffic in or out of SQL Azure is encrypted, regardless of the client. This is done automatically in the .NET Data Provider for SQL Server when you create a connection with PowerPivot; see this blog post for information about creating a connection.

Login and Password Information

When you create a connection in PowerPivot you are asked for your login and password to SQL Azure. Right below the request for information is a check box that says: Save my password.

If you check this box, the password to SQL Azure is saved encrypted in the Excel workbook. The connection string is used in two places: by the PowerPivot user interface and by the VertiPaq storage engine that processes the data. The PowerPivot user interface doesn’t save the password in the Excel workbook; however, it does store it in memory for the life of the application. If you close Excel, reopen your workbook, and want to add additional tables (see this blog post for how to add additional tables), you will have to reenter the password; the user interface requires it.

If you just refresh the data in the Excel workbook after you open it and you requested that the password be saved when you created the connection, the VertiPaq storage engine in Excel has stored the password. During the refresh it can query SQL Azure and is able to import the new data. If you didn’t check the box to save the password when you created the connection, then you will be prompted for the password.

Bottom line: if you don’t want your SQL Azure password to be saved in the Excel workbook, don’t check the Save my password checkbox when you create the connection.

• Sanjay Jain’s Pervasive Data Integrator Universal CONNECT! offers OData integration is a 00:03:20 Channel9 video demo of 6/29/2010:

Pervasive Software® Inc., a Microsoft Gold Certified Partner and recent “Best of SaaS Showplace” award winner, enables the integration and connectivity to the Open Data Protocol (OData) using the Microsoft Dynamics certified Pervasive Data Integrator™. Pervasive Data Integrator Universal CONNECT! has an unparalleled range of connectivity and integration capabilities to a variety of on-premises, on-demand and cloud-based applications and sources, including the Open Data Protocol (OData). OData, a web protocol for querying and updating data, applies web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores. There is a growing list of products that implement OData. Microsoft supports the Open Data Protocol in SharePoint Server 2010, Excel 2010 (through SQL Server PowerPivot for Excel), Windows Azure Storage, SQL Server 2008 R2, and Visual Studio 2008 SP1.
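Because OData rides on plain HTTP, as noted above, a query is essentially a URL with a few system query options. The sketch below assembles such a URL in Python; the service root `https://example.invalid/catalog.svc` and the `Titles` entity set are made-up placeholders, and `build_odata_query` is a hypothetical helper, not part of any OData library.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def build_odata_query(service_root: str, entity_set: str,
                      filter_expr: Optional[str] = None,
                      order_by: Optional[str] = None,
                      top: Optional[int] = None) -> str:
    """Build an OData query URL from the $filter, $orderby and $top options."""
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if order_by:
        options["$orderby"] = order_by
    if top is not None:
        options["$top"] = str(top)

    url = f"{service_root.rstrip('/')}/{entity_set}"
    if options:
        # Percent-encode spaces and quotes, but keep the leading "$" readable.
        url += "?" + urlencode(options, quote_via=quote, safe="$")
    return url


# Hypothetical service and entity set, for illustration only.
url = build_odata_query("https://example.invalid/catalog.svc/", "Titles",
                        filter_expr="Rating ge 4", order_by="Name", top=10)
```

A client would then issue an HTTP GET against the resulting URL and read back an AtomPub or JSON payload.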

• Lei Li explains the Performance Impact of Server-side Generated GUIDs in EF, which will interest you if you’re connecting Entity Framework v4 with SQL Azure, in this 6/28/2010 post to the ADO.NET Team blog:

Background

EF4 supports the use of server-generated GUIDs, which has been a popular request since EF 3.5. Lee Dumond summarized his experience on how to use this feature very well. But you may find that the performance of server-generated GUIDs is not as fast as client-side generated GUIDs or even the server side Identity columns of other types like int, smallint etc. I would like to take a few moments to explain why that is and how we are going to improve it.

Performance Analysis

First of all, we need to take a look at the SQL script that is generated by EF. Depending on the type of the server-side generated key column, there are two variations of SQL, as listed below:

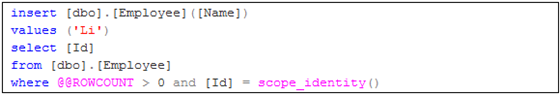

1. Identity type supported by Scope_Identity(), such as int, bigint and smallint, etc. For example in the statement below, the Id is an int.

2. Identity type not supported by Scope_Identity(), such as uniqueidentifier. For instance the Id in the statement below is a uniqueidentifier.

To measure the relative execution time, we ran those two insert statements in one batch with Actual Execution Plan enabled. As you can see in the diagram below, the second batch of SQL takes about twice as long as the first batch.

Reason

You may wonder why we don’t just get rid of the table variable in the second SQL script (as shown in the table below) to make it faster.

The reason is that an OUTPUT clause without INTO in update statements can be problematic when you have triggers defined on the table. You can read more about this here and here. Basically, we want to make sure that when the table has triggers on insert, our generated SQL will still work.

Workaround and Future Improvements

If you want to have better performance on the insert, you can always generate the GUID on the client side.
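The client-side workaround can be sketched as follows. This is a minimal illustration, not Entity Framework code: it uses Python's `uuid` module and an in-memory SQLite database as a stand-in for SQL Azure, and the `Customers` table layout is an assumption. The point is that the key is known before the insert, so no follow-up query is needed to read it back.

```python
import sqlite3
import uuid

# In-memory SQLite stands in for the real database (assumption for the sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Customers (Id TEXT PRIMARY KEY, Name TEXT NOT NULL)")


def insert_customer(conn: sqlite3.Connection, name: str) -> str:
    """Insert a row whose GUID key is generated on the client."""
    customer_id = str(uuid.uuid4())  # generated locally, no server round trip
    conn.execute("INSERT INTO Customers (Id, Name) VALUES (?, ?)",
                 (customer_id, name))
    return customer_id


new_id = insert_customer(conn, "Contoso")
row = conn.execute("SELECT Name FROM Customers WHERE Id = ?", (new_id,)).fetchone()
```

The trade-off is that random client-generated GUIDs are not sequential, which may matter if the key column is also the clustered index.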

We would like to improve the performance in server-generated GUID scenarios by supporting Function in the DefaultValue attribute on the Property definition in SSDL, so that users can define a store function “newid()” in SSDL and reference it in the DefaultValue of the Id Property on the Customer table. EF will then generate the following SQL, which improves the performance considerably (50% faster).

Here is the Actual Execution Plan from running both the new SQL script and the original SQL script.

Rob Saunders’ four-part Working with Entity Framework v4 and SQL Azure blog series explains in detail how to use EF v4 with SQL Azure.

Cihangir Bihikoglu’s Transferring Schema and Data From SQL Server to SQL Azure – Part 1: Tools post of 6/29/2010 is the first of a series that recommends SQL Server to SQL Azure migration tools by task:

Many customers are moving existing workloads into SQL Azure or are developing on premises but moving production environments to SQL Azure. For most folks, that means transferring schema and data from SQL Server to SQL Azure.

When transferring schema and data, there are a few tools to choose from. Here is a quick table to give you the options.

Here is a quick overview of the tools:

Generate Script Wizard

This option is available through Management Studio 2008 R2. GSW has a built-in understanding of the SQL Azure engine type and can generate the correct options when scripting a SQL Server database schema. GSW provides fine-grained control over what to script. It can also move data, especially if you are looking to move small amounts of data one time. However, for very large data sets, there are more efficient tools to do the job.

Figure 1. To use the Generate Script Wizard, right-click the database, then go to Tasks and select “Generate Script”.

DAC Packages

DACPacs are a new way to move schema through the development lifecycle. A DACPac is a self-contained package of all database schema plus the developer’s deployment intent, so DACPacs do more than just move schema, but they can be used for easy transfer of schema between SQL Server and SQL Azure. You can use DACPac pre- or post-deployment scripts to move data, but again, for very large data sets, there are more efficient tools to do the job.

Figure 2. To access DAC options, expand the “Management” section in the SQL instance and select “Data-tier Applications” for additional options.

SQL Server Integration Services

SSIS is a best-of-breed data transformation tool with fully programmable flow, including loops, conditionals and powerful data transformation tasks. SSIS provides full development lifecycle support with a great debugging experience. Beyond SQL Server, it can work with a diverse set of data sources and destinations for data movement. SSIS is also the technology behind easy-to-use utilities like the Import and Export Wizard, so it can be a powerful tool for moving data around. You can access the Import and Export Wizard directly from the SQL Server 2008 R2 folder under the Start menu.

BCP & Bulk Copy API

The bulk copy utility is both a tool (bcp.exe) and an API (System.Data.SqlClient.SqlBulkCopy) for moving structured files in and out of SQL Server and SQL Azure. It provides great performance and fine-grained control over how the data gets moved. There are a few options that can help fine-tune data import and export performance.

In Part 2 of this post, we’ll take a closer look at bcp and high performance data uploads.

Cihangir didn’t mention my favorite: George Huey’s SQL Azure Migration Wizard. See Using the SQL Azure Migration Wizard v3.1.3/3.1.4 with the AdventureWorksLT2008R2 Sample Database.


AppFabric: Access Control and Service Bus

• Dr. Cliffe wrote Introducing the WF Security Pack CTP 1 on wf.codeplex.com on 6/30/2010:

Today, we would like to announce the release of the WF Security Pack CTP 1 on http://wf.codeplex.com. Where did it come from? Quite simply, from you: real WF 4 customers who have spent time banging their heads against the wall trying to get certain security scenarios working with WF 4. From your feedback, we’ve put together this Activity Pack to fill in some gaps that you have identified. Let’s take a quick look at what is covered.

The Microsoft WF Security Pack CTP 1 is a set of 7 security-related activities, designers, and the associated source code based on WF 4 and the Windows Identity Foundation (WIF). The scenarios we targeted were the following: [Emphasis added.]

- Impersonating a client identity in the workflow.

- In-workflow authorization, such as PrincipalPermission and validation of Claims.

- Authenticated messaging using ClientCredentials specified in the workflow, such as username/password or a token retrieved from a Security Token Service (STS).

- Flowing a client security token through a middle-tier workflow service to a back-end service (claims-based delegation) using WS-Trust features (ActAs).

Now, my question for you is: are these the right set of scenarios to target for the next .NET framework release? What is missing? Which is the most important? Again, we want your feedback so that we can make the right decisions for the long-term benefit of the product. Use the Discussions tab, our WF 4 Forums, a carrier pigeon, whatever it takes to get us some feedback.

Ok, here are three great ways to get started:

- Download the WF Security Pack CTP 1 from wf.codeplex.com, add a reference to the Microsoft.Security.Activities.dll in your WF 4 project, and check out the “Security” tab in the Toolbox.

- Take a quick read through the User Guide introduction to get a feel for what is included.

- Download the WF Security Pack CTP 1 Source code, open up the WorkflowSecurityPack.sln, and take a look at the activity APIs and the rest of the moving pieces of the implementation.

In the next couple of weeks, we’ll take an in-depth look at these scenarios and how you can use the WF Security Pack CTP 1 in your projects. Stay tuned for that content here on The .NET Endpoint & on zamd.net (special thanks to Zulfiqar Ahmed, Microsoft Consultant, for his help in building this Activity Pack!).

• Kent Brown reported New Web Services Interop site on MSDN in this 6/30/2010 post to the WCF Endpoint blog:

Interoperability has been a primary goal of the web services standards from the beginning. After all, why would you go to the trouble to form a cross-industry initiative to essentially re-invent advanced distributed programming protocols like CORBA and DCOM, unless you could create standards that were broadly supported and truly interoperable? Interoperability is also fundamental to the whole promise of service-orientation. From its inception, WCF was created to enable this interoperable, service-oriented vision.

Achieving something of this magnitude on an industry-wide scale is not an easy task. It's been a long road, but Microsoft and the other platform vendors have stuck with the vision of SOAP and WS-*, and the end result is solid and getting better with each release of the various platforms that support it.

We've just published a new page on MSDN to be the one place you can go to learn about web services interop. We have information there about current interop test results between WCF in .NET 4 and the latest releases of the major Java web services stacks. We also have white papers that show specific scenarios that are supported and How-To guidance for developers. And we have information about the various interop activities and communities Microsoft is involved in. Check it out.

• Ron Jacobs wants you to help Make WCF Simpler on 6/29/2010 by participating in a survey from the WCF Endpoint blog:

If there is one thing I hear from people over and over again, it is this: please make WCF simpler, easier to understand and use. I totally agree with this sentiment. In fact, in .NET 4 we made it much easier, but we still have a long way to go. Once in a while each of us gets the opportunity to change the world for the better… (ok we aren’t solving world hunger here but…) If you want your voice to be heard, take a minute and fill out this survey.

• John Fontana wrote CIS series. John Shewchuk: A futuristic identity on 6/25/2010 for the Cloud Identity Summit Conference (see the Cloud Computing Events section):

John Shewchuk plans to arrive in Colorado’s Rocky Mountains for July’s Cloud Identity Summit with a crystal ball and both eyes fixed on the future.

Microsoft’s technical fellow for cloud identity and access plans to speak on what he sees as a coming storm of open identity protocols and the emergence of a claims-based model tied to those specifications. The plan is to gaze 10 years into the future and discuss challenges users will face with identity in the cloud.

“There will be challenges with multiple devices, multiple organizations and with roaming, we’ll look at where authorization is headed and go through some scenarios,” he said.

One of those scenarios could involve consumer issues such as determining whose music player a Bluetooth-enabled stereo picks out when a husband and wife get in a car together. “What permissions should you experience in that case?” he said.

Innovations happening today will determine if there will be an answer to that question in the future.

“If all of this is going to be successful it needs to be built on open protocols,” Shewchuk said. “As we move forward, a lot of work the industry has done with identity in the enterprise space becomes internet protocol based,” he said.

In the next 2-3 years convergence could begin around emerging protocols, with OpenID and OAuth 2.0 currently being the popular front runners.

Today, Microsoft and others are supporting those new protocols even as they build out platforms based on SAML, WS-Trust and other more familiar standards.

But Shewchuk says the convergence of open protocols is producing the foundation for the scenarios flickering within his crystal ball.

His car stereo example might include an Apple device, a Sony stereo and a Microsoft service. A scenario in the business space might center on two companies who want to share documents in an environment that lets administrators from each organization retain access controls for their own user base, and provides IT with auditing and tracking capabilities among other tools.

Shewchuk will explain how the goals of those scenarios, and others in health care and government, can be met.

“We will talk about what is common among these things and what we can conclude,” he said. “I will define the characteristics of what these protocols need to do, and look at all the options.”

Register for the Cloud Identity Summit, July 20-22, 2010 at Colorado's Keystone Resort.

Follow John Fontana on Twitter and check out our Identity-Conversation Tweet list.

Read about other cloud identity luminaries covered by John’s CIS Series here.

• Sesha Mani summarized WIF Workshops, June 2010 update for Identity Training Kit, and a Patterns & Practices Guide on “Claims-based Identity” on 6/30/2010 on behalf of the WIF Team:

Vittorio in the DPE (Developer Platform and Evangelism) team has been touring the world evangelizing the claims-based identity model and WIF. As a result, there is an excellent set of resources for you to learn WIF! Check out the 10-part WIF Workshop recordings that cover topics such as the basics of claims-based identity and WIF, the scenarios that WIF enables, how WIF plugs into the ASP.NET pipeline, how WIF plays with WCF, and how WIF plays a key role for identity management in Azure. If you want to grab the presentation decks of these WIF Workshops, check out the latest June 2010 update of the Identity Developer Training Kit.

Eugenio Pace in the Patterns & Practices team has published a guide on “Claims-based Identity and Access Control”. It is an excellent guide for understanding the benefits of the claims-based identity model when you are planning a new application or making changes to existing applications that require user identity information. You can also purchase a hard copy of this guide from your favorite online book stores.


Eugenio Pace posted Identity Federation Interoperability – WIF + ADFS + Sun’s OpenSSO on 6/20/2010:

As I announced some time ago, we’ve been working on a few labs that demonstrate interoperability with 3rd party identity components. More specifically:

- CA SiteMinder 12.0

- IBM Tivoli Federated Identity Manager 6.2

- Sun OpenSSO 8.0

The general architecture of the lab follows what is described in chapter 4 of the Claims Guide and is illustrated below:

All configurations are very similar. Each Identity Provider (IdP) supplies a slightly different set of claims.

- The application (aExpense from the Claims Guide) trusts ADFS (acting as a Federation Provider)

- ADFS is configured with multiple issuers and is responsible for:

  - “Home realm discovery” (in the lab this is simply a drop-down box the user has to choose from)

  - Token transformation (tokens issued by the different IdPs are converted into another one used by the app)

- Each IdP is responsible for authenticating its users and issuing a token
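The token transformation step can be pictured as a per-issuer mapping from each IdP's claim types to the claim types the application expects. The Python sketch below is purely illustrative: the issuer keys and claim names are invented for the example and do not come from the lab or from ADFS configuration.

```python
from typing import Dict

# Hypothetical per-issuer rules: incoming claim type -> claim type the app expects.
TRANSFORMATION_RULES: Dict[str, Dict[str, str]] = {
    "opensso": {"uid": "name", "memberOf": "role"},
    "tivoli": {"principal": "name", "group": "role"},
}


def transform_claims(issuer: str, incoming: Dict[str, str]) -> Dict[str, str]:
    """Map an IdP's claims onto the application's claim set, dropping the rest."""
    rules = TRANSFORMATION_RULES.get(issuer)
    if rules is None:
        # Only issuers configured as trusted get their tokens transformed.
        raise ValueError(f"untrusted issuer: {issuer}")
    return {rules[claim_type]: value
            for claim_type, value in incoming.items()
            if claim_type in rules}


app_claims = transform_claims(
    "opensso", {"uid": "mary", "memberOf": "Employee", "mail": "mary@example.invalid"})
```

Because every issuer is normalized to the same output claim types, the application only ever has to understand one token shape.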

This first post shows how it works for OpenSSO.

I wanted to thank my colleague Claudio Caldato, from Microsoft interoperability labs for allowing us to reuse all his infrastructure, and for helping us configure all components involved.

Interop with OpenSSO

How it works

(Full size diagram here)

End to end demo

(Video here)

• Eugenio Pace repeated the preceding exercise for Identity Federation Interoperability – WIF + ADFS + IBM Tivoli Federated Identity Manager on 6/30/2010:

How it works

(Full size diagram here)

End to end demo

(Video here)

• See the Ping Identity will sponsor the Cloud Identity Summit Conference to be held 7/20 through 7/22/2010 in Keystone, CO entry in the Cloud Computing Events section.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Microsoft Case Studies Team released GIS Provider [ESRI] Lowers Cost of Customer Entry, Opens New Markets with Hosted Services on 6/29/2010:

Since 1969, ESRI has led the development of Geographic Information System (GIS) software. Governments and businesses in dozens of industries use ESRI products to connect business, demographic, research, or environmental data with geographic data from multiple sources. The company wanted to expand the reach of its GIS technology by offering a lightweight solution called MapIt that combines software plus services to provide spatial analysis and visualization tools to users unfamiliar with GIS. ESRI began offering MapIt as a cloud service with the Windows Azure platform, and now ESRI customers can deploy MapIt with Windows Azure and store geographic and business information in the Microsoft SQL Azure database service. By lowering the cost and complexity of deploying GIS, ESRI is reaching new markets and providing new and enhanced services to its existing customers.

Situation

The professionals at ESRI understand that geography connects societies, people, and opportunities. More than a million people in 300,000 organizations around the world use ESRI Geographic Information System (GIS) software—most notably, ArcGIS Desktop—to integrate complex information with detailed geographic data at local, regional, and global scales.

“By freeing customers from having to make large hardware, software, and staffing investments up front, we’re helping lower the cost of GIS entry and increase the return on investment.” (Arthur Haddad, Development Lead and Architect, ESRI)

Since 1969, ESRI has been a leading developer of GIS technology, earning as high as a one-third share of the worldwide GIS software market. Governments, agencies, and businesses in dozens of industries use ESRI products to manage staffing and assets across locations, perform complex analyses, and make more informed decisions. “Our customers use GIS to get more information from their data,” says Renee Brandt, Product Marketing Team Lead at ESRI. “Most data has a spatial component, and when you look at that information geographically, trends and patterns can be identified that would not be intuitively obvious through spreadsheets or tables.”

ESRI offers server, database, desktop, and mobile GIS software, as well as online services and mobile phone technologies. Organizations use ESRI products to build GIS applications that connect almost any type of business, demographic, research, or environmental data with vast amounts of geographic data from multiple sources, such as maps, aerial photography, and satellite data.

Traditionally, GIS applications have been highly customized, complex applications that, while allowing organizations to work effectively with large amounts of diverse data, required a significant investment in software, hardware, and development, and a high degree of user expertise. For instance, organizations often have to restructure their database to work with GIS applications, a process that can require significant time and IT resources.

ESRI wanted to reach new markets and provide an offering for new customers not familiar with GIS by making it easier to view information spatially, without having to invest in a complex infrastructure that they did not otherwise need. “We wanted to offer GIS capability to customers on a platform that enabled them to get started quickly, without the need to reorganize their data, develop an application, or ramp up on a new interface,” says Rex Hansen, Lead Product Engineer at ESRI.

Solution

ESRI chose to offer MapIt as a cloud solution with the Windows Azure platform because it offered a short ramp-up time and familiar technologies that customers were used to working with in their own IT environments. Windows Azure is a development, service hosting, and service management environment that provides developers with on-demand compute and storage to host, scale, and manage web applications on the Internet through Microsoft data centers.

The company designed MapIt to work with the technology infrastructures that many of its customers already have, which often include Microsoft technologies such as SQL Server, Microsoft Office SharePoint Server, and the Silverlight browser plug-in. ESRI chose Windows Azure to take advantage of its easy interoperability with other Microsoft software and services such as Microsoft SQL Azure, a self-managed database service built on technologies in SQL Server 2008.

Because ESRI built MapIt with the Microsoft .NET Framework 3.5 and designed it to work with SQL Server 2008, it takes fewer resources for customers to deploy and maintain MapIt with Windows Azure. “With Windows Azure, we’re in an environment that is ready-made to make the MapIt service function for the job,” says Hansen.

MapIt connects to Windows Azure to prepare and serve data for viewing in a geographical context. From the MapIt Spatial Data Assistant desktop application, customers can upload map data to SQL Azure and geo-enable existing attribute data to prepare it for use in mapping applications. The MapIt Spatial Data Service connects to SQL Azure and provides a web service interface that allows mapping applications to access the spatial and attribute data stored in SQL Azure. ESRI also provides a utility that generates a Spatial Data Service deployment package to upload as a Windows Azure Web role.

To build the applications that display and use the data available in the Spatial Data Service, ESRI developed the ArcGIS application programming interface (API) for Microsoft Silverlight and Windows Presentation Foundation (Figure 1). Customers can use the ArcGIS API to build rich mapping applications with data in SQL Azure or use application solutions developed on Silverlight and integrated with Microsoft Office SharePoint Server 2007 or Microsoft SharePoint Server 2010.

Figure 1. This application displays a map provided by Bing Maps, integrated with selected sets of census data stored in SQL Azure and accessed using the MapIt Spatial Data Service.

ESRI released MapIt for deployment as a service with Windows Azure in November 2009. “When someone deploys MapIt on Windows Azure, they can write a simple application that allows them to use GIS without having to be a GIS expert. It’s mapping for everybody,” says Arthur Haddad, Development Lead and Architect at ESRI. “Then as their needs grow, they can add more analytical capabilities without having to change the application; they can just add the specific services they need and use them with the data they have in SQL Azure.” …

The case study continues with Benefits and other sections.

Tim Anderson reported PivotViewer comes to Silverlight – data as visual collections in conjunction with Steve Marx’s PivotViewer for Netflix demo of 6/29/2010 in a 6/30 post to his IT Writing blog:

Microsoft has released a PivotViewer control for Silverlight. Data visualisation is a key business reason to use Silverlight or Flash rather than HTML and JavaScript for an application, so it is a significant release. But what does it do?

PivotViewer is the latest tool to come out of the Microsoft Live Labs Pivot project. Pivot is based on collections, which are sets of data where each item has an associated image. A pivot item has attributes, similar to properties, called facets; and facets have facet categories. Facet categories are used to filter and sort the data.

More complex Pivot data sets have several linked collections, or dynamic collections which are generated at runtime as a query result. This is necessary if the size of the data set is very large or even unbounded. You could create a web search, for example, that returned a pivot collection.

Once you have created and hosted your Pivot collections, most of the work of displaying them is done by the Pivot client. There is a desktop Pivot client, which is Windows-only; but the Silverlight PivotViewer is more useful since it allows a Pivot collection to be viewed in a web page. The client (or control) does most of the work of displaying, filtering and sorting your data, including a user-friendly filter panel.

PivotViewer also makes use of Deep Zoom, also known as Seadragon, which lets you view vast images over the internet while downloading only what is needed for the small section or thumbnail preview you are viewing.

The result is that a developer like Azure Technical Strategist Steve Marx was able to create a PivotViewer for Netflix with only about 500 lines of code. This kind of product selection is a natural fit for Pivot.

I was quickly able to find the highest-rated music movies in the Netflix Instant Watch collection.

Starting with the full set, I checked Music and Musicals and then set Rating to 4 or over.
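The collection model described earlier (items with an image and a set of facets, filtered and sorted by facet category) can be sketched in a few lines of Python. This is an illustrative data model only, not the Pivot collection format or the PivotViewer API; the class, the facet names and the sample titles are all invented.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class PivotItem:
    """One collection item: a name, an associated image, and its facets."""
    name: str
    image: str
    facets: Dict[str, Any] = field(default_factory=dict)


def filter_items(items: List[PivotItem], genre: str,
                 min_rating: float) -> List[PivotItem]:
    """Keep items in the given genre with at least min_rating, best first."""
    matches = [item for item in items
               if genre in item.facets.get("Genre", [])
               and item.facets.get("Rating", 0) >= min_rating]
    return sorted(matches, key=lambda item: item.facets["Rating"], reverse=True)


# Invented sample data, for illustration only.
collection = [
    PivotItem("Title A", "a.jpg", {"Genre": ["Music and Musicals"], "Rating": 4.2}),
    PivotItem("Title B", "b.jpg", {"Genre": ["Drama"], "Rating": 4.8}),
    PivotItem("Title C", "c.jpg", {"Genre": ["Music and Musicals", "Drama"], "Rating": 3.1}),
    PivotItem("Title D", "d.jpg", {"Genre": ["Music and Musicals"], "Rating": 4.6}),
]
top_musicals = filter_items(collection, "Music and Musicals", 4)
```

In Pivot itself the client control performs this filtering and sorting; the sketch only shows why facet categories make it straightforward.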

It seems to me that the strength of Pivot is not so much that it offers previously unavailable ways to visualise data, but more that it transforms a complex programming task into something that any developer can accomplish. Microsoft at its best; though of course it will only work on platforms where Silverlight runs.

<Return to section navigation list>

Windows Azure Infrastructure

• David Chou questioned Standardization in the Cloud – Necessity or Optional? on 6/30/2010:

This is a widely discussed topic as well, along with many others. A recent panel discussion at GigaOm’s Structure 2010 conference had some pretty interesting comments about the question. Sinclair Schuller, who was on that panel, posted the question, and his thoughts, on his blog - Do We Need Cloud API Standards?

Here is my take (though a bit more philosophical one):

My personal opinion is that “formal API standardization” is not “absolutely required”. Philosophically I’m with the “innovate now, standardize later” camp, as I think the trade-offs still favor innovation over standardization in this area today. Besides, the rest of the IT world still operates in that mode, so why would cloud computing have a better chance at standardization?

Fundamentally though, I think we could ask the question a little differently. Instead of applying that question to cloud computing as a whole, it might make more sense, and be more feasible, to look at certain areas/layers in cloud computing as places where standardization may add more value than constraints.

At a high-level, the industry is differentiating between infrastructure, platform, and software as-a-service offerings (i.e., IaaS, PaaS, SaaS). At this moment, specialization levels increase significantly as we move up the stack. Public PaaS offerings such as Windows Azure, AWS, App Engine, Force.com, etc., are already more different than similar, and the differentiations grow as we get into SaaS, and then into information management, and so on. The opportunity for standardization is really only available at the lower levels in IaaS offerings, as there is more commonality and established standards and processes in terms of how customers operate and manage infrastructure. For example, a lot of focus today is to support cloud federation to provide elasticity for private clouds, but that’s just one abstraction layer on top of provisioning and managing VM’s (over-simplifying a bit here). Though over time we might see stability and commonalities grow upwards, and towards the tipping point where standardization in some form may be more feasible for some layers.

However, at the same time, why standardize when, as others have pointed out, companies like Eucalyptus can help mitigate and manage the differences in underlying API’s and provide that abstraction at a certain level? After all, cloud-as-a-platform provides opportunities for people to build layers and layers of abstractions to add value in different ways. Also in a way, this is where cloud computing and traditional on-premise software operations differ, fundamentally. Cloud computing inherently allows us to work in a dynamic environment, where changes can be more frequent, and in fact preferred. On the other hand, on-premise software operations today tend to be more on the static side of things, and standardization helps to manage and mitigate changes and differences when we have a heterogeneous infrastructure to operate.

Thus standardization can be considered an established approach to help us better manage the on-premise world. From this perspective, is it necessary or beneficial to try to enforce this particular traditional approach to a different paradigm? That is of course, if we think cloud computing represents a new paradigm even though it’s built upon existing technologies and best practices. Personally I think cloud computing represents something different than just trying to host VMs in different places, and more benefits can be gained by leveraging it as a new paradigm (and that’s a whole other topic to dive into). :)

David Chou is an Architect at Microsoft, focused on collaborating with enterprises and organizations in many areas such as cloud computing, SOA, Web, RIA, distributed systems, security, etc., and supporting decision makers on defining evolutionary strategies in architecture.

Nicole Hemsoth posted her Too Early for HPC in the Cloud? Microsoft Responds... article to HPC in the Cloud’s Behind the Cloud blog on 6/27/2010:

Last week as I prepared for a lengthy article about the post-virtualization performance gap for high-performance computing, which signifies that it might be too early for HPC in the cloud for many users across a wide range of applications, I reached out to Microsoft's Director of Technical Computing, Vince Mendillo, for a quote about the company's position on the matter. What I received in return was so complete that it seemed most appropriate to give it some room of its own. The answer is not only more complete than typical responses, it provides a unique glimpse into Microsoft's world--at least as it pertains to HPC and cloud. [Emphasis added.]

The following is Mendillo's verbatim email answer, which sheds some light on the possibilities for the future, even if it doesn't touch on many of the significant challenges that actual HPC users are discussing specifically. That might be because the application-specific complaints that are almost always rooted in performance are too scattered across several different research areas and groups or perhaps it might just be because Microsoft has infinite hope about the possibilities of clouds for traditional HPC users. Either way, the response, which is below in non-italics, reiterates Microsoft's position and lends some insight about where the company will be heading in coming months.

High performance computing is at an inflection point and the time has come for high performance computing in the cloud. We believe the cloud can provide enabling technology that will make supercomputing available to a much broader range of users. This means a whole new group of scientists, engineers and analysts that may not have the resources for or access to on-premises HPC systems can now benefit from their power and promise.

It’s important to note that certain HPC workloads are ready for the cloud today (e.g., stochastic modeling, embarrassingly parallel problems) while others (e.g., MPI-based workloads) will take longer to move to the cloud because they require high speed interconnects and high bandwidth for low-latency, node-to-node communications. Data sensitivity and locality are also important considerations—large, highly sensitive data might be better suited to on-premises HPC, while publicly available data in the cloud could fuel new, innovative HPC work.

We feel that organizations will benefit from both on-premise HPC and HPC in the cloud. Among the benefits of this blended model are:

• Economics: On-premise computational resources include more than servers. Much of the on-premise computing cost is infrastructure and labor for most organizations. Other expenses like power, cooling, storage and facilities also have to be factored in. The cloud can provide economic advantages to on-premise-only computational resources. For example, take a “predictable bursting” scenario: In order to provision the computational requirements of an organization – including periods of peak demand – with only on-premise resources, the organization would be paying for capacity that would go unutilized for a large part of the time. By provisioning a predictable level of computational demand with on-premise resources, while at the same time accommodating “bursts” in computational demand with cloud computing, the organization will have much better utilization rates and just pay for what they need.

• Access: Some stand-alone organizations (and workgroups inside bigger companies) today do not have access to on-premises HPC systems. HPC in the cloud gives these organizations an entirely new resource. For example, a small finance or engineering firm that runs a periodic model but doesn’t want a closet full of servers, can readily access high performance computing literally on a moment’s notice.

• Sharing and Collaboration: The cloud enables multiple organizations to easily share data, models and services. With on-premise HPC, sharing involves moving data back and forth through LAN/WAN networks which is impractical and costly for large data. By putting data and models into public clouds, sharing among multiple organizations becomes more practical, creating the possibility for new partnerships and collaborations.
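The “predictable bursting” argument in the Economics bullet can be made concrete with a small back-of-the-envelope calculation. Every figure below (node counts, burst hours, hourly rates) is a made-up assumption chosen only to show the shape of the comparison; none of them comes from Microsoft or from the article.

```python
HOURS_PER_MONTH = 720  # 30 days x 24 hours

# Hypothetical workload: 40 nodes are always busy, and demand peaks
# at 100 nodes for 72 hours of the month (about 10% of the time).
BASELINE_NODES = 40
PEAK_NODES = 100
BURST_HOURS = 72

# Hypothetical all-in hourly rates per node (hardware, power, labor, etc.).
ON_PREM_RATE = 0.50
CLOUD_RATE = 1.00


def on_premise_only_cost(peak_nodes, rate):
    """Cost of owning enough capacity to cover peak demand all month."""
    return peak_nodes * HOURS_PER_MONTH * rate


def blended_cost(baseline_nodes, burst_nodes, burst_hours, on_prem_rate, cloud_rate):
    """Own the baseline on premises; rent only the burst capacity from the cloud."""
    owned = baseline_nodes * HOURS_PER_MONTH * on_prem_rate
    rented = burst_nodes * burst_hours * cloud_rate
    return owned + rented


def utilization(used_node_hours, provisioned_node_hours):
    """Fraction of provisioned capacity that actually does work."""
    return used_node_hours / provisioned_node_hours


burst_nodes = PEAK_NODES - BASELINE_NODES
used = BASELINE_NODES * HOURS_PER_MONTH + burst_nodes * BURST_HOURS

peak_only = on_premise_only_cost(PEAK_NODES, ON_PREM_RATE)
blended = blended_cost(BASELINE_NODES, burst_nodes, BURST_HOURS, ON_PREM_RATE, CLOUD_RATE)
peak_only_utilization = utilization(used, PEAK_NODES * HOURS_PER_MONTH)

print(f"On-premise only: {peak_only:.2f} per month")
print(f"Blended:         {blended:.2f} per month")
print(f"Utilization when provisioning for peak on premises: {peak_only_utilization:.0%}")
```

Under these assumed numbers the blended model costs roughly half as much even though the cloud rate per node-hour is twice the on-premise rate, because the on-premise-only design leaves more than half of its capacity idle. Different rates or a longer burst period would change the outcome.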

By building a parallel computing platform, we can enable new models across science, academia and business to scale across desktop, cluster and cloud to both speed calculation and provide higher fidelity answers. The power of the cloud is a fantastic example of the tremendous computational resources that are becoming available.

Today, scientists, engineers and analysts build models, hand them to software developers to code them (which can take weeks or months) and then hand them to their IT departments to run on a cluster. The time from math (or science) to model to answer is extraordinarily slow. Even once an application is coded, it can take days (or sometimes months) for a simulation to run. Imagine having greater computational power available to simulate interactively throughout the day, taking advantage of clusters and the cloud. Faster analysis reduces time to results and can speed discovery or create competitive advantage. This potential—across nearly every sector—is at the core of Microsoft’s Technical Computing initiative. Consider some of the possibilities when HPC power is more broadly available and accessible:

- Better predictions to help improve the understanding of pandemics, contagion and global health trends.

- Climate change models that predict environmental, economic and human impact, accessible in real-time during key discussions and debates.

- More accurate prediction of natural disasters and their impact to develop more effective emergency response plans.

We’re working with partners and the technical computing community to bring this vision to life. You can tune into the conversation at http://www.modelingtheworld.com.

Nicole Hemsoth is the managing editor of HPC in the Cloud and will discuss a range of overarching issues related to HPC-specific cloud topics in posts, which will appear several times per week in Behind the Cloud. HPC in the Cloud is a new internet portal and weekly email newsletter [that’s] dedicated to covering Enterprise & Scientific large scale cloud computing. The portal will provide technology decision-makers and stakeholders (spanning government, academia, and industry) with the most accurate and current information on developments happening in the specific point where Cloud Computing and High Performance Computing (HPC) intersect. Tabor Communications’ press release of 4/20/2010 announced the new portal.

<Return to section navigation list>

Cloud Security and Governance

• Salvatore Genovese recommended “Leverage existing IdM and security event management (SIEM) systems for increased adoption and greater business value” in his Taking Control of the Cloud for Your Enterprise post of 6/30/2010:

This white paper [by Intel] is intended for Enterprise security architects and executives who need to rapidly understand the risks of moving business-critical data, systems, and applications to external cloud providers. Intel's definition of cloud services and the dynamic security perimeter are presented to help explain three emerging cloud-oriented architectures. Insecure APIs, multi-tenancy, data protection, insider threats, and tiered access control cloud security risks are discussed in depth. To regain control, the white paper from Intel illustrates how a Service Gateway control agent can be used to protect the enterprise perimeter with cloud providers. Various deployment topologies show how the gateway can be used to leverage existing IdM and security event management (SIEM) systems for increased adoption and greater business value.

Lori MacVittie asserts Devops needs to be able to select COMPUTE_RESOURCES from CLOUD where LOCATION in (APPLICATION SPECIFIC RESTRICTIONS) in her Cloud Needs Context-Aware Provisioning article of 6/30/2010 for F5’s DevCentral blog:

The awareness of the importance of context in application delivery and especially in the “new network” is increasing, and that’s a good thing. It’s a necessary evolution in networking as both users and applications become increasingly mobile. But what might not be evident is the need for more awareness of context during the provisioning, i.e. deployment, process.

A desire to shift the burden of management of infrastructure does not mean a desire for ignorance of that infrastructure, nor does it imply acquiescence to a complete lack of control. But today that’s partially what one can expect from cloud computing. While the fear of applications being deployed on “any old piece of hardware anywhere in the known universe” is not entirely a reality, the possibility of having no control over where an application instance might be launched – and thus where corporate data might reside - is one that may prevent some industries and individual organizations from choosing to leverage public cloud computing.

This is another one of those “risks” that tips the scales of risk versus benefit to the “too risky” side primarily because there are legal implications to doing so that make organizations nervous.

The legal ramifications of deploying applications – and their data – in random geographic locations around the world differ based on what entity has jurisdiction over the application owner. Or does it? That’s one of the questions that remains to be answered to the satisfaction of many and which, in many cases, has led to a decision to stay away from cloud computing.

According to the DPA, clouds located outside the European Union are per se unlawful, even if the EU Commission has issued an adequacy decision in favor of the foreign country in question (for example, Switzerland, Canada or Argentina).

-- German DPA Issues Legal Opinion on Cloud Computing

Back in January, Paul Miller published a piece on jurisdiction and cloud computing, exploring some of the similar legal juggernauts that exist with cloud computing:

While cloud advocates tend to present 'the cloud' as global, seamless and ubiquitous, the true picture is richer and complicated by laws and notions of territoriality developed long before the birth of today's global network. What issues are raised by today's legislative realities, and what are cloud providers — and their customers — doing in order to adapt?

CONTEXT-AWARE PROVISIONING

To date there are two primary uses for GeoLocation technology. The first is focused on performance, and uses the client location as the basis for determining which data center location is closest and thus, presumably, will provide the best performance. This is most often used as the basis for content delivery networks like Akamai and Amazon’s CloudFront. The second is to control access to applications or data based on the location from which a request comes. This is used, for example, to comply with U.S. export laws by preventing access to applications containing certain types of cryptography from being delivered to those specifically prohibited by law from obtaining such software.

There are additional uses, of course, but these are the primary ones today. A third use should be for purposes of constraining application provisioning based on specified parameters.

While James Urquhart touches on location as part of the criteria for automated acquisition of cloud computing services, what isn’t delved into is the enforcement of location-based restrictions during provisioning. The question is presented more as “do you support deployment in X location” rather than “can you restrict deployment to X location”. It is the latter piece of this equation that needs further exploration and experimentation specifically in the realm of devops and automated provisioning because it is this part of the deployment equation that will cause some industries to eschew the use of cloud computing.

Location should be incorporated into every aspect of the provisioning and deployment process. Not only should a piece of hardware – server or network infrastructure – be capable of describing itself in terms of resource capabilities (CPU, RAM, bandwidth) it should also be able to provide its physical location. Provisioning services should further be capable of not only including location restrictions as part of the policies governing the automated provisioning of applications, but enforcing them as well. …

Lori continues with a STANDARDS NEED LOCATION-AWARENESS section and concludes:

If not OCCI, then some other standard – de facto or agreed upon – needs to exist because one thing is certain: something needs to make that information available and some other thing needs to be able to enforce those policies. And that governance over deployment location must occur during the provisioning process, before an application is inadvertently deployed in a location not suited to the organization or application.
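Lori’s “select COMPUTE_RESOURCES from CLOUD where LOCATION in (...)” idea can be sketched as a filter plus an enforcement step that runs before anything is deployed. This is a minimal illustration, not OCCI or any provider’s API: the `ComputeResource`, `ProvisioningPolicy`, `select_resources` and `provision` names, the country codes and the sample inventory are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeResource:
    """Hypothetical resource that can describe its capabilities and its location."""
    resource_id: str
    cpu_cores: int
    ram_gb: int
    location: str  # self-reported physical location, here a country code


@dataclass(frozen=True)
class ProvisioningPolicy:
    """Hypothetical application-specific restrictions applied during provisioning."""
    min_cpu_cores: int
    min_ram_gb: int
    allowed_locations: frozenset


class LocationPolicyError(Exception):
    """Raised when no resource satisfies the policy, so nothing is deployed."""


def select_resources(cloud, policy):
    """Roughly: SELECT compute_resources FROM cloud WHERE location IN (allowed)."""
    return [
        r for r in cloud
        if r.location in policy.allowed_locations
        and r.cpu_cores >= policy.min_cpu_cores
        and r.ram_gb >= policy.min_ram_gb
    ]


def provision(app_name, cloud, policy):
    """Enforce the policy before deployment rather than checking afterwards."""
    candidates = select_resources(cloud, policy)
    if not candidates:
        raise LocationPolicyError(
            f"No resource for {app_name!r} in {sorted(policy.allowed_locations)}"
        )
    return candidates[0]


# Made-up inventory and policy for illustration.
cloud = [
    ComputeResource("node-1", cpu_cores=8, ram_gb=32, location="US"),
    ComputeResource("node-2", cpu_cores=4, ram_gb=16, location="DE"),
    ComputeResource("node-3", cpu_cores=2, ram_gb=4, location="FR"),
]
eu_only = ProvisioningPolicy(min_cpu_cores=4, min_ram_gb=8,
                             allowed_locations=frozenset({"DE", "FR"}))

chosen = provision("payroll", cloud, eu_only)
print(chosen.resource_id, chosen.location)
```

The point of the sketch is the failure mode: when no resource in an allowed location qualifies, provisioning raises an error instead of silently falling back to a location the organization has ruled out.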

<Return to section navigation list>

Cloud Computing Events

• Cumulux and MSDN Events will present An Hour In The Cloud With Windows Azure – Webcast, Event ID: 1032450925 on 7/2/2010 at 9:00 AM PDT:

Have you wondered how cloud computing can bring your ideas to the web faster? Reduce your IT costs? Or be able to respond quickly to changes in your business and customers’ needs? If so, then you need to find out what Azure is all about!

What is this Windows Azure training?

In this Windows Azure training session you will learn about Azure; how to develop and launch your own application as well as how it impacts YOUR daily life.

What does it cost me?

There is NO cost to participate in this training.

The Agenda:

- Overview of Cloud Computing

- Introduction to Windows Azure

- Tour of the Azure Portal

- Uploading your first Azure package

- Real world Scenario

- Experiencing your first cloud app & behind the scenes

- Q & A

How do I attend:

Find the day and time that works best for you, then click to register. Everything you need will be emailed prior to the start of the training.

“Find the day and time that works best for you,” as long as it’s Friday, July 2 at 9:00 AM PDT. (A search shows only one session.) Same principle as the color of Ford’s Model T automobiles.

• Ping Identity will sponsor the Cloud Identity Summit Conference to be held 7/20 through 7/22/2010 in Keystone, CO:

As Cloud computing matures, organizations are turning to well-known protocols and tools to retain control over data stored away from the watchful eyes of corporate IT. The Cloud Identity Summit is an environment for questioning, learning, and determining how identity can solve this critical security and integration challenge.

The Summit features thought leaders, visionaries, architects and business owners who will define a new world delivering security and access controls for cloud computing.

The conference offers discussion sessions, instructional workshops and hands-on demos in addition to nearly three days of debate on standards, trends, how-to’s and the role of identity to secure the cloud.

Topics

Understanding the Cloud Identity Ecosystem: The industry is abuzz with talk about cloud and cloud identity. Discover the definition of a secure cloud and learn the significance of terms such as identity providers, reputation providers, strong authentication, and policy and rights management mediators.

Recognizing Cloud Identity Use-Cases: The Summit will highlight how to secure enterprise SaaS deployments, and detail what is possible today and what’s needed for tomorrow. The requirements go beyond extending internal enterprise systems, such as directories. As the cloud takes hold, identity is a must-have.

Standardizing Private/Public Cloud Integration: The cloud is actually made up of a collection of smaller clouds, which means agreed upon standards are needed for integrating these environments. Find out what experts are recommending and how companies can align their strategies with the technologies.

Dissecting Cloud Identity Standards: Secure internet identity infrastructure requires standard protocols, interfaces and APIs. The summit will help make sense of the alphabet soup presented to end-users, including OpenID, SAML, SPML, XACML, OIDF, ICF, OIX, OSIS, OAuth IETF, OAuth WRAP, SSTC, WS-Federation, WS-SX (WS-Trust), IMI, Kantara, Concordia, Identity in the Clouds (new OASIS TC), Shibboleth, Cloud Security Alliance and TV Everywhere.

Who's in your Cloud and Why? Do you even know?: Privileged access controls take on new importance in the cloud because many security constructs used to protect today’s corporate networks don't apply. Compliance and auditing, however, are no less important. Learn how to document who is in your cloud, and who is managing it.

Planning for 3rd Party (Cloud-Based) Identity Services: What efficiencies in user administration will come from cloud-based identity and outsourced directory providers? How can you use these services to erase the access control burdens internal directories require for incorporating contractors, partners, customers, retirees or franchise employees? Can clouds really reduce user administration?

Preparing for Consumer Identity: OpenID and Information cards promise to give consumers one identity for use everywhere, but where are these technologies in the adoption life-cycle? How do they relate to what businesses are already doing with their partners, and what is required to create a dynamic trust fabric capable of making the two technologies a reality?

Making Cloud Identity Provisioning a Reality: The access systems used today to protect internal business applications take a backseat when SaaS is adopted. How do companies maintain security, control and automation when adopting SaaS? The Summit will answer questions around secure SaaS usage: Do privileged account controls extend to the cloud? Are corporate credentials accidentally being duplicated in the cloud? Does the enterprise even know about it?

Securing & Personalizing Mashups: Many SOA implementations are using WS-Trust, but in the cloud OAuth is becoming the standard of choice for lightweight authorization between applications. Learn about what that means for providing more personalized services and how it provides more security to service providers.

Enterprise Identity meets Consumer Identity: There are two overlapping sets of identity infrastructures being deployed that have many duplications, including Single Sign-On to web applications. How will these infrastructures align or will they? How will enterprises align security levels required for their B2B interactions with the convenience they have with their customer interactions?

Monitoring Cloud Activity: If you've invested in SIEM, you can see what’s happening behind your firewall, but can you see beyond into employee activity in the cloud? What is being done to align controls on activity beyond enterprise walls with established internal controls?

See the John Fontana wrote CIS series. John Shewchuk: A futuristic identity on 6/25/2010 for the Cloud Identity Summit Conference item in the AppFabric: Access Control and Service Bus section.

• UBM TechWeb reported Interop 2010 will be held in New York City on 10/18 through 10/22/2010 and Conference Program Announced — Register by 7/2 and Save 30% on 6/29/2010:

Conference: Cloud Computing Track

Track Chair: Alistair Croll, Principal Analyst, BitCurrent

Interop's Cloud Computing track brings together cloud providers, end users, and IT strategists. It covers the strategy of cloud implementation, from governance and interoperability to security and hybrid public/private cloud models. Sessions include candid one-on-one discussions with some of the cloud's leading thinkers, as well as case studies and vigorous discussions with those building and investing in the cloud. We take a pragmatic look at clouds today, and offer a tantalizing look at how on-demand computing will change not only enterprise IT, but also technology and society in general.

• Salvatore Genovese asserted “Cloud Benefits Clear Despite Debate Over Definitions Shows Network Instruments Onsite Survey” in his Cisco Live Snapshot: Cloud Computing Adoption Surges Ahead report of 6/30/2010:

According to a survey conducted during Cisco Live, 71 percent of organizations have implemented some form of cloud computing, despite an unclear understanding as to the actual definition of the technology. From the exhibition floor, Network Instruments polled 184 network engineers, managers, and directors and found:

Widespread Cloud Adoption: Of the 71 percent having adopted cloud computing solutions, half of these respondents deployed some form of private cloud. Forty-six percent implemented some form of Software as a Service (SaaS), such as SalesForce.com or Google Apps. Thirty-two percent utilize Infrastructure as a Service (IaaS), such as Amazon Elastic Compute Cloud. A smaller number (16 percent) rely on some form of Platform as a Service (PaaS), such as Microsoft Azure and SalesForce.com's Force.com.

Meaning of the Cloud Debatable: The term "cloud computing" meant different things to respondents. To the largest share of respondents, it meant any IT services accessed via public Internet (46 percent). For other respondents, the term referred to computer resources and storage that can be accessed on-demand (34 percent). A smaller number of respondents stated cloud computing pertained to the outsourcing of hosting and management of computing resources to third-party providers (30 percent).

Real Gains Realized: The survey asked those who had implemented cloud computing to discuss how performance had changed after implementation. Sixty-four percent reported that application availability improved. The second area of improvement reported was a reduction in the costs of managing IT infrastructure (48 percent).

Technology Trouble Spots: While several respondents indicated their organizations saw definite gains from the technology, others observed network performance stayed the same or declined. Sixty-five percent indicated that security of corporate data declined or remained the same, compared to 35 percent that saw security improvements. With regards to troubleshooting performance problems, 61 percent reported no change or faced increased difficulty in detecting and solving problems.

"With proper planning and tools to ensure visibility from the user to the cloud provider, Cisco Live attendees are successfully deploying cloud services," said Brad Reinboldt, product marketing manager at Network Instruments. "I was a bit surprised by the number of companies lacking tools to detect and troubleshoot cloud performance issues, as they risk running into significant problems that jeopardize any cost savings they may have initially gained."

The above seems to me to be based on a small and probably skewed sample population.

• Kevin Jackson reported NCOIC Plenary Highlights Collaboration and Interoperability on 6/29/2010:

Last week in Brussels, Belgium, the Network Centric Operations Industry Consortium highlighted its support of collaboration and interoperability through an information exchange session with the National Geospatial-Intelligence Agency (NGA) and an impressive lab interoperability demonstration.

As published in Defense News, the "lab interoperability framework" demonstration was designed to show how different players could share an operational picture of an area. The NCOIC pointed to the recent Haiti operation or the Gulf of Mexico oil spill as examples where different networks or countries could communicate using these standards. Customers could be NATO, the European Union, civilians, governments or industry.

The standards will be published free of charge for public use later this year after the NCOIC gives final approval. The NCOIC says that using its lab interoperability framework can save companies approximately $200,000 per event in labor costs, shorten execution timelines by 66 percent and reduce the risk of event failure.

In an effort to engage and collaborate with industry, the NGA briefed attendees on the Geospatial Community Cloud Project. While this effort is not an acquisition, it is intended to be an opportunity to discuss the status of geospatial intelligence (GEOINT) sharing across the community and to explore the application of cloud computing to this important requirement.

For next steps, the agency charged NCOIC members to provide a plan of actions and milestones for possible inclusion of NGA systems on the NCOIC lab interoperability test bed. Recommendations are scheduled to be presented at the next NCOIC plenary, September 10, 2010 in Falls Church, Virginia. The entire NGA brief is provided below.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Bill McColl asked “Who Is Going To Build The Low-Latency Cloud for Enterprise Customers?” in a deck for his Cloud Computing: The Need For Speed post of 6/30/2010:

The spectacular predictions for cloud revenue growth over the coming decade depend critically on the success of cloud providers in persuading major enterprise customers to deploy many of their mission-critical systems in the cloud rather than in-house. Today's cloud users are overwhelmingly web companies, for whom the low cost of cloud computing, together with simple elastic scaling are the critical factors. But major enterprises are different. In looking at the cloud option, their first major concern is security. This is well documented, has been discussed to death in cloud blogs and conferences, and is certainly a real problem. However, I think we can be very confident that the cloud vendors will be able to quickly overcome the concerns in this area.

The reality, although not currently the perception, is that many/most in-house systems are no more secure than cloud systems. The perception of cloud security will change quickly over the next couple of years, as more cloud services are deployed. There are certainly no significant technical impediments here. Many of the security technologies used to protect in-house datacenters can be adapted to secure cloud services.

A second major concern for major enterprises is reliability, but here again we can be confident that the perception on this issue will rapidly change, as cloud vendors continue to improve the reliability of their datacenter and network architectures. Again, no major technical impediments here.

In comparison to security and reliability, the third major concern around enterprise cloud computing has received almost no attention so far. That concern is speed, or rather the lack of it in the cloud. In short, the cloud is slooooooooowww, and as multicore moves to manycore, as the cost of super-fast SSD storage solutions plummets, the difference in speed between cloud computing and in-house systems will widen dramatically unless there is a serious shift in the kinds of cloud services on offer from vendors to high end users.

Consider the most basic of all enterprise services, online transaction processing (OLTP). This is at the heart of all ecommerce and banking. Modern OLTP requires that web transactions and business transactions are processed in a fraction of a second, and that the OLTP system has the scalability and performance to handle even the heaviest loads with this very low latency. But it's even more challenging than that. OLTP systems need to be tightly coupled to complex realtime fraud detection systems that operate with the same very low latency. Can anyone see VISA or American Express moving to the cloud as we know it today?

Amazon Web Services is the main cloud provider today. At Cloudscale we are significant and enthusiastic users of the numerous AWS cloud services. The Amazon team have built an architecture that is very low cost and ideally suited to supporting web companies where the transactions involved are mainly serving up data rather than performing complex analysis. For these simple types of website data delivery, storage services such as S3 offer acceptable performance. But what about modern enterprise apps?

The next generation of enterprise apps will revolve around two major themes: big data and realtime. Even the most traditional of enterprise software vendors, SAP, recognizes this. Hasso Plattner, the company's founder, has been saying for the past couple of years that the future of enterprise software will be based on in-memory database processing that can deliver realtime insight on huge volumes of data. …

Bill continues with the details of Cloudscale’s “new realtime data warehouse model that enables both realtime ETL and realtime analytics on big data.” He is the founder of Cloudscale, Inc.

• Geva Perry analyzed Salesforce.com: An Example of the Bottom-Up Model in this 6/30/2010 post to his Thinking Out Cloud blog:

In APIs and the Growing Influence of Developers I mentioned the bottom-up sales and adoption model. That post, naturally, was focused on developers, but I said that the model can apply equally to any type of "rank & file" users.

I was flipping through Salesforce.com CEO and founder Marc Benioff's book, Behind the Cloud, and came across the following passage, which is a good (and classic) example of how the model works well with products targeted at business users:

“[Sales executives] Jim and David had been with us for only a week or two when an incredible opportunity unfolded at SunGard, a NYSE Fortune 1000 company. Cris Conde, who had just been promoted to CEO, was looking for a way to integrate the data systems giant's eighty different business units and saw CRM as the "unifying glue." The possibility of our biggest customer to date, and the thousand-user deal David had guaranteed, was right in front of us. At the time, SunGard's various divisions were working on myriad systems, but Cris noted that salesforce.com was the only one that was spreading virally. "The sales people were buying it on their own credit cards and going around their managers to purchase an account," he said.

That ‘vote’ from the users was significant to Cris, and meeting with him to discuss our service's capabilities not only yielded our biggest customer at the time but also helped build a blueprint for selling to all enterprise companies.”

Salesforce.com, as you may well know, went on to become the world's first $1 billion pure-play Cloud/SaaS company.

<Return to section navigation list>