Windows Azure and Cloud Computing Posts for 4/19/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated for the January 4, 2010 commercial release in April 2010.

Azure Blob, Drive, Table and Queue Services

Maarten Balliauw released PhpAzureExtensions (Azure Drives) - 0.2.0 to CodePlex on 2/19/2010:

Release Notes

Extension for use with Windows Azure SDK 1.1!

Breaking changes! Documentation can be found at http://phpazurecontrib.codeplex.com/wikipage?title=AzureDriveModule.

Steve Marx’s Put Blob Without Overwrite post of 4/20/2010 explains:

A question came up recently of how to store a Windows Azure blob without overwriting the blob if it already exists. This quick post will show you how to do this using the .NET StorageClient library.

To figure out how to do this, I looked at the “Specifying Conditional Headers for Blob Service Operations” MSDN topic, which says the following:

If-None-Match An ETag value, or the wildcard character (*). Specify this header to perform the operation only if the resource's ETag does not match the value specified. Specify the wildcard character (*) to perform the operation only if the resource does not exist, and fail the operation if it does exist.

That means all we need to do to keep our Put Blob request from overwriting an existing file is to add the If-None-Match: * header to our request! Here’s how to upload text to a blob without overwriting the existing contents:

try
{
    blob.UploadText("Hello, World!", Encoding.ASCII,
        new BlobRequestOptions() { AccessCondition = AccessCondition.IfNoneMatch("*") });
    Console.WriteLine("Wrote to blob.");
}
catch (StorageClientException e)
{
    if (e.ErrorCode == StorageErrorCode.BlobAlreadyExists)
    {
        Console.WriteLine("Blob already exists.");
    }
    else
    {
        throw;
    }
}
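To make the wildcard rule in the MSDN excerpt concrete, here is a minimal Python sketch. It evaluates an If-None-Match header against a blob's current ETag and applies the result to a toy in-memory store. The store, its ETag format and the WriteConditionFailed exception are hypothetical illustrations, not the Blob service or StorageClient API.

```python
from typing import Dict, Optional, Tuple


def if_none_match_allows(current_etag: Optional[str], header: str) -> bool:
    """Decide whether a write may proceed under an If-None-Match header.

    current_etag is None when the blob does not exist yet.
    """
    values = [v.strip() for v in header.split(",")]
    if "*" in values:
        # Wildcard: proceed only if the resource does not exist.
        return current_etag is None
    # Otherwise proceed only if the current ETag matches none of the listed values.
    return current_etag not in values


class WriteConditionFailed(Exception):
    """Hypothetical error raised when the If-None-Match condition is not met."""


class InMemoryBlobStore:
    """Toy container that mimics conditional Put Blob semantics (illustration only)."""

    def __init__(self) -> None:
        self._blobs: Dict[str, Tuple[str, bytes]] = {}  # name -> (etag, data)
        self._version = 0

    def put(self, name: str, data: bytes, if_none_match: Optional[str] = None) -> str:
        current = self._blobs.get(name)
        current_etag = current[0] if current else None
        if if_none_match is not None and not if_none_match_allows(current_etag, if_none_match):
            raise WriteConditionFailed(name)
        self._version += 1
        etag = f'"0x{self._version:X}"'  # a new ETag on every successful write
        self._blobs[name] = (etag, data)
        return etag

    def get(self, name: str) -> bytes:
        return self._blobs[name][1]


store = InMemoryBlobStore()
store.put("greeting.txt", b"Hello, World!", if_none_match="*")  # first write succeeds
try:
    store.put("greeting.txt", b"Goodbye!", if_none_match="*")  # blob exists: rejected
except WriteConditionFailed:
    print("Blob already exists.")
```

The sketch treats "no ETag yet" as "matches nothing," so a specific ETag in the header never blocks the creation of a new blob. Only the wildcard does.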

Luiz Fernando Santos’ SqlClient Default Protocol Order post of 4/18/2010 recommends checking your servers’ SqlClient protocols:

A while ago, someone came to me with a very interesting problem: basically, his .NET application was taking more than a minute to open a connection. After a quick look and some investigation, we found out that only Named Pipes was enabled on this individual’s SQL Server. Since this is a legitimate configuration, I decided to document this behavior in this post so that you can quickly find an answer if you face this problem.

As you know, SqlClient implements native access to SQL Server on top of SQL’s protocol layer. To establish communication between client and server both need to use the same protocol.

By default, SqlClient attempts to make the connection using the following protocol order[1]:

- Shared Memory

- TCP/IP

- Named Pipes

Starting from the first one, it moves to the next, failure after failure until reaching a valid one. So, if only Named Pipes is enabled on the server, the client goes through a failed Shared Memory and a failed TCP/IP (with their timeouts) before reaching the right one.

Now, to minimize the performance impact, SqlClient detects whether the server name refers to a remote host. If so, it bypasses Shared Memory and goes directly to the next option. Also, if SSRP is required (when you need to connect to a non-default instance, for example), SqlClient queries the SQL Server Browser to gather the appropriate protocol list from the target server.
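The fallback described above explains the minute-long connection: every protocol that fails costs a timeout before the next one is tried. The following Python sketch simulates only that ordering. The timeout value is a hypothetical parameter, and the SSRP/SQL Server Browser path is deliberately not modeled.

```python
from typing import List, Optional, Sequence, Set, Tuple

DEFAULT_ORDER = ("Shared Memory", "TCP/IP", "Named Pipes")


def try_protocols(enabled: Set[str], remote: bool, timeout_s: float,
                  order: Sequence[str] = DEFAULT_ORDER) -> Tuple[Optional[str], float, List[str]]:
    """Simulate trying each protocol in order until one is enabled on the server.

    Returns (protocol used or None, seconds spent on failed attempts, protocols tried).
    Each failed attempt is assumed to cost one full timeout.
    """
    waited = 0.0
    tried: List[str] = []
    for proto in order:
        if remote and proto == "Shared Memory":
            # Shared Memory only works locally, so it is skipped for remote hosts.
            continue
        tried.append(proto)
        if proto in enabled:
            return proto, waited, tried
        waited += timeout_s
    return None, waited, tried
```

With only Named Pipes enabled on a local server, the sketch pays two timeouts before it succeeds. For a remote host it pays one, because Shared Memory is skipped.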

Now, when connecting to the default instance, you can override this default behavior to avoid any timeouts when opening your Named Pipes connection. In the connection string, you can specify the protocol you wish to use, as in the sample below:

Data Source=MyDatabaseServer; Integrated Security=SSPI; Network Library=dbnmpntw

Or you can simply prefix the server name with np: to tell SqlClient to establish a Named Pipes connection:

Data Source=np:MyDatabaseServer; Integrated Security=SSPI

In conclusion, it’s good practice to understand which protocols your DBA has enabled on the server. If they don’t plan to use TCP/IP, make sure you specify the protocol explicitly in your connection string.
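Both connection strings above pin the protocol: one uses the Network Library keyword and the other uses a Data Source prefix. The following Python sketch reports which protocol, if any, a connection string pins. The mapping covers the commonly documented values (dbmslpcn, dbmssocn and dbnmpntw; lpc:, tcp: and np:). The parser is deliberately naive: it handles no quoting and no keyword synonyms. Checking the prefix before Network Library is an assumption of this sketch, not a statement about SqlClient's actual precedence.

```python
from typing import Dict, Optional

NETWORK_LIBRARIES = {
    "dbmslpcn": "Shared Memory",
    "dbmssocn": "TCP/IP",
    "dbnmpntw": "Named Pipes",
}
PREFIXES = {"lpc": "Shared Memory", "tcp": "TCP/IP", "np": "Named Pipes"}


def parse_connection_string(cs: str) -> Dict[str, str]:
    """Split 'key=value; key=value' into a dict with lower-cased keys (no quoting support)."""
    parts: Dict[str, str] = {}
    for item in cs.split(";"):
        if "=" in item:
            key, value = item.split("=", 1)
            parts[key.strip().lower()] = value.strip()
    return parts


def forced_protocol(cs: str) -> Optional[str]:
    """Return the protocol pinned by the connection string, or None if SqlClient chooses."""
    parts = parse_connection_string(cs)
    data_source = parts.get("data source", "")
    if ":" in data_source:
        # Assumption for this sketch: a Data Source prefix is checked first.
        prefix = data_source.split(":", 1)[0].strip().lower()
        if prefix in PREFIXES:
            return PREFIXES[prefix]
    library = parts.get("network library", "").lower()
    return NETWORK_LIBRARIES.get(library)
```

A string with neither setting returns None. That is the case in which the default Shared Memory, TCP/IP, Named Pipes order applies.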

Eugenio Pace posted Windows Azure Guidance (WAG) Code Drop 3 to CodePlex on 3/31/2010. Here are the Release Notes:

Second iteration of a-Expense on Azure.

This release builds on the previous one and mainly focuses on replacing SQL Azure with Table Storage. We have slightly enhanced the data model to show some other interesting features (transactions and lack of them). Highlights of this release are:

- Use of Table Storage as the backend store for application entities (e.g. expense reports and line items).

- New model for the "Expense" entity: there's now a "header" and "line items". We did this to show two dependent entities and the implications on the storage.

- Some bug fixes and little usability enhancements.

- Automated deployment.
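The release notes mention transactions, and their absence, in connection with a header plus dependent line items. In Table Storage, an entity group transaction can only span entities in the same table and the same partition, with at most 100 entities. The key design therefore decides whether a header and its line items can be saved atomically. The Python sketch below checks that constraint. Its key scheme (the expense id as PartitionKey) is hypothetical and not necessarily what a-Expense does, and the batch payload size limit is not modeled.

```python
from dataclasses import dataclass
from typing import List

MAX_BATCH_ENTITIES = 100  # entity group transaction limit


@dataclass(frozen=True)
class Entity:
    table: str
    partition_key: str
    row_key: str


def can_batch(entities: List[Entity]) -> bool:
    """True if the entities could go into one entity group transaction:
    same table, same PartitionKey, unique RowKeys and no more than 100 entities."""
    if not entities or len(entities) > MAX_BATCH_ENTITIES:
        return False
    first = entities[0]
    same_group = all(e.table == first.table and e.partition_key == first.partition_key
                     for e in entities)
    unique_rows = len({e.row_key for e in entities}) == len(entities)
    return same_group and unique_rows


def expense_entities(table: str, expense_id: str, line_count: int) -> List[Entity]:
    """Hypothetical key scheme: the header and its line items share the expense id
    as PartitionKey, so they can be saved together atomically."""
    header = Entity(table, expense_id, "header")
    items = [Entity(table, expense_id, f"item-{i:03d}") for i in range(line_count)]
    return [header] + items
```

If the header and line items live in different tables or partitions, can_batch returns False. In that case the application has to cope without a transaction, for example by writing the line items first and the header last.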

Make sure you read the "readme" file included in the release.

I retroactively posted this sample application because it had only 156 downloads since 3/31/2010, so I assumed that it hadn’t been advertised widely.


SQL Azure Database, Codename “Dallas” and OData

Jack Greenfield offers Silverlight Samples for OData Over SQL Azure in this 4/20/2010 post:

I've just published some code samples that show how to build a Silverlight client for the OData Service for SQL Azure, and how to build a service that validates Simple Web Tokens (SWTs) issued by AppFabric Access Control. For more information about the client, see my earlier post about Silverlight Clients and AppFabric Access Control. To implement identity federation scenarios for the OData Service, see my earlier post and code sample that show how to use identity federation with Codename "Dallas".
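For readers new to SWTs: a token of this kind is a form-encoded list of claims. Its last pair, HMACSHA256, carries a base64 HMAC-SHA256 signature that is computed with a shared key over everything before it. The following Python sketch validates such a token under that assumption. The issuer, audience and key values used with it are placeholders, and this is not Jack Greenfield's sample code.

```python
import base64
import hashlib
import hmac
import time
from typing import Dict, Optional
from urllib.parse import parse_qsl, quote, unquote

SIG_PARAM = "&HMACSHA256="


def _hmac(unsigned: str, key: bytes) -> bytes:
    return hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()


def sign_swt(unsigned: str, key: bytes) -> str:
    """Append an HMACSHA256 signature (base64, then URL-encoded) to an unsigned token."""
    sig = base64.b64encode(_hmac(unsigned, key)).decode("ascii")
    return unsigned + SIG_PARAM + quote(sig, safe="")


def validate_swt(token: str, key: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> Dict[str, str]:
    """Return the claims if the signature, issuer, audience and expiry all check out."""
    if SIG_PARAM not in token:
        raise ValueError("token is not signed")
    unsigned, encoded_sig = token.rsplit(SIG_PARAM, 1)
    received = base64.b64decode(unquote(encoded_sig))
    # Constant-time comparison against the HMAC of everything before the signature.
    if not hmac.compare_digest(received, _hmac(unsigned, key)):
        raise ValueError("bad signature")
    claims = dict(parse_qsl(unsigned))
    if claims.get("Issuer") != issuer or claims.get("Audience") != audience:
        raise ValueError("unexpected issuer or audience")
    now = time.time() if now is None else now
    if float(claims.get("ExpiresOn", "0")) <= now:
        raise ValueError("token expired")
    return claims
```

The sketch compares decoded signature bytes rather than encoded strings. That way, differences in URL-encoding case (%2b versus %2B) do not cause false rejections.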

James Governor quotes The Guardian: NoSQL EU. Don’t Melt The Database in this 4/20/2010 post from the NoSQL EU conference:

What follows is something like a live blog, based on comments from Matthew Wall and Simon Willison of The Guardian at the NoSQL EU conference in London today.

Wall kicked off the talk with a question about NoSQL: is it a good name for the phenomenon? He says not really, pointing out the absurdity of calling SQLite and MySQL “old world databases” as opposed to “new world” key value stores. [This point resonates strongly with RedMonk thinking. Both Stephen and I have been wary of reductionist approaches to defining NoSQL; we feel Hadoop-style Big Data, for example, should be thought of as a related trend.]

Where is The Guardian today? It’s a modern, information-driven web site driven by tags and feeds.

“It’s a traditional three-tier web app, with a large Oracle database at the center of the world. People might have thought we’re cooler than that, but we’re not.”

The Guardian took the decision to stick with traditional relational model 5 years ago. The kind of tools we’re beginning to use weren’t as mature back then.

A key reason for sticking with Oracle was the maturity of the surrounding tools ecosystem (performance management and optimisation, backup) and available skills.

SQL has worked well for the paper. “SQL is great. we can do cool stuff with that. at scale.” …


AppFabric: Access Control and Service Bus

See Jack Greenfield’s Silverlight Samples for OData Over SQL Azure post in the SQL Azure Database, Codename “Dallas” and OData section.

See the item about Wade Wegner’s Using the Azure AppFabric with Surgical Skill presentation at the Chicago Code Camp 2010 in the Cloud Computing Events section.

Jack Greenfield’s Silverlight Clients and AppFabric Access Control post of 4/19/2010 begins:

As described in my previous post, I've just finished building some Silverlight clients and a portal for the new OData service for SQL Azure using AppFabric Access Control (ACS). In the process, I ran into a few issues worth documenting.

Hello, World!

Before we get to harder problems, let’s make sure we have the basics right.

If you’re doing Silverlight client development, then you probably already know that any service accessed by a Silverlight client must either be hosted from the same Internet zone, domain and scheme as the client, or have a cross domain security policy file in place. What you may not know is that the AppFabric Team recently released a version of ACS to AppFabric Labs with a cross domain security policy file, allowing access from any domain over either http or https to any path below the base URI of any STS endpoint. You should be able to obtain and inspect the file using your web browser.

You probably also know that you need to specify client HTTP handling for REST endpoints like ACS. What you may not know is that for cross domain access to data using WCF Data Services (formerly ADO.NET Data Services), you’ll either need to move to Silverlight 4, or use ADO.NET Data Services for Silverlight 3 Update CTP3 (thanks to Mike Flasko and Pablo Castro for that pointer). …


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Bruce Kyle interviews Bob Janssen in a brief (00:02:52) RES Software Stores Desktop Profiles on SQL Azure Channel9 video segment:

RES Software uses SQL Azure to offer cloud storage for user desktop configurations. The company also offers on premises storage options.

In this video ISV Architect Evangelist Bruce Kyle talks with CTO Bob Janssen about how and why the company offers both SQL Azure and SQL Server storage options for its customers.

RES Software is the proven leader in user workspace management. They are driving a transformation in the way today’s organizations manage and reduce the cost of their PC populations. Designed for physical or virtual desktop platforms, RES Software enables IT professionals to centrally manage, automate and deliver secure, personalized and more productive desktop experiences for any user.

For more information about how RES Software users access their desktop information in the cloud, see RG021 – How to create a MS SQL Azure Database on their company blog.

Steve Marx is on a roll today (4/20/2010) with Changing Advanced FastCGI Settings for PHP Applications in Windows Azure:

One of the things you can do with the Hosted Web Core Worker Role I blogged about earlier is to specify the full range of FastCGI settings, including those recommended by the IIS team. This goes beyond the settings that are allowed in web.roleConfig.

I’ve added a download to the Hosted Web Core Worker Role code over on Code Gallery that adds the following FastCGI settings to applicationHost.config:

<fastCgi>
  <application fullPath="{approot}\php\php-cgi.exe" maxInstances="4" instanceMaxRequests="10000" requestTimeout="180" activityTimeout="180">
    <environmentVariables>
      <environmentVariable name="PHP_FCGI_MAX_REQUESTS" value="10000" />
    </environmentVariables>
  </application>
</fastCgi>

The PHP project also includes a sample web site with a web.config that configures index.php as a default document and registers the PHP FastCGI handler for files with the .php extension. To use the PHP project, you’ll need to download the latest binaries from php.net and add them under the php folder. Make sure there’s a php.ini file in the root of that folder.

To learn more about running PHP in Windows Azure, read “Using 3rd Party Programming Languages via FastCGI” from the Windows Azure blog.
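The settings above keep PHP_FCGI_MAX_REQUESTS equal to instanceMaxRequests. This follows the IIS team’s guidance that PHP’s own recycle limit should not exceed the FastCGI one. A small Python sketch like the following could check a fragment before deployment. It is an illustration, not part of Steve’s download.

```python
import xml.etree.ElementTree as ET
from typing import List


def check_fastcgi(xml_text: str) -> List[str]:
    """Return warnings for each <application> in a <fastCgi> fragment."""
    warnings: List[str] = []
    root = ET.fromstring(xml_text)
    for app in root.findall("application"):
        path = app.get("fullPath", "?")
        env = {e.get("name"): e.get("value")
               for e in app.findall("environmentVariables/environmentVariable")}
        php_max = env.get("PHP_FCGI_MAX_REQUESTS")
        iis_max = app.get("instanceMaxRequests")
        if php_max is None:
            warnings.append(f"{path}: PHP_FCGI_MAX_REQUESTS is not set")
        elif iis_max is not None and int(php_max) > int(iis_max):
            # PHP would keep a process alive longer than IIS expects to recycle it.
            warnings.append(f"{path}: PHP_FCGI_MAX_REQUESTS ({php_max}) exceeds "
                            f"instanceMaxRequests ({iis_max})")
    return warnings
```

Run against the fragment shown above, the check returns an empty list.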

Steve Marx explains how to Build Your Own Web Role: Running Hosted Web Core in Windows Azure in this 4/20/2010 post:

In this post, I’ll show how you can run your own instance of Hosted Web Core (HWC) in Windows Azure. This will give you the basic functionality of a web role, but with the flexibility to tweak advanced IIS settings, run multiple applications and virtual directories, serve web content off of a Windows Azure Drive, and more.

If you want to try this out yourself, you can download the full Visual Studio 2010 solution from Code Gallery.

The code I share in this blog post will work today, but I should point out that Windows Azure will provide the full power of IIS in the future, without the need for you to manage your own Hosted Web Core process.

I should also point out that by running HWC yourself, you’re taking responsibility for a lot of things that are completely abstracted and automated away in the existing Windows Azure web role. Use this code only if you’re comfortable managing your own IIS configuration files and debugging HWC errors.

What is Hosted Web Core?

IIS Hosted Web Core is what Windows Azure uses to power web roles. It runs web applications using the same pipeline as IIS, but with a few restrictions. Specifically, Hosted Web Core can only run a single application pool and a single process. It also does not include the Windows Process Activation Service (WAS). If you’re interested in learning more about using Hosted Web Core, I recommend blog posts by CarlosAg and Kanwaljeet Singla, in addition to the MSDN documentation.

Steve continues with the implementation details.

David Aiken recommends writing Azure code to handle failure in his Design to fail post of 4/20/2010:

I was chatting to a customer earlier about a solution they had built for Azure. They had implemented a thingythangy that stored a few hundred requests in memory before dumping them into a blob. My immediate reaction was – “What happens when your role gets recycled? Do you lose the cached requests?”

Windows Azure does not have an SLA for restarting your service, but if it did it would be 100%. Restarting is just a reality. Hardware fails, OS’s get patched, etc. At some point you will be restarted, maybe with a warning, but maybe not.

This, by the way, is no different from when you run your service on the server under your desk. At some point it will get restarted, lose power or suffer some other such calamity.

One thing you need to think about when writing good code for the cloud is how to deal with this. …

David continues with two options “Ignore it and carry on” and “Write code to handle failure.”
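The second option can be sketched for the in-memory batching case David describes. Each request is recorded in durable storage before it is buffered, and the buffer is rebuilt from that record after a recycle. In this minimal Python sketch, DurableLog and RequestBatcher are hypothetical stand-ins (DurableLog for something like a queue or table) and not David’s code.

```python
from typing import List


class DurableLog:
    """Stand-in for durable storage such as a queue or table; survives recycles."""

    def __init__(self) -> None:
        self.items: List[str] = []


class RequestBatcher:
    """Buffers requests in memory but records each one durably first,
    so a recycle between flushes loses nothing."""

    def __init__(self, log: DurableLog, blob_sink: List[List[str]], batch_size: int) -> None:
        self.log = log
        self.blob_sink = blob_sink
        self.batch_size = batch_size
        # On (re)start, rebuild the buffer from whatever was not yet flushed.
        self.buffer: List[str] = list(log.items)

    def accept(self, request: str) -> None:
        self.log.items.append(request)  # durable write first...
        self.buffer.append(request)     # ...then the in-memory copy
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.blob_sink.append(list(self.buffer))  # "dump into a blob"
            self.log.items.clear()  # only after the blob write has completed
            self.buffer.clear()
```

A crash between the blob write and the log cleanup would write the same batch twice. This design is therefore at-least-once, and whatever reads the blobs should tolerate duplicates.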

Eric Nelson issues a Call for authors for new eBook on the Windows Azure Platform in this 4/20/2010 post:

I intend to pull together a FREE eBook on the Windows Azure Platform – but I need your help to make it rock!

If you have detailed experience of any aspect of the Windows Azure Platform and can spare a few hours of time to turn that into a short article (400 to 800 words) then please get in touch. This is not a big commitment but my suspicion is the end result will make for a cracking good read.

I am hoping for a mix – everything from lessons learnt from early adopters to introductions to elements of the platform to getting technologies such as Ruby up and running on Azure. 10 to 20 articles sounds about right – which means I am after 10 to 20 authors :)

All I need from you right now is:

- One or two suggestions of topics you would like to cover

- A pointer to any example of your previous work – which could be as simple as a blog post or a work document.

For simplicity, just drop me an email direct to eric.nelson A@T microsoft.com. …

The provisional dates are:

- Confirm authors and topics by 3rd May

- Get first draft from all authors by 10th May

- Complete reviews by 17th May

- Final versions by 24th May

- Published by 31st May

A new definition of “charity competition.”

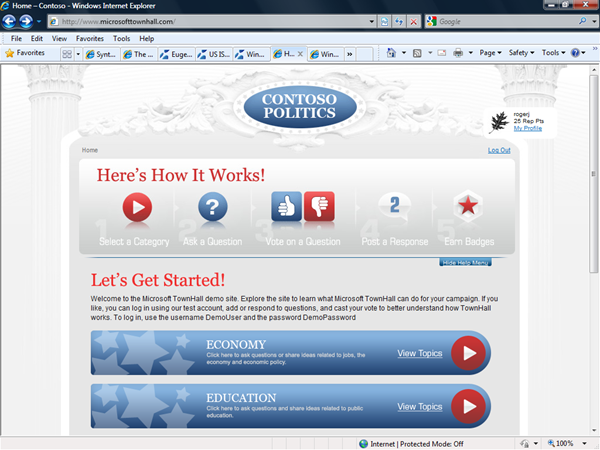

The Windows Azure Team’s Introducing Microsoft TownHall, a New Windows Azure-hosted Engagement Platform post of 4/19/2010 describes a new political crowdsourcing application:

Today at the Politics Online Conference in Washington, D.C. we launched TownHall, a new Windows Azure-hosted engagement platform that fosters, moderates and houses conversations between groups. As you may have seen on today's Microsoft on the Issues blog, TownHall is an ideal tool for politicians and other public officials (among others) who want to host online social experiences that drive richer discussion around top interests and concerns with the American public.

Think of TownHall as software that allows you to easily create a destination for folks to voice opinions, identify problems, offer solutions and come together around common interests and concerns. It is also a place to ask questions, where the most popular or relevant questions bubble to the top, so all can quickly see timely topics at any given moment. It does this by letting the community decide what's relevant, allowing folks to ‘vote up’ questions that they would like to have answered or ‘vote down’ questions that don't interest them. Check out this site to see TownHall in action.
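The announcement does not say how TownHall ranks questions. As a purely hypothetical illustration of “letting the community decide,” here is a minimal Python sketch that orders questions by net votes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Question:
    text: str
    up: int = 0
    down: int = 0

    @property
    def score(self) -> int:
        """Net votes: up-votes minus down-votes."""
        return self.up - self.down


def top_questions(questions: List[Question], limit: int) -> List[Question]:
    """Highest net score first; ties keep their original (submission) order,
    because Python's sort is stable even with reverse=True."""
    return sorted(questions, key=lambda q: q.score, reverse=True)[:limit]
```

A production system would likely also weigh recency so that new questions can surface. This sketch ignores time entirely.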

One of the challenges (and opportunities) technology companies face today is delivering compelling, rich and interactive experiences across a number of devices. The cloud provides an opportunity to do just that. With TownHall, we've established a REST API in the cloud that enables us to deliver clients on a number of platforms and to do so quickly. Today, we've released source code you can use to create a great Web experience, and over the coming months, we'll release TownHall clients that run natively on the iPhone, the iPad, Google Android and Windows Phone 7 devices. We will also release a Facebook client that allows you to extend TownHall into your Facebook fan page as an embeddable widget, and for enterprise and B2B communities using TownHall, we'll release a SharePoint Web Part.

TownHall is designed to help you gain insight into your community. It's optimized to collect data from each engagement, providing insight you can use for analytics and to identify opportunities. It's also integrated with Facebook Connect, which reduces friction for your community to sign-up and provides you the opportunity to integrate social graph and demographic information into your data. All the data is stored in SQL Azure, which makes it easy to incorporate into existing systems or use with familiar tools like Microsoft Excel and Microsoft Access. In TownHall, we recognize that your data is valuable and that you own it. We see our role as empowering you with software, not owning and mining your data.

Because TownHall is hosted on Windows Azure, we do the heavy lifting for you. Windows Azure provides a scalable environment which makes TownHall ideal for people who don't want to manage bulky technical infrastructure. It's available using a pay-only-for-usage model, so customers don't have to pay for unnecessary hardware and bandwidth that might sit idle or worry about handling a crush of traffic around a spike in activity.

Licensing TownHall is free from Microsoft; you only pay to host TownHall on Windows Azure. With the cost benefits of the cloud, you could engage with hundreds of thousands of citizens for less than a penny each. …

Bruce Kyle describes Getting Started with SQL Azure Data Sync with Glenn Berry’s guide in this 4/20/2010 post to the US ISV Developer Community blog:

MVP Glenn Berry has put together a guide to getting started with SQL Azure Data Sync. SQL Azure Data Sync is a free tool that allows you to easily create a SQL Azure database, and then synchronize the data periodically (on a table by table basis) with an on-premises SQL Server database.

The post, Playing With SQL Azure Data Synch, provides screenshots and the steps you can take to synchronize your data with SQL Azure.

The SQL Azure Data Sync Developer Quick Start page has instructions and links to the Microsoft Sync Framework 2.0 Software Development Kit (SDK) and the Microsoft Sync Framework Power Pack for SQL Azure November CTP (32-bit), which are needed to make this tool work. Even if you are using a 64-bit version of Windows, you should install the x86 version of the Power Pack. It also gives you a Visual Studio 2008 template that allows you to take an existing SQL Azure database offline; this new template simplifies the task of creating an offline data cache within SQL Compact.

After you have installed the Sync Framework SDK and Power Pack, you can run the wizard to easily create a SQL Azure database and synchronize your on-premises data with SQL Azure. SQL Server Agent must be available and running on your local instance of SQL Server.

Berry’s blog post walks you through the steps. See Playing With SQL Azure Data Synch.

About SQL Azure Data Sync

Microsoft SQL Azure Data Sync provides symmetry between SQL Azure and SQL Server through bi-directional data synchronization. Using SQL Azure Data Sync organizations can leverage the power of SQL Azure and Microsoft Sync Framework to build business data hubs in the cloud allowing information to be easily shared with mobile users, business partners, remote offices and enterprise data sources all while taking advantage of new services in the cloud. This combination provides a bridge, allowing on-premises and off-premises applications to work together.

FullArmor announced in its PolicyPortal Delivers Cloud-based Compliance Monitoring, Management and Remediation for Endpoints press release of 4/20/2010 that the next version of PolicyPortal is based on Windows Azure:

FullArmor, a leader in enterprise policy management, today announced that it has released for community technology preview a beta of the next version of PolicyPortal based on Windows Azure, Microsoft’s platform for building and deploying cloud based applications. This solution enables organizations to monitor unmanaged endpoint devices for compliance with desired configuration baselines from Microsoft System Center Configuration Manager 2007 and automatically remediate unauthorized changes without administrator intervention. In addition, PolicyPortal extends Microsoft System Center Service Manager 2010 by generating incidents in the console when configuration drift occurs on disconnected and roaming endpoints.

“Disconnected, roaming endpoints are typically unmanaged, and most susceptible to drifting out of compliance with corporate and regulatory standards. The next release of PolicyPortal is designed to help customers manage compliance for all their endpoints in a centralized, consistent and robust fashion,” said Danny Kim, chief technology officer of FullArmor. “Delivered as a cloud service, PolicyPortal makes it easy to manage devices disconnected from the network, while the integration with Microsoft management tools enables IT departments to monitor, enforce and report on endpoint compliance from a single, familiar user interface.” …

David Aiken’s Scaling out Azure post of 4/19/2010 begins:

<Rant Warning/>

In previous blog posts, I’ve talked about some of the patterns you can use to build your apps for the cloud, including Task-Queue-Task and de-normalizing your data using that pattern. But now something on scaling out.

When you are building apps in the cloud, you have to remember you are running in a shared environment and have no control over the hardware.

Let’s think about that for a moment.

In Windows Azure and SQL Azure we run you on hardware. Information on roughly what to expect can be found here (scroll down and expand compute instances), but here is a table of the compute part of Windows Azure:

| Compute Instance Size | CPU | Memory | Instance Storage | I/O Performance |
|---|---|---|---|---|
| Small | 1.6 GHz | 1.75 GB | 225 GB | Moderate |
| Medium | 2 x 1.6 GHz | 3.5 GB | 490 GB | High |
| Large | 4 x 1.6 GHz | 7 GB | 1,000 GB | High |
| Extra large | 8 x 1.6 GHz | 14 GB | 2,040 GB | High |

So how fast is the memory? What kind of CPU caching do we have? How fast are the drives? What about the network?

For SQL Azure we don’t even tell you what it’s running on, although you can watch this to get a better idea of how “shared” you are.

The point I’m going to make is that when you control the hardware, you can figure out lots of things like the throughput of disk controllers, CPU & Memory and based on that knowledge create filegroups for databases that span multiple drives, install more cores, faster drives, more memory, faster networking – all to improve performance. You are scaling up.

In the cloud, things work differently – you have to scale out. You have lots of little machines doing little chunks of work. No more 32-way servers at your disposal to crank through that huge workload. Instead you need 32 x 1 way servers to crank through that workload. There are no file groups, no 15,000 rpm drives. Just lots of cheap little servers ready for you whenever you need them.

I get a lot of questions about the performance of this, that and the other and while I understand that information can be useful, I think they somewhat miss one of the points and the potential of using the cloud.

I don’t need 15,000 rpm drives and 8 cores to handle my anticipated peak workload. Instead I can have 20 servers working my data at peak times, and 5 servers the rest of the time. So stop thinking about how fast the memory is, and start figuring out how you can use as many servers as you need – when you need it.

Remember OUT not UP.
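David’s 20-servers-at-peak, 5-otherwise example can be turned into simple arithmetic. Windows Azure compute is billed per instance-hour, so instance-hours are a reasonable proxy for cost. In this Python sketch the 4-hour daily peak is an assumption chosen for illustration; it is not taken from the post.

```python
def instance_hours_per_day(peak_instances: int, base_instances: int, peak_hours: float) -> float:
    """Instance-hours consumed in one day when switching between two deployment sizes."""
    if not 0 <= peak_hours <= 24:
        raise ValueError("peak_hours must be between 0 and 24")
    return peak_instances * peak_hours + base_instances * (24 - peak_hours)


elastic = instance_hours_per_day(20, 5, peak_hours=4)       # 20*4 + 5*20 = 180
always_peak = instance_hours_per_day(20, 20, peak_hours=4)  # 20*24 = 480
print(elastic, always_peak, elastic / always_peak)
```

Under these assumptions the scaled-out deployment uses 37.5% of the instance-hours of one sized permanently for the peak.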

Michael Ren looks forward to SharePointAzure in his Sharepoint Online 2010 and Sharepoint Azure Platform post of 4/19/2010:

Windows Azure Platform is becoming one of the hottest topics among many new technologies: cloud computing really shows the IT world what it will look like in the near future, an IT revolution. Imagine that we will have most social and public-facing RESTful services and Web applications online, easy to build, access and consume, reliable, and rich in information. This is the next move for lots of the Web services and applications we are using now.

So what is the next move for Sharepoint in the near future, after the release of Sharepoint 2010 on May 12th? It’s all about the “blue sky” (there are lots of thoughts around search too: the way search affects how people work, which is worth discussing elsewhere), or we can call it SharepointAzure (matching the name with Windows Azure).

It is here, it is happening, it is the future ….

Many people would ask: how far do we need to go from the “Present” to the “Future”? Cloud computing sounds too good to be true; it is not a real thing in our real life: “It looks like a fancy technology that will never happen to me, it is another version of ‘Internet of Things’.”

But it is here already. Sharepoint Online was announced more than a year ago, and we already use the Azure technology in the real world in a decent product service. The current Sharepoint Online is still limited to WSS 3.0 functions, but after Microsoft started supporting the multi-tenant feature, some small and mid-sized companies started looking into it and some companies, like GlaxoSmithKline, started using it. These companies pretty much outsource their IT services to Microsoft. With Sharepoint’s multi-tenant version, Microsoft targets more, smaller customers, and since then it has been adding tons of customers in the multi-tenant space. In 2010, we’ll see more functionality added to the multi-tenant space. …

Eugenio Pace continues Windows Azure Guidance – Background Processing III (creating files for another system) with this 4/18/2010 post:

Last week Scott walked me through his current design for the “Integration Service” in our sample. Here’s a preview of this early thinking. As a reminder, our fictitious scenario has a process that runs every once in a while and generates flat files for some other system to process: it simply scans the database for “approved” expenses and then generates a flat file with information such as employee, amount to be reimbursed, cost center, etc. After that, it updates the state of the expense to “processing”.

Note: in the real world, there would be a symmetric process that would import the results of that external process. For example, there would be another file coming back with a status update for each expense. We are just skipping that part for now.
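To ground the failure questions that follow, here is a minimal Python sketch of the export step as described: select approved expenses, write a flat file, then mark the expenses “processing.” The class and function names, the CSV layout and the run_id/export_run bookkeeping are all hypothetical and are not the a-Expense sample’s design. The sketch shows one way to make a retried run reproduce the same file after a crash.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Expense:
    expense_id: str
    employee: str
    amount: float
    cost_center: str
    state: str = "approved"
    export_run: Optional[str] = None  # which run exported it (hypothetical field)


def export_approved(expenses: List[Expense], files: Dict[str, str], run_id: str) -> str:
    """Write approved expenses to a flat file, then mark them as processing.

    Retrying with the same run_id re-selects expenses already tagged with that run,
    so a crash part-way through the state updates still yields the same complete file.
    """
    batch = [e for e in expenses if e.state == "approved" or e.export_run == run_id]
    buf = io.StringIO()
    writer = csv.writer(buf)
    for e in batch:
        writer.writerow([e.employee, f"{e.amount:.2f}", e.cost_center, e.expense_id])
    name = f"export-{run_id}.csv"
    files[name] = buf.getvalue()  # 1. write (or overwrite) the file first
    for e in batch:
        e.state = "processing"    # 2. only then update each expense
        e.export_run = run_id
    return name
```

The sketch relies on the caller reusing the same run_id when it retries. A fresh run_id after a crash could export the same expense twice.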

Surprisingly, this very simple scenario raises quite a few interesting questions about how to design it:

- How to implement the service?

- How to schedule the work?

- How to handle exceptions? What happens if the process/machine/data center fails in the middle of the process?

- How do we recover from those failures?

- Where does the “flat file” go?

- How do we send the “file” back to the on-premises “other system”?

Note: as you should know by now, we pick our scenarios precisely because they are the perfect excuse to talk about these considerations. This is our “secret agenda” :-)

Eugenio continues with descriptions of three implementation options:

- No service

- A worker dedicated to generating these files

- Implement this as a “task” inside a Worker
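The third option, running the export as one “task” among several inside a worker, might look roughly like the following Python sketch. Worker and PeriodicTask are hypothetical names. The clock is passed in so that the scheduling logic can be tested, and a real worker would call tick in a loop with a short sleep.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PeriodicTask:
    name: str
    interval_s: float
    action: Callable[[], None]
    next_run: float = 0.0


class Worker:
    """One worker instance hosting several periodic tasks."""

    def __init__(self, tasks: List[PeriodicTask]) -> None:
        self.tasks = tasks

    def tick(self, now: float) -> List[str]:
        """Run every task that is due at time `now`; return the names that ran."""
        ran: List[str] = []
        for task in self.tasks:
            if now >= task.next_run:
                try:
                    task.action()
                except Exception:
                    # Keep the other tasks alive; a real worker would log this failure.
                    pass
                task.next_run = now + task.interval_s
                ran.append(task.name)
        return ran
```

Sharing one worker keeps costs down, but a long-running task delays the others. That trade-off is the one that separates this option from a dedicated worker.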

Cory Fowler’s Cloudy thoughts of Gaming in the Future post of 4/18/2010 begins:

With Microsoft’s release of Windows Azure and the move towards a future of cloud computing, I’ve been waiting to hear what is to be done in the gaming market, more specifically for community contributions using the XNA Framework. Up until XNA version 3.0, Microsoft’s plan was to keep community content as a closed application that wouldn’t be able to communicate with the outside world. XNA 3.0 delivered Xbox Live integration into Community Games, giving hobbyist developers the ability to make their creations multiplayer. With the recent release of XNA 4.0, which came after the release of Windows Azure, I was hopeful to find some sort of cloud-applicable use for community developers.

XNA 4.0 did, however, bridge a gap that would otherwise have opened with the release of Windows Phone. With the number of gaming platforms being introduced, it would be beneficial to tie them together with the cloud. Let’s close our eyes and dream for a moment. …


Windows Azure Infrastructure

Mary Jo Foley’s Microsoft: Trust us to bridge the public-private cloud gap post to ZDNet’s All About Microsoft blog analyzes Bob Muglia’s 4/20/2010 keynote to the Microsoft Management Summit (MMS):

… From the System Center blog:

“This bridging of the gap between on-premises IT and public clouds is something that only Microsoft can offer. For example, through customers’ upcoming ability to use System Center Operations Manager onsite to monitor applications deployed within Windows Azure, there is a lot more to IT management than simply moving a VM to the cloud!”

Microsoft officials still have said very little about exactly what the “private cloud” encompasses beyond the usual set of Microsoft products. (I keep asking for more on that front, to no avail.) Some of my ZDNet colleagues think the whole “private cloud” concept is just datacenter computing with a new, fancy name, something I’ve said as well.

Update: Microsoft is going to announce a bit about one piece of its private-cloud infrastructure, officials just told me. Beta 2 of the company’s Dynamic Infrastructure Toolkit for System Center is going to kick off soon, a spokesperson said. Last we heard, the final version of that toolkit was due to ship in the first half of this year.

On Tuesday, Muglia is going to be offering a roadmap for some of the new on-premises and cloud systems-management wares in the pipeline from the company, however. According to the System Center blog post, System Center Virtual Machine Manager V-next and Operations Manager V-next are both due out in 2011. System Center Configuration Manager V-Next also is a 2011 deliverable, according to the blog post.

Alex Williams reports on Bob Muglia’s keynote in his Microsoft: Everything Moves Faster in the Cloud article of 4/20/2010 for ReadWriteCloud:

Microsoft revealed a bit more about its container system for data centers, giving us some pause about it as a symbol of the cloud itself.

These boxes represent the future of cloud-based infrastructures for both shared and dedicated networks. Microsoft, Amazon, HP and a number of other vendors use these containers to operate cloud networks. They are becoming fully automated systems that physically represent how we are seeing a fundamental shift in how IT services are managed and deployed.

In his keynote at the Microsoft Management Summit, Microsoft executive Bob Muglia featured the company's container system used at its Chicago data center, illustrating the company's new datacenter and cloud management capabilities for mass deployment of virtualized technologies.

Muglia said the new container system is 10x less expensive than traditional data center infrastructures and 10x faster, too.

"Everything moves faster in the cloud," Muglia said. …

The container is an independent, high-speed network optimized with virtualization technology. Muglia said every piece of the data center is tightly fit, almost bound to make one network that stores data and provides raw processing power.

Lori MacVittie asks Are you scaling applications or servers? as a preface to her I Find Your Lack of Win Disturbing post of 4/20/2010 about autoscaling:

Auto-scaling cloud brokerages appear to be popping up left and right. Following in the footsteps of folks like RightScale, these startups provide automated monitoring and scalability services for cloud computing customers. That’s all well and good because the flexibility and control over scalability in many cloud computing environments is, shall we say, somewhat lacking the mechanisms necessary to efficiently make use of the “elastic scalability” offered by cloud computing providers.

The problem is (and you knew there was a problem, didn’t you?) that most of these companies are still scaling servers, not applications. Their offerings still focus on minutia that’s germane to server-based architectures, not application-centric architectures like cloud computing. For example, the following statement was found on one of the aforementioned auto-scaling cloud brokerage service startups:

"Company X monitors basic statistics like CPU usage, memory usage or load average on every instance."

Wrong. Wrong. More Wrong. Right, at least, in that it very clearly confesses these are basic statistics, but wrong in the sense that it’s just not the right combination of statistics necessary to efficiently scale an application. It’s server / virtual machine layer monitoring, not application monitoring. This method of determining capacity results in scalability based on virtual machine capacity rather than application capacity, which is a whole different ball of wax. Application behavior and complexity of logic cannot necessarily be tied directly to memory or CPU usage (and certainly not to load average). Application capacity is about concurrent users and response time, and the intimate relationship between the two. An application may be overwhelmed while not utilizing a majority of its container’s allocated memory and CPU. An application may exhibit increasingly poor performance (response times) as the number of concurrent connections increases toward its limits while still using less than 70, 80, or 90% of its CPU resources. An application may exhibit slower response time or lower connection capacity due to the latency incurred by exorbitantly computationally expensive application logic. An application may have all the CPU and memory resources it needs but still be unable to perform and scale properly due to the limitations imposed by database or service dependencies.

There are so many variables involved in the definition of “application capacity” that it sometimes boggles the mind. One thing is for certain: CPU and memory usage is not an accurate enough indicator of the need to scale out an application.
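To make the distinction concrete, here is a minimal sketch of a scale-out rule driven by the two application-level signals Lori emphasizes, concurrent users and response time, rather than CPU or memory. It is not any broker's actual logic: the `AppMetrics` type, the `instances_needed` function and the default thresholds (200 users per instance, a 500 ms response-time ceiling) are hypothetical values that would have to come from load-testing the specific application.

```python
from dataclasses import dataclass


@dataclass
class AppMetrics:
    """Hypothetical application-level measurements for one sampling window."""
    concurrent_users: int    # active sessions/connections across all instances
    avg_response_ms: float   # mean response time observed for requests
    instances: int           # instances currently serving the application


def instances_needed(m: AppMetrics,
                     users_per_instance: int = 200,
                     max_response_ms: float = 500.0) -> int:
    """Return the instance count to run, deliberately ignoring CPU and memory.

    Thresholds are assumed values, not recommendations. Scale-in is left out
    so the sketch only ever holds or grows capacity.
    """
    # Capacity rule: instances required by current concurrency (ceiling division).
    by_concurrency = -(-m.concurrent_users // users_per_instance)
    target = max(m.instances, by_concurrency, 1)

    # Responsiveness rule: users are already waiting too long, so add one more
    # instance even if concurrency looks acceptable (e.g. expensive app logic).
    # If the bottleneck is a shared database or service, more instances may not help.
    if m.avg_response_ms > max_response_ms:
        target = max(target, m.instances + 1)
    return target
```

For example, 450 concurrent users at 120 ms on two instances yields a target of three (concurrency rule), while 100 users at 900 ms on two instances also yields three (response-time rule), whatever the CPU gauges show.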

Lori continues with THE BIG PICTURE and INTEGRATION, IT’S WHAT DATA CENTERS CRAVE topics.

Alex Williams asks Is the Cloud Suitable for Scaling Real-Time Applications? in this post to the ReadWriteCloud blog of 4/19/2010:

Twitter is moving to its own data center, showing that sometimes the cloud is not ideal for the real-time web.

This may seem ironic as cloud computing is largely credited for giving application developers access to commoditized server networks that they can scale up or down. Cloud services make it realistic for developers to create real-time services in the marketplace.

But at some point, the cloud is not ideal for a real-time web service provider. Twitter is a good example. And, so, we use this news to present our weekly poll: "Is the Cloud Suitable For Scaling Real-Time Applications?"


According to Data Center Knowledge, Twitter now uses a managed hosting service from NTT America where it has a dedicated space. Twitter also uses Amazon Web Services to serve images, including profile pictures. Twitter parted ways with Joyent in January 2008.

The move [to] NTT America came in response to latency issues. Latency is not a major issue for small application developers that use a service like Rackspace or Amazon. But when a service scales, the issues become increasingly significant.

Alex continues with input about latency from John Adams at Twitter (Chirp 2010: Scaling Twitter) and Raghavan "Rags" Srinivas.

Chris Hoff (@Beaker) comments in Incomplete Thought: “The Cloud in the Enterprise: Big Switch or Little Niche?” of 4/19/2010 on Joe Weinman’s “The Cloud in the Enterprise: Big Switch or Little Niche?” essay of 4/18/2010:

Joe Weinman wrote an interesting post in advance of his panel at Structure ‘10 titled “The Cloud in the Enterprise: Big Switch or Little Niche?” wherein he explored the future of Cloud adoption.

In this blog, while framing the discussion with Nick Carr’s (in)famous “Big Switch” utility analog, he asks the question:

“So will enterprise cloud computing represent The Big Switch, a dimmer switch or a little niche?”

…to which I respond:

“I think it will be analogous to the “Theory of Punctuated Equilibrium,” wherein we see patterns not unlike classical dampened oscillations with many big swings ultimately settling down until another disruption causes big swings again. In transition we see niches appear until they get subsumed in the uptake.”

Or, in other words such as those I posted on Twitter: “…lots of little switches AND big niches”

Go see Joe’s panel. Better yet, comment on your thoughts here.

Richard Whitehead explains How to Manage Private Clouds Using Business Service Management in this 4/19/2010 post to eWeek.com’s Cloud Computing knowledge center. Here’s the abstract:

Cloud computing is an enticing model, promising a new level of flexibility in the form of pay-as-you-go, readily accessible, infinitely scalable IT services. Executives in companies big and small are embracing the concept, but many are also questioning the risks of moving sensitive data and mission-critical work loads into the cloud. Here, Knowledge Center contributor Richard Whitehead offers four tips on how to manage private clouds using service-level agreements and business service management technologies.

Hanu Kommalapati’s Windows Azure Platform for Enterprises article for the Internet.com Cloud Computing Showcase (sponsored by the Microsoft Windows Azure Platform) was reprinted with permission from MSDN at http://msdn.microsoft.com/en-us/magazine/ee309870.aspx:

Cloud computing has already proven worthy of attention from established enterprises and start-ups alike. Most businesses are looking at cloud computing with more than just idle curiosity. As of this writing, IT market research suggests that most enterprise IT managers have enough resources to adopt cloud computing in combination with on-premises IT capabilities.

Hanu is a platform strategy advisor at Microsoft, and in this role he advises enterprise customers in building scalable line-of-business applications on the Silverlight and Windows Azure platforms.

Microsoft Government has a new (at least to me) Windows Azure Website for federal, state, and local government agencies:

For a government agency straining to meet its objectives in the face of tight budgets and infrastructure limitations, “cloud” technology can help you accomplish more with less. Cloud computing—employing remotely hosted machines and services—relieves your agency of the costly burden of owning and maintaining hardware and some software and lets you focus on your mission, while making it easier to connect constituents with your online services. The new Windows Azure platform is a set of cloud services and technologies—Windows Azure, SQL Azure Database, AppFabric, and Microsoft Codename “Dallas”—that your developers can use to more efficiently create Web services and manage data. Scalability and automated application deployment and management, combined with a consistent, familiar user experience across devices (including mobile), can mean faster distribution and reduced IT costs.

<Return to section navigation list>

Cloud Security and Governance

Chris Czarnecki’s Cloud Computing Technologies: Reducing Risk in a High Risk Environment post of 4/20/2010 to Learning Tree’s Cloud Computing blog begins:

InformationWeek recently published a list of organizations known as the Startup 50. This is a list of new technology companies (less than 5 years old) to watch. The companies were evaluated on a number of criteria, including:

- Innovation in technology

- Business model innovation

- Business value: lowered costs, increased sales, higher productivity, improved customer loyalty

From the companies that made the top 50, the biggest concentration of product areas were in three cloud computing related subjects: virtualization, cloud computing, software as a service. [Emphasis Chris’s.]

In my last blog post I commented on an article claiming that IT professionals considered the risks of cloud computing to outweigh the potential benefits. With IT risk fresh in my mind, the InformationWeek article got me thinking that in the high-risk environment of startups, cloud computing technologies can actually significantly reduce the risk involved with starting a new company. Further, for established organizations, whether developing new products to be delivered as a service or acting as end users of new products delivered as a service, cloud computing can lower the initial investment required and thus the associated project risk. I believe cloud computing can help reduce risks in the following two ways.

- By utilizing Infrastructure as a Service (IaaS). Consider a company developing a new software product to be delivered as a service. This potentially requires a significant investment in scalable hardware and software (infrastructure) to meet an as-yet-unknown demand in service usage. Building this infrastructure is costly. Utilizing cloud computing’s on-demand, pay-as-you-use infrastructure eliminates the large upfront investment and replaces it with costs that are proportional to usage, which should be proportional to revenue growth and thus self-funding.

- By utilizing the cloud as a product delivery mechanism. With this mode of delivery the risk to potential customers is minimal as they do not have the large upfront cost of buying the product. If after using it for a while the product turns out to be unsuitable, they stop using it and stop paying for it. Contrast this to the risk of paying upfront for an expensive product without actually knowing for sure that it will be suitable for the organization.

Hopefully this post has highlighted how cloud computing can actually lower risks for many organizations, from startups, cloud service providers through to cloud end users. Let me know what you think.

Ellen Messmer reports “Network access controls harder than ever to set up, survey says” as a preface to her Cloud computing makes IT access governance messier story of 4/19/2010 for NetworkWorld’s Data Center blog:

IT professionals are finding it harder than ever to set up access controls for network resources and applications used by organization employees, and cloud computing is only adding to their woes, a survey of 728 IT practitioners finds.

The Ponemon Institute's "2010 Access Governance Trends Survey," which asked 728 IT practitioners about their procedures and outcomes in setting up access to information resources, found the situation worsening over the past two years. In comparison to a similar survey done by Ponemon two years ago, this year's survey found 87% believed individuals had too much access to information systems, up 9% from 2008.

And in a new question asked this year about how use of cloud computing fits with access-control strategies, 73% of respondents said adoption of cloud-based applications is enabling business users to circumvent existing access policies.

Cloud-based services "are often purchased directly by business units without consideration of access governance," says the 2010 Access Governance Trends Survey, published Monday. The survey was sponsored by Aveksa. It is the people in the business units, rather than the IT department, that have growing influence over granting user access to information resources, with 37% in 2010 saying the business units had the responsibility as opposed to just 29% saying this in 2008.

But this doesn't necessarily seem to be advancing the goal that access-control policies are met, at least in the eyes of the IT professionals, over half of whom said they can't even keep pace with information-access requests. Nineteen percent even said "there's no accountability in who makes access decisions." …

Jay Heiser’s The emperor’s new cloud essay of 4/19/2010 to Gartner Blogs adopts a virtual apparel analogy:

Once upon a time, a pair of peddlers arrived at the Enterprise. Explaining that they were leading providers, they were immediately granted a meeting with King Cio. They explained to Cio that he was wearing outdated garments. He could not only dramatically increase his flexibility, but he could also fire some of his most annoyingly loyal staff, if he would just commit to using their new line of virtual clothing.

Cio was a shrewd leader, and he knew that the Wholly Roamin Emperor’s Seatoe had already decreed that the entire empire should virtualize their apparel as quickly as possible. King Cio, who’d read a very positive article about this in the Firewall Street Journal, didn’t want to be left behind, so he and his Canceller of the Chequer left with the Leading Providers to discuss you’s cases (Cio assumed that’s where they were going to keep his virtual machines).

Although King Cio couldn’t tell how they were constructed, he was awestruck by the description of the new clothing lines from vendors such as Gamble, Yippee and Muddle. Convincing himself that he could just make out the described pin stripes, he was sure that Forza offered the perfect outfits for his peasant relations team, combining elegant appearance (such as could be seen) with robust manufacture. However, some of the King’s information ministers were concerned about this last point. They demanded evidence of the construction techniques, the source of the materials, and they wanted guarantees.

The peddlers claimed that their virtual clothing line had successfully undergone the Apparel Statement of Standards, 70 times. They explained that their vendors paid huge amounts of money to very important firms that provided this authoritative guidance, ensuring that the results would be totally neutral and reliable. The ministers nodded their heads, wisely recognizing that there was no need for them to investigate any further. In the event of a later dispute, they were certain that their Apparel Statement of Standards would be covered. That’s all that mattered.

Patrick Thibodeau claims “Some users knock the lack of cloud security standards and dubious contract terms” in his As cloud computing grows, customer frustration mounts analysis of 4/19/2010 for Computerworld:

Users who turned to cloud computing for some of its obvious benefits, such as the ability to rapidly expand and provision systems, are starting to shift their focus to finding ways to fix some early weaknesses.

Cloud computing today has some of the characteristics of a Wild West boom town, but its unchecked growth is leading to frustration, a word that one hears more and more in user discussions about hosted services.

For example, cloud customers -- and some vendors as well -- are increasingly grousing about the lack of data handling and security standards. Some note that there aren't even rules that would require cloud vendors to disclose where their clients' data is stored -- even if it's housed in countries not bound by U.S. data security laws.

Such frustrations are becoming more evident as more enterprises embrace the cloud computing model.

Take Orbitz LLC, the online travel company whose businesses offer an increasingly broad range of services, from scheduling golf tee times to booking tickets for concerts and cruises.

Ed Bellis, chief information security officer at Orbitz, says the company's decision to use cloud-based software-as-a-service (SaaS) offerings enabled it to grow more rapidly and freed managers to concentrate on core competencies.

However, Orbitz, which is both a user and a provider of cloud-based services, sees an urgent need for cloud security standards, Bellis said at the SaaScon 2010 conference here earlier this month. For instance, Orbitz must address a range of due diligence requirements that are "all across the board," ranging from on-site audits to data center inspections.

Patrick continues with an analysis of the Cloud Security Alliance’s standards now being developed.

<Return to section navigation list>

Cloud Computing Events

My 27 TechEd North America Sessions with Keyword “Azure” post of 4/20/2010 lists:

- 18 Breakout Sessions

- 5 Interactive Sessions

- 4 Hands-on Labs

scheduled for TechEd North America 2010. The post’s abstracts are from the current TechEd Session Catalog.

Wade Wegner will present Using the Azure AppFabric with Surgical Skill at the Chicago Code Camp 2010 to be held 5/1/2010 at the IIT Stuart Building, 10 West 31st, Chicago, IL 60616 USA:

Note: Not 100% sure that this is the exact title I'd want to use, but it gets close.

The premise of the talk is to show how you can use the capabilities of the Windows Azure AppFabric to gracefully extend applications into the cloud OR extend cloud-based applications by leveraging on-premises assets. The Azure AppFabric is an extremely powerful platform, but largely unknown. I want to pull back the veil through demonstration of different solutions - all written from scratch.

David Linthicum claims “At two different events dealing with cloud computing, attendees give a variety of reasons for considering the cloud, some good, some not so good” in his explanation of Why users are switching to the cloud of 4/20/2010:

I'm on the road today. I started out presenting a keynote about the intersection of SharePoint and the cloud at the SharepointConference.org event in Baltimore. After that, I hit the rails and headed to New York City to check out the Cloud Computing Expo at the Javits Center (did you know Amtrak trains have Wi-Fi now?).

What's most interesting about this conference is the size. Although vendor focused, it's the largest cloud computing conference I've ever seen. The attendees are mostly people who "need to know what the cloud is" and are in search of vendors and consultants to help them move systems to cloud computing platforms, either public or private. As I walk around and ask people about their motives for being here, I'm starting to see a pattern in the answers, listed below:

- They are cutting our IT budget. This was, surprisingly, the most common response. Many attendees are looking at "doing more with less" and have been hit with a new zero-growth policy for IT. That is, the new data center won't get built after all, so they have the option of leveraging cloud computing or telling everyone there's no more capacity. Unfortunately, constraints like this never yield effective results, and whether or not cloud computing is in the mix, I fear the same will happen here.

- It seems like the emerging way to do computing. This came in a close second. In other words, we're following the hype, and the hype is leading to the cloud. I would not say that strategic planning was a forte among members of this group.

- We are moving our products to the cloud. Many traditional enterprise software vendors are making the move to cloud computing. I suspect it will be a rough journey for some, considering that they must continue to serve existing users, and it's not easy to move from an on-premise, single-tenant architecture to a virtualized, multitenant architecture. I suspect most won't get there without a significant amount of help, time, and money.

Darryl K. Taft reports Amazon Debunks Top 5 Myths of Cloud Computing on 4/19/2010 from the 5th International Cloud Computing Conference & Expo in New York City:

As the 5th International Cloud Computing Conference & Expo (Cloud Expo) opens in New York City on April 19, Amazon Web Services (AWS) is tapping into the attention the event is placing on cloud computing to address some of what the company views as the more persistent myths related to the cloud.

Despite being among the first to successfully and profitably implement cloud computing solutions, AWS officials said the company still has to constantly deal with questions about the reliability, security, cost, elasticity and other features of the cloud. In short, there are myths about cloud computing that persist despite increased industry adoption and thousands of successful cloud deployments. However, in an exclusive interview with eWEEK at Amazon's headquarters in Seattle, Adam Selipsky, vice president of AWS, set out to shoot down some of the myths of the cloud. Specifically, Selipsky debunked five cloud myths.

"We've seen a lot of misperceptions about what cloud computing is," he said.

Thus, the Cloud Computing Expo, as well as the virtual Cloud Lab and Cloud Slam events happening during the same week, provides a solid backdrop for Amazon’s myth busting. …

Darryl expands on Selipsky’s purported myths:

- The Cloud Is Not Reliable

- Security and Privacy Are Not Adequate in the Cloud

- I Can Get All the Benefits of the Cloud by Creating My Own In-house Cloud or Private Cloud

- If I Can’t Move Everything at Once, the Cloud Isn’t for Me

- Cost Is the Biggest Driver of Cloud Adoption

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Nolan’s NetSuite, The Forgotten Man takes a fresh look at this relatively mature SaaS infrastructure provider:

Last week I took advantage of the opportunity to visit with NetSuite during their annual partner conference held here in San Francisco. I wasn’t expecting anything earth shattering, if only because this company has been around for a long time now, 12 years to be exact, and is probably as well known for its largest shareholder, Larry Ellison, as for being a pioneer in software as a service business applications.

This is the point of my post title: NetSuite really is The Forgotten Man, not receiving the respect it is due, so I wanted to get caught up, and what I found reinforced impressions I already had but also revealed a more complex and interesting company than I expected.

Particularly interesting was the conversation with CEO Zach Nelson, who aside from presenting a confident and knowledgeable front for the company is a very likable guy who is willing to field tough questions and deliver thoughtful responses.

NetSuite has always had a couple of dings attached to it: first, a weak channel strategy and, somewhat related to that, a haphazard approach to partners. I have also heard from other people I respect that its customer service isn’t great but is improving.

The company recently brought on board a Salesforce.com veteran, Ragu Gnanasekar, to deal with platform and partner opportunities. It’s clear the directive is not to build an AppExchange clone; in fact, having heard that “nobody makes money with marketplaces,” I am inclined to believe marketplaces bring as much challenge as opportunity. If not an app marketplace, then what? Suite extension is the answer, and their release of a cloud development platform certainly supports that intention.

According to NetSuite, SuiteCloud 2.0’s capabilities enable enterprises to take full advantage of the significant economic, productivity and development benefits of cloud computing, including multi-tenant, always-on SaaS infrastructure and scalable, integrated applications for Accounting/ERP, CRM and Ecommerce.

Suite extension is not a new concept; large ERP players have relied on third parties to extend their suites into verticals and app functionality that was deemed either non-strategic or too small to worry about. NetSuite appears to be taking a different line of reasoning: its opportunity is to be the cloud of choice for core ERP functionality, ceding the extended functionality and verticalization to partners. I think this is smart because even the largest software companies can only focus successfully on a handful of markets. In Zach Nelson’s words, NetSuite will focus on delivering an application suite for tech companies and service companies; the rest of the market they are committing to partners. …