Windows Azure and Cloud Computing Posts for 12/2/2009+

| Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series. |

••• Update 12/6/2009: Mitch Wagner: Can Electronic Medical Records Be Secured?; Alistair Croll: Charting the course of on-demand computing; IDGA: Cloud Computing for DoD & Government event; IDGA: CloudConnect event; Walter Pinson: The Importance of Cloud Abstraction; Rob Grey: Windows Azure Functional bits and tooling comparison; Panagiotis Kefalidis: Windows Azure - Dynamically scaling your application; Wade Wegner: WI Azure User Group – Windows Azure Platform update presentation; and others.

•• Update 12/5/2009: Michael Clark: Synchronizing Files to Windows Azure Storage Using Microsoft Sync Framework; Chris Hoff: Dear Public Cloud Providers: Please Make Your Networking Capabilities Suck Less; IBM: Why Enterprises Buy Cloud Computing Services; Don Schlichting: SQL Azure with ASP Dot Net; and others.

• Update 12/4/2009: Gunnar Peipman: Windows Azure – Using DataSet with cloud storage; Charles Babcock: Cloud Computing Will Force The IT Organization To Change; MedInformaticsMD: Healthcare IT Failure and The Arrogance of the IT Industry; Howard Marks: Private Cloud Storage Decoded; Amazon Web Services: AWS Launches the Northern California Region; Chris Hoff: Black Hat Cloud & Virtualization Security Virtual Panel on 12/9; Frank Gens and the IDC Predictions Team: IDC Predictions 2010: Recovery and Transformation and more.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts, Databases, and DataHubs”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page.

Off-Topic: SharePoint Nightmare: Installing the SPS 2010 Public Beta on Windows 7 of 11/27/2009, Unable to Activate Office 2010 Beta or Windows 7 Guest OS, Run Windows Update or Join a Domain from Hyper-V VM (with fix) of 11/29/2009, and Installing SharePoint 2010 Public Beta on a Hyper-V Windows 7 VM Causes Numerous Critical and Error Events of 12/2/2009.

Azure Blob, Table and Queue Services

•• Gunnar Peipman explains Using Windows Azure BLOB storage with PHP in this 12/5/2009 post:

My last posting described how to read and write files located in Windows Azure cloud storage. In this posting I will show you how to do almost the same thing using PHP. We will use the Windows Azure SDK for PHP. The purpose of this example is to show you how simple it is to use Windows Azure storage services in your PHP applications.

•• Michael Clark shows you how to write a FullEnumerationSimpleSyncProvider with the simple provider components of Sync Framework in his Synchronizing Files to Windows Azure Storage Using Microsoft Sync Framework post of 12/3/2009 to Liam Cavanagh’s (@liamca) Microsoft Sync Framework blog:

At PDC09 we talked quite a bit about how to synchronize data to the cloud. Most of this revolved around synchronizing structured data to and from SQL Azure. If you are interested in that, check out our Developer Quick Start, which includes a link to download Microsoft Sync Framework Power Pack for SQL Azure November CTP.

For this post, however, I want to augment that information to answer another question that we are frequently asked and were asked a number of times at PDC, “How can I synchronize things like files with Azure Blob Storage?” The answer at this point is that you’ll have to build a provider. The good news is that it’s not too hard. I built one that I’ll describe as part of this post. The full sample code is available here.

So how does it work? The sample itself consists of three major portions: the actual provider, some wrapper code on Azure Blob Storage, and a simple console application to run it. I’ll talk in a bit more depth about both the provider and the Azure Blob Storage integration. On the client side the sample uses Sync Framework’s file synchronization provider. The file synchronization provider solves a lot of the hard problems for synchronizing files, including moves, renames, etc., so it is a great way to get this up and going quickly.

The Azure provider is implemented as a FullEnumerationSimpleSyncProvider, using the simple provider components of Sync Framework. Simple providers are a way to create Sync Framework providers for data stores that do not have built-in synchronization support.
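A full-enumeration provider has no change tracking of its own, so changes are found by listing every item in the store and comparing that listing with what was recorded at the last sync. The following is a minimal Python sketch of that comparison step only, assuming each blob is keyed by name and carries a version marker such as an ETag. It is not the Sync Framework API (which is .NET), and `detect_changes` and `ChangeSet` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeSet:
    created: list
    updated: list
    deleted: list


def detect_changes(previous: dict, current: dict) -> ChangeSet:
    """Compare two full enumerations (item name -> version marker, e.g. an ETag)."""
    # Items present now but not at the last sync were created.
    created = sorted(name for name in current if name not in previous)
    # Items recorded at the last sync but missing now were deleted.
    deleted = sorted(name for name in previous if name not in current)
    # Items present in both whose version marker changed were updated.
    updated = sorted(
        name for name in current
        if name in previous and current[name] != previous[name]
    )
    return ChangeSet(created, updated, deleted)


if __name__ == "__main__":
    last_sync = {"a.txt": "etag-1", "b.txt": "etag-2", "c.txt": "etag-3"}
    now = {"a.txt": "etag-1", "b.txt": "etag-9", "d.txt": "etag-4"}
    print(detect_changes(last_sync, now))
```

The cost of this approach is that every sync enumerates the whole container, which is why it suits stores without built-in change tracking rather than very large ones.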

Mike leads the Sync Framework Team and asks “One of the things that we’d like to know is if the community would like to see more posts of this nature. If so let us know by giving us some feedback and we’ll figure out some appropriate venue to get this kind of info out on a more regular basis.” I’d certainly like to see more posts like this, as I noted in a comment to Mike’s article.

• Howard Marks’ Private Cloud Storage Decoded article for InformationWeek Analytics’ 11/30 issue posits:

A storage cloud doesn't have to be public. A wide range of private cloud storage products have been introduced by vendors, including name-brand companies such as EMC, with its Atmos line, and smaller players like ParaScale and Bycast. Other vendors are slapping the “cloud” label on existing product lines. Given the amorphous definitions surrounding all things cloud, that label may or may not be accurate. What's more important than semantics, however, is finding the right architecture to suit your storage needs. …

• Gunnar Peipman explains how easy it is to store .NET DataSets in Azure blobs in his Windows Azure – Using DataSet with cloud storage post of 12/1/2009:

On the Windows Azure CTP some file system operations are not allowed. You cannot create and modify files located on the server’s hard disk. I have a small application that stores data in a DataSet, and I needed a place where I can hold this file. The logical choice is, of course, the cloud storage service. In this posting I will show you how to read and write a DataSet to your cloud storage as an XML file.

Although my original code is more complex (there are other things you have to handle when using file-based storage in a multi-user environment), I give you here the main idea about how to write some methods to handle cloud storage files. Feel free to modify my code as you wish. …
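Gunnar’s code is .NET, where a DataSet can write itself to XML and read itself back. As a rough, language-neutral illustration of the same round trip (serialize rows to XML, upload the XML as a blob, download and parse it), here is a Python sketch. `InMemoryBlobContainer` is a hypothetical stand-in for a real blob container, and the XML layout is an assumption, not the format DataSet actually produces.

```python
import xml.etree.ElementTree as ET


class InMemoryBlobContainer:
    """Hypothetical stand-in for a cloud blob container (blob name -> bytes)."""

    def __init__(self):
        self._blobs = {}

    def upload(self, name: str, data: bytes) -> None:
        self._blobs[name] = data

    def download(self, name: str) -> bytes:
        return self._blobs[name]


def rows_to_xml(table_name: str, rows: list) -> bytes:
    """Serialize a list of {column: string value} rows to an XML document."""
    root = ET.Element("DataSet")
    for row in rows:
        row_el = ET.SubElement(root, table_name)
        for column, value in row.items():
            # Column names must be valid XML tag names; values must be strings.
            ET.SubElement(row_el, column).text = value
    return ET.tostring(root, encoding="utf-8")


def xml_to_rows(table_name: str, data: bytes) -> list:
    """Parse the XML produced by rows_to_xml back into rows."""
    root = ET.fromstring(data)
    return [
        # Empty elements parse with text None, so map that back to "".
        {child.tag: (child.text or "") for child in row_el}
        for row_el in root.findall(table_name)
    ]
```

Storing the whole data set as one blob keeps the code simple, but, as Gunnar notes, a multi-user application would still need to handle concurrent writers (for example, by checking the blob’s ETag before overwriting it).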

My November 2009 Uptime Reports for OakLeaf Azure Demos Running in the South Central US Data Center post of 12/3/2009 shows that Microsoft’s South Central US (San Antonio, TX) data center almost achieved its “guarantee that when you deploy two or more role instances in different fault and upgrade domains your Internet facing roles will have external connectivity at least 99.95% of the time” with 99.46% uptime. The demo services run two instances each.
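The gap between the 99.95% guarantee and the measured 99.46% is easier to judge in minutes of downtime. This sketch assumes a 30-day month and that the percentage applies uniformly to the whole month; the SLA’s actual measurement rules may differ.

```python
MINUTES_IN_NOVEMBER = 30 * 24 * 60  # 43,200 minutes in a 30-day month


def downtime_minutes(uptime_percent: float, period_minutes: int = MINUTES_IN_NOVEMBER) -> float:
    """Minutes of downtime implied by an uptime percentage over a period."""
    return period_minutes * (100.0 - uptime_percent) / 100.0


if __name__ == "__main__":
    allowed = downtime_minutes(99.95)   # downtime permitted by the guarantee
    observed = downtime_minutes(99.46)  # downtime implied by the measured uptime
    print(f"allowed: {allowed:.1f} min, observed: {observed:.1f} min")
```

Under these assumptions the guarantee allows about 21.6 minutes of downtime in November, while 99.46% uptime corresponds to about 233 minutes, roughly ten times the allowance.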

Mark O’Neill’s More on Microsoft's "Migrating Data To New Cloud" patent application post of 12/1/2009 offers links to related commentary by

and a link to the patent application itself.

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

••• Jamie Thomson (@jamiet)’s Project Houston post of 12/6/2009 describes the SQL Azure team’s attempt to create a Microsoft Access-like database design and management tool with a Windows Presentation Foundation (WPF) UI, as described by Dave Robinson in his The Future of database development with SQL Azure session at PDC 2009:

Of late I’ve started to wonder about the direction that Microsoft may take their various development tools in the future. There has been an obvious move toward embracing the open source development community, witness the presence of open source advocate Matt Mullenweg on stage at the recent PDC09 keynote as proof of that. Also observe the obvious move to embracing the cloud as evidenced through the introduction of Azure and SQL Azure.

With all this going on, though, one thing struck me: Microsoft still have a need to keep the lights on, and to that end one big way that they make money is by selling licenses for their development tools, such as Visual Studio and SQL Server Management Studio; moreover, one requires a license for Windows in order to run those tools. So no matter how much they say SQL Azure is open to non-Microsoft development shops (which indeed it is), you’re still pretty much reliant on some Microsoft software running on your laptop in order to make best use of it. …

Jamie includes a screen capture from the session video.

••• Jayaram Krishnaswamy explains how to find VS2008’s Sync Services client template in his How to Synchronize with a Cloud Database post of 12/6/2009:

In fortifying and solidifying its relational cloud database service, SQL Azure, Microsoft has come up with a new tool, the Microsoft Sync Framework Power Pack for SQL Azure November CTP.

All that you need to do is to click and presto, you are in sync.

This tool [SQL Azure Data Sync Tool for SQL Server] makes synchronizing SQL Azure with a local server that much more efficient (compared to force fitting ADO.NET) and that much more reliable.

This power pack provides the SqlAzureSyncProvider that automates much of the synchronizing task, that is, a wizard will step in, and take charge.

However, you may need to keep your SQL Server Agent up and running and happy. …

It's a pretty neat tool except that it did not install the client template in my Visual Studio 2008 SP1. …

Jayaram reports that the current Sync Framework Power Pack CTP installs only a C# template; he was looking for a VB version.

••• Walter Pinson’s The Importance of Cloud Abstraction post of 12/5/2009 points out that “.NET developers can leverage their existing SQL Server database skills when developing against the [SQL] Azure platform”:

My colleague, Peter Palmieri, just penned a blog post about Microsoft’s recent announcement that the Azure platform will offer extensive and familiar relational database features via SQL Data Services (SDS).

In his post, Leveraging Skills, Peter discusses the fact that .NET developers will be able to leverage their existing SQL Server database skills when developing against the Azure platform. In doing so, he has touched upon what I think is Microsoft’s most strategic advantage in the realm of cloud computing. Microsoft has a ready-made ecosystem and developer community from which to draw its consumer innovators and early adopters. And I believe it plans on leveraging that advantage to vanquish the competition. The sheer breadth and depth of these cloud consumer first-movers may prove to be game-changing. …

For the record, Palmieri’s post reports on Microsoft’s March 2009 announcement, which I wouldn’t categorize as “recent.”

•• Mike Finley suggests that you Learn more about SQL Azure by having a direct connection to the development team for technical assistance and feedback in this 12/5/2009 post:

To sign up for SQL Azure Feedback Community Engagement:

- Step One: Please follow this link to complete your SQL Connect site registration (if you have not) by clicking on the “Your Profile” link in the upper right corner.

- Step Two: Once your registration is complete, click here and then click on "Respond to this Survey" to complete the profile.

Benefits to our Feedback Community Customers:

- In-depth knowledge on SQL Azure through presentations, direct QA/feedback sessions with our Dev team

- Closer technical relationship with our development team

- Access to information on future NDA-required SQL Azure Customer Programs

•• SQLServer-QA.net posted a SQL Server R2 and Azure related Technical rollup on http://sqlserver-qa.net/blogs/el/archive/2009/12/05/6132.aspx on 12/5/2009 with multiple links to SQL Server 2008 R2 and SQL Azure resources.

•• Don Schlichting provides an introduction to using SQL Azure with ASP Dot Net in this 12/4/2009 post.

• Jamie Thomson (a.k.a. @jamiet) reports that he uploaded Brent Ozar’s TwitterCache SQL Server backup to a publicly accessible SQL Azure database in his TwitterCache now hosted on SQL Azure post of 12/3/2009:

Earlier today Brent Ozar blogged about how he had been archiving tweets from various people that he follows into a SQL Server database. …

He made a backup of that database available for download on his blog so that others could download it and have a play of their own. I thought that rather than have all those people that wanted to party on the data download and restore the database for themselves it might be fun (and prudent) to stick it somewhere where anyone could get it so (with Brent’s permission) I’ve hosted the database up on SQL Azure.

Here are the credentials that you’ll need if you want to connect:

- Server name: lx49ykb7y5.database.windows.net

- User name: ro

- Password: r3@d0nly

- Database name: brentotweets …
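For readers connecting from a non-Microsoft client, the credentials above map onto an ODBC connection string roughly as follows. This is a sketch under stated assumptions: the driver name is an assumption (use whichever SQL Server ODBC driver is installed), as is the `user@servername` login form, which SQL Azure clients of this period generally expected. `sql_azure_odbc_connection_string` is a hypothetical helper, not part of any library.

```python
def sql_azure_odbc_connection_string(
    server: str,
    database: str,
    user: str,
    password: str,
    driver: str = "SQL Server Native Client 10.0",  # assumed driver name
) -> str:
    """Build an ODBC connection string for a SQL Azure database (sketch)."""
    # The short server name is the first label of the fully qualified host name.
    server_name = server.split(".")[0]
    parts = {
        "Driver": "{" + driver + "}",
        "Server": f"tcp:{server},1433",  # SQL Azure listens on TCP port 1433
        "Database": database,
        "Uid": f"{user}@{server_name}",  # assumed user@server login form
        # Brace-quote the password so characters such as ';' are safe;
        # a literal '}' is escaped by doubling it.
        "Pwd": "{" + password.replace("}", "}}") + "}",
        "Encrypt": "yes",  # connections to SQL Azure are encrypted
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


if __name__ == "__main__":
    print(sql_azure_odbc_connection_string(
        "lx49ykb7y5.database.windows.net", "brentotweets", "ro", "r3@d0nly"))
```

The resulting string could then be handed to an ODBC client library such as pyodbc (`pyodbc.connect(conn_str)`), assuming a suitable driver is installed.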

• Alex Handy reports Embarcadero taps into SQL Azure beta in this 11/27/2009 SDTimes article:

Microsoft's cloud-enabled database platform, codenamed SQL Azure, isn't yet ready for prime time. But that hasn't stopped developers and database administrators from signing on to try it out.

To enable developers and administrators to better experiment with the SQL Azure beta, Embarcadero Technologies has partnered with Microsoft to release a free version of DBArtisan specifically tailored to help with the move to Azure.

SQL Azure is based upon some SQL Server technologies, but it is being specially crafted for the cloud. Scott Walz, senior director of product management at Embarcadero, said that both developers and Microsoft have questions to be worked out about how a cloud-based database should work.

As such, DBArtisan 8.7.2 does not include optimization features, nor does it offer deep monitoring capabilities. But that is because even Microsoft hasn't yet figured out how to give that type of information or power to users, said Walz. …

Shervin Shabiki describes the Sync Framework Power Pack for SQL Azure in this 12/2/2009 post:

We are working on a cloud-based application for a long-time existing customer, and we came across a pretty interesting challenge. The problem was that the new cloud-based application needed to be able to integrate with our existing legacy applications, in particular our call center application. We have no intention of moving this application to the cloud; however, we need to be able to access some of that data from our new application.

A couple of weeks ago at PDC, during the keynote, Andy Lapin of Kelley Blue Book explained how they keep their community database up to date by using the technology to synchronize data between their on-premises SQL Server and SQL Azure. The recording is on the PDC website; Andy’s demo starts at 1:18:00.

So finally I downloaded the Sync Framework Power Pack for SQL Azure November CTP. This release includes:

- SQL Azure provider for Sync Framework.

- Plug-in for Visual Studio 2008 SP1.

- SQL Azure Data Sync Tool for SQL Server.

SQL Azure Data Sync Tool for SQL Server contains a wizard that walks users through the SQL Azure connection process, automating the provisioning and synchronization operations between SQL Server and SQL Azure.

Shervin promises a Webcast about the Data Sync Tool on 12/4/2009.

<Return to section navigation list>

AppFabric: Access Control, Service Bus and Workflow

• Vittorio Bertocci explains Deep linking your way out of home realm discovery in this 12/3/2009 post:

Here’s a very quick post about an often-debated topic: home realm discovery.

When your web application trusts multiple identity providers, the first task you have when processing a request is figuring out where the user is coming from. If the user is coming from your partner Adatum, he’ll have to authenticate with the Adatum (IP) STS before being able to access your application; if he comes from Contoso, he’ll have to authenticate with the Contoso STS before being able to access your application. The Contoso STS and the Adatum STS clearly live at different addresses, which means that you’ll typically need some logic (often residing on your federation provider STS) to determine where your user is coming from before starting the classic WS-Fed redirection dance. CardSpace provides a very neat solution to this, but it is not always readily available. The result is that everybody comes up with a different solution, which often requires the user to interact with a UI. For example, the MFG will prompt you for a Live ID, and once it senses that you entered an account belonging to a federated partner, it will offer to redirect you to your IP STS. Others may offer a simple drop-down that enumerates the federated partners and ask you to pick your own (not especially handy if you want to keep your list of partners/customers private).

While those mechanisms are necessary, sometimes your users may get fed up with always having to handle UI tasks instead of enjoying smooth, heated-knife-through-butter SSO.

Well, here’s a trick that we used in the internal version of FabrikamShipping to ease that pain: basically, we include in a deep link all the hops that a user from Adatum would go through during the authentication experience. As a result, Adatum users click a link that points directly to the Adatum ADFS2 and already contains all the info for performing the redirects to the R-STS of the app and to the app itself. If you know WS-Fed, you could in principle build the link from scratch, but since I am notoriously lazy (and bad at remembering syntactic sugar) I prefer the following trick: I go through the authentication experience, and once I reach the authentication pages of the intended IP, I save the URI currently displayed in the address bar. …
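To see why such a deep link works, it helps to look at its structure: a WS-Federation passive sign-in request (`wa=wsignin1.0`, `wtrealm`, optional `wreply` and `wctx`) addressed to the IP STS, carrying enough context to continue on to the R-STS and then to the app. The following Python sketch builds a nested link of that shape. All URLs are hypothetical, and the convention of carrying the inner R-STS request in `wctx` is an assumption for illustration, since `wctx` is opaque and its contents are up to the party that sets it. Vittorio’s actual approach is simply to capture the real URI from the browser.

```python
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit


def wsfed_signin_url(sts_url: str, realm: str,
                     reply: Optional[str] = None,
                     context: Optional[str] = None) -> str:
    """Build a WS-Federation passive sign-in request URL."""
    params = {"wa": "wsignin1.0", "wtrealm": realm}
    if reply is not None:
        params["wreply"] = reply
    if context is not None:
        params["wctx"] = context  # opaque context echoed back by the STS
    return f"{sts_url}?{urlencode(params)}"


def deep_link(ip_sts: str, rsts: str, rsts_realm: str, app_realm: str) -> str:
    # Inner hop: the R-STS sign-in request on behalf of the application.
    inner = wsfed_signin_url(rsts, app_realm)
    # Outer hop: the IP STS request; wctx carries the inner hop (assumed convention).
    return wsfed_signin_url(ip_sts, rsts_realm, context=inner)


if __name__ == "__main__":
    link = deep_link("https://sts.adatum.example/adfs/ls/",
                     "https://rsts.fabrikam.example/",
                     "https://rsts.fabrikam.example/",
                     "https://app.fabrikam.example/")
    outer = parse_qs(urlsplit(link).query)
    print(outer["wtrealm"][0])
    print(parse_qs(urlsplit(outer["wctx"][0]).query)["wtrealm"][0])
```

Because each hop is URL-encoded inside the previous one, the whole chain survives as a single clickable link.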

See Bruce Kyle’s Join in December Webcast on Securing REST Services in Azure post about Access Control Services in the Cloud Computing Events section.

<Return to section navigation list>

Live Windows Azure Apps, Tools and Test Harnesses

••• Panagiotis Kefalidis explains Windows Azure - Dynamically scaling your application in this 12/6/2009 post:

When you have your service running on Windows Azure, the last thing you want is to monitor it every now and then and decide whether specific actions are needed based on your monitoring data. You want the service to be, to some degree, self-manageable and to decide on its own which actions are necessary to satisfy a monitoring alert. In this post, I’m not going to use the Service Management API to increase or decrease the number of instances; instead, I’m going to log a warning. In a future post I’m going to use the API in combination with this logging message, so consider this the first in a series of posts.

The most common scenario is dynamically increasing or decreasing VM instances to be able to process more messages as our queues fill up. You have to create your own “logic”, a decision mechanism if you like, which will execute some steps and bring the service to a state that satisfies your condition, because there is no out-of-the-box solution from Windows Azure. A number of companies have announced that their monitoring/health software is going to support Windows Azure. You can find more information about that if you search the Internet, or visit the Windows Azure Portal under the Partners section.
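A decision mechanism of the kind Panagiotis describes can be quite small. The following Python sketch derives a target instance count from queue length and, like his first post, only logs a warning instead of calling the Service Management API. The thresholds (100 messages per instance, 2 to 10 instances) are hypothetical; the minimum of two reflects the two-instance condition in the Windows Azure connectivity guarantee.

```python
import logging
import math

logger = logging.getLogger("scaling")


def target_instance_count(queue_length: int, messages_per_instance: int,
                          min_instances: int = 2, max_instances: int = 10) -> int:
    """Instances needed to keep each one at or below messages_per_instance."""
    needed = math.ceil(queue_length / messages_per_instance)
    # Clamp to the allowed range: never below the minimum, never above the cap.
    return max(min_instances, min(max_instances, needed))


def check_queue(queue_length: int, current_instances: int,
                messages_per_instance: int = 100) -> int:
    """Log a warning when the queue suggests a different instance count."""
    target = target_instance_count(queue_length, messages_per_instance)
    if target != current_instances:
        # A later step could act on this through the Service Management API.
        logger.warning("Queue length %d suggests %d instances (currently %d)",
                       queue_length, target, current_instances)
    return target
```

Real implementations usually add hysteresis (for example, waiting for several consecutive readings before scaling down) so that a briefly empty queue does not cause instances to be added and removed repeatedly.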

••• Rob Grey’s Windows Azure Functional bits and tooling comparison compares how Amazon EC2, Google App Engine, and GoGrid “make your life easier than regular hosting” in this 12/6/2009 post:

This post is part of an ongoing series I am writing about Microsoft’s new Windows Azure platform. Feel free to read that first for an introduction to this. …

Each of the vendors provides various services in its offering, many of them common. The first thing they’ll tell you is that they provide the means of infinitely scaling your application and ensuring high availability. These points are not worth debating because I’m fairly certain that they can all live up to that promise, and I’m even more certain that at some point in time, they will all break that promise, and some have already had the opportunity to do so.

What we really need to dig into here is: assuming that high availability and scalability have been removed from the equation as problem areas, how else does the cloud make your life easier than regular hosting? That’s the real question here, as well as how it pertains to Windows Azure versus the other providers.

When it comes to comparing Windows Azure and the competition, there are quite a few areas I’ve delved into. What’s the one thing every developer dreads when they start a new project? Well…actually deploying the project, of course! If they manage to get that far, most projects would already have put plenty of thought and planning into architecture, resilience, performance and so on…right? Right?! …

••• JP Morgenthal claims the Two Critical and Oft Forgotten Features of Cloud Services are “Metering and Instrumentation” in a 12/6/2009 post:

An interesting facet of advancement is that it tends to limit know-how over time.

At one time the mechanic in your local garage could take your entire engine apart, fix any problem and put it back into running order.

Today, they can put a computer on the end, read a code, and hope it provides some understanding of the problem. Similarly, the application server revolution has unfortunately led to a generation of software developers that believe that the container can do all your instrumentation and metering for you, thus removing an impetus to build this into the application.

These developers lose sight of the fact that the container can only tell you part of the story: the part it sees as an observer. If you don't make more of your application's internals observable, the most it can see is how often it hands off control to your application, what services your application uses from the container, and when your application terminates control. Useful information, but not enough to develop large-scale, real-world services used by hundreds of thousands, or even millions, of users. …
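Building metering into the application itself, rather than leaving it to the container, can start as simply as counting calls and accumulating elapsed time per operation. The following is a minimal Python sketch of that idea; `Meter` and the operation names are hypothetical, and a production service would export these figures to its monitoring system rather than keep them in memory.

```python
import time
from collections import defaultdict
from functools import wraps


class Meter:
    """In-process call counter and timer, keyed by operation name."""

    def __init__(self):
        self.calls = defaultdict(int)
        self.total_seconds = defaultdict(float)

    def measure(self, name: str):
        """Decorator that records one call and its duration under `name`."""
        def decorator(func):
            @wraps(func)
            def wrapper(*args, **kwargs):
                start = time.perf_counter()
                try:
                    return func(*args, **kwargs)
                finally:
                    # Record even when the call raises, so failures are metered too.
                    self.calls[name] += 1
                    self.total_seconds[name] += time.perf_counter() - start
            return wrapper
        return decorator


meter = Meter()


@meter.measure("lookup_customer")
def lookup_customer(customer_id: int) -> dict:
    # Placeholder for real application work.
    return {"id": customer_id}
```

Because the measurement lives inside the application, it can be keyed by business operations (such as a customer lookup) that the container never sees.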

• Robert Rowley, MD contends Still a lot of work ahead for the ONC in this 12/4/2009 post to EHR Bloggers:

The Office of the National Coordinator (ONC) for Health IT has been developing a national health IT policy, driven by ARRA’s HITECH section that earmarks incentives for physicians to adopt Electronic Health Records (EHRs). Physicians who can demonstrate “meaningful use” of “certified” EHRs can begin earning incentive payments from CMS beginning in 2011 (the carrot), and start incurring penalties in the form of pay reduction if they have not shown adoption by 2015 (the stick).

Of course, as we have reviewed in this blog over the past several months, the specifics of what is meant by “meaningful use” and “certified” have been the subject of much deliberation. The ONC is advised by two committees to help sort out this policy and define these concepts: the HIT Policy Committee and the HIT Standards Committee. …

• MedInformaticsMD’s Healthcare IT Failure and The Arrogance of the IT Industry post of 12/3/2009 begins:

In the Wall Street Journal healthcare blog post "Safety Guru: ‘Health IT Is Harder Than It Looks’" here, reporter Jacob Goldstein reports on UC San Francisco hospitalist Bob Wachter's commentary that:

“... recent experience has confirmed that health IT is harder than it looks … Several major installations of vendor-produced systems have failed, and many safety hazards caused by faulty health IT systems have been reported.”

I would differ with Dr. Wachter only in that the "experience that health IT is harder than it looks" goes far beyond "recent", e.g., as in the wisdom of the Medical Informatics pioneers from the 1960's-1970's and earlier as in my post "Medical Informatics, Pharma, Health IT, and Golden Advice That Sits Sadly Unused" here. …

• Acumatica, the developer of an integrated set of web-based accounting, ERP, and CRM software, announced Acumatica SaaS Launched running on Windows Azure in an 11/17/2009 press release:

Acumatica … today announced availability of its SaaS solution which runs on Windows Azure and includes the Acumatica software subscription, the operating environment, backups, upgrades, a service level agreement, and automatic updates. The SaaS solution allows mid-sized businesses to purchase customized ERP software and services without installing and maintaining software or investing in hardware.

The Acumatica SaaS offering is unique because it allows customers to switch between an on-premise deployment and a SaaS deployment as their business needs change. Customers may take advantage of Acumatica’s migration service if they decide to move to SaaS or move from SaaS to an on-premise deployment.

Acumatica’s SaaS offering includes advanced financial features and customization tools. The web-based customization tools allow users to build custom reports and dashboards, modify business logic, change workflows, and build new modules. Customizations are maintained separately from core application code so upgrades and updates do not impact customized deployments. …

“Windows Azure reduces the time it takes ISVs such as Acumatica to profitably deploy a SaaS solution,” said Doug Hauger, general manager of Windows Azure at Microsoft Corp. “In less than five weeks, Acumatica’s team migrated a highly advanced accounting and ERP application to Windows Azure so customers with complex requirements are able to get their accounting services on demand.” …

John Moore reports Microsoft’s Amalga & HealthVault Land LTC Provider on 12/3/2009:

Just released this morning, Microsoft announced that it has landed Golden Living, a provider of long-term care (LTC) and like services for the elderly and disabled. Similar to the New York Presbyterian and the Caritas Christi Health wins, Golden Living will be adopting Amalga UIS for internal aggregation of a customer’s health data to facilitate care and HealthVault as the customer control repository of personal health information (PHI).

In each of these cases, these organizations are using the Amalga/HealthVault combination as a competitive differentiator. It remains to be seen if it will actually drive future business, but for today, it does put these organizations out front of their competitors in the adoption of new technologies and approaches in support of collaborative care that goes beyond the clinician to the community.

What is particularly interesting with this announcement is that the actual users of HealthVault at Golden Living are unlikely to be the residents of Golden Living facilities but rather their children, nephews and nieces. This may provide Microsoft one more avenue to educate the market, getting consumers comfortable with the concept of online storage and sharing of PHI (privacy and security) and understanding the utility that services such as HealthVault bring to market.

The Windows Azure Team’s Introducing Windows Azure Diagnostics post of 12/1/2009 provides an overview of this newly announced technology:

Windows Azure Diagnostics is a new feature in the November release of the Windows Azure Tools and SDK. It gives developers the ability to collect and store a variety of diagnostics data in a highly configurable way.

Sumit Mehrotra, a Program Manager on the Windows Azure team, has been blogging about the new diagnostics feature. From his first post Introducing Windows Azure Diagnostics:

“There are a number of diagnostic scenarios that go beyond simple logging. Over the past year we received a lot of feedback from customers regarding this as well. We have tried to address some of those scenarios, e.g.

- Detecting and troubleshooting problems

- Performance measurement

- Analytics and QoS

- Capacity planning

- And more…

Based on the feedback we received, we wanted to keep the simplicity of the initial logging API but enhance the feature to give users more diagnostic data about their service and more control of their diagnostic data. The November 2009 release marks our first release of the Diagnostics feature.” …

<Return to section navigation list>

Windows Azure Infrastructure

••• Paul Krill asks Can Windows Azure deliver on IT's interest in it? in this 12/3/2009 article for ComputerWorld:

Windows Azure, Microsoft's fledgling cloud computing platform, is piquing the interest of IT specialists who see it as a potential solution for dealing with variable compute loads. But an uptick in deployments for Azure, which becomes a fee-based service early next year, could take a while, with customers still just evaluating the technology.

"We'd be targeting applications that have variable loads" for possible deployment on Azure, said David Collins, a system consultant at the Unum life insurance company. The company might find Azure useful for an enrollment application. "We have huge activity in November and December and then the rest of the year, it's not so big," Collins said. Unum, however, is not ready to use Azure, with Collins citing issues such as integrating Azure with IBM DB2 and Teradata systems.


••• Alistair Croll’s Charting the course of on-demand computing white-paper for the forthcoming Cloud Connect conference is an eight-page analysis of cloud computing’s future (site registration required):

Cloud Computing represents the biggest shift in computing of the last decade. It’s as fundamental as the move from client-server computing to web-centric computing, and in many ways more disruptive. For cloud computing is a change in business models: the ability to efficiently allocate computing power on demand, in a true utility model. It changes what companies can do with IT resources.

By 2012, cloud spending will grow almost threefold, to $42B. Already, the money spent on cloud computing is growing at over five times the rate of traditional, on-premise IT. Cloud adopters are already bragging about their lower costs and greater ability to adapt to changing market conditions.

But despite these trends, companies won’t switch to cloud models overnight. Rather, they’ll migrate layers of their IT infrastructure over time. Between today’s world of enterprise data centers and tomorrow’s world of ubiquitous computing is a middle ground of hybrid clouds, opportunistic deployment, and the mingling of consumer applications with business systems.

Alistair is the Principal Analyst for Bitcurrent.

•• IBM asks Why Enterprises Buy Cloud Computing Services and replies on 12/5/2009 with this graphic:

![[cloud+value.jpg.png]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEi3so7a0nJKQkeVRQQJLHI_bmOC-cMx4ilga33BfQs4p2PEwBxyt6csx6T6VM_HW_qgbNtb0pLoSss1eFanFWTHJTXawE5nLhJSu7cCkFBV-Swrzqx9DvNqXZusrZkbJkyo93VZ/s1600/cloud+value.jpg.png)

•• Chris Hoff (@Beaker) makes this plaintive request Dear Public Cloud Providers: Please Make Your Networking Capabilities Suck Less. Kthxbye in a 12/4/2009 post:

… Incumbent networking vendors and emerging cloud/network startups are coming to terms with the impact of virtualization and cloud as juxtaposed with that of (and you’ll excuse the term) “pure” cloud vendors and those more traditional (Inter)networking service providers who have begun to roll out Cloud services atop or alongside their existing portfolio of offerings. …

For the purpose of this post, I’m not going to focus on the private Cloud camp and enterprise cloud plays, or those “Cloud” providers who replicate the same architectures to serve these customers, rather, I want to focus on those service providers/Cloud providers who offer massively scalable Infrastructure and Platform-as-a-Service offerings as in the second example above and highlight two really important points:

- From a physical networking perspective, most of these providers rely, in some large part, on giant, flat, layer two physical networks with the actual “intelligence,” segmentation, isolation and logical connectivity provided by the hypervisor and their orchestration/provisioning/automation layers.

- Most of the networking implementations in these environments are seriously retarded as it relates to providing flexible and extensible networking topologies which make for n-Tier application mapping nightmares for an enterprise looking to move a reasonable application stack to their service.

I’ve been experimenting with taking several reasonably basic n-Tier app stacks which require multiple levels of security, load balancing and message bus capabilities and designing them using several cloud platform providers’ offerings today. …

• Frank Gens and the IDC Predictions Team delivered IDC Predictions 2010: Recovery and Transformation on 12/3/2009 (site registration required to download PDF). Prediction #4. IT Industry's "Cloud" Transformation Will Greatly Expand and Mature in 2010 claims:

2010 will be a very big year in the continuing buildout and maturing of the cloud services delivery and consumption model — which, for the past three years, IDC has identified as the most important transformational force in the IT market. A unifying theme for 2010 will be the emergence of "enterprise grade" cloud services — services that support the more demanding security, availability, and manageability requirements of traditional IT in the cloud services world. …

• Maureen O’Gara reports “Intel is really trying to squeeze a container-based data center Cinderella-style into a rack” in her Intel and the Incredible Shrinking Cloud post of 12/4/2009:

Intel has built an experimental fully programmable 48-core chip that it’s nicknamed the Single-Chip Cloud Computer (SCC) and means to build at least a hundred more to pass out to industry and academic partners to use to develop new software applications and parallel programming models.

Microsoft, ETH Zurich, the University of California at Berkeley and the University of Illinois already have one and researchers from Intel, HP and Yahoo’s Open Cirrus initiative have already begun porting cloud applications to the widget using Hadoop, the Java software framework that supports data-intensive distributed applications.

Internally Intel has ported web servers, physics modeling and financial analytics to the thing.

Intel says SCC, which runs Windows and Linux and so legacy software, is a research platform meant to work out the many kinks of multi-headed programming. It will never be a product.

However, Intel means to start integrating key features of the work in a new line of Core-branded chips early next year and introduce six- and eight-core processors later in 2010. …

• Charles Babcock’s Cloud Computing Will Force The IT Organization To Change cover story for InformationWeek’s 11/30/2009 issue contends:

With Amazon's EC2, Google's AppEngine, and now Microsoft's Azure, cloud computing looks a lot less like some catch-all concept in the distance and more like a very real architecture that your data center has a good chance of being connected to in the near future.

If that happens, more than the technology must change. The IT organization, and how IT works with business units, must adapt as well, or companies won’t get all they want from cloud computing. Putting part of the IT workload into the cloud will require some different management approaches, and different IT skills, from what’s grown up in the traditional data center. …

Bernard Golden explains Why Benchmarking Cloud vs. Current IT Costs is So Hard on 12/3/2009:

A couple of weeks ago I was asked to moderate an HP-sponsored meeting on the subject of virtualization. Predictably, most of the discussion (attended by press and vendors including Citrix, Microsoft, Red Hat, and VMware) focused on cloud computing. It was a pretty lively session, but what I want to address here is an HP product portfolio called "IT Financial Management" that was discussed, along with its implications for cloud computing. As you might guess, the product focuses on financial analysis of IT operations, which is extremely relevant to the adoption of cloud computing.

Naturally, one of the topics raised during the panel was the potential cost benefits of cloud computing. …

The paucity of examples is troubling. Most of the organizations that have implemented cloud solutions have done so with an intuitive belief that inexpensive, pay-by-the-use computing must be less expensive than the current asset-heavy, low-automation environment characteristic of most IT organizations. But they've forged ahead, mostly, without real proof. …
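Golden’s point is that this intuition rarely gets tested with real numbers. As a minimal sketch of the simplest form of the comparison, the Python below contrasts capacity owned for the peak all year with pay-per-use capacity that tracks a seasonal load like the Unum enrollment application described earlier (heavy in November and December). All instance counts and the unit price are hypothetical, and charging one price for both models is exactly the kind of simplification that makes real benchmarks hard.

```python
# Hypothetical seasonal demand, in instances per month (Jan..Dec):
# light load for ten months, a peak in November and December.
MONTHLY_INSTANCES = [4] * 10 + [20, 20]
PRICE_PER_INSTANCE_MONTH = 100.0  # hypothetical unit price, same for both models


def peak_provisioned_cost(demand, price):
    """Cost of holding enough capacity for the peak month all year round."""
    return max(demand) * len(demand) * price


def pay_per_use_cost(demand, price):
    """Cost when capacity follows demand month by month."""
    return sum(demand) * price


if __name__ == "__main__":
    fixed = peak_provisioned_cost(MONTHLY_INSTANCES, PRICE_PER_INSTANCE_MONTH)
    elastic = pay_per_use_cost(MONTHLY_INSTANCES, PRICE_PER_INSTANCE_MONTH)
    print(f"peak-provisioned: {fixed:.0f}, pay-per-use: {elastic:.0f}")
```

With these made-up inputs the pay-per-use total is one third of the peak-provisioned total (80 versus 240 instance-months), but only because the unit price is held equal; owned capacity may carry a different unit cost once hardware, power and staff are counted.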

David Linthicum says “There's big demand now for cloud engineers and architects -- but not many people able to fill them” in Need a job in IT? The cloud needs you on 12/3/2009:

It's always interesting to me to see the job growth in emerging spaces, such as cloud computing. Typically, the hype is huge around a concept (such as SOA, client/server, or distributed objects) about 8 to 12 months before there is notable job growth. This is often due to companies not understanding the value of the new technology, as well as to the lag in allocating budget and creating job reqs.

Cloud computing seems to be a different beast. After no fewer than four calls last week from headhunters looking for cloud architects, cloud engineers, and cloud strategy consultants, I decided to look at the job growth around cloud computing, using my usual unscientific measurements. This included a visit to the cloud job postings at indeed.com, which provides search and alerts for job postings and tracks trends.

Jon Brodkin quotes a Gartner keynoter in his Top data center challenges include social networks, rising energy costs Network World post of 12/1/2009:

Enterprise data needs will grow a staggering 650% over the next five years, and that's just one of numerous challenges IT leaders have to start preparing for today, analysts said as the annual Gartner Data Center Conference kicked off in Las Vegas Tuesday morning. …

Rising use of social networks, rising energy costs and a need to understand new technologies such as virtualization and cloud computing are among the top issues IT leaders face in the evolving data center, Gartner analyst David Cappuccio said in an opening keynote address.

The 650% enterprise data growth over the next five years poses a major challenge, in part because 80% of the new data will be unstructured, Cappuccio said. IT executives have to make sure data can be audited and meet regulatory and compliance objectives, while attempting to ensure that growing storage needs don't break the bank. Technologies such as thin provisioning, deduplication and automated storage tiering can help reduce costs.
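A five-year figure like Cappuccio’s is easier to plan storage around as an annual rate. The sketch below converts total growth into a compound annual growth rate. It assumes “grow 650%” means the final volume is 7.5 times today’s (today plus 650%), and shows the alternative reading (6.5 times) for comparison; neither interpretation is confirmed by the article.

```python
def compound_annual_growth(total_multiple: float, years: int) -> float:
    """Return the yearly rate r such that (1 + r) ** years == total_multiple."""
    if total_multiple <= 0 or years <= 0:
        raise ValueError("total_multiple and years must be positive")
    return total_multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Assumed reading: data grows BY 650%, ending at 7.5x today's volume.
    print(f"7.5x over 5 years: {compound_annual_growth(7.5, 5):.1%} per year")
    # Alternative reading: data grows TO 650% of today's volume (6.5x).
    print(f"6.5x over 5 years: {compound_annual_growth(6.5, 5):.1%} per year")
```

Either way, the implied growth is roughly 45% to 50% a year, every year, for five years.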

James Hamilton analyzes Data Center Waste Heat Reclaimation claims in this 12/1/2009 post:

For several years I’ve been interested in PUE<1.0 as a rallying cry for the industry around increased efficiency. From PUE and Total Power Usage Efficiency (tPUE) where I talked about PUE<1.0:

In the Green Grid document [Green Grid Data Center Power Efficiency Metrics: PUE and DCiE], it says that “the PUE can range from 1.0 to infinity” and goes on to say “… a PUE value approaching 1.0 would indicate 100% efficiency (i.e. all power used by IT equipment only).” In practice, this is approximately true. But PUEs better than 1.0 are absolutely possible and even a good idea. Let’s use an example to better understand this. I’ll use a 1.2 PUE facility in this case. Some facilities are already exceeding this PUE and there is no controversy on whether it’s achievable. …
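The arithmetic behind the PUE<1.0 idea can be sketched in a few lines. Standard PUE divides total facility power by IT equipment power, so it can’t fall below 1.0 on its own. The `net_pue` function below is a hypothetical accounting convention, not taken from Hamilton’s post: it credits waste heat that is reclaimed and displaces energy that would otherwise be bought for another use, such as heating nearby buildings. The 1.2 PUE matches Hamilton’s example facility; the kilowatt figures are invented.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Green Grid PUE: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def net_pue(total_facility_kw: float, it_equipment_kw: float,
            reclaimed_kw: float) -> float:
    """Hypothetical 'net' PUE that subtracts the energy value of reclaimed
    waste heat from the facility's total before dividing by IT power."""
    return pue(total_facility_kw - reclaimed_kw, it_equipment_kw)


if __name__ == "__main__":
    it_kw = 1000.0     # hypothetical IT load
    total_kw = 1200.0  # a 1.2 PUE facility, as in Hamilton's example
    print(pue(total_kw, it_kw))             # 1.2
    print(net_pue(total_kw, it_kw, 300.0))  # 0.9
```

Under this assumed convention, reclaiming the equivalent of 300 kW of useful heat from a 1.2 PUE facility yields a figure below 1.0.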

Allan da Costa Pinto’s Scaling Facebook Applications in the Azure Cloud – A Success Story and Lessons Learned post of 12/1/2009 describes the following Azure success stories:

Success Story 1 – Thuzi: The first centers around a Facebook application built by Thuzi.com for Outback Steakhouse as part of a marketing promotion – the graph above is part of this story. Jim Zimmerman, CTO/Lead Developer of Thuzi discusses what to watch out for when going live and why a cloud back end was chosen (viral nature of the application, unpredictable and potential geometric adoption). “We don’t know much about running data centers…besides we didn’t want to purchase extra servers ‘just in case’.” [Emphasis Allan’s.]

Success Story 2 – RiskMetrics: The second story is very intriguing to me as I ponder scenarios of “hybrid” workloads - sharing work across on premise and cloud infrastructures. RiskMetrics detailed what they have accomplished and what they continue to work with Microsoft on. …

Allan is a Microsoft Industry Developer Evangelist based in Hartford, CT.

Bruce Kyle offers Everything Developers Need to Know About Azure in this 12/1/2009 list of msdev.com training videos, which range in length from 30 to 60 minutes:

- Windows Azure Overview, a high-level overview for technical decision makers and business development managers.

- The Partner Story for Azure. Two videos drawn from the Worldwide Partner Conference held in July focus on Azure from a partner perspective.

- Windows Azure Fundamentals.

- Developing a Windows Azure Application covers developing a Windows Azure application from File>New.

- Microsoft SQL Azure Overview for the Technical Decision Maker gives you an understanding of SQL Azure and highlights its core functionality.

- Microsoft SQL Azure Overview for Developers covers: SQL Azure Overview, SQL Azure Data Access, SQL Azure Architecture, SQL Azure Provisioning Model.

- Microsoft SQL Azure RDBMS Support covers creating, accessing and manipulating tables, views, indexes, roles, procedures, triggers, and functions; insert, update, and delete operations; and constraints.

- Microsoft SQL Azure Programmability covers how to programmatically interact with SQL Azure using the following APIs: ADO.NET, ODBC, and PHP.

- Microsoft SQL Azure Tooling covers SQLCMD Basics, Deployment, Development, Management, Provisioning.

- Microsoft SQL Azure Security Model covers Authentication, Authorization.

- Windows Azure Storage covers how to use Windows Azure storage to store blobs and tables.

- Windows Azure - Worker Roles and Queue Storage covers Windows Azure queue storage for Web-Worker role communication.

Links to the above, plus the following four videos coming on 12/14/2009, are available at http://www.msdev.com/Directory/SearchResults.aspx?keyword=azure:

- Windows Azure Platform: AppFabric Overview

- Windows Azure Platform: AppFabric Fundamentals

- Windows Azure Platform: Introducing the Service Bus

- Windows Azure Platform: The Access Control Service

Guy Barrette delivers a detailed table of Azure Benefits for MSDN Subscribers in his 11/30/2009 post.

<Return to section navigation list>

Cloud Security and Governance

••• Mitch Wagner asks Can Electronic Medical Records Be Secured? and answers in a feature-length story of 12/5/2009 for InformationWeek’s Healthcare newsletter: “While EMRs promise massive opportunities for patient health benefits and reductions in administrative costs, the privacy and security risks are daunting.”

While electronic medical records promise massive opportunities for patient health benefits and reductions in administrative costs, the privacy and security risks are equally huge.

The Obama administration has set an ambitious goal--to get electronic medical records on file for every American by 2014. The administration is offering powerful incentives: $20 billion in stimulus funds as per the American Recovery and Reinvestment Act (ARRA) of 2009, and stiff Medicare penalties for healthcare providers that fail to implement EMRs after 2014.

EMRs offer tantalizing benefits: Improved efficiency via the elimination of tons of paper files in doctors' offices, and better medical care through the use of the same kinds of database and data mining technologies that are now routine in other industries. One example: EMR systems can flag symptoms and potentially harmful drug interactions that busy doctors might otherwise miss.

But the accompanying privacy and security threats are significant. When completed, the nation's EMR infrastructure will be a massive store of every American's most personal, private information, and a potential target of abuse by marketers, identity thieves, and unscrupulous employers and insurance companies. …

• Lori MacVittie recommends “Using Anonymous Human Authentication to prevent illegitimate access to sites, services, and applications” in her 12/4/2009 No Shirt, No Shoes, No HTTP Service post:

In the “real world” there are generally accepted standards set for access to a business and its services. One of the most common standards is “No shirt, no shoes, no service.” Folks not meeting these criteria are typically not allowed past the doors of a business.

But on the web, access to services is implicit in the fact that the business is offering the service. If the HTTP service is accessible, it’s implicitly allowing connections and providing service without any standard criteria for access. This results in access by more than just customers and potential customers. Bots, spiders, and miscreants are afforded the same access to business services as more desirable visitors. This can unfortunately lead to compromise, theft, and corruption of data via myriad injection and attack methods – many of them automated.

While gating access to services that comes from offering a service is likely not the best solution (although it is a solution), there has to be a way to at least mitigate the automated abuse of open access to services by miscreants that leverage scripting to attack sites. …
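MacVittie’s post doesn’t include code, and the sketch below is not her or F5’s implementation. It illustrates one generic way to gate an HTTP service after a client has passed some human-verification challenge (the challenge itself is assumed and out of scope): the server issues an anonymous, time-limited pass signed with HMAC-SHA256 and refuses requests that don’t carry a valid one. The key, token layout, lifetime and function names are all hypothetical.

```python
import base64
import hashlib
import hmac
import secrets
import time
from typing import Optional

SECRET_KEY = secrets.token_bytes(32)  # hypothetical per-deployment signing key
TOKEN_TTL_SECONDS = 15 * 60           # hypothetical pass lifetime: 15 minutes


def _sign(payload: str) -> bytes:
    """HMAC-SHA256 over the payload, URL-safe base64 encoded."""
    digest = hmac.new(SECRET_KEY, payload.encode("utf-8"), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest)


def issue_pass(now: Optional[float] = None) -> str:
    """Issue an anonymous pass once the (assumed) human challenge succeeds.

    The token holds only a random nonce and an expiry time, not an identity.
    """
    now = time.time() if now is None else now
    payload = f"{secrets.token_hex(8)}.{int(now) + TOKEN_TTL_SECONDS}"
    return f"{payload}.{_sign(payload).decode('ascii')}"


def is_valid_pass(token: str, now: Optional[float] = None) -> bool:
    """Accept only unexpired tokens whose signature matches."""
    now = time.time() if now is None else now
    parts = token.split(".")
    if len(parts) != 3:
        return False
    nonce, expires, signature = parts
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature.encode("utf-8"), _sign(f"{nonce}.{expires}")):
        return False
    try:
        return now < int(expires)
    except ValueError:
        return False
```

A request handler would check the pass (for example, from a cookie) and send clients without a valid one to the challenge page. The gate is only as strong as the challenge behind `issue_pass`.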

• See Chris Hoff’s Great InformationWeek/Dark Reading/Black Hat Cloud & Virtualization Security Virtual Panel on 12/9 post in the Cloud Computing Events section.

Hovhannes Avoyan claims Most Cyber Attacks Can be Prevented with Monitoring in this 12/3/2009 post:

Did you know that nearly half of companies these days are reducing or deferring budgets for IT security, despite growing instances of web incursions into databases and other private information? That’s according to a 2009 study by PricewaterhouseCoopers.

I came across that number while reading a story in Wired that reports on a Senate panel’s finding that 80% of cyber attacks can be prevented. According to Richard Schaeffer, information assurance director for the National Security Agency (NSA), who testified before the Senate Judiciary Subcommittee on Terrorism, Technology and Homeland Security, if network administrators simply instituted proper configuration policies and conducted good network monitoring, most attacks would be prevented. …

Hovhannes is the CEO of Monitis, Inc., which provides the free mon.itor.us service used by OakLeaf to monitor its Azure demo projects.

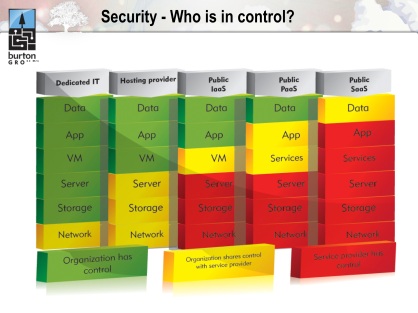

Scott Morrison describes Visualizing the Boundaries of Control in the Cloud in this 12/2/2009 post that carries “Cloud’s biggest problem isn’t security; it’s the continuous noise around security” as a subtitle.

Two weeks ago, I delivered a webinar about new security models in the cloud with Anne Thomas Manes from Burton Group. Anne had one slide in particular, borrowed from her colleague Dan Blum, which I liked so much I actually re-structured my own material around it. Let me share it with you:

This graphic does the finest job I have seen of clearly articulating where the boundaries of control lie under the different models of cloud computing. Cloud, after all, is really about surrendering control: we delegate management of infrastructure, applications, and data to realize the benefits of commoditization. But successful transfer of control implies trust–and trust isn’t something we bestow easily onto external providers. We will only build this trust if we change our approach to managing cloud security.

Cloud’s biggest problem isn’t security; it’s the continuous noise around security that distracts us from the real issues and the possible solutions. It’s not hard to create a jumbled list of things to worry about in the cloud. It is considerably harder to come up with a cohesive model that highlights a fundamental truth and offers a new perspective from which to consider solutions. This is the value of Dan’s stack. …

Pat Romanski’s Forrester: Security Concerns Hinder Cloud Computing Adoption post of 12/2/2009 begins:

Concerns about the security of cloud computing environments top the list of reasons for firms not being interested in the pay-per-use hosting model of virtual servers, according to the latest Enterprise And SMB Hardware Survey, North America And Europe, Q3 2009, by Forrester Research, Inc. (Nasdaq: FORR). Forty-nine percent of survey respondents from enterprises and 51 percent from small and medium-size businesses (SMBs) cited security and privacy concerns as their top reason for not using cloud computing. The survey of more than 2,200 IT executives and technology decision-makers in Canada, France, Germany, the UK, and the US is Forrester’s largest annual survey of emerging hardware trends for both enterprises and SMBs. The survey is part of Forrester’s Business Data Services (BDS) series, which provides an extensive data set for B2B Market Research professionals’ go-to-market strategy assessments. …

Business Wire’s DMTF Announces Partnership with Cloud Security Alliance press release of 12/1/2009 announces:

Distributed Management Task Force (DMTF), the organization bringing the IT industry together to collaborate on systems management standards development, validation, promotion and adoption, today announced that it will partner with the Cloud Security Alliance (CSA) to promote standards for cloud security as part of the ongoing work within the DMTF Open Cloud Standards Incubator. The two groups will collaborate on best practices for managing security within the cloud.

CSA is a non-profit organization, which was formed to promote the use of best practices for providing security assurance within cloud computing, and to educate the industry about the use of cloud computing to help secure other forms of computing. CSA’s membership comprises subject experts from throughout the IT industry.

As DMTF develops its requirements for secure cloud management, it will work with the CSA to utilize best practices and feedback to improve the security, privacy and trust of cloud computing. Both organizations plan to adopt appropriate existing standards, and assist and support recognized bodies that are developing new standards appropriate for cloud computing. Together they will promote a common level of understanding between the consumers and providers of cloud computing regarding necessary security. This partnership will help ensure alignment within the cloud computing industry.

<Return to section navigation list>

Cloud Computing Events

••• Wade Wegner reports on his WI Azure User Group – Windows Azure Platform update presentation in this 12/6/2009 post:

Last week I presented at the Wisconsin Azure User Group for the second time, along with Clark Sell. Our goal was to provide an overview of everything announced at the Professional Developers Conference (PDC) 2009. We made a ton of announcements, and I recommend you check out the Microsoft PDC website for more information, including videos and decks from all the presentations.

Shameless plug: watch my session on migrating applications to the Windows Azure platform with Accenture, CCH, Dominos, and Original Digital – Lessons Learned: Migrating Applications to the Windows Azure Platform.

While I was supposed to only spend twenty minutes talking about updates to the Windows Azure platform, I ended up spending over an hour.

Wade follows with a detailed outline and the slide deck of his presentation.

••• The Institute for Defense and Government Advancement (IDGA) announces its Cloud Computing for DoD & Government to be held on 2/22/ – 2/14/2010 at a venue to be announced in the Washington, DC metropolitan area.

••• IDGA will hold its CloudConnect Event on 3/15 - 3/18/2010 at the Santa Clara Convention Center, Santa Clara, CA. According to IDGA:

Cloud Connect offers a comprehensive conference for IT, executives and developers. Explore benefits and opportunities of cloud computing, learn how to develop successful business models and build relationships, or get in-depth technical information on cloud development:

- The ROI, cost and economics of on-demand computing

- Migration strategies to move from on-premise to cloud-based IT

- Vertical cloud specialization, tailoring features and architectures to specific applications, industries, and customer ecosystems.

- Case studies and lessons learned from those using clouds today

- "Infrastructure 2.0" platforms that are the framework for cloud-enabled organizations

- Industry standards and formal evolution

- "Big picture" futures, such as the impact of clouds on society or the consequences of ubiquitous computing

- Management, security, and compliance issues surrounding third-party services and public infrastructure, as well as policies.

- Dealing with (and profiting from) "big data" platforms and elasticity that clouds enable

• Chris Hoff (@Beaker) announces a Great InformationWeek/Dark Reading/Black Hat Cloud & Virtualization Security Virtual Panel on 12/9 in this 12/3/2009 post:

I wanted to let you know about a cool virtual panel I’m moderating as part of the InformationWeek/Dark Reading/Black Hat virtual event titled “IT Security: The Next Decade” on December 9th.

There are numerous awesome speakers throughout the day, but the panel I’m moderating is especially interesting to me because I was able to get an amazing set of people to participate. Here’s the rundown — check out the panelists:

Virtualization, Cloud Computing, And Next-Generation Security

The concept of cloud computing creates new challenges for security, because sensitive data may no longer reside on dedicated hardware. How can enterprises protect their most sensitive data in the rapidly-evolving world of shared computing resources? In this panel, Black Hat researchers who have found vulnerabilities in the cloud and software-as-a-service models meet other experts on virtualization and cloud computing to discuss the question of cloud computing’s impact on security and the steps that will be required to protect data in cloud environments.

Panelists: Glenn Brunette, Distinguished Engineer and Chief Security Architect, Sun Microsystems; Edward Haletky, Virtualization Security Expert; Chris Wolf, Virtualization Analyst, Burton Group; Jon Oberheide, Security Researcher; Craig Balding, Cloud Security Expert, cloudsecurity.org

Moderator: Christofer Hoff, Contributing Editor, Black Hat

I wanted the perspective of architects/engineers, practitioners, researchers and analysts — and I couldn’t have asked for a better group.

Our session is 6:15 – 7:00 pm EST.

Bruce Kyle suggests that you Join in December Webcast on Securing REST Services in Azure in his 12/2/2009 post to Microsoft’s US ISV Developer Community blog:

You’re invited to join in the Architect Innovation Cafe Web seminar on Securing REST-Based Services with Access Control Service. The Webcast is on December 22, 2009 at 11 am – 12:30pm PST (2 pm – 3:30 pm ET).

This webcast will provide a tour of Access Control Service (ACS) features and demonstrate scenarios where the ACS can be employed to secure REST-based WCF services and other web resources. You’ll learn how to configure ACS, learn how to request a token from the ACS, and learn how applications and services can authorize access based on the ACS token. …

Register here.
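For context on the “authorize access based on the ACS token” step: the current ACS release issues Simple Web Tokens (SWT), which are form-encoded name/value pairs such as Issuer, Audience and ExpiresOn, followed by an HMACSHA256 signature over the rest of the token computed with a key shared by ACS and the service. The Python below is a minimal sketch of how a REST service might validate such a token. The issuer, audience and key values are hypothetical, `sign_swt` exists only to build test tokens, and the webcast and ACS documentation remain the authority on the real protocol.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, quote, unquote

SIGNATURE_FIELD = "&HMACSHA256="


def _hmac_b64(unsigned: str, key: bytes) -> bytes:
    """Base64 HMAC-SHA256 over the unsigned portion of the token."""
    return base64.b64encode(hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest())


def sign_swt(unsigned: str, key: bytes) -> str:
    """Test helper: append a URL-encoded HMACSHA256 signature."""
    return unsigned + SIGNATURE_FIELD + quote(_hmac_b64(unsigned, key).decode("ascii"), safe="")


def validate_swt(token: str, key: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> Optional[dict]:
    """Return the token's claims if signature, issuer, audience and expiry
    all check out; otherwise return None."""
    now = time.time() if now is None else now
    unsigned, sep, signature = token.rpartition(SIGNATURE_FIELD)
    if not sep:
        return None  # no signature present
    if not hmac.compare_digest(unquote(signature).encode("utf-8"), _hmac_b64(unsigned, key)):
        return None  # tampered token or wrong key
    claims = {name: values[0] for name, values in parse_qs(unsigned).items()}
    if claims.get("Issuer") != issuer or claims.get("Audience") != audience:
        return None
    try:
        if now >= int(claims["ExpiresOn"]):  # ExpiresOn is in Unix seconds
            return None
    except (KeyError, ValueError):
        return None
    return claims
```

Checking the signature before trusting any claim matters: the claims are plain text, so only the HMAC proves they came from the party holding the shared key.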

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Maureen O’Gara reports “IBM is beta testing a public cloud, based on customizable virtual machines, for application software development” in her IBM Betas Test & Dev Cloud post of 12/6/2009:

IBM is beta testing a public cloud, based on customizable virtual machines, for application software development.

It’s called plainly enough IBM Smart Business Development and Test on the IBM Cloud and will provide compute and storage as a service with Rational Software Delivery Services, WebSphere middleware and Information Management database thrown in.

The Rational widgetry include[s] a complementary set of ready-to-use application lifecycle management tools.

IBM also said pre-configured integrations of some of these Rational services are available based on the Jazz framework, which dynamically integrates and synchronizes people, processes and assets associated with software development projects.

The services will support development across heterogeneous environments, including Java, open source and .NET.

• Amazon Web Services announces AWS Launches the Northern California Region on 12/4/2009:

Starting today, you can now choose to locate your AWS resources in our Northern California Region, which like other AWS Regions, contains multiple redundant Availability Zones. Utilizing this Region can reduce your data access latencies if you have customers or existing data centers in the Northern California area. This new Region is available for Amazon EC2, Amazon Simple Storage Service (Amazon S3), Amazon SimpleDB, Amazon Simple Queue Service (Amazon SQS), and Amazon Elastic MapReduce. For Northern California Region pricing, please see the detail page for each service at aws.amazon.com/products.

Werner Vogels announces Powerful New Amazon EC2 Boot Features in his 12/3/2009 post:

Today a powerful new feature is available for our Amazon EC2 customers: the ability to boot their instances from Amazon EBS (Elastic Block Store).

Customers like the simplicity of the AMI (Amazon Machine Image) model where they either choose a preconfigured AMI or upload their own AMI into Amazon S3. A wide variety of operating systems and software configurations is available for use. But customers have also asked us for more flexibility and control in the way that Amazon EC2 instances are booted such that they have finer grained control over for example what software configurations and data sets are available to the instance at boot time.

The ability to boot from Amazon EBS gives customers very powerful control over the boot configuration of the Amazon EC2 instances. In the traditional boot process, the root partition of the image will be the local disk, which is created and populated at boot time. In the new Amazon EBS boot process, the root partition is an Amazon EBS volume, which is created at boot time from an Amazon EBS snapshot. Other Amazon EBS volumes beyond the root disk can also be made part of the instance before it is booted. This allows for very fine-grained control of software and data configuration. An additional advantage of using the Amazon EBS boot process is that root partitions are no longer constrained by the size of the local disk and can be up to 1TB in size. And the new boot process is significantly faster because a local disk no longer needs to be populated. …

AWS’ official announcement of 12/3/2009 is here.
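The distinction Vogels describes shows up in the image metadata: EC2’s DescribeImages API reports each image’s root device type as either `ebs` or `instance-store`. As a small sketch that runs without AWS credentials, the function below filters plain dictionaries shaped like the entries boto3’s `describe_images` returns (`ImageId`, `RootDeviceType`). boto3 postdates this announcement and is used only as a familiar reference point; the sample image IDs are made up.

```python
from typing import Iterable, List


def ebs_backed_image_ids(images: Iterable[dict]) -> List[str]:
    """Return IDs of images whose root device is an EBS volume."""
    return [img["ImageId"] for img in images if img.get("RootDeviceType") == "ebs"]


if __name__ == "__main__":
    sample = [  # hypothetical describe_images-style entries
        {"ImageId": "ami-00000001", "RootDeviceType": "ebs"},
        {"ImageId": "ami-00000002", "RootDeviceType": "instance-store"},
    ]
    print(ebs_backed_image_ids(sample))  # ['ami-00000001']
```

Against a live account, the same filtering can be pushed to the service with `describe_images(Owners=["self"], Filters=[{"Name": "root-device-type", "Values": ["ebs"]}])`.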

Brad Reed reports Verizon gets into cloud consulting on 12/2/2009 for Network World:

If you run an IT department, chances are you've been bombarded by vendors hyping their own brand of cloud computing services over the last year. …

In fact, you've probably been told that cloud computing is an ideal solution for businesses that want a massively scalable service that can give them large amounts of computing power on demand without the need to heavily invest in on-site physical infrastructure. And while it sounds good, there are obvious concerns: Cloud computing technology is still relatively new and is fraught with peril, as several high-profile outages and data losses have left many IT departments wary of the technology.

Verizon Business, however, says it's here to help. The company Wednesday launched the Verizon Cloud Computing Program to help IT departments transition their applications to a cloud environment. Bruce Biesecker, a senior strategist at Verizon Business, says the company is employing a "more traditional definition of cloud computing" that involves both a front-end portal that lets users add server capacity and a billing system that charges customers for computing as though it's a utility. As an example, Biesecker points to Verizon's own computing-as-a-service offering that provides on-demand capacity and charges customers only for the time they use the service. …

<Return to section navigation list>