Windows Azure and Cloud Computing Posts for 10/5/2009+

Windows Azure, Azure Data Services, SQL Azure Database and related cloud computing topics now appear in this weekly series.

• Update 10/6/2009: Me: Amazon subsidizes SimpleDB charges; John Moore: Will NHIN be the new Health Internet? Geva Perry: Domain-specific clouds; WSJ Health Blog: Adam Bosworth’s Keas personal health management site; Wally B. McClure: Tweet Master (TwtMstr) Update;

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- .NET Services: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

Azure Blob, Table and Queue Services

• Johnny Halife posted the source code for his waz-blobs Ruby project to GitHub on 10/6/2009. According to Johnny, it’s a:

Windows Azure Blob Storage gem for Ruby. Create containers, create blobs, retrieve them and even more with this gem.

• Gaurav Mantri’s Comparing Windows Azure Storage Management Tools post of 10/6/2009 claims:

As a part of our preparation for the public beta release of Cloud Storage Studio (http://www.cerebrata.com/Blog/post/Cloud-Storage-Studio-is-now-available-in-public-beta.aspx), we did a comparison analysis of some of the tools available in the market which allow you to manage your Azure storage. We focused mainly on the core features available in Windows Azure (like creating tables etc.) and whether the tools have implemented these features.

You can download the comparison as a 45-kB PDF file here.

Mike Amundsen’s HTTP and concurrency essay of 10/4/2009 contends:

[C]oncurrency-checking is essential when handling writes/deletes in all multi-user applications. anyone who implements a multi-user application w/o concurrency-checking is taking extraordinary chances. [W]hen a customer finds out that concurrency-checking is missing, there will be big trouble. [E]specially if the way a customer discovers this problem is when important data gets clobbered due to a concurrency conflict between users.

He then goes on to discuss “pessimistic concurrency”, “optimistic concurrency”, “HTTP and concurrency”, and “Are you handling concurrency properly” topics.
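To make the optimistic-concurrency idea concrete, here is a minimal sketch of an ETag / If-Match style check. It is not taken from Mike's essay: the `ResourceStore` class, the `PreconditionFailed` exception and the `demo` function are hypothetical names, and the store is an in-memory toy rather than a real HTTP server.

```python
import hashlib


class PreconditionFailed(Exception):
    """Raised when the caller's ETag is stale (the HTTP 412 case)."""


class ResourceStore:
    """Toy in-memory store that mimics ETag / If-Match semantics (illustrative only)."""

    def __init__(self):
        self._data = {}

    @staticmethod
    def _etag(body: str) -> str:
        # Derive the tag from the content; real servers may use versions or timestamps.
        return hashlib.sha256(body.encode("utf-8")).hexdigest()[:16]

    def create(self, key: str, body: str) -> str:
        self._data[key] = body
        return self._etag(body)

    def get(self, key: str):
        body = self._data[key]
        return body, self._etag(body)

    def put(self, key: str, body: str, if_match: str) -> str:
        # Reject the write if the resource changed since the caller read it.
        current = self._etag(self._data[key])
        if if_match != current:
            raise PreconditionFailed(f"expected {if_match}, found {current}")
        self._data[key] = body
        return self._etag(body)


def demo() -> str:
    store = ResourceStore()
    store.create("customer/1", "name=Alice")
    _, etag_a = store.get("customer/1")  # user A reads
    _, etag_b = store.get("customer/1")  # user B reads the same version
    store.put("customer/1", "name=Alice Smith", if_match=etag_a)  # A's write succeeds
    try:
        store.put("customer/1", "name=Alicia", if_match=etag_b)  # B's tag is now stale
    except PreconditionFailed:
        return "conflict detected"
    return "data clobbered"
```

Calling `demo()` shows the second writer being rejected instead of silently overwriting the first writer's change, which is the failure the essay warns about.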

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

Dana Gardner presents a Web data services extend data access and distribution beyond the RDB-BI straightjacket podcast of 10/5/2009 with a transcription. The podcast is Part 2 of the series sponsored by Kapow Technologies, with Jim Kobielus, senior analyst at Forrester Research, and Stefan Andreasen, co-founder and chief technology officer at Kapow Technologies.

<Return to section navigation list>

.NET Services: Access Control, Service Bus and Workflow

No significant new posts on this topic today.

<Return to section navigation list>

Live Windows Azure Apps, Tools and Test Harnesses

• Wally McClure (@wbm) updated his Windows Azure Tweet Master (TwtMstr) sample project on 10/6/2009:

According to an e-mail from Wally, TwtMstr sports a new UI and it now has two new search features.

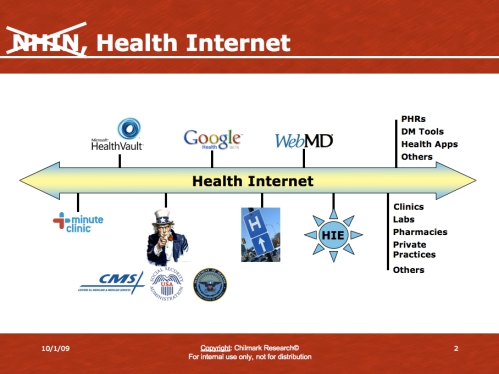

• John Moore’s NHIN: The New Health Internet? post of 10/6/2009 to the Chilmark Research blog claims NHIN is better suited for Personal Health Records (PHR) than clinician-centric Electronic Medical Records (EMR):

Chilmark has not been a big fan of the National Health Information Network (NHIN) concept. It was, and in large part still is, a top heavy federal government effort to create a nationwide infrastructure to facilitate the exchange of clinical information. A high, lofty and admirable goal, but one that is far too in front of where the market is today. The NHIN is like putting in an interstate highway system (something that did not happen until Eisenhower came to office) when we are still traveling by horse and buggy. Chilmark has argued for a more measured approach beginning locally via HIEs established by IDNs (our favorite as there is a clear and compelling business case) and RHIOs in regions where competitors willingly chose not to compete on data, rather seeing value in sharing data.

But what might happen if the folks in DC stopped talking about the NHIN as some uber-Health Exchange, but instead positioned it as a consumer-focused platform? [Emphasis John’s.] …

Image credit: Chilmark

… At the ITdotHealth [National Health IT Forum] event many of the participants (Google Health, HealthVault, MinuteClinic, etc.) stated that they “are in” and are willing to work with the feds to insure that their respective platforms/services will be able to readily connect to and exchange PHI upon a consumer’s request over the Health Internet. Even EMR giant Cerner voted tentative support for the idea if the Health Internet would assist them in helping their customers (clinicians, clinics, hospitals) meet some of the forthcoming meaningful use criteria that is now being formulated by CMS – Chopra at the June CONNECT event and Park at this one basically inferred that providing the capability for an EHR to connect to the Health Internet would address some aspects of meaningful use. …

• WSJ Health Blog’s A New Company from the Former Google Health Guy post of 10/6/2009 reports on the company Adam Bosworth launched after leaving Google:

Adam Bosworth, the guy who used to be Google’s VP of engineering and point man on health stuff, has his own company now — a health site called Keas.

The basic idea is straightforward: Plug in your health data, and get a plan to stay healthy. As your health changes, the plan changes. A profile of the company [by Steve Lohr] lands in this morning’s New York Times, which notes that the startup has some big-name partners: Google Health, Microsoft HealthVault and the big lab company Quest Diagnostics.

Google Health and Microsoft HealthVault aim to be personal health records, digital repositories of people’s health info. “But I decided my focus should be on the other side of the equation — what to do with the data,” Bosworth told the NYT.

Of course, plenty of other people are trying to figure out how to do this sort of thing. As the WSJ’s Kara Swisher noted last week Microsoft just launched “My Health Info,” which is supposed to work with HealthVault to allow people to do research, get guidance and monitor their health.

Quest Diagnostics’ Very Informed Patient (VIP) program enables importing clinical laboratory test results into HealthVault, Google Health, Keas, or MyCare360 PHR accounts if your physician participates in Quest’s Care360 Labs and Meds program. Quest’s Share lab results with patients online page provides details for health care providers, who provide patients with a temporary PIN number to authorize release of lab results to their patients. I’ll provide more details on the Byzantine process for syncing Quest data with HealthVault in a later post.

James Hamilton discusses Microsoft Research’s VL2: A Scalable and Flexible Data Center Network in this 10/5/2009 post:

Data center networks are nowhere close to the biggest cost or even the most significant power consumers in a data center (Cost of Power in Large Scale Data Centers) and yet substantial networking constraints loom large just below the surface. There are many reasons why we need innovation in data center networks but let’s look at a couple I find particularly interesting and look at the solution we offered in a recent SIGCOMM paper VL2: A Scalable and Flexible Data Center Network.

If you are interested in digging deeper into the VL2 approach:

- The VL2 Paper: VL2: A Scalable and Flexible Data Center Network

- An excellent presentation both motivating and discussing VL2: Networking the Cloud

In my view, we are on the cusp of big changes in the networking world driven by the availability of high-radix, low-cost, commodity routers coupled with protocol innovations.

It would be interesting to know if Microsoft’s current data centers implement part or all of the VL2 networking model.

The Windows Azure Team’s Upcoming Changes to Windows Azure Logging post of 10/3/2009, which just appeared on Monday morning, proves that the team listened to developer complaints about Azure’s inelegant and cumbersome logging infrastructure:

At commercial launch, Windows Azure will feature an improved logging system. The new system will give users greater flexibility over what information is logged and how it is collected.

In preparation for the new logging system rollout, next week we will disable the ability to retrieve logs via the Windows Azure Portal. Your existing applications will continue to run without modification.

Why a New Logging System?

In our initial Community Technology Preview, we provided a logging API that allowed developers to write custom messages to an append-only log. The API was built on top of the efficient event tracing capabilities of Windows.

We’re expanding the functionality of the logging system to simplify common use cases. In the new logging system, you’ll have the ability to collect other kinds of data, such as performance counters. You’ll have the ability to automatically push your logs to Windows Azure storage at an interval you specify, in a structured format that’s easy to query. You’ll have the ability to reconfigure your logging on the fly, so you don’t have to decide up-front exactly what data you’ll need to debug problems.

The new logging system retains the best attributes of our initial logging API (simplicity and efficiency) while adding important features to help you build robust and reliable applications on Windows Azure.

Sounds good to me. I was one of the myriad folks who complained about the Byzantine process for monitoring exceptions.

Lucas Mearian’s Report: Lack of eHealth standards, privacy concerns costing lives post of 10/2/2009 to NetworkWorld carries an “Early detection through trending can save thousands of lives” subtitle:

Mining electronic patient data to discover health trends and automate life-saving health alerts for patients and their doctors will be the greatest benefit of electronic medical records (EMR), but a survey released today finds a lack of standards, privacy concerns by hospitals and patients and technology limitations is holding back progress.

Hundreds of billions of gigabytes of health information are now being collected in EMRs, and three-quarters (76%) of more than 700 healthcare executives recently surveyed by PricewaterhouseCoopers LLP agree that mining that information will be their organization's greatest asset over the next five years, both for saving patient lives and saving money.

Glenn Laffel MD, PhD analyzes the same PricewaterhouseCoopers report in his Secondary Health Data a Gold Mine post of 10/5/2009:

With health care organizations collecting hundreds of billions of gigabytes of data per year and the Feds poised to assure widespread dissemination of EHRs at long last, PricewaterhouseCoopers has released a survey of industry executives in which 75% predict their organizations’ data will be their most valuable asset in 5 years.

Secondary use of all that data will, according to the respondents, improve the quality of care and public health, reduce costs and get drugs to market faster and more safely.

In its study, the New York consultancy tallied replies from execs representing 482 providers, 136 insurers and 114 drug/life sciences companies.

Nearly two-thirds of surveyed providers said they already use secondary data to some degree. The numbers were 54% and 66% for payers and pharmaceutical companies, respectively. Respondents noted that in addition to EHRs, secondary data came from claims data, lab and x-ray reports, clinical trials and disease management companies.

<Return to section navigation list>

Windows Azure Infrastructure

• Geva Perry’s The Purpose-Driven Cloud: Part 3 - Domain-Specific Clouds of 10/6/2009 begins:

This is the third post in the series I described in The Purpose-Driven Cloud. The series is an attempt to categorize the various cloud platforms available today (and that may be available in the future). Each installment examines one of the dimensions that differentiates various cloud platforms. Previous posts discussed the Usability-Driven Cloud and Framework-Based Clouds. This post is about:

Domain-Specific Clouds

Unlike general purpose clouds, such as IaaS clouds (for example, Amazon Web Services) and PaaS clouds (e.g., Google App Engine), domain-specific clouds are cloud platforms intended for developing apps (or components of apps) with a particular kind of functionality.

The example that epitomizes this concept is Twilio -- a cloud platform focused entirely on telephony and voice applications, as I described in detail in What's Really Exciting About Cloud Computing. …

David Linthicum explains on 10/5/2009 Why SOA Needs Cloud Computing - Part 1: “IT has become the single most visible point of latency when a business needs to change.” Dave expands on his contention:

It's Thursday morning, you're the CEO of a large, publicly traded company, and you just called your executives into the conference room for the exciting news. The board of directors has approved the acquisition of a key competitor, and you're looking for a call-to-action to get everyone planning for the next steps.

You talk to the sales executives about the integration of both sales forces in three months time, and they are excited about the new prospects. You talk to the HR director who is ready to address the change they need to make in two months. You speak to the buildings and maintenance director who can have everyone moved that needs to be moved in three months. Your heart is filled with pride.

However, when you ask the CIO about changing the core business processes to drive the combined companies, the response is much less enthusiastic. "Not sure we can change our IT architecture to accommodate the changes in less than 18 months, and I'm not even sure if that's possible," says the CIO. "We simply don't have the ability to integrate these systems, nor the capacity. We'll need new systems, a bigger data center..." You get the idea.

As the CEO you can't believe it. While the other departments are able to accommodate the business opportunity in less than five months, IT needs almost two years? …

Frank Gens announces IDC’s New IT Cloud Services Forecast: 2009-2013 in this 10/5/2009 post:

Last year, we published IDC’s first forecast of IT cloud services, focusing on enterprise adoption of public cloud services in five big IT categories through 2012. For the past several months, dozens of IDC analysts have collaborated to refine, deepen and extend our cloud services forecasts. In this post, we share this year’s update of our top-level cloud services forecast, now extended through 2013. [The full forecast, including 2008 as well as 2010-2012, will be published shortly in IDC's Cloud Services: Global Overview subscription program.]

The New Forecast

Here is the new forecast, segmented by offering category, for 2009 and 2013:

These figures represent revenues for offerings delivered via the cloud services model in five major enterprise IT segments (as defined in IDC’s IT market taxonomies): Application Software, Application Development and Deployment Software, Systems Infrastructure Software, and Server and Disk Storage capacity. These figures do not include spending for private cloud deployments; they look only at public IT cloud services offerings. …

David Linthicum observes that SaaS Horror Stories Are Starting to Appear in this 10/5/2009 post:

On Twitter, my fellow cloud guy and twitter buddy, James Urquhart of Cisco, and I were kicking around the notion that few cloud horror stories have yet to emerge. I've seen a few, but most of those who have problems with cloud computing are reluctant to go on record... That is, until this story by Tony Kontzer, who does a great job highlighting some issues that Pulte Homes had with cloud computing, in this case, issues with a SaaS vendor.

"Well over a year ago, Batt told me that his confidence in the cloud had been destroyed. He'd made an aggressive leap by deploying a large IT vendor's on-demand CRM application, imagining all the benefits he'd been told about, both by the vendor and his peers at other companies. He and his staff spent weeks ironing out all the integrations between the CRM application and several other IT systems, a process that proceeded smoothly. But when it came time to make changes to the CRM configuration, all the other applications went down, forcing Batt to uncouple everything and rethink things. It was easy to understand his frustration."

Matt Asay asks Is cloud computing the Hotel California of tech? in this 10/5/2009 post to his The Open Road blog for CNet News:

Depending on your perspective, it either opens up computing or closes it off. Customers don't seem to care one way or another, happily shoveling data into cloud services like Google, Facebook, and others without (yet) wondering what will happen when they want to leave.

Cloud computing may just be the Hotel California of technology.

I say this because even for companies, like Google, that articulate open-data policies, the cloud is still largely a one-way road into Web services, with closed data networks making it difficult to impossible to move data into competing services. Ever tried getting your Facebook data into, say, MySpace? Good luck with that. Social networks aren't very social with one other, as recently noted on the Atonomo.us mailing list.

Carl Brooks contends Private cloud isn’t a new market in this 10/5/2009 post to the IT Business Exchange’s Troposphere blog:

Private cloud is a touchy subject these days. Proponents say it’s inevitable, detractors say it’s all marketing. Enterprises, who are supposed to be clamoring for it, are cautious: hearing endless pitches from endless different angles will do that.

“We only have ourselves to blame,” said Parascale CEO Sanjai Krishnan. He said, month over month, more than half the people he pitches to say they’ve come to learn about cloud and cut through the hype. He said that enterprises are hearing about cloud, but what they’re hearing is, ‘do everything a different way’, and that’s not attractive.

Michael Vizard’s Cloud Computing: Changing the Balance of IT Power essay of 10/5/2009 begins:

Every time there is a major inflexion point in terms of IT, there is usually a corresponding change in competitive edge.

With the arrival of cloud computing as a new type of IT platform, some folks are starting to wonder if we’re about to see a significant narrowing of the IT competitive edge gap between companies that will especially benefit companies in developing countries.

In much the same way people point to rise of mobile phone technology in Asia as an example of how one part of the world took advantage of emerging technology to leapfrog other countries, the same potential now exists with the rise of cloud computing. …

Dan Kusnetzky pans marketing-speak in his Fourth type of cloud computing post of 10/5/2009 to ZDNet’s Virtually Speaking blog:

It is pretty much agreed in the industry that there are three expressions of cloud computing: software-as-a-service (SaaS), platform-as-a-service (PaaS) and infrastructure-as-a-service (IaaS). Several vendors, hoping to gain some marketing and branding traction, have started to call what they’re doing “the fourth type of cloud computing.” For the most part, I don’t believe what they’re offering is the fourth type of cloud computing. …

In the past few weeks, I’ve seen a number of suppliers try to present their service offerings as the fourth instance of cloud computing. In most cases, these services appear to be merely advisory or development consulting services. These services, of course, don’t really meet the minimum requirement to be called an expression of cloud computing.

I’ve thought of a number of possibilities for a fourth type of cloud computing. One example would be a service that would front-end a number of cloud computing offerings allowing an automatic deployment across multiple cloud computing suppliers’ environments. This approach would support workload management, workload service level management, workload failover and the like.

How would you define the fourth type of cloud computing?

Bill Zack’s Fault Tolerance in Windows Azure post of 10/3/2009 to MSDN’s Innov8showcase blog describes recent publications and blog posts about Windows Azure:

Ever since we announced Windows Azure there has been speculation about how reliable it will be and how we will insure that your application will continue to operate reliably in the face of changes to your application or the underlying software. Although we did say that we use multiple role instances and update domains to insure that your allocation has no single point of failure it was not until recently that we exposed more of the details of how this will work.

Now we have published the documentation on how this will work here and there is an excellent blog post here that explains the process in more detail.

There is also a new Deployment and Management API documented here which can be used as an alternative to using the portal for many deployment and management operations. But that will have to wait for another blog post.
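The role of update domains described above can be pictured with a toy simulation. This is a sketch under stated assumptions, not the documented behavior of the Azure fabric: the round-robin assignment, the domain count and the function names `assign_update_domains` and `rolling_upgrade` are all hypothetical.

```python
def assign_update_domains(instance_count: int, domain_count: int) -> dict:
    """Spread role instances across update domains round-robin (an assumption)."""
    return {instance: instance % domain_count for instance in range(instance_count)}


def rolling_upgrade(assignment: dict, domain_count: int) -> list:
    """Return one (domain, offline, online) step per update domain.

    Only the instances in the domain being upgraded go offline at each step.
    """
    steps = []
    for domain in range(domain_count):
        offline = sorted(i for i, d in assignment.items() if d == domain)
        online = sorted(i for i, d in assignment.items() if d != domain)
        steps.append((domain, offline, online))
    return steps


# Four instances in two update domains: every step leaves two instances serving.
plan = rolling_upgrade(assign_update_domains(4, 2), 2)

# A single instance has nothing left online while its domain is upgraded.
single = rolling_upgrade(assign_update_domains(1, 2), 2)
```

In this model a service with at least two instances always has something online during an upgrade, while a single-instance service does not, which is why multiple role instances matter for avoiding a single point of failure.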

Phil Wainewright’s The as-a-service business model post of 10/5/2009 to ZDNet’s Software as Services blog begins:

It’s interesting to see other industries taking a lead from SaaS and learning lessons from what I’ve started calling the ‘as-a-service’ business model. Especially when you consider that most ISVs still haven’t the least idea what SaaS really entails.

An article republished yesterday on paidcontent.org is by guest author Mika Salmi, former president of Global Digital Media at Viacom/MTV. Time To Change The Lens: Media As A Service discusses the impact of digital distribution on the media industry and argues that the software industry’s transition to SaaS illustrates what lies ahead:

“Shipping or downloading a static physical or digital product is a dying business. Pioneers like Salesforce.com, and now Google with their office apps, are showing how a ‘product’ is not a discrete thing. Rather, it’s an ongoing relationship — with continuous updates and two-way communication — with customers and even between customers.”

So now it’s MaaS, or is it XaaS (Everything as a Service)?

<Return to section navigation list>

Cloud Security and Governance

GovInfoSecurity.com offers Jon Oltsik’s A Prudent Approach for Storage Encryption and Key Management whitepaper of May 2009.

<Return to section navigation list>

Cloud Computing Events

• Health Data News presents a Client Computing in the Health Care Industry Web seminar on 10/22/2009 sponsored by Intel and HP:

Innovation is key to healthcare, as is cost reduction. By reviewing the trends in client computing, this web seminar will discuss many of the important elements that are critical for success in client and overall access computing. Among the topics to be discussed are:

- Optimization

- Segmentation

- Innovation

- Virtualization

- Consumerization

- Collaboration

The dialog will focus on the book of the same title which will provide a framework for the ongoing continuous process improvement. The objective is to identify and share in the knowledge transfer and discuss best practices from a practitioner point of view.

Register here.

When: 10/22/2009 11:00 AM PT

Where: Internet (Web seminar)

Yeshim Deniz announces on 10/5/2009 in her Intel, Univa, Diamond Define Cloud Computing Best Practices post:

When: 10/8/2009 11:00 AM PT

A team of industry leaders in cloud computing will be hosting a free webcast to provide insights and best practices on cloud-based IT service delivery. Experts from Intel Corporation, Univa UD and Diamond Management and Technology Consultants will lead the session, which takes place on Thursday, October 8 at 2:00pm ET.

Titled “Cloud as a Service Delivery Platform: Best Practices and Practical Insights,” the webcast will allow participants to get key questions answered about delivering cloud-based application services. Speakers will address lessons learned in both strategic planning and execution phases of cloud service delivery, presenting valuable tips and discussing experiences in a highly interactive forum. The webcast will also present the latest requirements that end users are demanding. …

Webcast presenters:

- Billy Cox, Director, Cloud Strategy and Planning, Software and Services Group, Intel Corporation

- Chris Curran, Partner and CTO, Diamond Management & Technology Consultants

- Jason Liu, CEO, Univa UD

Where: Internet (Webinar)

IBM announces in Cloud Computing “a series of Cloud 101, 201, and 301 sessions to help you understand the benefits of cloud computing, which workloads are best suited for each type of cloud environment, and the architectural design needed for a successful implementation.”

Cloud 101 & 201: Cloud Overview & Business Benefits:

- Choose from one of three sessions

- 06 Oct 2009, 11 a.m. Eastern Standard Time (EST)

- 07 Oct 2009, 5 a.m. Eastern Standard Time (EST)

- 07 Oct 2009, 9 a.m. Eastern Standard Time (EST)

Cloud 301: Technical Attributes of the IBM Cloud Solutions

- Choose from one of three sessions

- 08 Oct 2009, 11 a.m. Eastern Standard Time (EST)

- 09 Oct 2009, 5 a.m. Eastern Standard Time (EST)

- 09 Oct 2009, 9 a.m. Eastern Standard Time (EST)

Liam Cavanaugh’s Sync Framework and our upcoming conferences post of 10/5/2009 announces:

The next few months are going to be very busy for us here in the Sync Framework team. Not only do we have Sync Framework v2 coming out shortly, we also have a number of conferences where we will talk about the work we have been doing with some external companies and internal groups here at Microsoft to allow developers to plug in synchronization to systems like SQL Server and SQL Azure. [Emphasis added.]

Starting in November, we will be at SQL PASS, and then at the PDC for the launch of SQL Azure. Last week I recorded a Channel 9 video along with Buck Woody and Michael Rys on the upcoming SQL PASS Summit. You can view the video here:

Maybe we’ll finally find out more about DataHub at PDC 2009.

When: 11/17 to 11/19/2009

Where: Los Angeles Convention Center, Los Angeles, CA, USA

The PDC 2009 team announced on 9/30 and 10/2/2009 the following new Windows Azure and cloud-related sessions:

REST Services Security Using the Microsoft .NET Access Control Service

Come hear how easy it is to secure REST Web services with the .NET Services Access Control Service (ACS). Learn about ACS fundamentals including how to request and process tokens, how to configure ACS, and how to use ACS to integrate your REST Web service with Active Directory Federation Services. Also see how to apply ACS in a variety of scenarios using a few popular programming models including the Windows Communication Foundation and Microsoft ASP.NET Model-View-Controller (MVC).

Building Hybrid Cloud Applications with the Microsoft .NET Service Bus

Explore patterns, practices, and insights gained from our early adopter programs for how to use the .NET Service Bus to move applications into the cloud or distribute applications across sites while retaining the ability to efficiently communicate between them. Also learn how to disentangle cross-application relationships along the way.

Enabling Single Sign-On to Windows Azure Applications

Learn how the Windows Identity Foundation, Active Directory Federation Services 2.0, and the claims-based architecture can be used to provide a uniform programming model for identity and single sign-on across applications running on-premises and in the cloud.

Bridging the Gap from On-Premises to the Cloud

Hear how Microsoft views the future of cloud computing and how it is starting to deliver this vision in the Windows Azure platform. Learn how applications can be written to preserve much of the investment in code, programming models and tools, yet adapt to the scale-out, distributed, and virtualized environment of the cloud. Also learn about cloud computing's new operational challenges around networking, security, and compliance.

ADO.NET Data Services: What’s new with the RESTful data services framework

Join this code-heavy session to discuss the upcoming version of ADO.NET Data Services, a simple, standards-based RESTful service interface for data access. Come see new features in action and learn how Microsoft products are using ADO.NET Data Services to expose and consume Data Services to achieve their goals around data sharing.

When: 11/17 to 11/19/2009

Where: Los Angeles Convention Center, Los Angeles, CA, USA

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• My Amazon Undercuts Windows Azure Table and SQL Azure Costs with Free SimpleDB Quotas post of 10/5/2009 laments the absence of Microsoft subsidies for Windows Azure development and demonstration instances, data storage, and bandwidth that I proposed in my 9/8/2009 Lobbying Microsoft for Azure Compute, Storage and Bandwidth Billing Thresholds for Developers post:

Jeff Barr’s Don't Forget: You Can Use Amazon SimpleDB For Free! post of 10/2/2009 to the AWS blog reminds potential users:

“You can keep up to 1 gigabyte of data in SimpleDB without paying any storage fees. You can transfer 1 GB of data and use up to 25 Machine Hours to process your queries each month. This should be sufficient to allow you to issue about 2 million PutAttribute or Select calls per month.” [Emphasis Jeff’s.]

While Amazon’s SimpleDB charge threshold doesn’t represent a substantial amount of money, it’s still better than Azure’s pricing for instances, table/blob storage and bandwidth, especially for demo applications or projects in development.
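As a rough sanity check on Jeff's figures, the arithmetic below works out how much machine time each call can consume if 25 Machine Hours are to cover about 2 million calls. The constants come from the quote above; the function name is mine and the even split across calls is a simplifying assumption.

```python
FREE_MACHINE_HOURS = 25        # monthly free quota quoted above
CALLS_PER_MONTH = 2_000_000    # approximate call volume quoted above


def machine_ms_per_call(hours: float, calls: int) -> float:
    """Average machine time available per call, in milliseconds."""
    return hours * 3600 * 1000 / calls


budget_ms = machine_ms_per_call(FREE_MACHINE_HOURS, CALLS_PER_MONTH)
print(budget_ms)  # 45.0
```

In other words, the free quota stretches to roughly 2 million calls only if each call averages about 45 ms of machine time; heavier Select queries would exhaust the 25 hours sooner.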

• Tim Greene’s Bitbucket's downtime is a cautionary cloud tale post of 10/6/2009 to NetworkWorld’s Security blog claims:

Bitbucket’s weekend troubles with Amazon’s cloud services are instructive, but don’t necessarily indicate a problem with cloud security.

The major lesson to learn from Bitbucket’s experience is don’t put all your bits in one bucket. The company, which hosts a Web-based coding environment, entrusted all its network to Amazon’s cloud services, either its EC2 computing resources or its EBS storage service.

Any business that is going to do that needs a plan B for when things go wrong as they did last weekend when Bitbucket apparently suffered a DDoS attack that took Bitbucket and Amazon about 20 hours to diagnose and fix. Meanwhile, customers of Bitbucket couldn’t work on their own projects because they couldn’t reach Bitbucket. …

Symantec announces that it will launch an object-based file storage service in the next year in this somewhat premature press release of 10/5/2009:

Symantec Corp. unveiled a new application that the company promises can help companies build internal highly scalable, high-performance file-based cloud storage systems using commodity server hardware and the arrays of most storage vendors.

At the same time, Sean Derrington, director of storage management and high availability services at Symantec, disclosed that the company also plans to launch an online object-based file storage service, code-named S4, over the next year. Derrington provided few details of the new cloud service, but did note that it will scale to tens of petabytes for corporate users.

I wonder what Amazon thinks about Symantec’s “S4” code-name?

Jeff Barr’s New Elastic MapReduce Goodies: Apache Hive, Karmasphere Studio for Hadoop, Cloudera's Hadoop Distribution post of 10/5/2009 to the Amazon Web Services blog describes presentations at the Hadoop World conference:

Earlier today, Amazon's Peter Sirota took the stage at Hadoop World and announced a number of new goodies for Amazon Elastic MapReduce. Here's what he revealed to the crowd:

Apache Hive Support

Elastic MapReduce now supports Apache Hive. Hive builds on Hadoop to provide tools for data summarization, ad hoc querying, and analysis of large data sets stored in Amazon S3. Hive uses a SQL-based language called Hive QL with support for map/reduce functions and complex extensible user defined data types such as JSON and Thrift. You can use Hive to process structured or unstructured data sources such as log files or text files. Hive is great for data warehousing applications such as data mining and click stream analysis. …

Karmasphere Studio For Hadoop

Amazon Elastic MapReduce is now supported by Karmasphere Studio For Hadoop. Based on the popular NetBeans IDE, Hadoop Studio supports development, debugging, and deployment of job flows directly from your desktop to Elastic MapReduce. …

Cloudera's Hadoop Distribution

Peter also announced a private beta release of Amazon Elastic MapReduce support for Cloudera's Hadoop distribution. Cloudera customers can obtain a support contract to gain access to custom Hadoop patches and help with the development and optimization of processing pipelines. …

Tim Anderson reports Adobe uses Amazon platform for cloud LiveCycle ES2 on 10/5/2009:

Just spotted this from today’s Adobe LiveCycle ES2 announcement:

- Adobe is also announcing the ability for enterprise customers to deploy LiveCycle ES2 as fully managed production instances in the cloud, with 24×7 monitoring and support from Adobe, including product upgrades. LiveCycle ES2 preconfigured instances will be hosted in the Amazon Web Services cloud computing environment.

This is neat: Amazon’s Elastic Compute Cloud handles the infrastructure, but customers get fully supported hosted services from Adobe.

Maintaining a global infrastructure for high-volume cloud services is hugely expensive, which restricts it to a few very large companies. Using Amazon removes that requirement at a stroke. I wonder if Adobe also uses Amazon for Acrobat.com – hosted conferencing and document-based collaboration – or plans to do so?

OpSource claims on 10/5/2009 that their OpSource Cloud (Beta) offers an easy way to provision, deploy and manage your Enterprise-Class applications or your testing and development environments from anywhere, anytime, and:

OpSource Cloud is the first Cloud solution to meet enterprise production application requirements for security, performance and control. Every OpSource Cloud user automatically receives a Virtual Private Cloud which allows them to set their preferred amount of public Internet connectivity. [Emphasis OpSource’s.]

This private network, combined with a compliant multi-tier architecture built on corporate standard technology, makes OpSource Cloud a true extension of enterprise IT. And extensive controls seal the deal for IT management. Finally, OpSource Cloud is available for online purchase by the hour, with an active online community augmenting its 24x7 phone support.

<Return to section navigation list>