Windows Azure and Cloud Computing Posts for 10/7/2013+

Top Stories This Week:

- Satya Nadella (@satyanadella) asserted The Enterprise Cloud takes center stage in a 10/7/2013 post to the Official Microsoft Blog in the Windows Azure Infrastructure and DevOps section.

- Alex Simons (@Alex_A_Simons) posted An update on dates, pricing and sharing some cool data! in the Windows Azure Access Control, Active Directory, and Identity section.

- Paolo Salvatori (@babosbird) announced Service Bus Explorer 2.1 adds support for Notification Hubs and Service Bus 1.1 in a 9/30/2013 post (missed when published) with bug fixes on 10/9/2013 in the Windows Azure Service Bus, BizTalk Services and Workflow section.

A compendium of Windows Azure, Service Bus, BizTalk Services, Access Control, Caching, SQL Azure Database, and other cloud-computing articles.

‡ Updated 10/13/2013 with new articles marked ‡.

• Updated 10/9/2013 with new articles marked •.

Note: This post is updated weekly or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

- Windows Azure SQL Database, Federations and Reporting, Mobile Services

- Windows Azure Marketplace DataMarket, Power BI, Big Data and OData

- Windows Azure Service Bus, BizTalk Services and Workflow

- Windows Azure Access Control, Active Directory, and Identity

- Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

- Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure and DevOps

- Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

- Visual Studio LightSwitch and Entity Framework v4+

- Cloud Security, Compliance and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue, HDInsight and Media Services

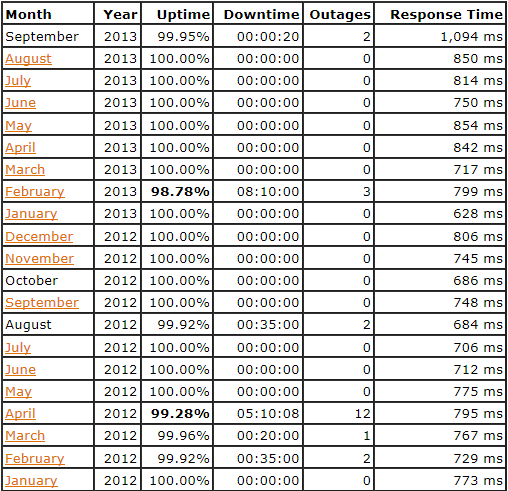

My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: September 2013 = 99.95% post of 10/7/2013 begins:

My (@rogerjenn) live OakLeaf Systems Azure Table Services Sample Project demo project runs two small Windows Azure Web role compute instances from Microsoft’s South Central US (San Antonio, TX) data center. This report now contains more than two full years of uptime data.

…

This is the twenty-eighth uptime report for the two-Web role version of the sample project since it was upgraded to two instances. Uptimes below SLA 99.9% minimums are emphasized. Reports will continue on a monthly basis.

The six-month string of 100% uptime came to an end on 9/4/2013.
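For a sense of scale, here is a small sketch (the function names are illustrative) that converts monthly uptime percentages to minutes of downtime, assuming a 30-day month such as September:

```javascript
// Convert between monthly uptime percentages and minutes of downtime.
// Assumes a 30-day month by default (pass `days` for other month lengths).
function allowedDowntimeMinutes(uptimePercent, days = 30) {
  const totalMinutes = days * 24 * 60; // 43,200 minutes in 30 days
  return totalMinutes * (1 - uptimePercent / 100);
}

// Inverse: uptime percentage for a given number of minutes of downtime.
function uptimePercentFromDowntime(downtimeMinutes, days = 30) {
  const totalMinutes = days * 24 * 60;
  return 100 * (1 - downtimeMinutes / totalMinutes);
}

console.log(allowedDowntimeMinutes(99.9).toFixed(1));  // prints 43.2 (the 99.9% SLA floor)
console.log(allowedDowntimeMinutes(99.95).toFixed(1)); // prints 21.6 (a 99.95% month)
```

Under that assumption, September's 99.95% corresponds to roughly 22 minutes of downtime.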

Philip Fu posted [Sample Of Oct 6th] Azure Storage Backup Sample to the Microsoft All-In-One Code Framework blog on 10/6/2013:

Sample download: http://code.msdn.microsoft.com/CSAzureBackup-78eee246

The sample code demonstrates how to back up Azure Storage in the cloud.

You can find more code samples that demonstrate the most typical programming scenarios by using the Microsoft All-In-One Code Framework Sample Browser or the Sample Browser Visual Studio extension. They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and automatically be notified about sample updates. If this is the first time you have heard of the Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, or read the introduction on our homepage http://1code.codeplex.com/.

Windows Azure SQL Database, Federations and Reporting, Mobile Services

‡ Nick Harris (@cloudnick) and Chris Risner (@chrisrisner) produced CloudCover Episode 116: Cross Platform Notifications using Windows Azure Notifications Hub on 10/11/2013:

In this episode Nick Harris and Chris Risner are joined by Elio Damaggio, a Program Manager on the Notification Hubs team. During this episode Elio demonstrates the following concepts:

- Sending Push Notifications to Windows Store apps with Notification Hubs

- Sending Push Notifications to iOS and Android apps with Notification Hubs

- Using templates to send device agnostic push notifications to all devices connected to a Notification Hub
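As a rough, simplified model of how device-agnostic template notifications work (not the service's implementation): each device registers a platform-specific template containing $(property) placeholders, and the back end sends a single set of properties. The sketch only handles plain $(name) substitution, does no JSON or XML escaping, and the registrations are hypothetical:

```javascript
// Simplified model of template notifications: each device registers a
// platform-specific template with $(property) placeholders, and the sender
// supplies one platform-neutral set of properties.
// Only plain $(name) substitution is modeled; values are not JSON/XML-escaped,
// and replacing a missing property with "" is a choice of this sketch.
function expandTemplate(template, properties) {
  return template.replace(/\$\(([^)]+)\)/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(properties, name) ? String(properties[name]) : ""
  );
}

// Hypothetical registrations for an iOS device and an Android (GCM) device.
const registrations = [
  { platform: "apple", template: '{"aps":{"alert":"$(message)"}}' },
  { platform: "gcm", template: '{"data":{"msg":"$(message)"}}' },
];

// One send, one property bag, one native payload per registration.
const properties = { message: "Thanks for visiting our booth!" };
for (const registration of registrations) {
  console.log(registration.platform, expandTemplate(registration.template, properties));
}
```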

In the News:

- New memory intensive instance for Virtual Machines and Cloud Services

- Support for Oracle VMs and stopped VM management

- Multiple Active Directories and AD Two Factor Authentication

- Restoring your account spending limit

- New storage library

- IP and Domain restrictions for Web Sites

‡ Maarten Balliauw (@maartenballiauw) described Developing Windows Azure Mobile Services server-side in a 10/11/2013 post:

Word of warning: This is a partial cross-post from the JetBrains WebStorm blog. The post you are currently reading adds more information about Windows Azure Mobile Services, builds on a full example and goes a bit more in-depth.

With Microsoft’s Windows Azure Mobile Services, we can build a back-end for iOS, Android, HTML, Windows Phone and Windows 8 apps that supports storing data, authentication, push notifications across all platforms and more. There are client libraries available for all these platforms which can be used when developing in an IDE of choice, e.g. AppCode, Google Android Studio or Visual Studio. In this post, let’s focus on what these different platforms have in common: the server-side code.

This post was sparked by my buddy Kristof Rennen’s session for our user group. During his session he mentioned a couple of times how he dislikes Node.js and the trial-and-error manner of building the server-side due to lack of good tooling. Working for a tooling vendor and intrigued by the quest to find a better way, I decided to post the short article you are currently reading.

Do note that I will focus more on how to get your development environment set up and less on the Windows Azure Mobile Services feature set. Yes, you will learn some of the very basics, but there are way better resources available for getting in-depth knowledge on the topic.

Here’s what we will see in this post:

- Setting up a Windows Azure Mobile Service

- Creating a table and storing data

- A simple HTML/JS client

- Adding logic to our API

- Working on server-side logic with WebStorm

- Sending e-mail using a Node.js module

- Putting our API to the test with the REST client

- Unit testing our logic

The scenario

Doing some exploration is always more fun when we can do it based on a simple scenario. Whenever JetBrains goes to a conference and we have a booth, we like to do a raffle for licenses. The idea is simple: come to our booth for a chat, fill out a simple form and we will pick random names after the conference and send a free license.

For this post, I’ve created a very simple form in HTML and JavaScript, collecting visitor name and e-mail address.

Once someone participates in the raffle, the name and e-mail address are stored in a database and we send out an e-mail thanking that person for visiting the booth together with a link to download a product trial.

Setting up a Windows Azure Mobile Service

First things first: we will require a Windows Azure account to start developing. Next, we can create a new Mobile Service through the Windows Azure Management Portal.

Next, we can give our service a name and pick the datacenter location for it. We also have to provide the type of database we want to use: a free, 20 MB database, or a full-fledged SQL Database. While Windows Azure Mobile Services is always coupled to a database, we can build a custom API with it as well.

Once completed, we get several tabs to work with. There’s the initial welcome screen, displaying links to documentation and client libraries. The other tabs give access to monitoring, scaling, how we want to authenticate users, push notification settings and logs. Since we want to store data of booth visitors, let’s enter the Data tab.

Creating a table and storing data

From the Data tab, we can create a new table. Let’s call it Visitor. When creating a new table, we have to specify access rules for the API that will be available on top of it.

We can tell who can read (API GET request), insert (API POST request), update (API PATCH request) and delete (API DELETE request). Since our application will only insert new data and we don’t want to force booth visitors to log in with their social profiles, we can specify that inserts are allowed when an API key is provided. All other operations will be blocked for outside users: with the above settings, reading, updating and deleting data will only be available through the Windows Azure Management Portal.
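The access rules described above can be modeled roughly as follows. This is a simplified sketch, not the service's implementation: the HTTP-method mapping comes from the post, the level names stand for the portal options (Everyone, Anybody with the Application Key, Only Authenticated Users, Only Scripts and Admins), and treating the master key as passing every check is an assumption:

```javascript
// HTTP method -> table operation, as described in the post.
const OPERATION_BY_METHOD = { GET: "read", POST: "insert", PATCH: "update", DELETE: "delete" };

// Hypothetical permission set matching the Visitor table: only inserts are open,
// and only to callers presenting the application key.
const visitorPermissions = { read: "admin", insert: "application", update: "admin", delete: "admin" };

// caller: { hasAppKey, isAuthenticated, isAdmin } describing the incoming request.
function isAllowed(permissions, method, caller) {
  const operation = OPERATION_BY_METHOD[method];
  if (!operation) return false;      // unknown verbs are rejected
  if (caller.isAdmin) return true;   // assumption: master key / scripts pass every check
  switch (permissions[operation]) {
    case "public":      return true;                            // Everyone
    case "application": return Boolean(caller.hasAppKey);       // Anybody with the Application Key
    case "user":        return Boolean(caller.isAuthenticated); // Only Authenticated Users
    default:            return false;                           // Only Scripts and Admins
  }
}
```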

Do we have to create columns for storing booth visitor data? No: by default, Windows Azure Mobile Services has “dynamic schema” enabled, which means we can throw some JSON at our Mobile Service and it will store the data for us.

A simple HTML/JS client

As promised earlier in this post, let’s see how we can build a simple client for the service we have just created. We’ll go with an HTML and JavaScript based client as it’s fairly easy to demonstrate. Again, have a look at the other client SDKs for the platform you are developing for.

Our HTML page consists of nothing but two text boxes and a button, conveniently named name, email and send. There are two ways of sending data to our Mobile Service: calling the API directly or making use of the client library provided. Both are easy to do: the API lives at https://<servicename>.azure-mobile.net/tables/<tablename> and we can POST a JSON-serialized object to it, an approach we’ll take later in this blog post. There is also a JavaScript client library available from https://<servicename>.azure-mobile.net/client/MobileServices.Web-1.0.0.min.js, which our client is using.
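A minimal sketch of the client-library path, assuming the library's `WindowsAzure.MobileServiceClient` constructor in the browser. The `submitVisitor` helper, the trimming and the required-field check are illustrative additions, and passing the client in keeps the logic testable without the library:

```javascript
// Client-library path for the booth form. In the page, the client would be created as:
//   var client = new WindowsAzure.MobileServiceClient(
//       "https://<servicename>.azure-mobile.net/", "<application key>");
// submitVisitor is a hypothetical helper; the client is passed in so the logic
// can be exercised without the library.
function submitVisitor(client, name, email) {
  const visitor = { name: String(name).trim(), email: String(email).trim() };
  if (!visitor.name || !visitor.email) {
    return Promise.reject(new Error("Name and e-mail address are required"));
  }
  // getTable(...).insert(...) sends the object to the table and returns a promise
  // for the stored item.
  return client.getTable("Visitor").insert(visitor);
}
```

In the page, the send button's click handler would call something like `submitVisitor(client, nameBox.value, emailBox.value)`, where `nameBox` and `emailBox` are the two text boxes.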

As we can see, a new MobileServiceClient is created on which we can get a table reference (getTable) and insert a JSON-formatted object. Do note that we have to pass in an API key in the client constructor, which can be obtained from the Windows Azure Management Portal under the Manage Keys toolbar button.

From the portal, we can now see the data we’re submitting from our simple application:

Adding logic to our API

Let’s make it a bit more exciting! What if we wanted to store a timestamp with every record? We may want to have some insight into when our booth was busiest. We can send a timestamp from the client but that would only add clutter to our client-side code. Also if we wanted to port the HTML/JS client to other platforms it would mean we have to make sure every client sends this data to our mobile service. In short: this calls for some server-side logic.

For every table created, we can make use of the Script tab to add custom logic to read, insert, update and delete operations which we can write in JavaScript. By default, this is what a script for insert may look like:
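The script itself isn't reproduced in this excerpt. For the Node.js back end, the portal's default insert script amounts to the following (shown as a sketch; the generated text may differ slightly):

```javascript
// Default insert script for a Mobile Services table.
// item: the JSON object sent by the client; user: the caller;
// request.execute() performs the actual insert into the table.
function insert(item, user, request) {
    request.execute();
}
```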

The insert function will be called with 3 parameters: the item to be stored (our JSON-serialized object), the current user and the full request. By default, the request.execute() function is called which will make use of the other two parameters internally. Let’s enrich our item with a timestamp.
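A minimal version of that change might look like this. The `insertedAt` property name is an assumption; any name works, since dynamic schema adds the column on the first insert:

```javascript
// Insert script that stamps each visitor record on the server before storing it,
// so no client has to send a timestamp.
function insert(item, user, request) {
    item.insertedAt = new Date(); // hypothetical column name
    request.execute();
}
```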

Hitting Save will deploy this script to our mobile service which from now on will store an inserted timestamp in our database as well.

This is a very trivial example. There are a lot of things that can be done server-side: enforcing validation, record filtering, storing data in other tables as well, sending e-mail or text messages, … Here’s a post with some common scenarios. Full reference to the server-side objects is also available.
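As one illustration of the validation case, here is a sketch of an insert script that rejects incomplete submissions. It assumes `request.respond(statusCode, body)` ends the request without inserting (the runtime also exposes named constants such as `statusCodes.BAD_REQUEST`); the e-mail pattern is deliberately loose and the error text is a placeholder:

```javascript
// Insert script with server-side validation: incomplete submissions get a
// 400 response and are never stored.
function insert(item, user, request) {
    var emailPattern = /^[^@\s]+@[^@\s]+$/; // loose check: something@something
    if (!item.name || !emailPattern.test(item.email || "")) {
        request.respond(400, { error: "A name and a valid e-mail address are required." });
        return;
    }
    item.insertedAt = new Date(); // same timestamp as before
    request.execute();
}
```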

Working on server-side logic with WebStorm

Unfortunately, the in-browser editor for server-side scripts is a bit limited. It features no autocompletion and all code has to go in one file. How would we create shared logic which can be re-used across different scripts? How would we unit test our code? This is where WebStorm comes in. We can access the complete server-side code through a Git repository and work on it in a full IDE!

The Git access to our mobile service is disabled by default. Through the portal’s right-hand side menu, we can enable it by clicking the Set up source control link. Next, we can find repository details from the Configure tab.

We can now use WebStorm’s VCS | Checkout From Version Control | Git menu to bring down the server-side code for our Windows Azure Mobile Service.

In our project, we can see several folders and files. The service/api folder can hold custom APIs (check the readme.md file for more info). service/scheduler can hold scripts that execute at a given time or interval, much like CRON jobs. service/shared can hold shared scripts that can be used inside table logic, custom APIs and scheduler scripts. In the service/table folder we can find the script we have created through the portal: visitor.insert.js. Also note the visitor.json file, which contains the access rules we configured through the portal earlier.

From now on, we can work inside WebStorm and push to the remote Git repository if we want to deploy our new code.

Sending e-mail using a Node.js module

Let’s go back to our initial requirements: whenever someone enters their name and e-mail address in our application, we want to send out an e-mail thanking them for participating. We can do this by making use of an NPM module, for example SendGrid.

Windows Azure Mobile Services comes with some NPM modules preinstalled, like SendGrid and Twilio. However, we want to make sure we are always using the same version of the NPM package, so let’s install it into our project. WebStorm has a built-in package manager to do this; however, Windows Azure Mobile Services requires us to install the module in a non-standard location (the service folder), hence we will use the Terminal tool window to install it.

Once finished, we can start working on our e-mail logic. Since we may want to re-use the e-mail logic (and we want to unit test it later), it’s best to create our logic in the shared folder.

In our shared module, we can make use of the SendGrid module to create and send an e-mail. We can export our sendThankYouMessage function to consumers of our shared module. In the visitor.insert.js script we can require our shared module and make use of the functionality it exposes. And as an added bonus, WebStorm provides us with autocompletion, code analysis and so on.

Once we’ve updated our code, we can transfer our server-side code to Windows Azure Mobile Services. Ctrl+K (or Cmd+K on Mac OS X) allows us to commit and push from within the IDE.

Putting our API to the test with the REST client

Once our changes have been deployed, we can test our API. This can be done using one of the client libraries or by making use of WebStorm’s built-in REST client. From the Tools | Test RESTful Web Service menu we can craft our API calls manually.

We can specify the HTTP method to use (POST since we want to insert) and the URL to our Windows Azure Mobile Services endpoint. In the headers section, we can add a Content-Type header and set it to application/json. We also have to specify an API key in the X-ZUMO-APPLICATION header. This API key can be found in the Windows Azure Management Portal. On the right-hand side we can provide the text to post, in this case a JSON-serialized object with some properties.
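The same request can also be issued from code. A minimal sketch using `fetch` (built into Node.js 18 and later): the URL pattern and headers are the ones described in the post, while the service name, key, the `insertVisitor` name and the injectable `fetchImpl` parameter are placeholders for illustration:

```javascript
// Direct REST call to the table endpoint, mirroring the request built in the REST client.
// `fetchImpl` defaults to the global fetch (Node.js 18+) and can be replaced in tests.
async function insertVisitor(serviceName, applicationKey, visitor, fetchImpl = fetch) {
  const url = `https://${serviceName}.azure-mobile.net/tables/Visitor`;
  const response = await fetchImpl(url, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "X-ZUMO-APPLICATION": applicationKey, // application key from the portal
    },
    body: JSON.stringify(visitor),
  });
  if (!response.ok) {
    throw new Error(`Insert failed with HTTP ${response.status}`);
  }
  return response.json(); // the object returned by the service
}
```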

After running the request, we get back response headers and a response body:

No error message but an object is being returned? Great, that means our code works (and should also be sending out an e-mail). If something does go wrong, the Logs tab in the Windows Azure portal can be a tremendous help in finding out what went wrong.

Through the toolbar on the left, we can export/import requests, making it easy to create a number of predefined requests that can easily be run over and over for testing the REST API.
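The same "predefined requests, run over and over" idea can be kept in code as well. A sketch, assuming an injectable `send` function (for example a `fetch` wrapper); the service URL, key, payloads and expected status codes are placeholders or assumptions to check against real responses:

```javascript
// Placeholder endpoint and key; substitute your own service values.
const serviceUrl = "https://<servicename>.azure-mobile.net";
const appKey = "<application key>";

// Predefined requests. The expected status codes are assumptions (201 Created
// for an insert, 401 for a read the table's permissions do not allow).
const predefinedRequests = [
  { name: "insert visitor", method: "POST", path: "/tables/Visitor",
    body: { name: "Jane", email: "jane@example.com" }, expectedStatus: 201 },
  { name: "read is blocked", method: "GET", path: "/tables/Visitor", expectedStatus: 401 },
];

// Runs each request through `send` and reports pass/fail per request.
async function runPredefinedRequests(requests, send) {
  const results = [];
  for (const r of requests) {
    const response = await send({
      method: r.method,
      url: serviceUrl + r.path,
      headers: { "Content-Type": "application/json", "X-ZUMO-APPLICATION": appKey },
      body: r.body === undefined ? undefined : JSON.stringify(r.body),
    });
    results.push({ name: r.name, status: response.status, passed: response.status === r.expectedStatus });
  }
  return results;
}
```

Against a real service (with the placeholders filled in), `runPredefinedRequests(predefinedRequests, (req) => fetch(req.url, req)).then(console.table)` would print one row per request.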

Unit testing our logic

With WebStorm we can easily test our JavaScript code and custom Node.js modules. Let’s first set up our IDE. Unit testing can be done using the nodeunit testing framework, which we can install using the Node.js package manager.

Next, we can create a new Run Configuration from the toolbar selecting Nodeunit as the configuration type and entering all required configuration details. In our case, let’s run all tests from the test directory.

Next, we can create a folder that will hold our tests and mark it as a Test Source Root (open the context menu and use Mark Directory As | Test Source Root). Tests for Nodeunit are always considered modules and should export their test functions. Here’s a very basic example which tells Nodeunit to expect one assertion, asserts that a boolean is true and marks the test case as completed.

Of course we can also test our business logic. It’s best to create separate modules under the shared folder as they will be easier to unit test. However if you do have to test the actual table scripts (like insert functionality), there is a little trick that allows doing just that. The following snippet exports the insert function outside of the table-specific module:

We can now test the complete visitor.insert.js module and even provide mocks to work with. The following example loads all our modules and sets up test expectations. We’re also overriding specific functionality, such as the sendThankYouMessage function, just to make sure it’s called by our table API logic.

The full source code for both the server-side and client-side application can be found on https://github.com/maartenba/JetBrainsBoothMobileService.

If you would like to learn more about Windows Azure Mobile Services and work with authentication, push notifications or custom APIs, check out the getting started documentation. And if you haven’t already, give WebStorm a try.

Enjoy!

• Kirill Gavrylyuk (@kirillg_msft) and Josh Twist (@joshtwist) will host a Twitter #AzureChat - Windows Azure Mobile Services on 10/10/2013 at 12:30 PM PST, as reported in the Cloud Computing Events section below.

Windows Azure Marketplace DataMarket, Cloud Numerics, Big Data and OData

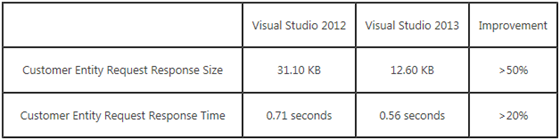

• Matt Sampson described A Slimmer OData Format in his LightSwitch Performance Win in Visual Studio 2013 post of 10/9/2013 in the Visual Studio LightSwitch and Entity Framework v4+ section below.

Philip Fu posted [Sample Of Oct 7th] Using ACS as an OData token issuer to the Microsoft All-In-One Code Framework blog on 10/8/2013:

Sample download: http://code.msdn.microsoft.com/VBAzureACSAndODataToken-406f4fe1

The sample code demonstrates how to invoke a WCF service using an Access Control Service (ACS) token. It includes a Silverlight application and a console application client. The token format is SWT, and the service identities use a password credential.
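The sample appears to be written in VB.NET, judging by its name. To illustrate the SWT format it mentions, here is a minimal Node.js sketch of how a relying party might validate such a token: an SWT is a set of form-encoded name/value pairs whose final pair, HMACSHA256, carries a base64 HMAC-SHA256 signature computed over everything before it with the shared signing key. Issuer and audience checks are left to the caller, and the function names are illustrative:

```javascript
const crypto = require("crypto");

// Decodes one form-encoded component ("+" means space).
function formDecode(value) {
  return decodeURIComponent(value.replace(/\+/g, " "));
}

// Validates a Simple Web Token and returns its claims, or throws.
// signingKeyBase64: the base64-encoded symmetric token-signing key.
// nowSeconds: current time in seconds since 1970-01-01 (injectable for testing).
function validateSwt(token, signingKeyBase64, nowSeconds = Math.floor(Date.now() / 1000)) {
  const marker = "&HMACSHA256=";
  const index = token.lastIndexOf(marker);
  if (index < 0) throw new Error("Token has no HMACSHA256 signature");

  // The signature covers everything before "&HMACSHA256=".
  const unsigned = token.substring(0, index);
  const signature = decodeURIComponent(token.substring(index + marker.length));
  const expected = crypto
    .createHmac("sha256", Buffer.from(signingKeyBase64, "base64"))
    .update(unsigned, "utf8")
    .digest("base64");

  const actualBytes = Buffer.from(signature);
  const expectedBytes = Buffer.from(expected);
  if (actualBytes.length !== expectedBytes.length || !crypto.timingSafeEqual(actualBytes, expectedBytes)) {
    throw new Error("Invalid signature");
  }

  const claims = {};
  for (const pair of unsigned.split("&")) {
    const separator = pair.indexOf("=");
    const name = separator < 0 ? pair : pair.substring(0, separator);
    const value = separator < 0 ? "" : pair.substring(separator + 1);
    claims[formDecode(name)] = formDecode(value);
  }

  // ExpiresOn is expressed in seconds since 1970-01-01 (UTC).
  if (!(Number(claims.ExpiresOn) > nowSeconds)) throw new Error("Token expired or missing ExpiresOn");
  return claims;
}
```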

Windows Azure Service Bus, BizTalk Services and Workflow

‡ Brian Loesgen (@BrianLoesgen) described how to perform an In-place upgrade of ESB Toolkit 2.1 to 2.2 in a 10/10/2013 post:

It seems that most people working with ESB Toolkit 2.2 have done so from a clean install. As I couldn’t find any guidance on how to upgrade in place, I thought I would post the solution to save others some time. We needed to do this because we were upgrading BizTalk Server 2010 to BizTalk Server 2013.

1. Run the ESBTK 2.1 configuration wizard, choose “unconfigure features”, and unconfigure everything.

2. In the BizTalk Admin tool, remove any references that BizTalk applications have to the Microsoft.Practices.ESB BizTalk application.

3. In the BizTalk Admin tool, do a full stop of the Microsoft.Practices.ESB BizTalk application and remove it. Then go to “add/remove programs” and uninstall it.

4. In “add/remove programs”, uninstall the ESB Toolkit.

5. In “add/remove programs”, uninstall Enterprise Library 4.1.

6. (This step is CRUCIAL) From an administrative command prompt, navigate to c:\windows\assembly\GAC_MSIL. Remove any subdirectories that begin with “Microsoft.Practices.ESB” (if you get an “access denied” error, close all programs except the command prompt). See the tip below on creating a batch file.

7. Install Enterprise Library 5.0 (you do not need the source code).

8. Install ESB Toolkit 2.2 from the BizTalk 2013 installation media.

9. Run the ESB Toolkit configuration wizard and configure the toolkit. Be sure to choose “use existing database” for the exception and itinerary databases.

If you miss step 6, the old ESB assemblies will still be found, and they will try to load the EntLib 4.1 assemblies, leading to a baffling error message.

For step 6, we did a DIR and piped the output to a file, which we then took into Excel and cleaned up, creating a batch file to do all the removals for us. Thanks to Sandeep Kesiraju for his help developing the above.
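Step 6 can also be scripted without the round trip through Excel. The following is a minimal Node.js sketch, not the approach used in the post: it lists the matching GAC_MSIL subdirectories and generates (but does not run) a batch file of `rmdir` commands to review first. The function name and defaults are illustrative:

```javascript
const fs = require("fs");
const path = require("path");

// Builds the text of a batch file that removes every GAC_MSIL subdirectory
// whose name starts with the given prefix. It only generates the script;
// review it, then run it from an administrative command prompt.
function buildEsbCleanupBatch(gacDir = "C:\\Windows\\assembly\\GAC_MSIL", prefix = "Microsoft.Practices.ESB") {
  const targets = fs
    .readdirSync(gacDir, { withFileTypes: true })
    .filter((entry) => entry.isDirectory() && entry.name.startsWith(prefix))
    .map((entry) => entry.name)
    .sort();

  const lines = ["@echo off"];
  for (const name of targets) {
    // /s removes the directory tree, /q suppresses the confirmation prompt.
    lines.push(`rmdir /s /q "${path.win32.join(gacDir, name)}"`);
  }
  return lines.join("\r\n") + "\r\n";
}
```

Usage: `fs.writeFileSync("remove-esb-gac.cmd", buildEsbCleanupBatch())`, then inspect the file before running it.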

• Paolo Salvatori (@babosbird) announced Service Bus Explorer 2.1 adds support for Notification Hubs and Service Bus 1.1 in a 9/30/2013 post (missed when published) with bug fixes on 10/9/2013:

The Service Bus Explorer 2.1 uses the Microsoft.ServiceBus.dll client library, which is compatible with Service Bus for Windows Server 1.1 but not with version 1.0. For this reason, I included the old version of the Service Bus Explorer in a zip file called 1.8, which in turn is contained in the zip file of the current version. The old version of the Service Bus Explorer uses Microsoft.ServiceBus.dll 1.8, which is compatible with Service Bus for Windows Server 1.0.

The Service Bus Explorer 2.1 adds support for Service Bus for Windows Server 1.1. In particular, this version introduces the ability to view the information contained in the MessageCountDetails property of QueueDescription, TopicDescription and SubscriptionDescription objects.

- This functionality allows you to clone the selected messages and send them to the same or a different queue or topic in the Service Bus namespace. If you want to edit the payload, system properties or user-defined properties, you have to select, edit and send messages one at a time. To do so, double-click a message in the DataGridView, or right-click the message and click Repair and Resubmit Selected Message from the context menu. This opens a dialog that allows you to modify and resubmit the message or to save the payload to a text file.

Important Note: the Service Bus does not allow you to receive and delete a peeked BrokeredMessage by SequenceNumber. Only deferred messages can be received by SequenceNumber. As a consequence, when editing and resubmitting a peeked message, there's no way to receive and delete the original copy.

- The Service Bus Explorer 2.1 introduces support for Notification Hubs. See the following resources for more information on this topic:

The Notification Hubs node under the namespace root node allows you to manage the notification hubs defined in the Windows Azure Service Bus namespace.

- The context menu allows you to perform the following actions:

- Create Notification Hub: create a new notification hub.

- Delete Notification Hubs: delete all the notification hubs defined in the current namespace.

- Refresh Notification Hubs: refresh the list of notification hubs.

- Export Notification Hubs: export the definition of all the notification hubs to an XML file.

- Expand Subtree: expand the tree under the Notification Hubs node.

- Collapse Subtree: collapse the tree under the Notification Hubs node.

- The Create Notification Hub dialog allows you to define the path, credentials, and metadata for a new notification hub:

- If you click an existing notification hub, you can view and edit credentials and metadata:

- The Authorization Rules tab allows you to review or edit the Shared Access Policies for the selected notification hub.

- The Registrations button opens a dialog that allows you to query registrations:

- You can select one of the following options:

- PNS Handle: this option allows you to retrieve registrations by ChannelUri (Windows Phone 8 and Windows Store app registrations), DeviceToken (Apple registrations), or GcmRegistrationId (Google registrations).

- Tag: this option finds all the registrations sharing the specified tag.

- All: this option retrieves n registrations, where n is specified by the Top Count parameter. This value also specifies the page size: the tool supports paging of registration data and allows you to retrieve more data using the continuation mechanism.

- The Registrations tab allows you to select one or multiple registrations from the DataGridView.

- The navigation control at the bottom of the registrations control allows you to navigate through pages.

- The DataGridView context menu provides access to the following actions:

- Update Selected Registrations: update the selected registrations.

- Delete Selected Registrations: delete the selected registrations.

- The PropertyGrid on the right-hand side allows you to edit the properties (e.g. Tags or BodyTemplate) of an existing registration.

- The Create button allows you to create a new registration. Select the registration type from the drop-down list, enter the mandatory and optional (e.g. Tags, Headers) information, and click OK to confirm.

- The Template tab allows you to send template notifications:

- The Notification Payload read-only textbox shows the payload in JSON format.

- The Notification Properties DataGridView allows you to define the template properties.

- The Notification Tags DataGridView allows you to define one or multiple tags. A separate notification is sent for each tag.

- The Additional Headers DataGridView allows you to define additional custom headers for the notification.

- The Windows Phone tab allows you to send native notifications to Windows Phone 8 devices.

- The Notification Payload textbox shows the payload in JSON format. When the Manual option is selected in the drop-down menu under Notification Template, you can edit or paste the payload directly in the control. When any of the other options (Tile, Toast, Raw) is selected, this field is read-only.

- The Notification Template drop-down list allows you to select between the following types of notification:

- Manual

- Toast

- Tile

- Raw

- When Toast, Tile, or Raw is selected, the DataGridView under the Notification Template section allows you to define the properties for the notification.

- The Notification Tags DataGridView allows you to define one or multiple tags. A separate notification is sent for each tag.

- The Additional Headers DataGridView allows you to define additional custom headers for the notification.

- The Windows tab allows you to send native notifications to Windows Store apps running on Windows 8 and Windows 8.1.

- The Notification Payload textbox shows the payload in JSON format. When the Manual option is selected in the drop-down menu under Notification Template, you can edit or paste the payload directly in the control. When any of the other options (Tile, Toast, Raw) is selected, this field is read-only.

- The Notification Template drop-down list allows you to select between the following types of notification:

- Manual

- Toast templates. For more information, see The toast template catalog (Windows store apps).

- Tile templates. For more information, see The tile template catalog (Windows store apps).

- When Toast, Tile, or Raw is selected, the DataGridView under the Notification Template section allows you to define the properties for the notification.

- The Notification Tags DataGridView allows you to define one or multiple tags. A separate notification is sent for each tag.

- The Additional Headers DataGridView allows you to define additional custom headers for the notification.

- The Apple and Google tabs provide the ability to send Apple and GCM native notifications, respectively. For brevity, I omit the description of the Apple tab, as it works the same way as the Google one.

- The Json Payload textbox allows you to enter the payload in JSON format.

- The Notification Tags DataGridView allows you to define one or multiple tags. A separate notification is sent for each tag.

- The Additional Headers DataGridView allows you to define additional custom headers for the notification.

- Added the possibility to select and resubmit multiple messages in batch mode from the Messages and Deadletter tabs of queues and subscriptions. Just select the messages in the DataGridView, right-click to show the context menu, and choose Resubmit Selected Messages in Batch Mode.
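The registration paging behind the All option in the Registrations dialog can be pictured as a continuation-token loop. This is a generic sketch rather than the tool's code; `fetchPage` is a hypothetical stand-in for whatever call returns one page of registrations plus a continuation token:

```javascript
// Retrieves every registration, `top` at a time, by following continuation tokens.
// fetchPage(top, continuationToken) must resolve to { items, continuationToken },
// with a falsy continuationToken on the last page.
async function getAllRegistrations(fetchPage, top) {
  const all = [];
  let token = null;
  do {
    const page = await fetchPage(top, token);
    all.push(...page.items);
    token = page.continuationToken || null;
  } while (token);
  return all;
}
```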

Minor changes

- Fixed code of the Click event handler for the Default button in the Options Form.

- Replaced the DataContractJsonSerializer with the JavaScriptSerializer class in the JsonSerializerHelper class.

- Fixed a problem when reading Metrics data from the RESTful services exposed by a Windows Azure Service Bus namespace.

- Changed the look and feel of messages in the Messages and Deadletter tabs of queues and subscriptions.

- Introduced indent formatting when showing and editing XML messages.

You can download the tool from MSDN Code Gallery.

Paolo reported bug fixes in the following two tweets of 10/9/2013:

Explorer http://bit.ly/LDOa5i: Fixed a bug that prevented using a SAS connection string to access a namespace.

Posted a new version of #WindowsAzure #ServiceBus Explorer at http://bit.ly/LDOa5i that fixes a bug affecting notification hub registrations.

• Paolo Salvatori (@babosbird) described How to integrate Mobile Services with a LOB app via BizTalk Adapter Service in a 10/9/2013 post:

Introduction

This sample demonstrates how to integrate Windows Azure Mobile Services with a line-of-business application, running on-premises or in the cloud, via Windows Azure BizTalk Services, Service Bus Relayed Messaging, BizTalk Adapter Service and BizTalk Adapter for SQL Server. The Access Control Service is used to authenticate Mobile Services against the XML Request-Reply Bridge used by the solution to transform and route messages to the line-of-business applications. For more information on Windows Azure BizTalk Services and in particular BizTalk Adapter Service, please see the following resources:

- BizTalk Adapter Service Architecture

- Runtime: BizTalk Adapter Service Runtime Components

- Development: Connecting to LOB Applications from a BizTalk Services Project

- BizTalk Adapter Service PowerShell Cmdlets

- Securing Connections with LOB Applications

- BizTalk Adapter Service Troubleshooting

The solution demonstrates how to use a Notification Hub to send a push notification to mobile applications to notify users that a new item is available in the products database. For more information on Notification Hubs, see the following resources:

- Windows Azure Notification Hubs Overview

- Getting Started with Notification Hubs

- Notify users with Notification Hubs

- How to Use Service Bus Notification Hubs

- Push Notifications REST APIs

- Send cross-platform notifications to users with Notification Hubs

You can download the code from MSDN Code Gallery.

Scenario

A mobile service receives CRUD operations from a client application (Windows Phone 8 app, Windows Store app, HTML5/JS web site), but instead of accessing data stored in Windows Azure SQL Database, it invokes a LOB application running in a corporate data center. The LOB system, in this sample represented by a SQL Server database, uses the BizTalk Adapter Service to expose its functionality as a BasicHttpRelayBinding relay service to external applications, and in particular to an XML Request-Reply Bridge running in a Windows Azure BizTalk Services namespace. The endpoint exposed by the BizTalk Adapter Service on Windows Azure Service Bus is configured to authenticate incoming calls using a relay access token issued by ACS. The WCF service created by the BizTalk Adapter Service runs on a dedicated web site on IIS and uses the BizTalk Adapter for SQL Server to access data stored in the ProductDb database hosted by a local instance of SQL Server 2012.

The mobile service custom API acquires a security token from the Access Control Service to authenticate against the BizTalk Service that acts as a message broker and intermediary service towards the LOB application. The server script creates the SOAP envelope for the request message, includes the security token in the HTTP Authorization request header and sends the message to the runtime address of the underlying XML Request-Reply Bridge. The bridge transforms the incoming message into the canonical request format expected by the BizTalk Adapter for SQL Server and promotes its SOAP Action header to the RequestAction property. In the message flow itinerary, the value of this property is used to choose one of four routes (Select, Delete, Update, Create) that connect the bridge to the BizTalk Adapter Service. Each route sets the SOAP Action of the outgoing SOAP request based on the type of the CRUD operation. The BizTalk Adapter Service, running on-premises as a WCF service, accesses the data stored in the ProductDb database on a local instance of SQL Server using the BizTalk Adapter for SQL Server. This data is returned to Windows Azure BizTalk Services in SOAP format. The bridge applies a map to transform the incoming message into the response format expected by the caller, then sends the resulting message back to the mobile service. The mobile service changes the format of the response message from SOAP/XML to JSON, then extracts and sends the result data back to the client application.

NOTE:

- Look at How to integrate a Mobile Service with a SOAP Service Bus Relay Service to see how a custom API can be used to invoke a WCF service that uses a BasicHttpRelayBinding endpoint to expose its functionality via a SOAP Service Bus Relay Service.

- Look at How to integrate a Mobile Service with a REST Service Bus Relay Service to see how a custom API can be used to invoke a WCF service that uses a WebHttpRelayBinding endpoint to expose its functionality via a REST Service Bus Relay Service.
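The Scenario above says the server script builds a SOAP envelope, puts the ACS token in the HTTP Authorization header and posts the message to the bridge's runtime address. A minimal sketch of that step follows, assuming a SOAP 1.1 envelope and the `WRAP access_token="..."` header form used for ACS-protected endpoints. The `buildBridgeRequest` helper, the `GetProduct` body element and action, and the token value are hypothetical, since the sample's actual schemas are not reproduced here; the host name is reused from the wrap_scope example in the message flow. The code only prepares options and a payload for Node's `https.request`; it sends nothing.

```javascript
// Sketch only: builds (but does not send) the HTTPS request a Node.js
// server script could post to an XML Request-Reply Bridge.
// Assumptions: SOAP 1.1 envelope, ACS token sent as WRAP access_token="...".

// Escape characters that cannot appear verbatim in XML text.
function escapeXml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical helper: wraps a request body in a SOAP envelope and returns
// options suitable for https.request() plus the payload to write.
function buildBridgeRequest(bridgeUrl, soapAction, bodyXml, accessToken) {
  const envelope =
    '<s:Envelope xmlns:s="http://schemas.xmlsoap.org/soap/envelope/">' +
    "<s:Body>" + bodyXml + "</s:Body>" +
    "</s:Envelope>";
  const url = new URL(bridgeUrl);
  return {
    options: {
      hostname: url.hostname,
      path: url.pathname + url.search,
      method: "POST",
      headers: {
        "Content-Type": "text/xml; charset=utf-8",
        "Content-Length": Buffer.byteLength(envelope, "utf8"),
        SOAPAction: '"' + soapAction + '"', // SOAP 1.1 quotes the action
        Authorization: 'WRAP access_token="' + accessToken + '"',
      },
    },
    body: envelope,
  };
}

// Example with a hypothetical body element, action and token value.
const request = buildBridgeRequest(
  "https://babonet.biztalk.windows.net/default/ProductService",
  "GetProduct",
  "<GetProduct><ProductId>" + escapeXml(42) + "</ProductId></GetProduct>",
  "token-value"
);
```

With Node's `https` module, `https.request(request.options, onResponse).end(request.body)` would then send it.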

Architecture

The following diagram shows the architecture of the solution.

Message Flow

- The client application (Windows Phone 8 app, Windows Store app or HTML5/JavaScript web site) sends a request via HTTPS to the biztalkproducts custom API of a Windows Azure Mobile Service. The HTML5/JS application uses the invokeApi method exposed by the HTML5/JavaScript client for Windows Azure Mobile Services to call the mobile service. Likewise, the Windows Phone 8 and Windows Store apps use the InvokeApiAsync method exposed by the MobileServiceClient class. The custom API implements CRUD methods to create, read, update and delete data. The HTTP method used by the client application to invoke the user-defined custom API depends on the invoked operation:

- Read: GET method

- Add: POST method

- Update: PUT or PATCH method

- Delete: DELETE method

- The custom API sends a request to the Access Control Service to acquire the security token it needs to be authenticated by the Windows Azure BizTalk Services namespace. The custom API uses the OAuth WRAP protocol to acquire a security token from ACS. In particular, the server script sends a request to ACS using an HTTPS form POST. The request contains the following information:

- wrap_name: the name of a service identity in ACS used to authenticate with the Windows Azure BizTalk Services namespace (e.g. owner)

- wrap_password: the password of the service identity specified by the wrap_name parameter.

- wrap_scope: this parameter contains the relying party application realm. In our case, it contains the public URL of the bridge. (e.g. http://babonet.biztalk.windows.net/default/ProductService)

- ACS issues and returns a security token. For more information on the OAuth WRAP Protocol, see How to: Request a Token from ACS via the OAuth WRAP Protocol.

- The mobile service user-defined custom API performs the following actions:

- Extracts the wrap_access_token from the security token issued by ACS and uses its value to create the Authorization HTTP request header.

- Creates a SOAP envelope to invoke the XML Request-Reply Bridge. The custom API uses a different function to serve the request depending on the HTTP method and parameters sent by the client application.

- getProduct: this function is invoked when the HTTP method is equal to GET and the querystring contains a productid or id parameter.

- getProducts: this function is invoked when the HTTP method is equal to GET and the querystring does not contain any parameter.

- getProductsByCategory: this function is invoked when the HTTP method is equal to GET and the querystring contains a category parameter.

- addProduct: this function is invoked when the HTTP method is equal to POST and the request body contains a new product in JSON format.

- updateProduct: this function is invoked when the HTTP method is equal to PUT or PATCH and the request body contains an existing product in JSON format.

- deleteProduct: this function is invoked when the HTTP method is equal to DELETE and the querystring contains a productid or id parameter.

- Uses the https Node.js module to send the SOAP envelope to the XML Request-Reply Bridge.

- The XML Request-Reply Bridge performs the following actions:

- Validates the incoming request against one of the following XML schemas. For more information, see XML Request-Reply Bridge : Specifying the Schemas for the Request and Response Messages and XML Request-Reply Bridge : Configuring the Validate Stage for the Request Message.

- MobileServiceAddProduct

- MobileServiceDeleteProduct

- MobileServiceUpdateProduct

- MobileServiceGetProduct

- MobileServiceGetProducts

- MobileServiceGetProductsByCategory

- Promotes the SOAP Action of the incoming message with the name RequestAction. For more information, see XML Request-Reply Bridge : Configuring the Enrich Stage for the Request Message.

- Uses one of the following maps to transform the message into the canonical request format expected by the BizTalk Adapter Service. This format is defined by one of the documents defined in the SQLAdapterTableOperation.dbo.Products.xsd XML schema. For more information, see Message Transforms.

- AddProductMap

- DeleteProductMap

- UpdateProductMap

- GetProductMap

- GetProductsMap

- GetProductsByCategoryMap

- The XML Request-Reply Bridge uses the Route Rules and Route Ordering Table to route the request to the BizTalk Adapter Service. The value of the RequestAction promoted property is used to choose one of four routes (Select, Delete, Update, Create) that connect the bridge to the BizTalk Adapter Service. Each route sets the SOAP Action of the outgoing SOAP request based on the type of the CRUD operation:

- TableOp/Insert/dbo/Products

- TableOp/Update/dbo/Products

- TableOp/Delete/dbo/Products

- TableOp/Select/dbo/Products

The configuration file of the destination node in the message flow itinerary defines a BasicHttpRelayEndpoint client endpoint to communicate with the relay service exposed by the BizTalk Adapter Service. For more information, see Including a Two-Way Relay Endpoint and Routing Messages from Bridges to Destinations in the BizTalk Service Project.

- The Two-Way Relay Endpoint sends the request message to the BizTalk Adapter Service.

- The BizTalk Adapter Service uses the BizTalk Adapter for SQL Server to execute the CRUD operation contained in the request message.

- The BizTalk Adapter Service returns a response message to the bridge via relay service.

- The relay service forwards the message to the bridge.

- The XML Request-Reply Bridge performs the following actions:

- Validates the incoming response message against one of the documents defined in the SQLAdapterTableOperation.dbo.Products.xsd XML schema. For more information, see XML Request-Reply Bridge : Specifying the Schemas for the Request and Response Messages and XML Request-Reply Bridge : Configuring the Validate Stage for the Request Message.

- Uses one of the following maps to transform the message into the canonical response format expected by the mobile service. This format is defined by the CalculatorResponse XML schema. For more information, see Message Transforms.

- AddProductResponseMap

- DeleteProductResponseMap

- UpdateProductResponseMap

- GetProductResponseMap

- GetProductsResponseMap

- GetProductsByCategoryResponseMap

- The XML Request-Reply Bridge sends the response message to the mobile service. The mobile service sends a push notification to mobile devices that registered one or more templates (e.g. a toast notification and/or a live tile) with the notification hub. In particular, the notification is sent with a tag equal to productservice. For more information, see Send cross-platform notifications to users with Notification Hubs.

- The custom API performs the following actions:

- Uses the xml2js Node.js module to change the format of the response SOAP message from XML to JSON.

- Flattens the resulting JSON object to eliminate unnecessary arrays.

- Extracts data from the flattened representation of the SOAP envelope and creates a response message as a JSON object.

- Returns data in JSON format to the client application.
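The custom API's choice among getProduct, getProducts, getProductsByCategory, addProduct, updateProduct and deleteProduct can be sketched as a pure function. This is a hypothetical reconstruction from the rules listed in the message flow, not the sample's server script: `selectHandler` and its return shape are assumptions, any GET without an id or category falls through to getProducts, and when both an id and a category are present the id wins, which the text does not specify.

```javascript
// Hypothetical sketch of handler selection in the biztalkproducts custom API.
// method: HTTP verb; query: parsed query-string object; body: parsed JSON or null.
// Returns { name, ... } describing the handler to call, or null if unsupported.
function selectHandler(method, query, body) {
  const q = query || {};
  // The text accepts either "productid" or "id" as the product identifier.
  const id = q.productid !== undefined ? q.productid : q.id;
  switch (method.toUpperCase()) {
    case "GET":
      if (id !== undefined) return { name: "getProduct", id: id };
      if (q.category !== undefined) {
        return { name: "getProductsByCategory", category: q.category };
      }
      return { name: "getProducts" };
    case "POST":
      // A new product is expected in the JSON request body.
      return body ? { name: "addProduct", product: body } : null;
    case "PUT":
    case "PATCH":
      // An existing product is expected in the JSON request body.
      return body ? { name: "updateProduct", product: body } : null;
    case "DELETE":
      return id !== undefined ? { name: "deleteProduct", id: id } : null;
    default:
      return null; // unsupported verb: the caller should answer with an error status
  }
}
```

Keeping the routing decision separate from the SOAP calls makes it easy to test without a bridge or ACS namespace.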

NOTE: the mobile service communicates with client applications using a REST interface and messages in JSON format, while it communicates with the XML Request-Reply Bridge using SOAP messages.
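With its default `explicitArray: true` setting, xml2js wraps every child element in an array and puts attributes under `$`, which is why the custom API flattens the parsed envelope before extracting data. A minimal sketch of such a flattening step follows, assuming single-element arrays should collapse to their only item. The `flatten` function and the element names (GetProductResponse, Product, ProductId, Name) are hypothetical, and the input is a hand-written literal imitating xml2js output rather than a call to the module.

```javascript
// Sketch: collapse the single-element arrays that xml2js produces by default,
// keeping genuine multi-item lists as arrays.
function flatten(node) {
  if (Array.isArray(node)) {
    return node.length === 1 ? flatten(node[0]) : node.map(flatten);
  }
  if (node !== null && typeof node === "object") {
    const result = {};
    for (const key of Object.keys(node)) {
      result[key] = flatten(node[key]);
    }
    return result;
  }
  return node; // strings, numbers, booleans, null
}

// Hand-written stand-in for xml2js output of a hypothetical response envelope:
// attributes land under "$", child elements are wrapped in arrays.
const parsed = {
  "s:Envelope": {
    $: { "xmlns:s": "http://schemas.xmlsoap.org/soap/envelope/" },
    "s:Body": [
      { GetProductResponse: [{ Product: [{ ProductId: ["42"], Name: ["Bike"] }] }] },
    ],
  },
};

const flat = flatten(parsed);
const product = flat["s:Envelope"]["s:Body"].GetProductResponse.Product;
```

One trade-off of this approach: a result list that happens to hold a single product also collapses to an object, so code that always expects an array (for example for getProducts) should re-wrap it.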

Access Control Service

The following diagram shows the steps performed by a WCF service and client to communicate via a Service Bus Relay Service. The Service Bus uses a claims-based security model implemented using the Access Control Service (ACS). The service needs to authenticate against ACS and acquire a token with the Listen claim in order to be able to open an endpoint on the Service Bus. Likewise, when both the service and client are configured to use the RelayAccessToken authentication type, the client needs to acquire a security token from ACS containing the Send claim. When sending a request to the Service Bus Relay Service, the client needs to include the token in the RelayAccessToken element in the Header section of the request SOAP envelope. The Service Bus Relay Service validates the security token and then sends the message to the underlying WCF service. For more information on this topic, see How to: Configure a Service Bus Service Using a Configuration File.
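The OAuth WRAP exchange described in the message flow (wrap_name, wrap_password and wrap_scope in a form POST to ACS; wrap_access_token in the reply) reduces to two small string operations. The sketch below assumes ACS replies with a form-encoded body and that the bridge expects the token as `WRAP access_token="..."` in the Authorization header. Both function names are hypothetical, and the POST to your namespace's ACS WRAP endpoint is deliberately left out.

```javascript
// Sketch of the OAuth WRAP token exchange with ACS (request body + response
// parsing only; the HTTPS POST itself is omitted).

// Form body for the POST (Content-Type: application/x-www-form-urlencoded).
function buildWrapRequestBody(name, password, scope) {
  return new URLSearchParams({
    wrap_name: name,         // service identity, e.g. "owner"
    wrap_password: password, // that identity's password
    wrap_scope: scope,       // relying party realm, e.g. the bridge's public URL
  }).toString();
}

// ACS answers with a form-encoded body such as
// "wrap_access_token=...&wrap_access_token_expires_in=...".
// URLSearchParams splits on "&" first and then percent-decodes each value,
// so encoded "&" and "=" inside the token survive intact.
function toAuthorizationHeader(responseBody) {
  const token = new URLSearchParams(responseBody).get("wrap_access_token");
  if (!token) {
    throw new Error("ACS response did not contain wrap_access_token");
  }
  return 'WRAP access_token="' + token + '"';
}
```

The returned string is what the custom API would place in the Authorization header of its request to the bridge.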

Prerequisites

- Visual Studio 2012 Express for Windows 8

- Windows Azure Mobile Services SDK for Windows 8

- A Windows Azure account (get the Free Trial)

…

Paolo continues with details of Building the Sample.

<Return to section navigation list>

Windows Azure Access Control, Active Directory, and Identity

Alex Simons (@Alex_A_Simons) posted An update on dates, pricing and sharing some cool data! to the Active Directory blog on 10/4/2013:

Back in August I blogged about some new capabilities that we turned on in preview that will simplify managing access to many popular SaaS applications.

Today I want to give you an update on our plans and also share some data demonstrating the tremendous customer growth we are seeing!

Since July we've made major progress in delivering our Application Access Enhancements for Azure AD. We've turned on a preview of our single sign-on capabilities, using both SAML federation and our new password vaulting system. We've turned on our outbound identity provisioning system and have it working with a wide selection of SaaS applications. And as of last Friday, we have completed integrating with 227 SaaS applications!

Now that we are this far along, it is a good time to share some more news with you:

First, we are on track to GA these enhancements before the end of 2013.

Second, we have decided that these enhancements will be free! Yes, that's right, we are going to make them available at no cost.

These free enhancements for Windows Azure AD include:

- SSO to every SaaS app we integrate with – Users get single sign-on to any app we are integrated with at no charge. This includes all the top SaaS apps and every app in our application gallery, whether they use federation or password vaulting. Unlike some of our competitors, we aren't going to charge you per-user or per-app fees for SSO. And with 227 apps in the gallery and growing, you'll have a wide variety of applications to choose from.

- Application access assignment and removal – IT admins can assign access privileges to web applications to the users in their directory, assuring that every employee has access to the SaaS apps they need. And when a user leaves the company or changes jobs, the admin can just as easily remove their access privileges, assuring data security and minimizing IP loss.

- User provisioning (and de-provisioning) – IT admins will be able to automatically provision users in 3rd party SaaS applications like Box, Salesforce.com, GoToMeeting, Dropbox and others. We are working with key partners in the ecosystem to establish these connections, meaning you no longer have to continually update user records in multiple systems.

- Security and auditing reports – Security is always a priority for us. With the free version of these enhancements you'll get access to our standard set of access reports, giving you visibility into which users are using which applications, when they were using them and where they are using them from. In addition, we'll alert you to unusual usage patterns, for instance when a user logs in from multiple locations at the same time. We are doing this because we know security is top of mind for you as well.

- Our Application Access Panel – Users are logging in from every type of device, including Windows, iOS and Android. Not all of these devices handle authentication in the same manner, but the user doesn't care. They need to access their apps from the devices they love. Our Application Access Panel is a single location where each user can easily access and launch their apps.

We will also start a Preview of a premium set of enterprise features in the near future so keep an eye out for that!

In addition to this news, I thought it would be fun to share some of the data we're collecting on the rapidly growing adoption of Windows Azure AD.

As of yesterday, we have processed over 430 billion user authentications in Azure AD, up 43% from June. And last week was the first time that we processed more than 10 billion authentications in a seven-day period. This is a real testament to the level of scale we can handle! You might also be interested to learn that more than 1.4 million businesses, schools, government agencies and non-profits are now using Azure AD in conjunction with their Microsoft cloud service subscriptions, an increase of 100% since July.

And maybe even more amazing is that we now have over 240 million user accounts in Azure AD from companies and organizations in 127 countries around the world. It is a good thing we're up to 14 different data centers – it looks like we're going to need it.

So as you can see, Azure AD is getting a world-wide workout and we're proud of how it's shaping up!

<Return to section navigation list>

Windows Azure Virtual Machines, Virtual Networks, Web Sites, Connect, RDP and CDN

• Kevin Remde (@KevinRemde) answered How safe is my Windows Azure virtual machine? in part 50 of his So Many Questions, So Little Time series on 10/9/2013 in the Cloud Security, Compliance and Governance section below.

<Return to section navigation list>

Windows Azure Cloud Services, Caching, APIs, Tools and Test Harnesses

Michael Washam (@mwashamtx) explained Calling the Windows Azure Management API from PowerShell in a 10/8/2013 post:

Most of the time, using the Windows Azure PowerShell cmdlets will accomplish whatever task you need to automate. However, there are a few cases where calling the API directly is a necessity.

In this post I will walk through using the .NET HttpClient object to authenticate and call the Service Management API, along with a real-world example of creating a Dynamic Routing gateway (because it is not supported in the WA PowerShell cmdlets).

To authenticate to Windows Azure you need the Subscription ID and a management certificate. If you are using the Windows Azure PowerShell cmdlets, you can use the built-in subscription management cmdlets to pull this information.

$sub = Get-AzureSubscription "my subscription"
$certificate = $sub.Certificate
$subscriptionID = $sub.SubscriptionId

For API work my preference is to use the HttpClient class from .NET. So the next step is to create an instance of it and set it up to use the management certificate for authentication.

# Load the HttpClient assemblies in case the session has not loaded them yet
Add-Type -AssemblyName System.Net.Http
Add-Type -AssemblyName System.Net.Http.WebRequest
$handler = New-Object System.Net.Http.WebRequestHandler
# Add the management cert to the client certificates collection
$handler.ClientCertificates.Add($certificate)
$httpClient = New-Object System.Net.Http.HttpClient($handler)
# Set the service management API version
$httpClient.DefaultRequestHeaders.Add("x-ms-version", "2013-08-01")
# WA API only uses XML
$mediaType = New-Object System.Net.Http.Headers.MediaTypeWithQualityHeaderValue("application/xml")
$httpClient.DefaultRequestHeaders.Accept.Add($mediaType)

Now that the HttpClient object is set up to use the management certificate, you need to generate a request.

The simplest request is a GET request because any parameters are just passed in the query string.

# The URI to the API you want to call
# List Services API: http://msdn.microsoft.com/en-us/library/windowsazure/ee460781.aspx
$listServicesUri = "https://management.core.windows.net/$subscriptionID/services/hostedservices"
# Call the API
$listServicesTask = $httpClient.GetAsync($listServicesUri)
$listServicesTask.Wait()
if ($listServicesTask.Result.IsSuccessStatusCode)
{
    # Get the results from the API as XML
    [xml] $result = $listServicesTask.Result.Content.ReadAsStringAsync().Result
    foreach ($svc in $result.HostedServices.HostedService)
    {
        Write-Host $svc.ServiceName " " $svc.HostedServiceProperties.Location
    }
}

However, if you need to do something more complex, like creating a resource, you can do that as well.

For example, the New-AzureVNETGateway cmdlet will create a new gateway for your virtual network, but it was written prior to the introduction of Dynamic Routing gateways (and it has not been updated since…).

If you need to create a new virtual network with a dynamically routed gateway in an automated fashion, calling the API is your only option.

$vnetName = "YOURVNETNAME"
# Create Gateway URI
# http://msdn.microsoft.com/en-us/library/windowsazure/jj154119.aspx
$createGatewayUri = "https://management.core.windows.net/$subscriptionID/services/networking/$vnetName/gateway"
# This is the POST payload that describes the gateway resource
# Note the lower case g in <gatewayType - the documentation on MSDN is wrong here
$postBody = @"
<?xml version="1.0" encoding="utf-8"?>
<CreateGatewayParameters xmlns="http://schemas.microsoft.com/windowsazure">
  <gatewayType>DynamicRouting</gatewayType>
</CreateGatewayParameters>
"@
Write-Host "Creating Gateway for VNET" -ForegroundColor Green
$content = New-Object System.Net.Http.StringContent($postBody, [System.Text.Encoding]::UTF8, "text/xml")
# Add the POST payload to the call
$postGatewayTask = $httpClient.PostAsync($createGatewayUri, $content)
$postGatewayTask.Wait()
# Check status for success and do cool things

So there you have it. When the WA PowerShell cmdlets are behind the times, you can quickly unblock yourself with some direct API intervention.
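For comparison, the same certificate-authenticated call can be prepared from Node.js. This is a sketch that is not part of Michael's post: it assumes the management certificate has been exported with its private key as a .pfx file (Node does not read the Windows certificate store), and `managementRequestOptions` is a hypothetical helper that only builds the options object for `https.request`.

```javascript
// Hypothetical Node.js counterpart of the PowerShell setup above: options for
// https.request() that call the Service Management API with a management
// certificate. Nothing is sent here.
function managementRequestOptions(subscriptionId, resourcePath, pfx, passphrase, method) {
  return {
    hostname: "management.core.windows.net",
    port: 443,
    path: "/" + subscriptionId + resourcePath,
    method: method || "GET",
    pfx: pfx,               // management certificate as PKCS#12 bytes
    passphrase: passphrase, // password protecting the .pfx, if any
    headers: {
      "x-ms-version": "2013-08-01", // same API version header as the script
      Accept: "application/xml",    // the Service Management API uses XML
    },
  };
}

// Usage sketch (not executed):
// const fs = require("fs"), https = require("https");
// https.request(managementRequestOptions(subId, "/services/hostedservices",
//   fs.readFileSync("mgmt.pfx"), "pw"), onResponse).end();
```

For the gateway call you would pass `"/services/networking/" + vnetName + "/gateway"` and `"POST"`, add a `Content-Type: text/xml` header, and write the same XML payload as the request body.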

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

‡ Alexandre Brisebois asserted I Take it Back! Use Windows Azure Diagnostics in a 10/13/2013 post:

I used to create my own logging mechanisms for my Windows Azure Cloud Services. For a while this was the perfect solution to my requirements. But it had a downside: it required cleanup routines and a bit of maintenance.


In the recent months I changed my mind about Windows Azure Diagnostics and if you’re not too adventurous and don’t need your logs available every 30 seconds, I strongly recommend using them. They’ve come such a long way since the first versions that I’m now willing to wait the full minute for my application logs to get persisted to table storage.

The issues I had with Windows Azure Diagnostics were half because of my ignorance and half because of irritating issues that used to exist.

- I didn’t know how to efficiently read from Windows Azure Diagnostics

- Changing Windows Azure Diagnostics configurations used to mean a new release (code changes and deployment)

Gaurav Mantri wrote an excellent blog post about an effective way of fetching diagnostics data from Windows Azure Diagnostics tables. In his post, Gaurav mentions that the table’s PartitionKey is UTC time ticks rounded to the minute. This is actually really practical, because it means that we can query for multiple time spans in parallel.
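Because each PartitionKey covers exactly one minute, a time range can be cut into slices whose key ranges do not overlap, and each slice can be queried on its own. A minimal sketch of that arithmetic follows, assuming the `"0" + ticks` key format used in the C# query below and .NET ticks (100 ns units since 0001-01-01). `toPartitionKey` and `partitionFilters` are hypothetical names; the code only builds OData filter strings and leaves issuing the parallel queries to whichever storage client you use. Plain string comparison works here because every key has the same length.

```javascript
// Sketch: WAD-style PartitionKey values and per-slice range filters.
// Assumption: keys are "0" + .NET UTC ticks rounded down to the minute.

const TICKS_PER_MS = 10000n;                  // 1 ms = 10,000 ticks of 100 ns
const TICKS_PER_MINUTE = 600000000n;          // 60 s * 10,000,000 ticks/s
const UNIX_EPOCH_TICKS = 621355968000000000n; // ticks at 1970-01-01T00:00:00Z
const MS_PER_MINUTE = 60000;

// .NET ticks exceed Number.MAX_SAFE_INTEGER, so BigInt keeps them exact.
function toPartitionKey(date) {
  const ticks = BigInt(date.getTime()) * TICKS_PER_MS + UNIX_EPOCH_TICKS;
  return "0" + (ticks - (ticks % TICKS_PER_MINUTE)).toString();
}

// Cut [start, end] into slices of sliceMinutes whose key ranges do not
// overlap and return one filter string per slice; each string can be
// sent as an independent query, so the slices can run in parallel.
function partitionFilters(start, end, sliceMinutes) {
  const sliceMs = sliceMinutes * MS_PER_MINUTE;
  const filters = [];
  // Align to the minute so the first partial minute is not skipped.
  const first = start.getTime() - (start.getTime() % MS_PER_MINUTE);
  for (let t = first; t <= end.getTime(); t += sliceMs) {
    const last = Math.min(t + sliceMs - MS_PER_MINUTE, end.getTime());
    filters.push(
      "(PartitionKey ge '" + toPartitionKey(new Date(t)) + "') and " +
        "(PartitionKey le '" + toPartitionKey(new Date(last)) + "')"
    );
  }
  return filters;
}

// Example: 00:00:00 to 00:03:05 UTC on 1970-01-01 in two-minute slices.
const filters = partitionFilters(new Date(0), new Date(3 * MS_PER_MINUTE + 5000), 2);
```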

Get Windows Azure Diagnostics Cloud Table Query

Using code from my post about querying over Windows Azure Table Storage Service I created the following example to demonstrate how to query Windows Azure Diagnostics using UTC ticks as the PartitionKey.

using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Table;

// CloudTableQuery<TEntity> is the base class from my earlier Table Storage post
public class GetWindowsAzureDiagnostics<TEntity> : CloudTableQuery<TEntity>
    where TEntity : ITableEntity, new()
{
    private readonly string tableStartPartition;
    private readonly string tableEndPartition;
    private readonly string cacheKey;

    public GetWindowsAzureDiagnostics(DateTime start, DateTime end)
    {
        // WAD partition keys are "0" + UTC ticks rounded down to the minute,
        // so round the start down too, or its first partial minute is skipped
        long startTicks = start.ToUniversalTime().Ticks;
        startTicks -= startTicks % TimeSpan.TicksPerMinute;

        tableStartPartition = "0" + startTicks;
        tableEndPartition = "0" + end.ToUniversalTime().Ticks;

        var queryCacheHint = "GetWindowsAzureDiagnostics"
                             + tableStartPartition
                             + tableEndPartition;
        cacheKey = queryCacheHint;
    }

    public override Task<ICollection<TEntity>> Execute(CloudTable model)
    {
        if (model == null)
            throw new ArgumentNullException("model");

        return Task.Run(() =>
        {
            var condition = MakePartitionKeyCondition();
            var tableQuery = new TableQuery<TEntity>();
            tableQuery = tableQuery.Where(condition);
            return (ICollection<TEntity>)model.ExecuteQuery(tableQuery).ToList();
        });
    }

    public override string GenerateCacheKey(CloudTable model)
    {
        return cacheKey;
    }

    private string MakePartitionKeyCondition()
    {
        // PartitionKey ge start AND PartitionKey le end (string comparison;
        // all keys have the same length, so lexical order matches time order)
        var partitionStarts = TableQuery.GenerateFilterCondition("PartitionKey",
                                                                 QueryComparisons.GreaterThanOrEqual,
                                                                 tableStartPartition);
        var partitionEnds = TableQuery.GenerateFilterCondition("PartitionKey",
                                                               QueryComparisons.LessThanOrEqual,
                                                               tableEndPartition);
        return TableQuery.CombineFilters(partitionStarts, TableOperators.And, partitionEnds);
    }
}

Using the GetWindowsAzureDiagnostics query

public async Task<ICollection<DynamicTableEntity>> GetDiagnostics()
{
    TimeSpan oneHour = TimeSpan.FromHours(1);
    DateTime start = DateTime.UtcNow.Subtract(oneHour);
    var query = new GetWindowsAzureDiagnostics<DynamicTableEntity>(start, DateTime.UtcNow);
    // TableStorageReader is the helper class from my earlier Table Storage post
    return await TableStorageReader.Table("WADLogsTable").Execute(query);
}

Setting Up Windows Azure Diagnostics Configurations

From the Solution Explorer, right-click on the role’s definition located in the Cloud Project.

Then select Properties.

Enable Diagnostics and specify a storage account connection string. Be sure to use a separate account from your application’s production storage account. Each storage account has a target performance of 20,000 transactions per second; therefore, using a different storage account will not penalize your application’s performance.

Click on the Edit button

Use this window to configure the application’s diagnostics.

Updating Windows Azure Diagnostics from Visual Studio’s Server Explorer

From the Server Explorer, select the Cloud Service role and open the context menu.

From this menu, select Update Diagnostics Settings…

In the dialog that opens, you will be able to modify the current Windows Azure Diagnostics configurations. Clicking OK will update the role instances on Windows Azure with the new settings.

Next Steps

Once you’ve gathered Windows Azure Diagnostics for a little while, you will probably want to have a look. To accomplish this you have a couple of options, such as third-party tools. The Azure Management Studio can help you browse the Windows Azure Diagnostics data. On the other hand, if you need something more custom, you can use a method similar to the table storage query from this blog post. I usually query storage directly because I haven’t come across the perfect tool.

How do you work with Windows Azure Diagnostics data?


Satya Nadella (@satyanadella) asserted The Enterprise Cloud takes center stage in a 10/7/2013 post to the Official Microsoft Blog:

The following post is from Satya Nadella, Executive Vice President, Cloud and Enterprise at Microsoft.

Today as we launch our fall wave of enterprise cloud products and services, I’ve been reflecting on how things change. A few years ago when I joined what was then the Server and Tools Business, I had the opportunity to talk to financial analysts about our business. Interestingly, after I covered the trends and trajectory of a $19 billion business – what would independently be one of the top three software companies in the world – there were no questions. Zero.

Well, recently that has changed. As of late, there has been a lot of interest in what I call the commercial business, which spans nearly every area of enterprise IT and represents about 58 percent of Microsoft’s total revenue. It’s a critical business for us, with great momentum and one to which we are incredibly committed.

But as people look at our commercial business in this age of cloud computing, big data and the consumerization of IT, people are asking questions about our future strength in the enterprise. Will Microsoft continue to be at the core of business computing in, say, 10 years? I’ll be honest that there’s a little déjà vu in that question; 10 years ago many people doubted our ability to be an enterprise company and today we surely are. But, it’s a question worth exploring.

My answer is Yes. Yes, I believe we will be at the core of, and in fact lead, the enterprise cloud era. I’ll explain why.

The enterprise move to the cloud is indeed going to be huge – we’re talking about a potential IT market of more than 2 trillion dollars – and that move is just getting started. To be the leader in this next era of enterprise cloud you must:

- Have best-in-class first-party SaaS applications on your cloud

- Operate a public cloud – at massive, global scale – that supports a broad range of third parties

- Deliver true hybrid cloud capabilities that provide multi-cloud mobility

These are the characteristics enterprises need and want as they move to the cloud. So, let’s briefly look at how Microsoft is doing in these areas.

We are delivering best-in-class first-party software-as-a-service applications: business services like Office 365, Yammer and Dynamics CRM, and consumer services like Bing, Outlook.com, Xbox Live and more than 200 other services. The widespread use of services like Office 365 provides a foundation for other critical cloud technologies that enterprises will adopt, such as identity and application management.

We are delivering a global public cloud platform in Windows Azure, the only public cloud with fully supported infrastructure and platform services. Windows Azure is available in 109 countries, including China, and supports eight languages and 19 currencies, all backed by the $15 billion we’ve invested in global datacenter infrastructure. And we’re partnering broadly across the industry to provide third-party software and services in our cloud, from Oracle to Blinkbox and everything in between.

We are also delivering hybrid solutions that help enterprises build their own clouds with consistency, enable them to move without friction across clouds, and let them use the public cloud in conjunction with their own clouds. Our hybrid approach spans infrastructure, application development, data platform and device management, and we build these solutions with the insight we get from running massive first-party applications and our own public cloud. We’re living and learning at cloud scale, and we are engineering on cloud time.

These areas guide our enterprise cloud strategy, investments and execution. These are the criteria by which I evaluate our progress and compare us against our competition, both new and old. I’d say we have done very good work. Do we have room to improve? Always. But at the end of the day, when I look across those three areas, we are the only cloud provider – new or old – who is delivering in all three to help enterprises realize the promise of the cloud.

Our fall wave delivers significant advancements across each of these areas, touching nearly every aspect of IT. The enterprise move to the cloud is on, and today marks a major milestone in that journey.

Steven Martin (@stevemar_msft) posted Announcing Dedicated Federal Cloud and Improved Pricing, Networking, and Identity Options on 10/7/2013:

Enterprises frequently tell us that issues such as price/performance, identity management, security and speed are of utmost importance in their cloud computing journey, and our commitment to partnering with our customers on these issues has never been greater. Today, Satya Nadella reiterated this commitment with a series of announcements supporting our Cloud OS vision, including a number of updates specific to Windows Azure that address these concerns.

Today’s announcements for Windows Azure are:

- Enterprise Savings & Generous Terms. Starting November 1, we’re making it easier than ever for enterprises to use Azure. Their enterprise commitment to Microsoft will bring them our best Azure prices based on their infrastructure spend. These enterprise discounts will be better than Amazon’s on commodity services like compute, storage and bandwidth (WindowsAzure.com will continue to match Amazon on those services). We’ve also extended those same great rates to any unplanned growth they may have on Azure, so they are free to grow significantly in Azure as their organizations demand it. Finally, customers can now pay us at the end of the year for that unplanned growth, as long as that extended use is within a certain threshold. These changes bring significant value to enterprises looking to invest in a cloud platform that will allow them to evolve over time.

- More choice for private, fast cloud access. Today we announced a partnership with Equinix to provide peering exchange locations for low-latency, fast and private connections to the cloud. With more than 950 networks and over 110,000 cross-connects, Equinix has a massive global data center footprint that will give our customers even greater reach and choice. This partnership builds on our recent AT&T announcement, as we strive to offer more choice than any other public cloud vendor and work to bring the cloud to our customers. We will on-board a limited number of early trial customers this year and open up for general availability in the first half of 2014.

- Dedicated cloud environment for government agencies. Like enterprises, the U.S. government is eager to realize the benefits of the cloud, adopting a Cloud First policy for any new investments. Microsoft is committed to supporting these initiatives and today is announcing its plans to offer a dedicated public cloud environment designed to meet the distinct needs of U.S. state, local and federal government agencies. This announcement comes on the heels of last week’s FedRAMP JAB P-ATO for Windows Azure, adding to rapidly accruing momentum showcasing Windows Azure’s leadership in government and cloud security.

- Enterprise-grade identity and access, for free. Last week we posted a blog highlighting Azure AD momentum. In addition, this month Microsoft will begin providing all Windows Azure customers enterprise-grade identity and access with Windows Azure Active Directory, providing a highly secure log-in experience and improved administrator capabilities for cloud applications. Every Azure subscriber will get a tenant for free, and multiple directories can be created under a tenant for maximum efficiency and scale.

Today’s announcements all support our Cloud OS vision, offering our customers consistency across environments and the ability to choose from a variety of clouds: Azure, service provider clouds, or their own data centers. This provides a flexible, simple approach which no other cloud provider in the industry can match. And with more than half of the Fortune 500 using Azure, and a growth rate twice that of the market, it’s clear that demand for this unique approach continues to rise.

Check out a free trial of Windows Azure and see for yourself why enterprises like Aston Martin, 3M, Xerox, United Airlines, Starbucks, Toyota and more are betting on Microsoft’s cloud offerings.


Windows Azure Pack, Hosting, Hyper-V and Private/Hybrid Clouds

Chris Talbot prefixed his Cloud Cruiser to Provide Economic Insights for Azure Users article of 10/9/2013 for the Talkin’ Cloud blog with “Cloud Cruiser is now available on Windows Server 2012 R2 via the Windows Azure Pack. But what's the big deal? Cloud Cruiser is able to provide more insight into the economics of enterprises and hosters that are using cloud services on Azure:”

Need some more information for your customers on the operational and financial aspects of being a Microsoft (MSFT) Windows Azure user? Maybe that kind of knowledge would turn a "maybe" into a "yes" during the sales cycle. That's where Cloud Cruiser may come in handy.

It's not a company that comes up in conversation on Talkin' Cloud too often, but Cloud Cruiser plays in the cloud financial management area. Now it has brought its economic insights to Microsoft Windows Azure customers with the launch of the Windows Azure Pack for Windows Server 2012 R2.

Working directly from the Azure portal, Cloud Cruiser provides customers with advanced financial management, chargeback and cloud billing. The new Azure-based service is being targeted at enterprises, but there doesn't seem to be any reason why it couldn't also work for the midsize or even SMB markets. Cloud service providers (or cloud services brokerages) should be able to make good use of the service as well.

The service enables customers to "seamlessly manage both the operational and financial aspects of their Windows Azure cloud from a single pane of glass." According to Cloud Cruiser, the service helps customers to get answers to a few key questions, including:

- How can I automate my cloud billing or chargeback?

- Is my cloud profitable?

- Who are my top customers by revenue?

- What is the forecasted demand for my services?

- How can I use pricing as a strategic weapon?

"The inclusion of Cloud Cruiser with Windows Server is a clear signal from the largest software company in the world that financial management is integral to every cloud strategy," said Nick van der Zweep, vice president of Strategy for Cloud Cruiser, in a prepared statement. "This relationship helps ensure that businesses can deliver innovative, scalable, and profitable cloud services."

The Windows Server 2012 R2 Windows Azure Pack was released as part of Microsoft's vision to have a consistent cloud operating system platform.

The Microsoft Server and Cloud Platform Team posted Highlights from Oracle OpenWorld 2013 – Blazing New Trails on 10/9/2013:

Recently, Microsoft blazed a new trail with its first-ever sponsored presence at Oracle OpenWorld. This participation celebrated the new partnership between Oracle and Microsoft, which will give customers more flexibility and choice in how they deploy Oracle’s software on Microsoft’s Cloud OS platforms.

The week was punctuated by Brad Anderson’s keynote, which announced the preview availability of Oracle virtual machine images in the Windows Azure image gallery. If you weren’t one of the 10,000 in attendance, you can watch highlights of Brad’s OpenWorld keynote here (a full-length version is also available). Social channels buzzed with surprised and positive reactions to the announcements.

Those reactions were mirrored in the exhibition hall and at Microsoft’s breakout sessions where product experts answered questions ranging from “What is Windows Azure?” to “Do you really have Oracle Linux running in Windows Azure?” (by the way, the answer is YES!).

Has Microsoft and Oracle’s unexpected relationship piqued your interest as well? Try out the new images for yourself. Visit WindowsAzure.com/Oracle for more information and to activate your free trial account.

Microsoft Public Relations asserted “New Windows Server, System Center, Visual Studio, Windows Azure, Windows Intune, SQL Server, and Dynamics solutions will accelerate cloud benefits for customers” in its Microsoft unleashes fall wave of enterprise cloud solutions press release of 10/7/2013:

Microsoft Corp. on Monday announced a wave of new enterprise products and services to help companies seize the opportunities of cloud computing and overcome today’s top IT challenges. Complementing Office 365 and other services, these new offerings deliver on Microsoft’s enterprise cloud strategy.

Satya Nadella, Cloud and Enterprise executive vice president, said, “As enterprises move to the cloud they are going to bet on vendors that have best-in-class software as a service applications, operate a global public cloud that supports a broad ecosystem of third party services, and deliver multi-cloud mobility through true hybrid solutions. If you look across the vendor landscape, you can see that only Microsoft is truly delivering in all of those areas.” More comments from Nadella can be found on The Official Microsoft Blog.

Hybrid infrastructure and modern applications

To help customers build IT infrastructure that delivers continuous services and applications across clouds, on Oct. 18 Microsoft will release Windows Server 2012 R2 and System Center 2012 R2. Together, these new products empower companies to create datacenters without boundaries using Hyper-V for high-scale virtualization; high-performance storage at dramatically lower costs; built-in, software-defined networking; and hybrid business continuity. The new Windows Azure Pack runs on top of Windows Server and System Center, enabling enterprises and service providers to deliver self-service infrastructure and platforms from their datacenters. [Emphasis added.]

Building on these hybrid cloud platforms, customers can use Visual Studio 2013 and the new .NET 4.5.1, also available Oct. 18, to create modern applications for devices and services. As software development becomes pervasive within every company, the new Visual Studio 2013 Modern Lifecycle Management solution helps enable development teams, businesspeople and IT managers to build and deliver better applications, faster.

Enabling enterprise cloud adoption