Windows Azure and Cloud Computing Posts for 11/26/2011+

| “Clouds” by Cannonball Adderley |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Liam Cavanagh (@liamca) explained How to Sort Azure Table Store results Chronologically in an 11/27/2011 post:

I just learned a handy trick for sorting results from the Azure Table Store chronologically. The problem I had is that Windows Azure Tables did not allow me to perform an OrderBy LINQ query on the results that were coming back and by default all of the items were returned sorted by the PartitionKey and RowKey. This meant that the results were coming back lexically sorted which was the opposite order to what I wanted because I wanted to show the top 100 most recently added items first. If I used the default sorting from Azure Tables, I would have to retrieve all the rows within a certain timeframe and then sort them in my code. This is not only inefficient, but also costly because of all the data that would need to be retrieved when I just want the top 100.

The trick I learned to handle this came from this whitepaper which uses a RowKey value of DateTime.MaxValue.Ticks - DateTime.UtcNow.Ticks to allow me to sort the items by an offset time, from newest items to oldest.

For example, to add the row:

    LogEvent logEvent = new LogEvent();
    logEvent.PartitionKey = userName;
    logEvent.RowKey = string.Format("{0:D19}", DateTime.MaxValue.Ticks - DateTime.UtcNow.Ticks);
    logEvent.UserName = userName;
    logEvent.EventType = EventType;
    logEvent.EventValue = EventValue;
    logEvent.EventTime = DateTime.Now;

Then to retrieve the values, I query the results like this:

    string rowKeyToUse = string.Format("{0:D19}", DateTime.MaxValue.Ticks - DateTime.UtcNow.Ticks);
    var results = (from g in tableServiceContext.CreateQuery<LogEvent>("logevent")
                   where g.PartitionKey == User.Identity.Name
                      && g.RowKey.CompareTo(rowKeyToUse) > 0
                   select g).Take(100);

By doing this, I was able to retrieve the results in the correct order, with the most recently added items first, and then limit the results to the most recent 100 rows by using the .Take(100) call.
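To see why the reversed-ticks key sorts newest-first, here is a minimal sketch in Python rather than C#, so the arithmetic can be checked in isolation. The constant is the value of .NET's DateTime.MaxValue.Ticks; the helper names `dotnet_ticks` and `reverse_row_key` are made up for this illustration and are not part of any Azure SDK.

```python
from datetime import datetime, timedelta

# Value of .NET's DateTime.MaxValue.Ticks (ticks are 100 ns units since 0001-01-01).
DOTNET_MAX_TICKS = 3155378975999999999


def dotnet_ticks(moment: datetime) -> int:
    """Approximate .NET ticks for a naive UTC datetime (microsecond precision)."""
    elapsed = moment - datetime(1, 1, 1)
    return (elapsed // timedelta(microseconds=1)) * 10


def reverse_row_key(moment: datetime) -> str:
    """Zero-padded 19-digit key; later moments yield lexically smaller keys."""
    return f"{DOTNET_MAX_TICKS - dotnet_ticks(moment):019d}"


older = reverse_row_key(datetime(2011, 11, 26, 9, 0, 0))
newer = reverse_row_key(datetime(2011, 11, 27, 9, 0, 0))

# Table storage returns rows in ascending RowKey order, which a plain
# string sort imitates here.
keys = sorted([older, newer])
```

After the sort, `keys[0]` is the key for the 11/27 event, so an ascending string comparison returns the newer row first. The fixed width of 19 digits matters because the keys are compared as strings, not as numbers.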

Avkash Chauhan (@avkashchauhan) described Windows Azure Cloud Drive and Snapshots limit[s] in an 11/26/2011 post:

A recent question was raised about Windows Azure Cloud drive and snapshots limits so [here] is the answer:

[A] Windows Azure cloud drive [has] no limit[s] in terms of having snapshots. You can have as many drive snapshots [as] you would want. However, as the count goes up, the performance degrades. To [keep a] cloud drive’s performance up to the mark, keep the snapshot count to 10 or less; with more than 10 snapshots you might start getting poor performance.

Lennart Stoop (@TheAmph) posted A blob storage hive provider for Umbraco 5 (beta) on 11/25/2011:

An introduction into Umbraco Hive and creating a Hive provider which stores media on Azure blob storage.

Related files: download the source code – download the web application

Jupiter is just around the corner

With its first beta release on November 11th the road to Umbraco 5 (aka Jupiter) has brought us very close to its destination. The members of the Umbraco core team have been working really hard on stabilizing the API and many of our beloved (and improved) CMS features are well taking shape.

As Umbraco 5 remains open source all current and future releases are made available through Codeplex which allows developers of the Umbraco community to explore and work with the source code, to contribute and to provide the core team with valuable feedback.

After having attended the awesome Umbraco v5 hackathon organized by BUUG and hosted at our offices in Amsterdam, and also very much inspired by Alex Norcliffe’s talk at the Microsoft offices in Brussels, we really wanted to get our hands dirty and deep dive into Hive!

Umbraco Hive

Many of the recent Umbraco 5 talks and presentations involve Hive, a completely new and extremely powerful concept in the upcoming version. Much unlike a traditional data layer in most n-tier or n-layer (web) applications Hive represents an abstraction layer for developers to plug in multiple, stackable data providers. These Hive providers can pull data (read and/or write) from nearly any data source and allow for smooth integration within the Umbraco back office as well as a transparent way of querying the data in the front-end.

The first beta release comes with three Hive providers, created by the core team:

- A persistence provider that already supports SQL server and SQL CE (which uses NHibernate as ORM)

- An Examine provider for data indexation (which uses the Lucene.NET indexer)

- An IO provider which stores data on the local file system

Basically this means Hive already supports the vast majority of (.NET) based websites.

By the way, didn’t I mention these providers are stackable? In a recent demo (video currently not available yet) Alex quickly swapped the Examine provider with the persistence provider, having Umbraco run entirely on a file index without using a database at all. Although that’s a neat trick by itself, the true power of Hive is the ability to query multiple, stacked providers: imagine having data stored in both a database and a file index while data is always retrieved from the file index in order to gain a performance boost… Hive will make it work.
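The idea of stacked providers can be sketched roughly as follows. This is not the Umbraco 5 Hive API: `DictProvider` and `ProviderStack` are hypothetical names, and the sketch assumes the simplest possible policy, in which reads try each provider in order and writes go to every writable provider.

```python
from typing import Dict, List, Optional


class DictProvider:
    """Hypothetical stand-in for a data provider, backed by an in-memory dict."""

    def __init__(self, name: str, data: Optional[Dict[str, str]] = None,
                 writable: bool = True) -> None:
        self.name = name
        self.data = dict(data or {})
        self.writable = writable

    def get(self, key: str) -> Optional[str]:
        return self.data.get(key)

    def put(self, key: str, value: str) -> None:
        self.data[key] = value


class ProviderStack:
    """Reads try each provider in order; writes go to every writable provider."""

    def __init__(self, providers: List[DictProvider]) -> None:
        self.providers = providers

    def get(self, key: str) -> Optional[str]:
        for provider in self.providers:
            value = provider.get(key)
            if value is not None:
                return value
        return None

    def put(self, key: str, value: str) -> None:
        for provider in self.providers:
            if provider.writable:
                provider.put(key, value)


# The fast file index is consulted first, the database second.
file_index = DictProvider("file-index")
database = DictProvider("database", {"home": "Home page"})
stack = ProviderStack([file_index, database])
stack.put("about", "About us")
```

With this ordering, `stack.get("about")` is answered by the file index, while `stack.get("home")` falls through to the database because the index does not hold that key.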

Community hackathons

In many countries the ongoing v5 hackathons allow Umbraco developers to come together, share experiences, learn and contribute. These sessions are the perfect opportunity to experiment with the various new concepts in v5 such as hive, hive providers, property editors, surface controllers, tree controllers and much more.

During the BUUG hackathon we were able to play around with the WordPress Hive provider created by the Karminator. Basically the provider connects to a WordPress blog and fetches categories and posts which are then loaded into the Umbraco back office. And even though our efforts of making the provider writeable were somewhat fruitless (#h5is) it did allow us to get familiar with Hive and understand how to create and configure a custom Hive provider.

The source code of the WordPress hive provider can be found on bitbucket and has also been added to the Umbraco 5 Contrib project on Codeplex which was introduced during the recent UK hackathon.

Since we weren’t able to attend the Umbraco UK festival and we didn’t want to wait until the next hackathon to further explore v5, we started a somewhat experimental side project: whether or not the websites we create are hosted on Windows Azure we often want to store resources on Windows Azure CDN or blob storage. In Umbraco v5 style sheets, scripts, templates and media uploads are stored on the local file system. All of this is handled by the IO Hive provider, so we decided to create a Hive provider which is to save these files on blob storage, and it works!

As for the more technical part of this post, let’s have a closer look at the setup.

Configuring the Blob Storage Hive provider

When you have a closer look at the Hive configuration file you will notice that a Hive provider can be configured separately for each of the storage types known by Umbraco.

The hive configuration file, located in App_Data\Umbraco\Config

The configuration file allows you to configure the type of the Factory class that is used by Hive to instantiate the provider’s entity repository (more on this later) as well as a reference to the provider configuration section which is located in the web.config. As shown below this configuration section allows users to further configure the Hive provider.

The umbraco configuration section in web.config

As for the blob storage provider we allow users to configure the name of the connection string of the blob storage account (which is to be added to the connection strings section) as well as the name of the blob container.

Creating a custom configuration section is fully supported by the Umbraco v5 Framework and is just a matter of creating/inheriting some classes that will allow the settings to be injected into the Entity repository (handled by IOC, very sweet). Make sure to have a look at the source code to find out exactly how easy it is ;-)

The provider dependency helper is a property of the abstract entity repository

Creating the Blob Storage Hive provider

Although we haven’t gotten around to testing the provider for all storage types just yet, obviously the first thing we had in mind was having the provider handle media file uploads. The one thing which distinguishes media from other storage types is that it uses both a persistence provider and a storage provider. This approach provides a (much needed) separation of the concept of media on the one hand and the actual physical storage on the other hand (I will come back to this in a moment).

As we won’t be replacing the persistence provider we were able to reuse plenty of the code written for the IO provider. So instead of walking through all the code, let’s just have a look at the key differences (and imperfections).

When you create a custom Hive provider you start out with creating an Entity model and a schema, allowing you to convert an arbitrary data model into a model which can be interpreted by Hive. Hive provides base entity classes for both model and schema (currently TypedEntity and EntitySchema) and base repository classes (currently AbstractEntityRepository and AbstractSchemaRepository) which allow Hive to query against model, schema and relations.

The main problem we faced when creating a model and schema for blob storage is that we noticed the core property editor used for uploading files in the back office creates a File model (IO provider). Since we did not want to create a custom upload property editor just yet, we decided to have our Blob model derive from the File model instead.

The Blob typed entity which derives from Umbraco.Persistence.Model.IO.File

Definitely something we will need to reconsider in the future, but for the sake of getting the project off the ground quickly it seems like a fair solution. This is also the reason why we didn’t put too much effort in tailoring the Blob schema just yet (a simple schema with a node name attribute seems to work just fine).

Next we copy/pasted the entity repository used by the IO provider and implemented most of the key logic in having data stored on blob storage. This actually all went pretty smooth and the one thing worth mentioning here is probably the use of Hive ID’s. The IO provider assembles Hive ID’s by normalizing the file path and although I’m sure it makes perfect sense we didn’t get it straight away and decided to implement our own strategy which basically comes down to: blob – container – blob name (which includes the GUID of the persisted media).

Although we haven’t yet fully implemented all of the entity/relation operations (and revision support for that matter) we did implement some of the relation operations which basically allow for the creation of thumbnails (AddRelation and PerformGetChildRelations). Having these implemented was amazingly straightforward by the way, so a big thumbs up to the core team! And again, you should really check out the source code to find out just how easy it was ;-)

The AddRelation method of the Entity Repository

That’s basically it, and now we seriously wanted to test-drive this baby! Unfortunately the upload property editor did require a small fix in order to make it work with our blob storage provider: the code used to create a thumbnail creates a bitmap by passing in a file path. To fix this we just had to replace 1 line of code (maybe 20 characters?) which I think still is impressive considering we are reusing the existing property editor ;-)

A small fix in the core Upload property editor

And it just works!

Media section in a local Umbraco v5 beta installation

Cloudberry explorer showing the media files on blob storage

We were actually somewhat surprised to see it work immediately as we first figured we would still have to make some changes in having the editor display the thumbnails (they’re on blob storage, so what about the URL?) and this is when we found out Umbraco 5 uses a custom mechanism for rendering media, i.e. the following URL will display an image uploaded as media:

http://umb5beta.local/Umbraco/Media/Proxy/media%24empty_root%24%24_p__nhibernate%24_v__guid%24_28a171dda97048f98e6b9fa501792a5b?propertyAlias=uploadedFile

Remember the separation of the concept of media and the actual physical storage I mentioned earlier? Right!

What’s next?

Although we realize this is just a very basic implementation we would certainly love to see it being further developed into a solid Jupiter hive provider, and of course we love to hear your feedback and suggestions.

We will make sure the source code becomes available on the Umbraco 5 contrib project as soon as possible, but you can already just download it here or download a full web application which has the provider already configured (it uses SQL CE so no need to setup a database).

At These Days we are already looking forward to the next release of Jupiter!

<Return to section navigation list>

SQL Azure Database and Reporting

Liam Cavanagh (@liamca) described his new Notification and Job Scheduling Service for SQL Azure on 11/26/2011:

I have been working on a new service for monitoring a SQL Azure database that I call Cotega. The purpose of Cotega is to help SQL Azure database administrators keep a close eye on their database through notifications and job scheduling. SQL Server has a technology called the SQL Server Agent that does a similar set of things but unfortunately there is nothing currently available for SQL Azure to do this type of monitoring. In most cases you can implement this type of functionality within your own Windows Azure worker role, but if you just have a few databases or are just trying things out, it can be time consuming and costly to build and allocate the 2+ worker roles needed to ensure availability.

As I worked on new Azure based services, I found there were a number of basic things that I as the administrator wanted to know about my database. For example:

- Is the database up and running?

- Are users having any connection issues?

- Is my database running out of space?

- Are there any performance issues?

- How many users are active?

On top of that there were a number of scheduled jobs that I wanted to execute, such as:

- Backup (copy) the database on a regular basis

- Execute some clean-up jobs

Right now, the service allows you to define the monitoring and job execution schedules in minute intervals (starting at one minute) and when an event occurs (i.e., Disk space exceeds 950MB) you have the choice of either sending an email alert or running a local stored procedure.
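A minute-interval rule of this kind could be sketched as below. This is only an illustration of the idea, not how Cotega is implemented: `Rule`, `run_due_rules` and the injected `read_size_mb` callable are hypothetical, and the size query is the commonly cited one for SQL Azure, included as a string for context and never executed here.

```python
from dataclasses import dataclass
from typing import Callable, List

# Commonly cited query for a SQL Azure database's size in MB (context only).
SIZE_QUERY_MB = (
    "SELECT SUM(reserved_page_count) * 8.0 / 1024 "
    "FROM sys.dm_db_partition_stats"
)


@dataclass
class Rule:
    name: str
    interval_minutes: int          # schedules are defined in whole minutes
    threshold_mb: float
    action: Callable[[str], None]  # e.g. send an email or run a stored procedure


def run_due_rules(rules: List[Rule], minute: int,
                  read_size_mb: Callable[[], float]) -> List[str]:
    """Check every rule whose interval divides the current minute counter."""
    fired = []
    for rule in rules:
        if minute % rule.interval_minutes != 0:
            continue  # not scheduled to run this minute
        size = read_size_mb()
        if size > rule.threshold_mb:
            rule.action(
                f"{rule.name}: {size:.0f} MB exceeds {rule.threshold_mb:.0f} MB"
            )
            fired.append(rule.name)
    return fired


# Assumed example: check disk space every 5 minutes, alert above 950 MB.
alerts: List[str] = []
disk_rule = Rule("disk-space", 5, 950, alerts.append)
fired_now = run_due_rules([disk_rule], minute=10, read_size_mb=lambda: 975.0)
```

Here the alert action simply appends to a list; in a real worker role it would be replaced by code that sends mail or calls the stored procedure.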

The service is currently in an early Alpha stage and I want to make it clear that although I work for Microsoft, this service has no relationship to Microsoft. Also, if you are interested in this type of scheduling and notification, but would prefer to do it within your own Worker Roles, I am happy to send you the queries and give you some tips on how to get started.

I am now on-boarding a few customers to help verify requirements and capabilities. If you are interested in giving it a try, please let me know.

Liam is a Sr. Program Manager for Microsoft's Emerging Cloud Data Services group covering SQL Azure, the “father” of SQL Azure Data Sync and a major contributor to the Microsoft Sync Framework.

SQLBlog posted a SQL Azure Reporting Server – Windows Azure platform tutorial on 11/26/2011:

Using SQL Azure Reporting, you can deploy a reporting solution to the Windows Azure platform environment. This solution will not only use data from the cloud SQL Azure database, you will also be deploying the actual Azure report up to the cloud environment. This will make the cloud database report accessible to all end users who can access the data via the internet.

In order to create the SQL Azure report, we need to do the following three things:

- Create a new SQL Azure reporting server in Windows Azure platform

- Build a report using SQL Server Business Intelligence Development Studio (BIDS)

- Deploy the SQL Azure report to the cloud environment

Today we are simply going to look at the first step:

Create a new SQL Azure Reporting server in Windows Azure platform

We are assuming you already have a Windows Azure account and that you have already setup a SQL Azure cloud database. If you have not, please read this blog post on the topic.

We login to our Windows Azure account at this site:

Next we are going to create a SQL Azure Reporting server. This will be used to render reports from our SQL Azure cloud database to the Windows Azure platform. From the left menu, click the Reporting tab and then select Create a new SQL Azure Report Server from the right side.

This is shown right below.

Next you will get the SQL Azure Reporting server agreement. Go ahead and click “I agree …” and then click Next. This is shown in the screen shot right below.

In the next section, you can select the SQL Azure Subscription that you would like to use for the cloud database report. Select the Region that the SQL Azure Reporting Server should use. Ideally this location should be the same as the one you selected when you set up your original SQL Azure Server.

Since we are in Dallas TX, we have listed ours as South Central US. This is shown right below.

In the next step, we are going to setup the administrator account for SQL Azure Reporting Server. You will need to have a username and password for this account. We enter our account information in the next dialog box. Click Finish after this.

Next it will go ahead and create the reporting server. In our case, the web service URL of the SQL Azure Report Server is listed below.

https://4z2w-e8fke.reporting.windows.net/ReportServer

After we create the SQL Azure reporting server, we can look at some of the options available to us. When you are logged in your Windows Azure platform account and select the Reporting tab on the left, you will see these options on the Report server taskbar:

Server: Create and delete Report server

Manage User: You can create and delete report users

Data Source: Ability to manage multiple SQL Azure data sources

Folder and Permissions: This can help you organize the reports and control access to them.

After we created the SQL Azure report server, we see the following options for the Reporting Services tab.

Finally, let’s log in to the SQL Azure Report Server. Type the Web service URL for the reporting server that was provided to you, then enter the username and password to log in. Here is what we see on our end.

Once you log in, you should see something like the following. Notice that we do not have any report folders or actual reports created just yet. We will do that in another blog post.

Related links to SQL Azure Reporting Services:

Andy Wu described SQL Azure Reporting with On-premise Report Manager in an 11/17/2011 post (missed when published):

Item management for SQL Azure Reporting can be done by Report Manager!

Steps:

1. Get a SQL Reporting Services instance. You may install it from a SQL Express free download.

2. Configure SSL for Report Manager.

3. Create an aspx page to log on to SQL Azure Reporting. A sample is at the end of this page.

4. Edit rsreportserver.config to configure Report Manager to use SQL Azure Reporting as its Report Server, and use the above page to log on automatically.

An Example:

1. The changed section of rsreportserver.config. Update it with a valid SQL Azure Reporting URL.

    <UI>
      <ReportServerUrl>https://as3hu99nxt.reporting.windows.net/reportserver</ReportServerUrl>
      <CustomAuthenticationUI>
        <loginUrl>/Pages/AzureLogon.aspx</loginUrl>
        <UseSSL>True</UseSSL>
      </CustomAuthenticationUI>
      <PageCountMode>Estimate</PageCountMode>
    </UI>

2. AzureLogon.aspx file, which is attached here. It should be added to the ReportManager/Pages folder. Update it with a valid SQL Azure Reporting URL, user name, and password.

Let me know if you have any questions.

Attachment: AzureLogon.aspx

<Return to section navigation list>

Marketplace DataMarket, Social Analytics and OData

My (@rogerjenn) Twitter Sentiment Analysis: A Brief Bibliography post of 11/26/2011 begins:

Twitter’s TweetStream is a Big Data mother lode that you can filter and mine to gain business intelligence about companies, brands, politicians and other public persona. Social media data is a byproduct of cloud-based Software as a Service (SaaS) Internet sites targeted at consumers, such as Twitter, Facebook, LinkedIn and StackExchange. Filtered versions of this data are worth millions to retailers, manufacturers, pollsters, politicians and political parties, as well as marketing, financial and news analysts.

Sentiment Analysis According to Wikipedia

Wikipedia’s Sentiment Analysis topic begins:

Sentiment analysis or opinion mining refers to the application of natural language processing, computational linguistics, and text analytics to identify and extract subjective information in source materials.

Generally speaking, sentiment analysis aims to determine the attitude of a speaker or a writer with respect to some topic or the overall contextual polarity of a document. The attitude may be his or her judgment or evaluation (see appraisal theory), affective state (that is to say, the emotional state of the author when writing), or the intended emotional communication (that is to say, the emotional effect the author wishes to have on the reader).

Subtasks

A basic task in sentiment analysis[1] is classifying the polarity of a given text at the document, sentence, or feature/aspect level — whether the expressed opinion in a document, a sentence or an entity feature/aspect is positive, negative, or neutral. Advanced, "beyond polarity" sentiment classification looks, for instance, at emotional states such as "angry," "sad," and "happy."

Early work in that area includes Turney [2] and Pang [3] who applied different methods for detecting the polarity of product reviews and movie reviews respectively. This work is at the document level. One can also classify a document's polarity on a multi-way scale, which was attempted by Pang [4] and Snyder [5] (among others): Pang [4] expanded the basic task of classifying a movie review as either positive or negative to predicting star ratings on either a 3 or a 4 star scale, while Snyder [5] performed an in-depth analysis of restaurant reviews, predicting ratings for various aspects of the given restaurant, such as the food and atmosphere (on a five-star scale).

A different method for determining sentiment is the use of a scaling system whereby words commonly associated with having a negative, neutral or positive sentiment with them are given an associated number on a -5 to +5 scale (most negative up to most positive) and when a piece of unstructured text is analyzed using natural language processing, the subsequent concepts are analyzed for an understanding of these words and how they relate to the concept. Each concept is then given a score based on the way sentiment words relate to the concept, and their associated score. This allows movement to a more sophisticated understanding of sentiment based on an 11 point scale. Alternatively, texts can be given a positive and negative sentiment strength score if the goal is to determine the sentiment in a text rather than the overall polarity and strength of the text [6]. …
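As a rough illustration of the word-scoring idea, the sketch below assigns each known word a value on the -5 to +5 scale and reports separate positive and negative strengths for a text. The six-word lexicon is invented for the example, and the sketch deliberately skips the concept extraction and natural language processing that the passage describes, so treat it as a toy rather than a real sentiment analyzer.

```python
import re
from typing import Tuple

# Hypothetical mini-lexicon: each word maps to a score from -5 to +5.
LEXICON = {"love": 4, "great": 3, "good": 2, "slow": -2, "bad": -3, "hate": -4}


def score_text(text: str) -> Tuple[int, int, int]:
    """Return (positive_strength, negative_strength, total) for a text."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = [LEXICON[word] for word in words if word in LEXICON]
    positive = max((s for s in scores if s > 0), default=0)  # strongest positive
    negative = min((s for s in scores if s < 0), default=0)  # strongest negative
    return positive, negative, sum(scores)


result = score_text("I love the new UI but the boot is slow")
```

For this sentence `result` is `(4, -2, 2)`: the text carries both a strong positive and a mild negative signal, which a single polarity label would hide.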

The topic continues with Methods, Evaluation, Sentiment Analysis and Web 2.0 and See Also sections. From the References section:

1. Michelle de Haaff (2010), Sentiment Analysis, Hard But Worth It!, CustomerThink, http://www.customerthink.com/blog/sentiment_analysis_hard_but_worth_it, retrieved 2010-03-12.

2. Peter Turney (2002). "Thumbs Up or Thumbs Down? Semantic Orientation Applied to Unsupervised Classification of Reviews". Proceedings of the Association for Computational Linguistics. pp. 417–424. arXiv:cs.LG/0212032.

3. Bo Pang; Lillian Lee and Shivakumar Vaithyanathan (2002). "Thumbs up? Sentiment Classification using Machine Learning Techniques". Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP). pp. 79–86. http://www.cs.cornell.edu/home/llee/papers/sentiment.home.html.

4. Bo Pang; Lillian Lee (2005). "Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales". Proceedings of the Association for Computational Linguistics (ACL). pp. 115–124. http://www.cs.cornell.edu/home/llee/papers/pang-lee-stars.home.html.

5. Benjamin Snyder; Regina Barzilay (2007). "Multiple Aspect Ranking using the Good Grief Algorithm". Proceedings of the Joint Human Language Technology/North American Chapter of the ACL Conference (HLT-NAACL). pp. 300–307. http://people.csail.mit.edu/regina/my_papers/ggranker.ps.

6. Thelwall, Mike; Buckley, Kevan; Paltoglou, Georgios; Cai, Di; Kappas, Arvid (2010). "Sentiment strength detection in short informal text". Journal of the American Society for Information Science and Technology 61 (12): 2544–2558. http://www.scit.wlv.ac.uk/~cm1993/papers/SentiStrengthPreprint.doc.

The Thelwall, et al. paper, which describes analyzing MySpace comments, is most germane to Twitter sentiment analysis.

Twitter Sentiment Analysis at Microsoft Research

Microsoft Research has maintained active research projects for natural language processing (NLP) for many years at its San Francisco campus and later Microsoft Research Asia, headquartered in Beijing, China.

The QuickView Project at Microsoft Research Asia

According to a July 2011 paper by the Microsoft QuickView Team, presented at SIGIR ’11, July 24–28, 2011, Beijing, China (ACM 978-1-4503-0757-4/11/07), QuickView is an advanced search service that uses natural language processing (NLP) technologies to extract meaningful information, including sentiment analysis, as well as index tweets in real time. From the paper’s Extended Abstract:

With tweets being a comprehensive repository for super fresh information, tweet search becomes increasingly popular. However, existing tweet search services, e.g., Twitter, offer only simple keyword based search. Owing to the noisy and informal nature of tweets, the returned list does not contain meaningful information in many cases.

This demonstration introduces QuickView, an advanced tweet search service to address this issue. It adopts a series of natural language processing technologies to extract useful information from a large volume of tweets. Specifically, for each tweet, it first conducts tweet normalization, followed by named entity recognition (NER). Our NER component is a combination of a k-nearest neighbors (KNN) classifier (to collect global information across recently labeled tweets) with a Conditional Random Fields (CRF) labeler (to exploit information from a single tweet and the gazetteers). Then it extracts opinions (e.g., positive or negative comments about a product). After that it conducts semantic role labeling (SRL) to get predicate-argument structures (e.g., verbs and their agents or patients), which are further converted into events (i.e., triples of who did what). We follow Liu et al. [1] to construct our SRL component. Next, tweets are classified into predefined categories. Finally, non-noisy tweets together with the mined information are indexed.

On top of the index, QuickView enables the following two brand new scenarios, allowing users to effectively access their interested tweets or fine-grained information mined from tweets.

Categorized Browsing. As illustrated in Figure 1(a), QuickView shows recent popular tweets, entities, events, opinions and so on, which are organized by categories. It also extracts and classifies URL links in tweets to allow users to check out popular links in a categorized way.

Fig. 1(a) A screenshot of the categorized browsing scenario.

Advanced Search. As shown in Figure 1(b), QuickView provides four advanced search functions: 1) search results are clustered so that tweets about the same/similar topic are grouped together, and for each cluster only the informative tweets are kept; 2) when the query is a person or a company name, two bars are presented followed by the words that strongly suggest opinion polarity. The bar’s width is proportional to the number of associated opinions; 3) similarly, the top 6 most frequent words that most clearly express event occurrences are presented; 4) users can search tweets with opinions or events, e.g., search tweets containing any positive/negative opinion about Obama or any event involving Obama. [Emphasis added.]

Fig. 1(b) A screenshot of the advanced search scenario.

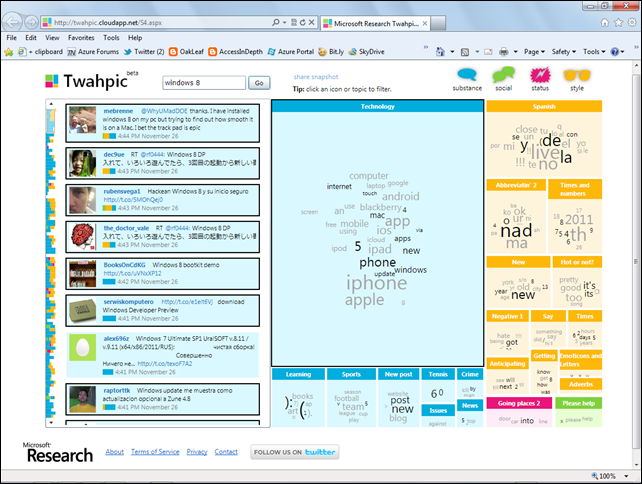

Twahpic: Twitter topic modeling

"Twahpic" shows what tweets on Twitter™ are about in terms of both topics (like sports, politics, Internet, etc) and axes of Substance, Social, Status, and Style. Twahpic uses Partially Labeled Latent Dirichlet Analysis (PLDA) to identify 200 topics used on Twitter. Try the demo and see what topics someone uses or what a hashtag is all about!

Try the Windows Azure demo: http://twahpic.cloudapp.net/

Publications

- Daniel Ramage, Susan Dumais, and Dan Liebling, Characterizing Microblogs with Topic Models, in Proc. ICWSM 2010, American Association for Artificial Intelligence, May 2010

Here’s a screen capture of Twahpic displaying Tweets about Windows 8 on 11/26/2011:

Twitter Sentiment, a Demo Using the Google App Engine

Stanford University students Alec Go, Richa Bhayani and Lei Huang developed a Google App Engine project called Twitter Sentiment “to research the sentiment for a brand, product, or topic.” Here are the results for Windows 8 on a relatively small Tweet sample:

According to the Twitter Sentiment help file:

What is Twitter Sentiment?

Twitter Sentiment allows you to research the sentiment for a brand, product, or topic.

Twitter Sentiment is a class project from Stanford University. We explored various aspects of sentiment analysis classification in the final projects for the following classes:

- CS224N Natural Language Processing in Spring 2009, taught by Chris Manning

- CS224U Natural Language Understanding in Winter 2010, taught by Dan Jurafsky and Bill MacCartney

- CS424P Social Meaning and Sentiment in Autumn 2010, taught by Chris Potts and Dan Jurafsky

What are the use cases?

Who created this?

Twitter Sentiment was created by three Computer Science graduate students at Stanford University: Alec Go, Richa Bhayani, and Lei Huang. Twitter Sentiment is an academic project. It is neither encouraged nor discouraged by our employers.

How does this work?

You can read about our approach in our technical report: Twitter Sentiment Classification using Distant Supervision. We also added new pieces that aren't described in this paper. For the latest in this field, read the papers that cite us.

Can you help me?

Sure, we really like helping people with machine learning, natural language processing, or social media analysis questions. Feel free to contact us if you need help.

How is this different?

Our approach is different from other sentiment analysis sites because:

- We use classifiers built from machine learning algorithms. Some other sites use a simpler keyword-based approach, which may have higher precision, but lower recall.

- We are transparent in how we classify individual tweets. Other sites do not show you the classification of individual tweets and only show aggregated numbers, which makes it difficult to assess how accurate their classifiers are.

Related work

If you like Twitter Sentiment, you might like Twitter Earth, which allows you to visualize tweets on Google Earth.

Lohith G N (@Kashyapa) posted an OData Spotlight - Sesame Data Browser guided tour on 11/24/2011:

In my endeavor to figure out the ecology of the Open Data Protocol, or OData, I recently started a series that I have called "OData Tool Spotlight". My first spotlight was on "LINQ Pad". You can read about that here: OData Tool Spotlight – LINQ Pad.

In this article, we will look at another tool within the OData ecology. This time I bring you an OData data browser named "Sesame Data Browser". So let's take a lap around Sesame Data Browser.

Sesame Data Browser:

Sesame Data Browser is the brainchild of Fabrice Marguerie. Fabrice is a Software Architect and Web Entrepreneur [, as well as the lead author of LINQ in Action]. Sesame is a suite of data and development tools, and Sesame Data Browser is the first product from the suite. It is one of the tools we can use to consume OData. Sesame Data Browser is a Silverlight-based application that runs in the browser. It can also be installed as an Out Of Browser (OOB) application. We will look into this in more detail in a section below.

Where is Sesame Data Browser on the web:

Head over to the following URL to experience Sesame Data Browser:

http://metasapiens.com/sesame/data-browser/preview/

Here is a screenshot of how the application looks:

Not a fancy-looking one, but believe me when I say it does what it is supposed to do. Wait till we explore the capabilities.

Creating a new connection to an OData Feed:

In the top left corner of the home page there is a "New Connection" button. Clicking on it shows, for now, "OData" as one of the options. Select that option and we will be taken to the following New Connection page. Let's look at the page in detail:

The New Connection window is divided into 3 sections. They are:

Address: Here is where you will enter OData Feed Address or URI/URL.

Authentication: Here you will specify the type of authentication that the OData Service is using. The authentication modes available are as follows:

Anonymous – as the name says there is no Authentication and is open for any user.

Windows Azure – If the OData Service uses Windows Azure to authenticate, you will need to specify the Access Key here. Here is the snapshot:

This mode will only work when you install Sesame Data Browser on to your desktop as Out Of Browser app.

SQL Azure: SQL Azure out of the box supports exposing tables as an OData Feed. This option will let you connect to such a service. You will need to provide the Security Token Service (STS) endpoint, the Issuer Name and the Secret Key. Here is the snapshot:

Azure DataMarket: Azure DataMarket is a new marketplace where Developers & Information workers can use the service to easily discover, purchase and manage premium data subscriptions in the Windows Azure Platform. You will need an account key to access any data source in the market. Here is the snapshot:

HTTP Basic: If the OData Service uses a Basic HTTP authentication i.e. UserName and Password in the request headers, this option will allow you to provide the credentials to use. Here is the snapshot:

Example OData Feeds: Lists some of the popular and widely used OData Service feeds. Clicking on one of them will automatically paste its URI/URL into the Address text box. For example, I have selected Netflix from the example feeds and its URI is automatically populated in the Address text box:

Click Ok to create a new Connection. For further examples I will be creating a connection to Netflix OData Service catalog.

Executing OData Operations:

Once a connection has been established, we will get a Tab for the selected connection. Here is the snapshot:

As you can see, the browser is able to pull all the Entity sets exposed in the Service root document and display each as a link which can be clicked just like a hyperlink on a webpage. Let's examine the Genres entity set that is exposed. When I click on Genres, here is how the results are displayed:

As you can see, the Genres entity set has been retrieved and shown in a neat grid format. Let's examine the results pane a little more.

Results Pane:

Header Text: First thing we see on the results pane is the header. The header will show text which is something like "Query <N> – <Entity Set Name>". In our example, I have executed my first query as "Genres". So it is showing "Query 1 – Genre". Here is the snapshot:

Link Feature: This is an interesting feature of the browser. If you are visualizing a data set and in particular an entity set, then you can share the exact same snapshot with anybody else in 3 ways. They are:

Email: Sesame Browser will give a link which can be emailed to other users. Here is the email link for the Genre entity set from our example: http://metasapiens.com/sesame/data-browser/preview?cn-provider=OData&cn-Uri=http%3a%2f%2fodata.netflix.com%2fCatalog%2f&query=http%3a%2f%2fodata.netflix.com%2fCatalog%2fGenres

Just open the link in a browser you will be directly taken to the Genre entity set of Netflix OData Service.

HTML Anchor Tag: This will give you an HTML anchor tag which can be embedded in any webpage. When navigated, it will show the Sesame Data Browser with the entity set query executed. Try the below link:

HTML Code: The Sesame Data Browser with the executed query can be completely embedded in any webpage. It inserts an IFRAME and points the source of the IFRAME to Sesame Data Browser and to the Genre entity set. Here is the live example:

Now let's look at the actual results themselves. They are displayed very neatly in a grid format. The grid will spit out all the columns that the feed contains. There is a way to choose only those columns that you are interested in, instead of everything. We will see that in a minute. Here is a screenshot of the grid:

The grid contains 2 more tabs, namely XML and Map. The XML tab displays the raw XML data as it was received from the server.

Map tab – as the name suggests, it will display a Bing Map if the data contains any spatial information. Here is a screenshot of the Map tab on a data set that contains spatial data:

Filtering Data:

The Sesame Data Browser also has the capability to filter data. On the Grid tab we have "Funnel" icon which is used to toggle the filter row. The first row will turn into a filter row. Here is an example:

Choosing Columns for display:

Sesame Data Browser also has the ability to let you choose only those columns that you need. A data set may be exposing N columns but you may be interested in a few of those. So the Column Chooser button on the Grid tab provides this functionality. Here is how to do that – I am taking Titles data set of Netflix to showcase this:

If you filter or choose columns, Sesame Data Browser has a cool way to extract the query formed as part of the action. At the bottom of the Grid tab is a "Query String" text box which lists the query generated. For example, the Genres data set has a navigation property called "Titles". By default everything will be retrieved for Genres when you navigate. Here is the Query String generated:

But when I say I don’t want to retrieve the Titles navigation property, here is how the Query String is formed:

So if you want to write an OData query, the easiest way would be to use Sesame Data Browser: retrieve the data set, check and uncheck columns, and Sesame Data Browser will generate the query for you. I would say this is a cool feature.
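The exact query strings Sesame produces are not reproduced here. As a rough sketch of the general idea, OData's $select system query option restricts a feed to the named properties. The helper below is hypothetical (the ODataQuery class and BuildSelectQuery method are not part of Sesame or any OData library), and the "Name" column on Genres is an assumption made for illustration.

```csharp
using System;

public static class ODataQuery
{
    // Builds a feed URL for an entity set and, when columns are given,
    // appends an OData $select option listing those columns.
    public static string BuildSelectQuery(string serviceRoot, string entitySet, params string[] columns)
    {
        string url = serviceRoot.TrimEnd('/') + "/" + entitySet;
        if (columns.Length == 0)
        {
            return url; // no column choice: request the entity set as-is
        }
        return url + "?$select=" + string.Join(",", columns);
    }

    public static void Main()
    {
        // Service root taken from the Netflix example above; "Name" is an assumed column.
        Console.WriteLine(BuildSelectQuery("http://odata.netflix.com/Catalog/", "Genres"));
        Console.WriteLine(BuildSelectQuery("http://odata.netflix.com/Catalog/", "Genres", "Name"));
    }
}
```

Comparing the two printed URLs shows the effect of a column choice: only the second carries a $select clause.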

Desktop Version of Sesame Data Browser:

Sesame Data Browser is developed with Silverlight. It also supports what is known as the Out Of Browser experience, i.e. although it is a web application, it can be installed on a desktop like any other desktop application but still runs in the sandbox of a browser. So you won't feel a difference, and it feels like an app running on your desktop. Steps to install the application on your desktop:

- Visit http://metasapiens.com/sesame/data-browser/preview/

- On the page you will see an "Install on desktop" button. Click on that to install.

- Once installed you will be able to launch it from your desktop.

Conclusion:

Some time back we looked at LINQPad as an OData tool. Now we have seen Sesame Data Browser. In my opinion LINQPad is more for a developer who wants to play around with LINQ but can still use it to retrieve data from an OData feed. Sesame Data Browser, by contrast, is a browser for developers and non-developers alike. It is very easy for non-developers to quickly come and visualize a data set. Where it stands apart is in features like drilling down into a navigation property and automatic recognition of media. I highly recommend that first-time OData users play around with this tool.

Thanks to Fabrice Marguerie for the heads-up.

My Problems Browsing Codename “Social Analytics” Collections with Popular OData Browsers post of 11/4/2011 described browsing default OData datasets delivered by the Microsoft Codename “Social Analytics” provider.


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Marius Zaharia (@zaharia1010) described Important Changes in Windows Azure SDK Distribution in an 11/25/2011 post:

With November 2011's distribution, Microsoft smoothly introduced some important changes in Windows Azure SDK 1.6:

- Windows Azure SDK adds a suffix to its name: Windows Azure SDK for .NET

- Suddenly a question appears: is this a retreat from the interoperability field (regarding PHP & Java dev/deployment on Windows Azure roles), or did they simply want to emphasize the relationship with the underlying Framework?

- Windows Azure AppFabric is now Windows Azure .NET Libraries

- This will deeply disrupt all search engine records and web references relating to it. I find this move pretty shocking; when it first changed from .NET Services to Azure AppFabric, it took some time for us to find our way to the new references... now it has changed again? I don't really get it.

- Windows Azure .NET Libraries (that is, the former Windows Azure AppFabric) will be installed with the Windows Azure SDK (for .NET, I assume... right?)

- This is rather a good move, as it leads toward the unification of the different Azure "clubs"; it will thus get simpler for the developer (fewer entry points to the resources)

- Then, the first enhancements listed under the new Azure SDK name are those of the AppFabric features:

Service bus enhancements:

- Support for ports in messaging operations: You can now specify that messaging operations use port 80 or port 443. Set the ConnectivityMode enumeration to Http.

- Exception contract refinements: Exception messages throughout the service bus managed API set are improved and refined.

- Relay load balancing: You can now open multiple listeners (up to 25) on the same endpoint. When the service bus receives a request for your endpoints, the system load balances which of the connected listeners receives the request or connection/session.
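As a minimal sketch of the port setting mentioned in the first item, the connectivity mode can be set once at startup through the Service Bus managed API. This assumes a project that references Microsoft.ServiceBus.dll from the SDK; the ServiceBusConnectivity class and UseHttpPorts method are hypothetical names used only for illustration.

```csharp
using Microsoft.ServiceBus; // requires a reference to Microsoft.ServiceBus.dll (assumption)

public static class ServiceBusConnectivity
{
    // Call once, before opening any Service Bus listeners or clients,
    // so that connections go out over the HTTP ports (80/443).
    public static void UseHttpPorts()
    {
        ServiceBusEnvironment.SystemConnectivity.Mode = ConnectivityMode.Http;
    }
}
```

The setting is process-wide, so it is usually applied in the role's startup code rather than per connection.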

Caching enhancements:

- Client side connection pooling: Connection pooling now enables all DataCacheFactory instances to share the same pool of connections. This makes it easier to manage your connections within each role instance.

- Performance improvement for cache access times: The client-side caching binaries have been optimized to improve access times of cached objects.

- Custom serialization: You now have the option of implementing a custom serializer for caching, in order to optimize the serialized form of your objects in the cache.

- Another interesting aspect relates to Windows Azure HPC (High Performance Computing), and it comes not from the "what's new" content itself, but from a side note:

"Note: LINQ to HPC is in Community Technology Preview (CTP). This feature will not offer release-level support."

- This is in line with Microsoft's recent announcements regarding the adoption of the Hadoop platform, so we may assume the Windows Azure HPC feature will be either shut down or radically transformed.

There are many, many, many other things added/enhanced in the 1.6 SDK. I will get back later to the rest of them.

I agree with most of Marius’ issues with changing names in midstream.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Avkash Chauhan (@avkashchauhan) described Windows Azure instance uptime and instance re-initialization in an 11/27/2011 post:

Windows Azure virtual machines undergo a guest OS update, and similarly a host OS update, at least once a month. Because of this, the virtual machine in which your application is running will be part of the guest or host OS update and will be down for a few minutes per month during the update process. Besides these scheduled updates, your virtual machine may be down for other reasons. In other words, virtual machine uptime can be interrupted for a number of reasons, and here we will look at what those potential reasons are.

Instance uptime interruption: A virtual machine instance's uptime is the amount of time the instance has been running without interruption. It can be interrupted for the following two reasons:

- Host OS servicing: It is typically done once each month, mainly for compliance and to keep your virtual machine secure. Host OS servicing performs a systematic reboot, following user-defined update domains, of all machines owned by the Windows Azure fabric controller.

- For Web/Worker roles, guest agent components in the virtual machine are updated during the host OS update, which re-initializes the OS to insert the newest guest agent. The OS is still the original OS version you defined.

- For VM role, the instance is shut down and restarted after the update, without re-initializing it.

- A potential hardware failure: A hardware failure is a rare event, but it is still a potential reason to re-allocate a virtual machine instance to another physical machine. This gives you a new virtual machine for your currently running instance, which is considered a fresh virtual machine instance.

Instance re-initialization: Instance re-initialization is the process in which your instance is re-initialized with a clean VHD for a given VM Role. For VM role, instance re-initialization can happen in the following situations:

- Hardware failure:

- In this case the instance is re-allocated to another physical machine. This is non-deterministic for a specific instance, and the instance will start with a fresh OS disk and a fresh local resource disk (in this case, the platform-supplied local storage).

- Non-responsive instance:

- In this case the instance will be forcibly shut down and, when that occurs, a fresh OS image will be used to avoid any possible corruption that may have occurred from the forced shutdown. The instance still uses the existing local storage resource disk.

- Virtual machine shutdown failure:

- When a host OS update is started, a stopping event is generated, and if the instance does not successfully shut down within ten minutes, the virtual machine will be forced to shut down so the host OS update can progress. This results in a re-initialization of the OS disk with the existing local storage resources.

Thanks to Windows Azure Team for providing this information.

Avkash works on Sunday, too.


Visual Studio LightSwitch and Entity Framework 4.1+

Paul van Bladel described A straightforward approach for a create and detail screen for entity inheritance in an 11/26/2011 post:

Introduction

Quite often in business apps, one has to deal with object oriented relationships between entities making up your domain model. For example, a person can be a student or a teacher or administration personnel. They share some common characteristics, but depending on their role, they have some specific properties.

Setting up such a data structure and corresponding detail screen in LightSwitch is not difficult, but to make things both flexible (in the sense that it should be easy to add other specialized tables) and maintainable, some kind of basic infrastructure for entity specialization can help.

We’ll focus on a very simple sample (read: entities without too many fields).

Data modelling entity specialization

From a data perspective, there are multiple tables for each “specialization” category (e.g. Student, Teacher), carrying only the fields relevant for the specialized entity. Each specialised entity inherits a series of properties of a “master” entity (e.g. Person).

As you know, object orientation and relational database models don’t go well together. Obviously, the best way to make a perfect object-oriented model of your data is without a database, but then you might run into persistence problems.

So, the idea is to get the best of both worlds. There is enough literature about this subject, and what’s important for me is how LightSwitch can cope as well as possible with entity inheritance.

A simple inheritance data model

As announced in the introduction, we will deal with a Person, Student and Teacher table. I’ll warn you immediately: later in this post you’ll see some code which might look quite involved, and which might look like overkill for the simple setting I present. I trust your imagination to transpose this example to a more extended scenario where a master table can be inherited by 15 sub types and where each sub type has 56 fields.

You can see a simple master table (Person) with some fields (some of them mandatory). A very important field is the Type field. It really drives the specialization relationships. The Type field has a choice list attached with the following values:

The precise naming is extremely important. Students and Teachers refer to the “specialization” entity sets, which you’ll see here:

Note that both Student and Teacher have a mix of mandatory and optional fields. Both Teacher and Student refer via a 0..1 –> 1 relationship to Person. So, database-technically, a person can exist as a person as such. Again, database-technically, a person could be both a Teacher and a Student. Nonetheless, that’s not what we want here: we presume that upon creation of a person, a clear choice of the person’s nature has been made: a person as such cannot exist and should be either a student or a teacher (in other words, the specialization categories are mutually exclusive). These are prerequisites of my example.

The user interface for a student and teacher detail screen

We want only one screen which can handle both a student and a teacher entity. The reason why we want one screen is that we want to be “open for extension”. The properties of the master entity (person) are on the first half of the screen, and the specialized properties on the second half.

The specialized entities are organised with a tab control. Depending on the type property in the master entity, a particular tab is activated. The other tabs are made invisible; they have no data anyhow. Remember, the sub types are mutually exclusive.

Making the correct tab visible and the incorrect ones invisible is entirely possible with the .FindControl() method, but is quite cumbersome to do, especially in scenarios where additional sub types are introduced, controls are renamed, etc. So, a more generic approach is desirable.

A two-step approach for entity creation

A basic problem with the creation of entities based on specialization is introduced by the “type field” in the master entity (person). The problem, in the context of entity creation (on a create screen), is that the type field defines the nature of the sub type, but obviously the user can change her mind and change the type field to another value. What will you do when the user has already entered 25 fields of the previous sub type? Delete the sub type and create another one? But maybe she will change back to the original sub type?

In short, that’s not a practical way of organizing things. Keep it simple and go for a two-step approach when it comes to creating specialized entities:

Step 1: present only the master entity fields and let the user choose a sub type (via dropdown or something else).

Step 2: as soon as the user clicks save, the entity detail screen is opened with the correct sub type shown in the tab control, and the user can provide the specific sub type fields. The user can still edit the master entity fields except the type field.

In step 2, the user can cancel the save operation. When doing so, the master entity will still be there, so that later on, she can continue entering the sub type fields.

This two-step creation approach works for me. Nonetheless, the code I provide later in the post can easily be adapted to cope with another transaction scheme.

The Search Screen

Here again, keep it simple and restrict the list of fields in the result grid to the fields of the master entity. If that’s not good enough, mix everything together.

The Server side

Nothing specific is necessary on the server side.

The domain model (the middle tier)

Also, nothing special is needed here, except for making the Type field of the Person entity read-only once the entity is no longer in the added state. This can be done as follows:

public partial class Person
{
    partial void Type_IsReadOnly(ref bool result)
    {
        result = (this.Details.EntityState != EntityState.Added);
    }
}

Next, we need a helper class in the middle tier. I’ll explain the purpose later, but provide the code here. Create a new class in the Common Project with the following static method:

public static class ApplicationCommonActivatorHelper
{
    public static object CreateEntityInstance(string typeName)
    {
        Type t = Type.GetType(typeName);
        if (t != null)
            return Activator.CreateInstance(t);
        return null;
    }
}

The client side

The create new Person screen

It boils down to the following. Make sure to include neither student- nor teacher-specific fields (they are provided on the detail screen) and that the choice list of the Type field is up to date. The code-behind class doesn’t contain anything specific.

The search screen

Again, very straightforward and no specific code behind content.

The detail screen

This one is more involved. We kept the most interesting part (I hope, at least) for the end. The screen design of the detail screen is very simple but the code behind contains some advanced techniques.

The tabs layout contains the two (mutually exclusive) sub types (student and teacher). The easiest way to get them there is dragging and dropping from the PersonProperty in the viewmodel.

Ok, now we come to the most important part, the code behind of the detail screen.

My purpose is clear and simple: when a new sub type is introduced (e.g. adminPersonnel) I don’t want to touch the code behind of the detail screen; it should simply be self-contained.

The main tasks of the code behind for this screen are:

- make sure the correct tab is visible and gets the focus, and make sure the non-relevant tabs are hidden.

- make sure to instantiate the correct sub type in case it has not been instantiated yet.

As said, the code behind is generic; it has no reference to teachers and students.

public string EntityTypeName { get; set; }
public string ParentPropertyName { get; set; }
public string ChildTabGroupName { get; set; }

partial void PersonDetail_InitializeDataWorkspace(List<IDataService> saveChangesTo)
{
    ParentPropertyName = "PersonProperty";
    ChildTabGroupName = "PersonTabGroup";
    EntityTypeName = this.DataWorkspace.ApplicationData.Details.GetModel().EntitySets
        .Where(s => s.Name.ToUpper() == this.PersonProperty.Type.ToUpper())
        .Single().EntityType.Name;

    this.FindControl(ChildTabGroupName).ControlAvailable += (s, e) =>
    {
        var ctrl = e.Control as System.Windows.Controls.Control;
        var contentItem = ctrl.DataContext as Microsoft.LightSwitch.Presentation.IContentItem;
        foreach (var child in contentItem.ChildItems)
        {
            IEntityReferenceProperty subEntity = child.Details as IEntityReferenceProperty;
            if (subEntity.Name.ToUpper() == EntityTypeName.ToUpper())
            {
                child.IsVisible = true;
                this.FindControl(child.ContentItemDefinition.Name).Focus();
            }
            else
            {
                child.IsVisible = false;
            }
        }
    };

    InstantiateEntitySubTypeWhenNull(this.PersonProperty.Id, this.PersonProperty.Type, this.PersonProperty);
}

private void InstantiateEntitySubTypeWhenNull(int entityId, string entitySubTypeName, IEntityObject parentProperty)
{
    IDataService dataService = this.DataWorkspace.ApplicationData;
    ICreateQueryMethod singleOrDefaultQuery = dataService.Details.Methods[entitySubTypeName + "_SingleOrDefault"] as ICreateQueryMethod;
    ICreateQueryMethodInvocation singleOrDefaultQueryInvocation = singleOrDefaultQuery.CreateInvocation(entityId);
    IEntityObject entityObject = singleOrDefaultQueryInvocation.Execute() as IEntityObject;
    if (entityObject == null)
    {
        //makes use of static class in common dll.
        parentProperty.Details.Properties[EntityTypeName].Value = ApplicationCommonActivatorHelper.CreateEntityInstance("LightSwitchApplication." + EntityTypeName);
    }
}

As you see, everything is happening in the InitializeDataWorkspace method.

The first part makes sure that the correct tab is visible and gets the focus. The nice thing is that everything can be processed without any intimate knowledge of the sub entities involved.
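Stripped of the LightSwitch plumbing, the tab selection comes down to a name comparison over the child items. The following is only an illustrative sketch with plain stand-in classes: Tab and TabSelector are hypothetical and are not LightSwitch types, and the comparison uses StringComparison.OrdinalIgnoreCase rather than the ToUpper() calls in the screen code.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a tab content item; not a LightSwitch type.
public class Tab
{
    public string Name { get; set; }
    public bool IsVisible { get; set; }
}

public static class TabSelector
{
    // Makes only the tabs whose name matches entityTypeName (ignoring case) visible
    // and hides all others. Returns the last matching tab, or null if none matches.
    public static Tab ShowOnly(IEnumerable<Tab> tabs, string entityTypeName)
    {
        Tab selected = null;
        foreach (Tab tab in tabs)
        {
            tab.IsVisible = string.Equals(tab.Name, entityTypeName, StringComparison.OrdinalIgnoreCase);
            if (tab.IsVisible)
            {
                selected = tab;
            }
        }
        return selected;
    }

    public static void Main()
    {
        var tabs = new List<Tab>
        {
            new Tab { Name = "Student" },
            new Tab { Name = "Teacher" }
        };
        Tab active = ShowOnly(tabs, "teacher");
        Console.WriteLine(active.Name);
    }
}
```

Because the loop never names a concrete sub type, adding a third tab needs no change to the selection logic, which is the property the detail screen relies on.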

The second part is encapsulated in the InstantiateEntitySubTypeWhenNull private method. We first try to find out if we still need to instantiate the sub type. Since we deliberately know the sub type only by its name, we have to use reflection to instantiate the entity type. For doing this, we need the ApplicationCommonActivatorHelper (I got this helper class via the LightSwitch forum from LS__, many thanks!) in the common project. The reason why we put this method in the common project is that the entity types are also stored over there. In Silverlight it’s quite complicated to get, in a generic way, a reference to a referenced DLL via reflection. The problem is that you have to use the fully qualified name, which also contains a version number that changes all the time.
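The reflection step can be tried outside LightSwitch with a small console sketch. The InheritanceDemo namespace and the empty Student and Teacher classes are hypothetical stand-ins for the generated entity types. Type.GetType, given a namespace-qualified name without an assembly name, searches only the calling assembly and the core library, which is why the helper needs to sit in the same assembly as the types it creates.

```csharp
using System;

namespace InheritanceDemo
{
    // Hypothetical stand-ins for the generated entity classes.
    public class Student { }
    public class Teacher { }

    public static class ActivatorHelper
    {
        // Resolves a type by its namespace-qualified name and creates an instance
        // through its parameterless constructor; returns null when the type is unknown.
        public static object CreateEntityInstance(string typeName)
        {
            Type t = Type.GetType(typeName);
            return t != null ? Activator.CreateInstance(t) : null;
        }
    }

    public static class Program
    {
        public static void Main()
        {
            object student = ActivatorHelper.CreateEntityInstance("InheritanceDemo.Student");
            Console.WriteLine(student.GetType().Name);

            // No such class exists in this sketch, so the helper returns null.
            object missing = ActivatorHelper.CreateEntityInstance("InheritanceDemo.AdminStaff");
            Console.WriteLine(missing == null);
        }
    }
}
```

Returning null for an unknown name keeps the caller in charge of deciding whether a missing sub type is an error.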

The only 2 “entry points” in the code behind are the values of 2 properties:

ParentPropertyName = "PersonProperty";
ChildTabGroupName = "PersonTabGroup";

Extending the master entity with another sub type

The proof of the pudding is in the eating. Let’s now try to introduce a new sub type “AdminStaff” and check what work needs to be done:

Create the AdminStaff entity and create relation to Person

Update the choice list with AdminStaffs (the entity set name of the new sub type)

Update the person detail screen

Just drag and drop the adminStaff navigation property from the viewmodel to the tabslayout.

That’s all, as you can see from the following screen:

Conclusion

It’s perfectly possible in LightSwitch to make things a bit more generic, reusable and maintainable even without using the extension framework. So far, I have little experience with the LightSwitch extension framework, but probably the above would be a reasonable starting point for an “Inheritance based detail screen template extension“.

Michael Washington (@ADefWebserver) posted a LightSwitch: It Is About The Money (It Is Always About The Money) essay on 11/25/2011:

But First, The JavaScript

I just looked at the latest JQuery release notes and noted the issues that they had to fix due to web browser compatibility issues. We simply must believe that these people are at the top of their game. If they have this many issues to deal with, aren’t I crazy if I even think of writing any JavaScript without using JQuery?

The rule I follow is, keep the JavaScript to a minimum, and always use JQuery or some other big JavaScript library. The reason is web browser “incompatibilities” and it is very costly and is an issue that is not changing any time soon.

IT is fueled on the money it saves for businesses with the Line Of Business (LOB) applications that we programmers create. However, lately, IT and the programmers creating the LOB apps, cost too much money. The reason, the JavaScript.

JavaScript wasn’t so costly back when we used it to validate date fields and we only had to worry about IE 4 and Netscape 4. However, now it is used for pop-up calendars, type-ahead search boxes, hiding and disabling buttons, and creating popups.

With the advent of HTML5, the future promises yet more JavaScript.

JavaScript is not the most economical choice for LOB apps. The reason? It takes a lot of time to create and debug, and this costs a lot of money. However, “cost” is relative. Something only “costs too much” when there is an alternative that is cheaper. Well, that alternative has arrived with LightSwitch. Our “customers” do not want to pay more money… if given an option, they won’t pay more money, period.

The “Reach” Argument

If you need “reach” you need to use HTML… My focus here is on LOB apps. A single LightSwitch developer can produce as much as 5 HTML/HTML5 developers and complete the project in half the time with 90% fewer bugs and 100% web browser compatibility (on the web browsers that can run Silverlight 4).

The deciding factor will be the JavaScript. The majority of time the HTML/HTML5 programmers will spend coding and mostly debugging will be the JavaScript. Plus that is assuming that they are using JQuery which is saving them a ton of time.

The advantage HTML has over LightSwitch is “reach”. HTML runs on more devices. It will run on an IPad. If your application needs to run on an IPad then don’t use LightSwitch. However, if it doesn’t, and you don’t need “reach”, why are you spending the extra money?

Do you really think companies will pay $10,000 for an application that will manage their inventory when they can get an application that will do the same thing for $2,000?

What about quality? LightSwitch requires you to write less code so you have fewer bugs. To the end-user that is higher quality. Also, just because a company spends a lot of money, that does not mean that the project won’t be a failure.

Yes, LightSwitch Can Handle It

The perception problem that LightSwitch has had, is that people thought it was only good for “simple” apps. Over the past year, many of us on the LightSwitch Help Website have proven that LightSwitch is suitable for your biggest enterprise applications.

I will give a presentation at Visual Studio Live in Orlando this December titled “Unleash The Power: Implementing Custom Shells, Silverlight Custom Controls and WCF RIA Services in LightSwitch”.

In the presentation, I will show the techniques used to create professional quality applications using LightSwitch in a fraction of the time to do a comparable application using HTML. Primarily this is achieved using Silverlight Custom Controls and WCF RIA Services.

After the presentation, I plan to make the code available (in late December) in the LightSwitch Star Contest:

It is about the money, it has always been about the money, and it always will be about the money

My point is that the cost for LOB applications must and will come down. The technology to do that is here in LightSwitch.

I don’t see it as a negative thing. When resources are directed toward programmers, they are taken from somewhere else. Your customers will demand that you reduce costs for IT projects dramatically if the company across the street has reduced its costs dramatically.

Yes, HTML5 is cool, but your customers don’t care. They want a satisfactory solution to their needs at the most economical (cheapest) cost. Period.

I have been programming .Net/JavaScript applications for over a decade and I know how much it costs to create an application. The cost is in direct relation to how much time it takes to construct the application. A programmer only has so much time in their life and they want to sell their precious working hours to the highest bidder.

When the time to create an application is reduced, the cost is reduced.

With LightSwitch, you can reduce the time required to create an application by 80%+ and therefore reduce the cost of a $10,000 project to less than $3,000. It’s not magic, just a reduction in time required to perform the same actions. Look at the descriptions of the time it took to create some of the applications in the LightSwitch Star Contest.

When given a choice, your customers will only spend $10,000 if they have to. They will do the math and realize that the deployment of iPads to the warehouse for mobile inventory is not such a great idea after all if the inventory app is going to cost $10k (100 hours @ $100 an hour). For $2k (20 hours @ $100 an hour) you can create an inventory application in LightSwitch and run it on an EXOPC Slate:

People Will Do The Math

It will not be possible to keep this a secret. People will do the math; they always do the math.
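The arithmetic behind the post's figures can be checked with a short sketch. It assumes, as the post does, that cost is simply hours multiplied by an hourly rate; the function and variable names are illustrative and not taken from the original article.

```python
def project_cost(hours: float, hourly_rate: float) -> float:
    """Cost of a project billed purely by time (hours x rate)."""
    return hours * hourly_rate


HOURLY_RATE = 100  # dollars per hour, the rate quoted in the post

# Hour estimates quoted in the post: 100 hours for the HTML build,
# 20 hours for the LightSwitch build.
html_cost = project_cost(100, HOURLY_RATE)
lightswitch_cost = project_cost(20, HOURLY_RATE)

# Fraction of the original cost that is saved.
savings_fraction = 1 - lightswitch_cost / html_cost

print(f"HTML build:        ${html_cost:,.0f}")
print(f"LightSwitch build: ${lightswitch_cost:,.0f}")
print(f"Cost reduction:    {savings_fraction:.0%}")
```

With the quoted inputs, the two builds cost $10,000 and $2,000, an 80% reduction, which matches the "80%+" time saving claimed earlier in the post.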

Michael didn’t mention the devops cost savings resulting from LightSwitch projects’ ease of deployment to Windows Azure.

Pluralsight (@pluralsight) reported Free Visual Studio LightSwitch Training in an 11/21/2011 post (missed when posted):

In partnership with Microsoft, we’ve released a professional Visual Studio LightSwitch training course that you can watch for free on the MSDN LightSwitch Developer Center. Check it out (under the “Essential Videos” section below) and start your learning now!

This professional course was designed for those who need to start from ground zero with Visual Studio LightSwitch and get up to speed quickly. We hope you enjoy it!

If you like what you see, be sure to check out how you can get access to hundreds of online training courses for only $29/mo.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

No significant articles today.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Brian Hitney announced Azure Camps Coming Soon! in an 11/26/2011 post:

Jim, Peter, and I are gearing up for another road trip to spread the goodness that is Windows Azure! The Windows Azure DevCamp series launched recently with a two-day event in Silicon Valley, and we’re jumping on the bandwagon for the East Region.

We have five stops planned in December, and we’re doing things a bit differently this go-round. Most of the events will begin at 2 p.m. and end at 9 p.m. – with dinner in between of course. The first part will be a traditional presentation format and then we’re bringing back RockPaperAzure for some “hands-on” time during the second half of the event. We’re hoping you can join us the whole time, but if classes or your work schedule get in the way, definitely stop by for the evening hackathon (or vice versa). By the way it wouldn’t be RockPaperAzure without some loot to give away, so stay “Kinected” to our blogs for further details on what’s at stake!

Here’s the event schedule; be sure to register quickly, as some venues are very constrained on space. You’ll want to have your very own account to participate, so there’s no time like the present to sign up for the Trial Offer, which will give you plenty of FREE usage of Windows Azure services for the event as well as beyond.

| Registration Link | Date | Time |
| --- | --- | --- |
| NCSU, Raleigh NC | Mon., Dec. 5th, 2011 | 2 – 9 p.m. |
| Microsoft, Farmington CT | Wed., Dec. 7th, 2011 | 2 – 9 p.m. |
| Microsoft, New York City | Thur., Dec. 8th, 2011 | 9 a.m. – 5 p.m. |
| Microsoft, Malvern PA | Mon., Dec. 12th, 2011 | 2 – 9 p.m. |
| Microsoft, Chevy Chase MD | Wed., Dec. 14th, 2011 | 2 – 9 p.m. |

Wely Lau announced I am officially MCPD in Windows Azure on 11/25/2011:

Due to a bunch of busy stuff, I have not had a chance to blog.

Anyway, I am pleased to share that I am now officially [a] MCPD (Microsoft Certified Professional Developer) in Windows Azure.

Obtaining MCPD in Windows Azure requires three exams:

- Exam 70-513 – MCTS prerequisite: TS: Windows Communication Foundation Development with Microsoft .NET Framework 4

- Exam 70-516 – MCTS prerequisite: TS: Accessing Data with Microsoft .NET Framework 4

- Exam 70-583 – MCPD requirement: PRO: Designing and Developing Windows Azure Applications

Passing the core 70-583 Beta Exam

I passed the beta exam of Designing and Developing Windows Azure Applications about a year ago. A beta exam is more challenging than a normal exam. There is no official preparation material, no training kit, no e-book, no online training. It just … states a few topics [that will be tested] with … short descriptions.

Passing the MCTS Prerequisite: 70-516 and 70-513

As you can see …, there are two prerequisite exams that need to be passed apart from the core exam 70-583. I passed 70-516 last September and 70-513 just last week.

With that, here’s my MCPD cert in Windows Azure

Congratulations, Wely!

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Derrick Harris (@derrickharris) reported Heroku launches SQL Database-as-a-Service in an 11/22/2011 post to GigaOM’s Structure blog:

Platform-as-a-Service provider Heroku (s crm) is expanding its horizons by offering an on-demand version of the PostgreSQL Database-as-a-Service. The new service, aptly called Heroku Postgres, is a commercial version of what Heroku has been providing to its own developers for years, only it’s now available to all developers regardless where they host their applications. It’s a pretty bold move for Heroku to offer a database service, but it also makes perfect sense.

Like many technology companies, Heroku has had to tweak open-source software (Postgres, in this case) to fit its unique needs. Unlike many other companies, such as Facebook and Google (s goog), however, Heroku is already in the business of selling an application Platform-as-a-Service. If it thinks its own unique brand and delivery model for Postgres is strong enough to stand on its own without the rest of the PaaS components, then it might as well offer it to the world.

“When we started the company, we thought of this database service being a whole business that someone else should start,” Heroku Co-Founder James Lindenbaum told me. When no one came along with a better offering, Heroku just kept working on its Postgres service and eventually decided it would be valuable to a broad audience who, for one reason or another, can’t utilize the entire Heroku platform.

Heroku Postgres comes in six plan options, starting at $200 a month and reaching $6,400 a month. The service is billed by the second — not the hour, like many cloud computing services — and Heroku is adamant about its reliability and security. In the blog post announcing the new service, product manager Matt Soldo writes, “Heroku Postgres has successfully and safely stored 19 billion customer transactions, and another 400 million transactions are processed every day.”
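To illustrate what per-second billing means for a plan priced by the month, here is a minimal sketch. The proration formula and the 30-day billing month are assumptions made for illustration; the article does not describe how Heroku actually computes charges, and the function name is hypothetical.

```python
# Assumption: a 30-day billing month. The article does not specify this.
SECONDS_PER_MONTH = 30 * 24 * 60 * 60


def prorated_charge(monthly_price: float, seconds_used: int) -> float:
    """Charge for `seconds_used` seconds of a plan priced per month."""
    if seconds_used < 0:
        raise ValueError("seconds_used must be non-negative")
    return monthly_price * seconds_used / SECONDS_PER_MONTH


ONE_DAY = 24 * 60 * 60  # seconds in a day

# The two prices below are the lowest and highest plans quoted in the article.
for monthly_price in (200, 6400):
    charge = prorated_charge(monthly_price, ONE_DAY)
    print(f"${monthly_price}/month plan, one day of use: ${charge:.2f}")
```

Under these assumptions, a database that runs for only part of a month is charged in proportion to the seconds it was provisioned, rather than being rounded up to a full hour or month.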

He also notes a new feature in Heroku’s service, called Continuous Protection, designed to ensure customers never lose their data:

[Continuous Protection] is a set of technologies designed to prevent any kind of data loss even in the face of catastrophic failures. Taking advantage of PostgreSQL’s WAL (write-ahead-log of each change to the database’s data or schema), Heroku Postgres creates multiple, geographically distributed copies of all data changes as they are written. These copies are constantly checked for consistency and corruption. If a meteor were to wipe out the east coast, you won’t lose your data.

Heroku also is playing up the pure-play, open-source nature of its offering: “We are committed to running community PostgreSQL ‘off-the-shelf’ – unforked and unmodified,” Soldo wrote. During a phone call, he explained to me that Heroku chose Postgres in the first place because it “is owned by no one” (unlike MySQL (s orcl)) and because it’s “exceedingly mature in terms of its durability and reliability.”

Certainly, Heroku parent company Salesforce.com isn’t about to complain about Heroku doing something crazy such as trying to sell a Database-as-a-Service. Salesforce.com launched its own database service, called Database.com, shortly before buying Heroku late last year. However, whereas Lindenbaum says Database.com is ideal for applications that want to tie into the Salesforce.com platform and utilize certain management features, Heroku Postgres targets all modern web applications that rely on a relational database. Heroku has already proven quite popular among various types of programmers, ranging from Facebook developers to enterprise developers.

Heroku joins a number of other companies offering relational databases as a cloud service, including Amazon (s amzn) (Relational Database Service), Microsoft (s msft) (SQL Azure) and Xeround. Google (s goog) also has its Google Cloud SQL offering, although it’s currently limited to App Engine applications.

<Return to section navigation list>
