Windows Azure and Cloud Computing Posts for 3/2/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 3/3/2011 with added articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket, Big Data and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

• Sreedhar Pelluru described Synchronization Services for ADO.NET for Devices: Improving performance by skipping tables that don’t need synchronization in a 3/2/2011 post to the Sync Framework Team blog:

Performance is an important factor when you synchronize databases that consist of a large number of tables. One way to improve performance is to identify tables that have no data to synchronize and exclude them from the synchronization process.

SqlCeClientSyncProvider performs some processing for every table you request to be synchronized, whether or not the table has changed data. For a table full of changed data, this processing time is minimal compared to the time required for the actual synchronization. For a table with nothing to synchronize, however, the processing time is pure overhead. If a large number of tables have no changed data, this overhead can significantly affect the performance of the overall synchronization process. Therefore, you should identify tables that have no data to synchronize and exclude them from the synchronization process.

In a test we conducted in our labs, we used a database with 100 tables, only 10 of which contained changed data (200 rows inserted into each) that needed to be synchronized. Skipping the other 90 tables during the synchronization process reduced the overall synchronization time by 75-80%.

There are many ways to detect changed tables on both the server side and the client side. The following example code demonstrates one way to do this.

Construct the sync agent's sync group from the tables that have changed data:

    private SyncAgent PrepareAgent(List<SyncTable> clientTables)
    {
        SyncGroup agentGroup = new SyncGroup("ChangedGroup");
        SyncAgent changedAgent = new SyncAgent();
        foreach (SyncTable table in clientTables)
        {
            table.SyncGroup = agentGroup;
            this.ClearParent(table);
            changedAgent.Configuration.SyncTables.Add(table);
        }
        return changedAgent;
    }

Get changed tables from the local client database:

    private List<SyncTable> GetChangedTablesOnClient()
    {
        // Initialize the list of tables to synchronize
        List<SyncTable> tables = new List<SyncTable>();
        // Open connection to the database
        if (clientSyncProvider.Connection.State == System.Data.ConnectionState.Closed)
        {
            clientSyncProvider.Connection.Open();
        }
        // Prepare command
        SqlCeCommand command = new SqlCeCommand();
        command.Connection = (SqlCeConnection)clientSyncProvider.Connection;
        command.Parameters.Add(new SqlCeParameter("@LCSN", System.Data.SqlDbType.BigInt));
        // Retrieve changed tables
        foreach (string tableName in this.clientTables.Keys)
        {
            // Build a command for this table
            command.CommandText = GetQueryString("[" + tableName + "]");
            // Execute the command
            long lcsn = GetLastSyncCsn(command.Connection, tableName);
            command.Parameters["@LCSN"].Value = lcsn;
            int result = (int)command.ExecuteScalar();
            // If the table contains changed data
            if (result > 0)
            {
                // then add it to the synchronization list
                tables.Add(this.clientTables[tableName]);
            }
        }
        command.Dispose();
        return tables;
    }

Get the changed tables from the server side:

    // Service method
    public Collection<string> GetServerChanges(SyncGroupMetadata groupMetadata, SyncSession syncSession)
    {
        Collection<string> tables = new Collection<string>();
        SyncContext changes = _serverSyncProvider.GetChanges(groupMetadata, syncSession);
        if (changes.DataSet.Tables.Count > 0)
        {
            foreach (DataTable table in changes.DataSet.Tables)
            {
                if (table.Rows.Count > 0)
                {
                    tables.Add(table.TableName);
                }
            }
        }
        return tables;
    }

    // Client-side methods that get the server changes by calling the service proxy method
    private string[] GetServerChanges()
    {
        // Build metadata for every table the client tracks
        SyncGroupMetadata metaData = this.GetMetadata(this.clientTables.Keys);
        SyncSession session = new SyncSession();
        session.ClientId = clientSyncProvider.ClientId;
        return this.proxyExtension.GetServerChanges(metaData, session);
    }

    private SyncGroupMetadata GetMetadata(IEnumerable<string> clientTables)
    {
        SyncGroupMetadata groupMetadata = new SyncGroupMetadata();
        foreach (string tableName in clientTables)
        {
            SyncTableMetadata tableMetadata = new SyncTableMetadata();
            tableMetadata.LastReceivedAnchor = clientSyncProvider.GetTableReceivedAnchor(tableName);
            tableMetadata.TableName = tableName;
            groupMetadata.TablesMetadata.Add(tableMetadata);
        }
        return groupMetadata;
    }
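The idea behind the sample does not depend on Sync Framework itself: find the pending changes per table, then hand only the tables with a non-zero count to the sync agent. The following is a minimal Java sketch of that filtering step, assuming the per-table counts have already been obtained (for example, by a per-table scalar query like the one GetChangedTablesOnClient runs). ChangedTableFilter and tablesToSync are hypothetical names for illustration; they are not Sync Framework APIs.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class ChangedTableFilter {

    // Returns the tables whose changed-row count is greater than zero,
    // in the same order as the input map. Tables with a zero (or missing)
    // count are skipped, so the sync agent never has to process them.
    static List<String> tablesToSync(Map<String, Long> changedRowCounts) {
        List<String> tables = new ArrayList<>();
        for (Map.Entry<String, Long> entry : changedRowCounts.entrySet()) {
            Long count = entry.getValue();
            if (count != null && count > 0) {
                tables.add(entry.getKey());
            }
        }
        return tables;
    }

    public static void main(String[] args) {
        // Hypothetical counts: only two of the three tables have pending changes.
        Map<String, Long> counts = new LinkedHashMap<>();
        counts.put("Customers", 200L);
        counts.put("Regions", 0L);
        counts.put("Orders", 15L);
        System.out.println(tablesToSync(counts)); // prints [Customers, Orders]
    }
}
```

Using a LinkedHashMap keeps the tables in insertion order, so the resulting sync list preserves whatever order the caller chose.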

Brian Swan explained Getting Started with the SQL Server JDBC Driver in a 3/2/2011 post:

Okay, okay. I know that Java Database Connectivity (JDBC) doesn’t have much (if anything) to do with PHP, so I apologize in advance if you are tuning in expecting to find something PHP-related. However, I temper my apology with the idea that getting out of your comfort zone is generally beneficial to your growth. The fun part is that it is very often beneficial in ways you cannot predict.

So, with that said, I’m embarking on an investigation of the Microsoft JDBC Driver for SQL Server in hopes that I will learn something new (maybe even many things). I do not plan to stop writing about PHP, so consider this trip to be a jaunt down a side street. In addition to my usual PHP-related content, I’ll aim to make Java/JDBC-related posts a couple of times each month as I learn new and interesting things. But, when you start walking down side streets, you never know where you’ll end up…

What piqued my interest in the JDBC driver were two blog posts: Improving experience for Java developers with Windows Azure and JDBC 3.0 for SQL Server and SQL Azure Available. The latter post highlights SQL Azure connectivity using JDBC while the former post highlights the Windows Azure Starter Kit for Java. I’ve written several posts about PHP and the Azure platform, so I was curious about running Java in the Microsoft cloud. But first, I needed to figure out the basics of installation and executing simple queries, which is what I’ll cover in this post.

Installing the Java Development Kit (JDK)

The SQL Server JDBC documentation indicates that the SQL Server JDBC 3.0 driver is compliant with the JDBC 4.0 specification and is designed to work with all major Sun equivalent Java virtual machines, but is tested on Sun JRE 5.0 or later. Keeping my eye on the X.0’s, I installed the Java Development Kit (JDK) 6 (which you need for developing Java applications) from here: http://www.oracle.com/technetwork/java/javase/downloads/index.html. (Be sure to download the JDK, which includes the JRE.)

After you install the JDK, you need to make it accessible to your system by adding the path to the bin folder to your Path environment variable. If you haven’t done this before, here’s what you do:

1. Click Start –> Control Panel –> System –> Advanced Settings.

2. In the System Properties window, click Environment Variables.

3. In the System Variables section, select the Path variable and click Edit.

4. At the end of the existing value, add a semicolon followed by the path to your JDK bin directory and click OK.

You should now be ready to compile and run Java programs.

Installing the SQL Server JDBC Driver

There are several versions of the SQL Server JDBC driver available for download on the Microsoft download site (mostly because each driver is compatible with different versions of Java). If you are ultimately interested in having SQL Azure access from Java, make sure you download this one: SQL Server JDBC Driver 3.0 for SQL Server and SQL Azure. Note that the download is a self-extracting zip file. I recommend creating a directory such as this in advance: C:\Program Files\Microsoft SQL Server JDBC Driver. Then, you can simply unzip the file (sqljdbc_3.0) to that directory.

Next, you need to provide access to the JDBC driver classes from Java. There are a few ways to do this. I found the easiest way was to create a new environment variable, called classpath, that points to the driver’s sqljdbc4.jar file.

To do this, follow steps 1 and 2 from above, then click New in the User variables section.

Then enter classpath as the name of the variable, set its value to .;C:\Program Files\Microsoft SQL Server JDBC Driver\sqljdbc_3.0\enu\sqljdbc4.jar (or wherever your sqljdbc4.jar file is located), and click OK.

Click OK to close the Environment Variables and System Properties dialogs.
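The classpath value holds two entries separated by a semicolon: . (the current directory, so java can find Example.class) and the full path to sqljdbc4.jar. To confirm that the new variable took effect, a small program can inspect the java.class.path system property, which the java launcher takes from CLASSPATH when no -cp option is given, and try to load the driver class by name. ClasspathCheck and its methods are hypothetical helpers written for this check, not part of the driver.

```java
import java.io.File;
import java.util.regex.Pattern;

class ClasspathCheck {

    // Returns true if any entry in the classpath string ends in the given jar
    // file name. Both '\' and '/' are treated as directory separators so the
    // check also works on a Windows-style value inspected on another OS.
    static boolean classpathContainsJar(String classpath, String separator, String jarName) {
        if (classpath == null) {
            return false;
        }
        for (String entry : classpath.split(Pattern.quote(separator))) {
            String trimmed = entry.trim();
            int cut = Math.max(trimmed.lastIndexOf('/'), trimmed.lastIndexOf('\\'));
            if (trimmed.substring(cut + 1).equalsIgnoreCase(jarName)) {
                return true;
            }
        }
        return false;
    }

    // Returns true if the named class can be loaded from the current classpath.
    static boolean isClassAvailable(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String cp = System.getProperty("java.class.path");
        System.out.println("sqljdbc4.jar listed: "
                + classpathContainsJar(cp, File.pathSeparator, "sqljdbc4.jar"));
        System.out.println("Driver loadable: "
                + isClassAvailable("com.microsoft.sqlserver.jdbc.SQLServerDriver"));
    }
}
```

Run it from a newly opened command prompt; prompts that were already open keep the old environment and will not see the new variable.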

Now we are ready to write some simple code.

Connecting and retrieving data

Finally, we can write some Java code that will connect to a database and retrieve some data. I won’t go into a Java tutorial here, but I will say that I have written very little Java code in the past and I was still able to figure out how to write the code below easily. Granted, I did find these topics in the docs helpful: Working with a Connection and JDBC Driver API Reference.

A few things did surprise me, probably because they are different than they are in PHP:

- Even though the classes are imported, I still had to use Class.forName to dynamically load the SQLServerDriver class.

- The connection string elements are different than they are with the SQL Server Driver for PHP (“user” vs. “UID”, “password” vs. “PWD”, and “database” vs. “Database”).

- The index for the returned columns starts at 1, not 0.

And, obviously, you have to put on your OOP hat when writing Java…

    // Import the JDBC API classes (java.sql); the SQL Server driver is loaded below
    import java.sql.*;

    class Example
    {
        public static void main(String args[])
        {
            try
            {
                // Load the SQLServerDriver class, build the
                // connection string, and get a connection
                Class.forName("com.microsoft.sqlserver.jdbc.SQLServerDriver");
                String connectionUrl = "jdbc:sqlserver://ServerName\\sqlexpress;" +
                    "database=DBName;" +
                    "user=UserName;" +
                    "password=Password";
                Connection con = DriverManager.getConnection(connectionUrl);
                System.out.println("Connected.");

                // Create and execute an SQL statement that returns some data.
                String SQL = "SELECT CustomerID, ContactName FROM Customers";
                Statement stmt = con.createStatement();
                ResultSet rs = stmt.executeQuery(SQL);

                // Iterate through the data in the result set and display it.
                while (rs.next())
                {
                    System.out.println(rs.getString(1) + " " + rs.getString(2));
                }

                // Release the JDBC resources
                rs.close();
                stmt.close();
                con.close();
            }
            catch(Exception e)
            {
                System.out.println(e.getMessage());
                // Exit with a non-zero status to signal failure
                System.exit(1);
            }
        }
    }

To compile the code above, I first saved the file to C:\JavaApps as Example.java (class names are supposed to match file names). Then, I opened a command prompt, changed directories to C:\JavaApps and ran the following:

C:\JavaApps> javac Example.java

Doing that created a file called Example.class (in the same directory) which I could then run with this command:

C:\JavaApps> java Example

If you run the example against the Northwind database, you should see a nice list of customer IDs and names.
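The connection-string differences noted earlier (user, password and database rather than the PHP driver’s UID, PWD and Database) are easier to see when the URL is assembled from its parts. Here is a minimal sketch; buildUrl is a hypothetical helper, not a driver API, and with the placeholder values it produces the same URL as the hard-coded string in Example. The doubled backslash in Java source becomes the single backslash that separates the server name from the instance name.

```java
class ConnectionUrlBuilder {

    // Builds a SQL Server JDBC URL using the property names the JDBC driver
    // uses ("database", "user", "password"). instanceName may be null or
    // empty when connecting to a default instance.
    static String buildUrl(String server, String instanceName, String database,
                           String user, String password) {
        StringBuilder url = new StringBuilder("jdbc:sqlserver://").append(server);
        if (instanceName != null && !instanceName.isEmpty()) {
            url.append('\\').append(instanceName);
        }
        url.append(";database=").append(database);
        url.append(";user=").append(user);
        url.append(";password=").append(password);
        return url.toString();
    }

    public static void main(String[] args) {
        // Placeholder values copied from the Example program
        System.out.println(buildUrl("ServerName", "sqlexpress", "DBName", "UserName", "Password"));
    }
}
```

The resulting string can be passed to DriverManager.getConnection exactly like the literal in Example; the helper only makes each property explicit.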

That’s it! Obviously, that’s just a start. I’ll continue to investigate with the idea of eventually getting things running on the Azure platform. If you have specific things you’d like me to investigate, please comment below.


Marketplace DataMarket, Big Data and OData

• Sashi Ranjan of the Microsoft Dynamics CRM Blog explained Using OData Retrieve in Microsoft Dynamics CRM 2011 in a 3/2/2011 post:

With the release of Microsoft Dynamics CRM 2011, we have added a new Windows Communication Foundation (WCF) Data Services (OData) endpoint. The endpoint facilitates CRUD operations on entities from scripts, using the Atom or JSON format. In this post I’ll discuss some of the considerations when using the endpoint, specifically around retrieves.

First, the operations supported over this endpoint are limited to create, retrieve, update and delete. The REST philosophy does not support other operations, so we followed suit. We also did not implement others because the story around service operations is not fully developed in the current WCF Data Services offering.

The $format and $inlinecount operators are not supported. We support only the $expand, $filter, $select, $top, $skip and $orderby operators.

Some of the restrictions when using the implemented operators are:

| Operator | Restrictions |
|---|---|
| $expand | Maximum of 6 expansions. |
| $top | Page size is fixed at a maximum of 50 records. $top gives the total number of records returned across multiple pages. |
| $skip | When used with distinct queries, the total (skip + top) record count is limited to 5,000. In CRM, distinct queries do not use a paging cookie, so they are subject to the CRM platform limit of 5,000 records. |
| $select | One level of navigation property selection is allowed, e.g. …/AccountSet?$select=Name,PrimaryContactId,account_primary_contact or …/AccountSet?$select=Name,PrimaryContactId,account_primary_contact/LastName&$expand=account_primary_contact |
| $filter | Conditions are allowed on only one group of attributes, where a group is a set of conditions joined by And/Or clauses. The group may be on the root entity (.../TaskSet?$expand=Contact_Tasks&$filter=Subject eq 'test' and Subject ne null) or on the expanded entity (.../TaskSet?$expand=Contact_Tasks&$filter=Contact_Tasks/FirstName eq '123'). Arithmetic, datetime and math operators are not supported. Of the string functions, only substringof, endswith and startswith are supported. |
| $orderby | Ordering is allowed only on the root entity. |
| Navigation | Only one level of navigation is allowed in either direction of a relationship. The relationship can be 1:N, N:1 or N:N. |
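Two of the limits in the table lend themselves to a quick client-side check. Reading the $top row as "results arrive in pages of at most 50 records", a $top of 120 would span three pages; and a distinct query must keep skip + top at or below 5,000. The Java sketch below encodes those two rules and builds the relative part of a query like the TaskSet example. CrmODataQuery and its methods are hypothetical, the service root URL is left out because the post elides it, and percent-encoding the filter this way is an assumption about what the endpoint accepts rather than documented CRM behavior.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

class CrmODataQuery {

    // Limits quoted in the restrictions table (assumed to apply as stated).
    static final int MAX_PAGE_SIZE = 50;
    static final int MAX_DISTINCT_SKIP_PLUS_TOP = 5000;

    // Pages needed to receive `top` records when each page holds at most 50.
    static int pagesFor(int top) {
        if (top <= 0) {
            return 0;
        }
        return (top + MAX_PAGE_SIZE - 1) / MAX_PAGE_SIZE;
    }

    // True if a distinct query's skip + top stays within the 5,000-record limit.
    static boolean distinctPagingAllowed(int skip, int top) {
        return skip >= 0 && top >= 0 && (long) skip + top <= MAX_DISTINCT_SKIP_PLUS_TOP;
    }

    // Percent-encodes a query option value. URLEncoder uses form encoding,
    // which turns spaces into '+', so those are rewritten as %20.
    static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8").replace("+", "%20");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 not supported", e);
        }
    }

    // Builds the relative part of a query, e.g. "TaskSet?$expand=...".
    static String buildQuery(String entitySet, String expand, String filter, int top) {
        return entitySet
                + "?$expand=" + encode(expand)
                + "&$filter=" + encode(filter)
                + "&$top=" + top;
    }

    public static void main(String[] args) {
        System.out.println(buildQuery("TaskSet", "Contact_Tasks", "Subject eq 'test'", 120));
        System.out.println("pages expected: " + pagesFor(120));
        System.out.println("distinct skip=4950, top=120 allowed: " + distinctPagingAllowed(4950, 120));
    }
}
```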

Nicole Hemsoth reported Expert Panel: What’s Around the Bend for Big Data? in a 3/2/2011 post to the HPC in the Cloud blog:

This week we checked in with a number of thought leaders in big data computing to evaluate current trends and extract predictions for the coming years. We were able to round up a few notable experts who are driving big data innovations at Yahoo!, Microsoft, IBM, Facebook’s Hadoop engineering group, and Revolution Analytics—all of which are frontrunners in at least one leg of the race to capture, analyze and maintain data.

While their research and technical interests may vary, there is a general consensus that there are some major developmental shifts that will change the way big data is perceived, managed, and of course, harnessed to power analytics-driven missions.

Although it’s difficult to present an overarching view of all trends in this fluid space, we were able to pinpoint some notable movements, including a broader reach (and subsequent boost in innovation) for big data and its use; enhancements to data-as-a-service models following increased adoption; and more application-specific trends bound to shape the big data landscape in coming years. As you might imagine, we also considered these movements in the context of opportunities (and challenges) presented by cloud computing.

Widespread Adoption via Increased Innovation

One of the most echoed statements about current trends in the big data sphere (from storage to analytics and beyond) is that as data grows, the tools needed to negotiate the massive swell of information will evolve to meet demand.

According to Todd Papaioannou, Vice President, Cloud Architecture at Yahoo, Inc., and former VP of architecture and emerging technologies at Teradata, the big trend for big data this year will be widespread adoption via increased innovation, particularly in the enterprise, a setting that has been reticent thus far. He notes that although traditional businesses were not pushing for adoption one or two years ago, adoption is now being enabled by the “expanding Hadoop ecosystem of vendors who are starting to appear, offering commercial tools and support, which is something that these traditional enterprise customers require.”

In addition to the richer ecosystem that is developing around big data, Papaioannou believes that much of the innovation in the Hadoop and big data ecosystem will be centered around enabling much more powerful analytical applications.

Of the future of big data, he says that sometime down the road, “people will be amazed at the data they used to throw away because the insights and value they gain from that data will drive key decisions in their businesses.” On that note, there will be more emphasis on this valuable information and its organization. Papaioannou states that companies will have a big data strategy that will be a complementary piece of their overall general data and BI strategy. In his view, there will be no more “islands of data”; instead, a big data platform will sit at the core, at the center of a seamless data environment.

In terms of cloud’s role in the coming wave of big data innovation and adoption, he suggests that cloud will drive down even further the cost associated with storing and processing all of this data, offering companies a much wider menu of options from which to choose.

David Champagne, CTO of Revolution Analytics, also weighed in on the role of cloud computing in the push to optimize big data processes, noting that there are some major hurdles when it comes to large datasets and data locality. His view is that there need to be effective ways to either collect the data in the cloud, push data up to the cloud, or ensure low-latency connections between cloud resources and on-premises data stores.


Windows Azure AppFabric: Access Control, WIF and Service Bus

• The Windows Azure AppFabric Team explained How BEDIN Shop Systems has been utilizing the Windows Azure AppFabric Service Bus as part of their aKite “Connected Retail” solution in a 3/1/2011 post:

Yesterday during the “Cloud Computing in Retail Breakfast” at the Euroshop Retail Design Conference in Dusseldorf, Germany, Microsoft partner BEDIN Shop Systems made an announcement regarding their retail industry software-as-a-service (SaaS) solutions powered by the Windows Azure platform.

BEDIN Shop Systems announced that their Windows Azure-based aKite® Vers.2.0 software services suite has gained significant customer acceptance since its introduction on July 11, 2010 at Microsoft’s Worldwide Partner Conference (WPC) as European retailers have increasingly realized the benefits of cloud computing. New customers include mobile phone distribution chains in Italy, Italian retailers Briocenter, Bruel and luxury brand Jennifer Tattanelli.

One of the interesting capabilities of their solution is aDI, aKite® Document Interchange, a software service based on the Windows Azure AppFabric Service Bus that greatly simplifies integration with legacy ERPs and other on-premises systems such as aKite® DWH, a Microsoft SQL Server-based application for custom business intelligence (BI) that is updated in near-real-time from all stores. The solution uses the Service Bus to connect to on-premises assets securely, without having to open the on-premises firewall.

For more details about BEDIN’s announcement, view their press release.

You can also start enjoying the benefits of cloud computing and the Windows Azure platform using our free trial offers.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Wade Wegner (@WadeWegner) adds more details about the Windows Azure Platform Training Kit and Course, February 2011 Update on 3/2/2011:

The February 2011 update of the Windows Azure Platform Training Kit includes several updates and bug fixes for the hands-on labs. Most of the updates were focused on supporting the new Windows Azure AppFabric February CTP and the new portal experience for AppFabric Caching, Access Control, and the Service Bus. The specific content that was updated in this release includes:

- Hands-on Lab – Building Windows Azure Apps with the Caching service

- Hands-on Lab – Using the Access Control Service to Federate with Multiple Business Identity Providers

- Hands-on Lab – Introduction to the AppFabric Access Control Service V2

- Hands-on Lab – Introduction to the Windows Azure AppFabric Service Bus Futures

- Hands-on Lab – Advanced Web and Worker Roles – fixed PHP installer script

- Demo Script – Rafiki PDC Keynote Demo

The setup scripts for all hands-on labs and demo scripts have also been updated so that the content can easily be used on a machine running Windows 7 SP1. [Emphasis added.]

You can download the February update of the Windows Azure Platform Training kit. We’re also continuing to publish the hands-on labs directly to MSDN to make it easier for developers to review and use the content without having to download an entire training kit package. You can also view the HOLs in the online kit.

Finally, if you’re using the training kit or if you have feedback on the content, we would love to hear from you. Please drop us an email at azcfeed@microsoft.com.

• The Windows Azure Team reported a White Paper, "The Global Conference Is Real: The Success of the PDC10 Player and Application", Now Available on 3/2/2011:

A new white paper, "The Global Conference is Real: The Success of the PDC10 Player and Application", is now available for download. This white paper outlines how Microsoft transformed its Professional Developer Conference (PDC) into an unprecedented virtual conference built on the Windows Azure platform to engage a record number of virtual attendees and deliver a compelling and engaging web experience.

PDC is a developer-focused Microsoft event that is attended by 5,000 to 7,000 developers, mostly from the United States. Thousands more developers would like to attend this event in person, but they face the obstacle of high travel costs, particularly in a difficult economy. For PDC10, Microsoft decided to address these concerns by taking the conference to the Internet.

While an onsite conference limited to 1,000 attendees took place in 2010, the focus was on the online conference. This online setup allowed developers from all over the world to attend the conference for the price of an Internet connection. Beyond providing traditional linear video that replicated a traditional conference experience, Microsoft delivered a virtual conference built on multiple Microsoft technologies, including the Windows Azure platform, to enrich and surpass that experience with:

- High-quality integration of multiple high-definition video streams, content streams, and parallel video tracks

- Multiple simultaneous audio translations from English into French, Spanish, Japanese, and Chinese, plus live closed-captioning in English

- Unprecedented online interaction via an integrated Twitter client, live global question and answer, real-time polling, and dynamic scheduling

- The ability for anyone, anywhere to access conference content anonymously, and to interact with that content in real time

The results were impressive:

- In contrast to the 5,000 to 7,000 attendees of the traditional Microsoft developer conference, PDC10 attracted more than 100,000 developers and 300,000 individual video views during its two-day span. Throughout the month of November 2010, viewers requested archived and on-demand content more than 338 million times.

- Audiences visited the site from more than 150 countries over the two-day conference period. About 10 percent of the online audience used the simultaneous-translation feature, further demonstrating the success of the solution in extending the PDC's reach to non-native English speakers.

- The solution supported more than 5,000 concurrent connections with an average response time to requests of less than one-tenth of a second (0.07 seconds).

- Attendees viewed more than 500,000 hours of conference content and the average video satisfaction rating for PDC content was 3.75 out of 4 (where 4 was "very satisfied"), and the average value rating was 3.9 out of 5 (where 5 was extremely valuable).

To Learn More:

- Visit the PDC10 conference site at: http://player.microsoftpdc.com/

- Download the Content Management System at: www.codeplex.com

- Download the Silverlight Media Framework at: smf.codeplex.com

- Download the Silverlight Rough Cut Editor at: code.msdn.microsoft.com/RCE

- Learn about Windows Azure at: www.microsoft.com/windowsazure

Richard Banks (@rbanks54) explained Running Neo4j on Azure in a 2/22/2011 post (missed when published):

For those of you who don’t know what I’m talking about, Neo4j is a graph DB, which is great for dealing with certain types of data such as relationships between people (I find it ironic that relational databases aren’t great for this) and it’s perfect for the project I’m working on at the moment.

However, this project also needs to be deployable on Azure, which means if I want a graph DB for storing data, I need something running on Azure. I’d originally looked at Sones for doing this since it was the only one around that I knew could run on Azure, but my preference was to run Neo4j instead, because I know it a little better, and because I know that the community around it is quite helpful.

The great news is that as of just recently (a week ago at time of writing) Neo4j can now run on Azure! Happy days!! and “Perfect Timing”™ for my project. I just had to try it out. P.S. Feel free to go ahead and read the Neo4j blog post because it has a basic explanation of how to set it up, has some interesting notes on the implementation and also talks about the roadmap ahead.

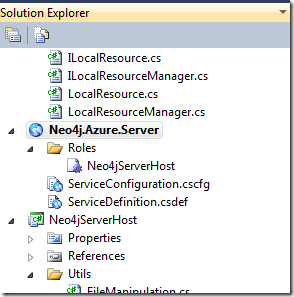

Here’s a simple step-by-step on how to get Neo4j running on your local Azure development environment:

1. Download the Neo4j software from http://neo4j.org/download/. I grabbed the 1.3.M02 (windows) version.

2. You’ll also need to download the Neo4j Azure project, which is linked to from the Neo4j blog post.

3. I’m assuming you have an up to date Java runtime locally, so go to your program files folder and zip up the jre6 folder. Call it something creative like jre6.zip :-)

4. If you haven’t already done so I’d also suggest you get yourself a utility for managing Azure blob storage. I’m using the Azure Blob Studio 2011 Visual Studio Extension.

5. Spin up Visual Studio and load the Neo4j Azure solution. Make sure the Azure project is the startup project.

6. Next, upload both the Neo4j and JRE zip files to Azure blob storage. Note that by default the Azure project looks for a neo4j container, so it’s best to create that container first:

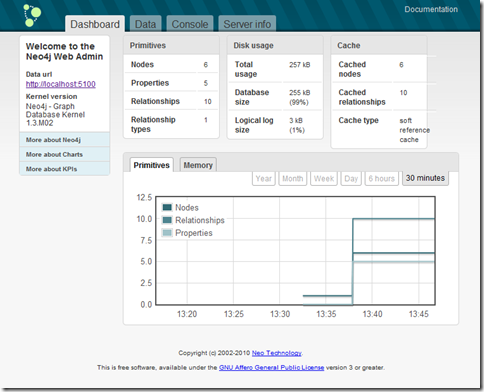

7. Now it’s time to spin this thing up! Press the infamous F5 and wait a while as Azure gets its act in order, deploys the app and starts everything up for you. You’ll know things are up and running when a neo4j console app appears on your desktop. You may also be asked to allow a Windows Firewall exception, depending on your machine configuration.

Behind the scenes, the Neo4j Azure app will pull both the java runtime and neo4j zip files down from blob storage, unzip them and then call java to start things up. As you can imagine, this can take some time.

If you have the local Compute Emulator running, then you should be able to see logging information indicating what’s happening, for example:

8. Once everything is up and running you should be able to start a browser up and point it to the administration interface. On my machine that was http://localhost:5100. You can check the port number to use by having a look at the top of the log for this:

You should then see something like this:

That chart is showing data being added to the database. Just what we want to see!

So that’s it. The server is up and running and it wasn’t too hard at all! The Neo team is looking to make the whole process much simpler, but I think this is a great start and it is very welcome indeed!

If you do have problems, check the locations in the settings of the Neo4jServerHost in the Azure project to make sure they match your local values. You might just need to adjust them to get it working.
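Steps 3 and 7 both revolve around zip archives: you pack the local jre6 folder, and at startup the Neo4j Azure app unpacks the JRE and Neo4j archives before calling java. The project does this in its own host code, which is not reproduced here. As an illustration only, here is a minimal Java 8+ sketch of the two zip operations using java.util.zip, including a check that refuses entries that would extract outside the target folder. ZipTool and its command-line arguments are hypothetical, and whether the Neo4j Azure project expects jre6 as the top-level folder inside the archive is not stated in the post, so compare against the locations in the Neo4jServerHost settings.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

class ZipTool {

    // Zips every regular file under sourceDir. Entry names are relative to
    // sourceDir's parent, so zipping ".../jre6" yields entries like "jre6/bin/...".
    static void zipDirectory(Path sourceDir, Path zipFile) throws IOException {
        Path src = sourceDir.toAbsolutePath().normalize();
        Path base = src.getParent();
        List<Path> files;
        try (Stream<Path> walk = Files.walk(src)) {
            files = walk.filter(Files::isRegularFile).collect(Collectors.toList());
        }
        try (ZipOutputStream zip = new ZipOutputStream(Files.newOutputStream(zipFile))) {
            for (Path file : files) {
                // Zip entry names always use '/' regardless of the host OS.
                String name = base.relativize(file).toString().replace('\\', '/');
                zip.putNextEntry(new ZipEntry(name));
                Files.copy(file, zip);
                zip.closeEntry();
            }
        }
    }

    // Extracts zipFile into targetDir, refusing entries that would land
    // outside targetDir (for example names containing "../").
    static void unzip(Path zipFile, Path targetDir) throws IOException {
        Path target = targetDir.toAbsolutePath().normalize();
        try (ZipInputStream zip = new ZipInputStream(Files.newInputStream(zipFile))) {
            ZipEntry entry;
            while ((entry = zip.getNextEntry()) != null) {
                Path out = target.resolve(entry.getName()).normalize();
                if (!out.startsWith(target)) {
                    throw new IOException("Entry outside target directory: " + entry.getName());
                }
                if (entry.isDirectory()) {
                    Files.createDirectories(out);
                } else {
                    Files.createDirectories(out.getParent());
                    // Reads only up to the end of the current zip entry.
                    Files.copy(zip, out, StandardCopyOption.REPLACE_EXISTING);
                }
                zip.closeEntry();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 3 && args[0].equals("zip")) {
            zipDirectory(Paths.get(args[1]), Paths.get(args[2]));
        } else if (args.length == 3 && args[0].equals("unzip")) {
            unzip(Paths.get(args[1]), Paths.get(args[2]));
        } else {
            System.err.println("usage: java ZipTool zip <dir> <zipFile> | unzip <zipFile> <targetDir>");
        }
    }
}
```

For step 3, something like java ZipTool zip "<path to your jre6 folder>" jre6.zip would produce the archive to upload; the folder path depends on where your JRE is installed.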

P.S. If you have questions, then ask in the Neo4j forums. I’m not an expert in any of this ;-) I’m just really pleased to be able to see it running on Azure.

The Windows Azure Team posted BEDIN Announces Windows Azure Platform-Based Solutions for the European Retail Community on 3/2/2011:

Yesterday during the "Cloud Computing in Retail Breakfast" at the Euroshop Retail Design Conference in Dusseldorf, Germany, Microsoft partner BEDIN Shop Systems announced that their Windows Azure-based aKite Vers.2.0 software services suite has gained significant customer acceptance since its introduction last year.

European retailers have increasingly realized the benefits of cloud computing; new customers include mobile phone distribution chains in Italy, Italian retailers Briocenter, Bruel and luxury brand Jennifer Tattanelli. Please read the press release for more details about this announcement.

• OnWindows offers more background on BEDIN Shop Systems in

BEDIN Shop Systems has more than 20 years’ experience in software development for retail stores and an internationally recognised innovation track record. Our latest achievement is aKite: the first POS and in-store software-as-a-service (SaaS) solution based on Windows Azure cloud computing platform.

Innovation does not happen by chance: the previous incarnation of aKite was Retail Web Services (RWS), a service-oriented architecture central hub that manages enterprise resource planning (ERP) integration and data sharing with stores for the largest DIY chain in Italy. This demonstrated the power and quality of service of smart clients and SaaS over traditional architectures.

The evolution towards the aKite suite of cloud computing services for retail stores was completed in 2009, when RWS was redesigned to take full advantage of a modern platform-as-a-service such as Azure, and a POS and an in-store smart client were released.

A SQL Server Compact Edition database in every POS.net front-store increases speed of service and allows disconnected operations. SHOP.net, the back-store smart client, manages dashboards, reporting, prices, promotions, stock and replenishment.

aKite is designed for easy integration. Version two introduced aKite Document Interchange (aDI), a software service based on Azure Service Bus that greatly simplifies integration with legacy ERP and other on-premises systems such as aKite DWH, a behind-the-firewall SQL Server-based application that is updated in near-real-time with sales from every store for business intelligence activity.

aKite: the most direct link with stores is up in the clouds!


Visual Studio LightSwitch

The ADO.NET Team announced EF 4.1 Is Coming (DbContext API & Code First RTW) in a 3/2/2011 post:

Our latest EF Feature Community Technology Preview (CTP5) has been out for a few months now. Since releasing CTP5 our team has been working hard on tidying up the API surface, implementing changes based on your feedback and getting the quality up to production ready standards. At the time CTP5 was released we also announced that we would be releasing a production ready go-live in Q1 of 2011 and we are on track to deliver on the commitment.

Our plan is to deliver a Go-Live Release Candidate version of the DbContext API and Code First in mid to late March. This Release Candidate will be titled ADO.NET Entity Framework 4.1. Approximately a month after the RC ships we plan to release the final Release to Web (RTW). We are not planning any changes to the API surface or behavior between RC and RTW; the release is purely to allow any new bugs found in the RC build to be evaluated and potentially fixed.

Why ADO.NET Entity Framework 4.1?

This is the first time we’ve released part of the Entity Framework as a stand-alone release and we’re excited about the ability to get new features into your hands faster than waiting for the next full .NET Framework release. We plan to release more features like this in the future. We chose to name the release EF 4.1 because it builds on top of the work we did in version 4.0 of the .NET Framework.

Where is it Shipping?

EF 4.1 will be available as a stand-alone installer that will install the runtime DbContext API and Code First components, as well as the Visual Studio Item Templates that allow using the new DbContext API surface with Model First and Database First development. We also plan to make the bits available as a NuGet package.

What’s Coming in RC/RTW?

The majority of our work involves small API tweaks and improving quality but there are a few changes coming in the RC that we want to give you a heads up about.

- Rename of DbDatabase

We heard you; admittedly, the name was a little strange. It is going back to ‘Database’ for RC/RTW.

- Rename of ModelBuilder

To align with the other core classes, ModelBuilder will become DbModelBuilder.

- Validation in Model First and Database First

The new validation feature was only supported in Code First in CTP5. In RC the validation feature will work with all three development workflows (Model First, Database First, and Code First).

- IntelliSense and online docs

We’ve held off on extensively documenting the CTPs because the API surface has been changing so much. RC will have IntelliSense and online documentation.

- Removing Code First Pluggable Conventions

This was a very painful decision, but we have decided to remove the ability to add custom conventions for our first RC/RTW. It has become apparent we need to do some work to improve the usability of this feature, and unfortunately we couldn’t find time in the schedule to do this and get quality up to the required level. You will still be able to remove our default conventions in RC/RTW.

There are also a few common requests that are not going to be available in the RC/RTW. We really want to address these scenarios and plan to do so in coming releases. We’ve had overwhelming feedback asking for a go-live release, though, and have decided to finish our current feature set and ship it.

- Code First bulk column renaming not supported

On a few forum threads we had discussed possibly re-enabling the ability to rename columns when specifying properties to be mapped, i.e. modelBuilder.Entity<Person>().Map(m => m.Properties(p => new { person_id = p.Id, person_name = p.Name }));

This is not going to be supported in our first RC/RTW.

- No compiled query support from DbContext

Unfortunately, due to some technical limitations in the compiled query functionality we shipped in .NET Framework 4.0, we are unable to support compiled queries via the DbContext API. We realize this is a painful limitation and will work to enable this for the next release.

- No stored procedure support in Code First

The ability to map to stored procedures is a common request but is not going to be available in our first RC/RTW of Code First. We realize this is an important scenario and plan to enable it in the future, but didn’t want to hold up a go-live release for it.

- Database Schema Evolution (Migrations)

One of the most common requests we get is for a solution that will evolve the database schema as your Code First model changes over time. We are working on this at the moment but we’ve also heard strong feedback that this shouldn’t hold up the RTW of Code First. In light of this our team has been focusing on getting the current feature set production ready. Hence a schema evolution solution will not be available for our first RTW. You’ll start to see more on this feature once we have RTW released.

Thank You

It has been great to have so much community involvement helping us drive these features; thank you for giving us your valuable input.

ADO.NET Entity Framework Team

Entity Framework articles are in this section because Visual Studio LightSwitch depends on EF 4+.
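To illustrate what the “Database Schema Evolution (Migrations)” request is about, here is a toy sketch that compares the columns a model declares with the columns a table actually has and adds any that are missing. It is not Entity Framework's migrations feature, which the team says is still in progress; it assumes SQLite via Python's `sqlite3` module, and `evolve_schema` and the model dictionary are hypothetical.

```python
import sqlite3
from typing import Dict, List, Set


def existing_columns(db: sqlite3.Connection, table: str) -> Set[str]:
    # PRAGMA table_info returns one row per column; field 1 is the column name.
    return {row[1] for row in db.execute(f"PRAGMA table_info({table})")}


def evolve_schema(db: sqlite3.Connection, table: str, model: Dict[str, str]) -> List[str]:
    """Add columns declared in `model` (name -> SQL type) that `table` lacks.

    Table and column names are interpolated directly, so they must come from
    trusted model definitions, never from user input.
    """
    current = existing_columns(db, table)
    added = []
    for name, sql_type in model.items():
        if name not in current:
            db.execute(f"ALTER TABLE {table} ADD COLUMN {name} {sql_type}")
            added.append(name)
    db.commit()
    return added
```

Calling `evolve_schema` a second time with the same model adds nothing, so the step could run on every start-up. A real tool would also need to handle renamed and dropped columns, type changes and existing data, none of which this sketch attempts.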

<Return to section navigation list>

Windows Azure Infrastructure

David Pallman (@davidpallman) reported Book Release: The Windows Azure Handbook, Volume 1: Strategy & Planning in a 3/1/2011 post:

I’m very pleased to announce that my first Windows Azure book is now available, The Windows Azure Handbook, Volume 1: Strategy & Planning. In this post I’ll give you a preview of the book. This is the first in a four-volume series that covers 1-Planning, 2-Architecture, 3-Development, and 4-Management. For information about the book series see my previous post or visit the book web site at http://azurehandbook.com/. The book can be purchased through Amazon.com and other channels.

This first volume is intended for business and technical decision makers and is concerned with understanding, evaluating, and planning for the Windows Azure platform. Here’s how the book is organized:

Part 1, Understanding Windows Azure, acquaints you with the platform and what it can do.

• Chapter 1 explains cloud computing.

• Chapter 2 provides an overview of the Windows Azure platform.

• Chapter 3 describes the billing model and rates.

Part 2, Planning for Windows Azure, explains how to evaluate and plan for Windows Azure.

• Chapter 4 describes a responsible process for cloud computing evaluation and adoption.

• Chapter 5 describes how to lead discussions on envisioning risk vs. reward.

• Chapter 6 is about identifying cloud opportunities for your organization.

• Chapter 7 explains how to profile applications and determine their suitability for Windows Azure.

• Chapter 8 describes how to approach migrations and estimate their cost.

• Chapter 9 covers how to compute Total Cost of Ownership (TCO) and Return on Investment (ROI).

• Chapter 10 is about strategies for adopting Windows Azure.

Here are the chapter introductions to give you an idea of what each chapter covers:

Introduction

This book is about cloud computing using Microsoft’s Windows Azure platform. Accordingly, I have a two-fold mission in this introduction: to convince you cloud computing is worth exploring, and to convince you further that Windows Azure is worth exploring as your cloud computing platform. Following that I’ll explain the scope, intended audience, and organization of the book.

Chapter 1: Cloud Computing Explained

In order to appreciate the Windows Azure platform it’s necessary to start with an understanding of the overall cloud computing space. This chapter provides general background information on cloud computing. We’ll answer these questions:

• What is cloud computing?

• How is cloud computing being used?

• What are the benefits of cloud computing?

• What different kinds of cloud computing are there?

• What does cloud computing mean for IT?

Chapter 2: Windows Azure Platform Overview

In the previous chapter we explained cloud computing generally. Now it’s time to get specific about the Windows Azure platform. We’ll answer these questions:

• Where does Windows Azure fit in the cloud computing landscape?

• What can Windows Azure do?

• What are the technical underpinnings of Windows Azure?

• What are the business benefits of Windows Azure?

• What does Windows Azure cost?

• How is Windows Azure managed?

Chapter 3: Billing

The Windows Azure platform has many capabilities and benefits, but what does it cost? In this chapter we’ll review the billing model and rates. We’ll answer these questions:

• How is a Windows Azure billing account set up and viewed?

• Is Windows Azure available in my country, currency, and language?

• What is the Windows Azure pricing model?

• What are the metering rules for each service?

• What is meant by “Hidden Costs in the Cloud”?

Chapter 4: Evaluating Cloud Computing

Planning for cloud computing is an absolute necessity. Not everything belongs in the cloud, and even those applications that are well-suited may require revision. There are financial and technical analyses that should be performed. In this chapter we’ll explain how to evaluate cloud computing responsibly. We’ll answer these questions:

• What is the ideal rhythm for evaluating and adopting cloud computing?

• What is the value and composition of a cloud computing assessment?

• What should an organization’s maturity goals be for cloud computing?

Chapter 5: Envisioning Risk & Reward

In order to make an informed decision about Windows Azure you need more than a mere understanding of what cloud computing is; you also need to determine what it will mean for your company. In this chapter we’ll answer these questions:

• How do you find the cloud computing synergies for your organization?

• How do you air and address risks and concerns about cloud computing?

• How will cloud computing affect your IT culture?

Chapter 6: Identifying Opportunities

Some applications and organizational objectives fit cloud computing and the Windows Azure platform well and some do not. This chapter is a catalog of patterns and anti-patterns that can help you swiftly identify candidates and non-candidates even if you are surveying a large portfolio of IT assets. Of course, candidates are only that: candidates. Business and technical analysis is necessary to make ultimate determinations of suitability. We’ll look at the following:

• Which scenarios are a good fit for Windows Azure?

• Which scenarios are a poor fit for Windows Azure?

Chapter 7: Profiling Applications

Applications, like people, are complex entities with many different attributes. In order to determine how good a fit an application is for cloud computing with Windows Azure, you’ll need to consider it from multiple angles and then come to an overall conclusion. We’ll look at the following:

• How do you profile an application?

• How can an application be scored for suitability?

• How should suitability scores be used?

Chapter 8: Estimating Migration

Some applications migrate easily to Windows Azure while others may require moderate or significant changes. Migration should begin with a technical analysis in which the extent of architectural, code, and data changes is determined. Once scoped, migration costs can be estimated and factored into the ROI calculation to determine if a migration makes sense. We’ll answer these questions:

• How is a migration candidate analyzed?

• How is a migration approach determined?

• How can operational costs be optimized in a migration?

• How is the extent of development work estimated?

Chapter 9: Calculating TCO & ROI

Whether or not cost reduction is your motivation for using cloud computing, you certainly want to know what to expect financially from the Windows Azure platform. Once you have a candidate solution in view, you can calculate Total Cost of Ownership, Savings, and Return on Investment (if you have collected sufficient information). If you have skilled financial people at your disposal, you should consider involving them in your analysis. In this chapter we present simple but revealing analyses anyone can perform that help you see the financial picture. We’ll answer these questions:

• How is TCO calculated for a Windows Azure solution?

• How is savings determined?

• How is ROI calculated?

Chapter 10: Adoption Strategies

Whether your use of Windows Azure is light or extensive, you should certainly be using it for well-defined reasons. A technology wave as big as cloud computing can be a game-changer for many organizations and is worth evaluating as a strategic tool. In this chapter we help you consider the possibilities by reviewing different ways in which Windows Azure can be used strategically by organizations. We also consider the impact it can have on your culture. We’ll answer these questions:

• Are there different levels of adoption for Windows Azure?

• How can Windows Azure be strategically used by IT?

• How can Windows Azure affect your culture?

• How can Windows Azure further your business strategy?

Well, there you have it: The Windows Azure Handbook, Volume 1: Strategy & Planning. I believe this is the only book for Windows Azure that covers business, planning, and strategy. I hope it is useful to organizations of all sizes in evaluating and adopting Windows Azure responsibly.

I say again: “Bravo, David!”
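The Chapter 9 questions about how TCO, savings and ROI are calculated reduce to simple arithmetic once the costs have been collected. The sketch below uses common textbook definitions (ROI as net savings divided by the migration investment); these are generic formulas assumed for illustration, not necessarily the method the book presents, and every figure in the usage example is hypothetical.

```python
from typing import Optional


def tco(upfront: float, monthly: float, months: int) -> float:
    """Total cost of ownership: one-time costs plus recurring costs over the period."""
    return upfront + monthly * months


def net_savings(current_monthly: float, cloud_monthly: float,
                migration_cost: float, months: int) -> float:
    """Current TCO minus cloud TCO; the existing system's up-front costs are treated as sunk."""
    return tco(0.0, current_monthly, months) - tco(migration_cost, cloud_monthly, months)


def roi(current_monthly: float, cloud_monthly: float,
        migration_cost: float, months: int) -> float:
    """Net savings as a fraction of the migration investment (1.0 == 100 percent)."""
    if migration_cost <= 0:
        raise ValueError("migration_cost must be positive")
    return net_savings(current_monthly, cloud_monthly, migration_cost, months) / migration_cost


def payback_months(current_monthly: float, cloud_monthly: float,
                   migration_cost: float) -> Optional[float]:
    """Months until monthly savings repay the migration cost; None if they never do."""
    monthly_saving = current_monthly - cloud_monthly
    if monthly_saving <= 0:
        return None
    return migration_cost / monthly_saving
```

For example, with hypothetical costs of $10,000 a month on premises, $6,000 a month on Windows Azure and a one-time $60,000 migration, a 36-month horizon gives net savings of $84,000, an ROI of 1.4 (140 percent) and a payback period of 15 months.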

Lori MacVittie (@lmacvittie) asserted We need to remember that operations isn’t just about deploying applications, it’s about deploying applications within a much larger, interdependent ecosystem in a preface to her How to Build a Silo Faster: Not Enough Ops in your DevOps post of 3/2/2011 to F5’s DevCentral blog:

One of the key focuses of devops – that hardy movement that seeks to bridge the gap between development and operations – is on deployment. Repeatable deployment of applications, in particular, as a means to reduce the time and effort that goes into the deployment of applications into a production environment.

But the focus is primarily on the automation of application deployment; on repeatable configuration of application infrastructure such that it reduces time, effort, and human error. Consider a recent edition of The Crossroads, in which CM Crossroads Editor-in-Chief Bob Aiello and Sasha Gilenson, CEO & Co-founder of Evolven Software, discuss the challenges of implementing and supporting automated application deployment.

So, as you have mentioned, the challenge is that you have so many technologies and so many moving pieces that are interdependent, and today each of the pieces comes with a lot of configuration. To give you a specific example, you know, the WebSphere application server, which is frequently used in the financial industry, comes with something like 16,000 configuration parameters. You know, Oracle has 100s and 100s, about 1200 parameters, only at the level of database server configuration. So, what happens is that there is a lot of information that you still need to collect; you need to centralize it.

-- Sasha Gilenson, CEO and Co-founder of Evolven Software

The focus is overwhelmingly on automated application deployment. That’s a good thing, don’t get me wrong, but there is more to deploying an application. Today there is still little focus beyond the traditional application infrastructure components. If you peruse some of the blogs and articles written on the subject by forerunners of the devops movement, you’ll find that most of the focus remains on automating application deployment as it relates to the application tiers within a data center architecture. There’s little movement beyond that to include other data center infrastructure that must be integrated and configured to support the successful delivery of applications to their ultimate end users.

That missing piece of the devops puzzle is an important one, as the operational efficiency enterprises seek by leveraging cloud computing, virtualization and dynamic infrastructure in general comes, in part, from the ability to automate and integrate that infrastructure into a more holistic operational strategy that addresses all three core components of operational risk: security, availability and performance.

It is at the network and application network infrastructure layers where we see a growing divide between supply and demand. On the demand side we see increases for network and application network resources such as IP addresses, delivery and optimization services, firewall and related security services. On the supply side we see a fairly static level of resources (people and budgets) that simply cannot keep up with the increasing demand for services and services management necessary to sustain the growth of application services.

INFRASTRUCTURE AUTOMATION

One of the key benefits that can be realized in a data center evolution from today to tomorrow’s dynamic models is operational efficiency. But that efficiency can only be achieved by incorporating all the pieces of the puzzle.

That means expanding the view of devops from today’s application deployment-centric view into the broader, supporting network and application network domain. Understanding the interdependencies and collaborative relationships of the delivery process is necessary to fully realize the efficiency gains proposed as the real benefit of highly virtualized and private cloud architectural models.

This is more important than you might think, as automating the configuration of, say, WebSphere in an isolated, application-tier-only operational model may be negatively impacted by later processes when infrastructure is configured to support the deployment. Understanding the production monitoring and routing/switching policies of delivery infrastructure such as load balancers, firewalls, identity and access management and application delivery controllers is critical to ensure that the proper resources and services are configured on the web and application servers. Operations-focused professionals aren’t off the hook, either, as understanding the application from a resource consumption and performance point of view will greatly advance their ability to create and subsequently implement the proper algorithms and policies in the infrastructure necessary to scale efficiently.
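The dependency described above can be made concrete with a small model: a load balancer's health monitor probes each application server, so the deployed application must expose whatever the monitor checks. The following is a hypothetical sketch, not any vendor's configuration; it assumes a `/health` path agreed between development and operations, and a `Pool` that round-robins only across members whose last health check passed.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Pool:
    """A load-balancer pool that round-robins across healthy members (hypothetical model)."""

    members: List[str]
    health_path: str = "/health"  # assumed path; the application must actually serve it
    healthy: Dict[str, bool] = field(default_factory=dict)
    _next: int = 0

    def run_health_checks(self, probe: Callable[[str, str], bool]) -> None:
        """Probe each member; `probe(member, path)` returns True if the check passed."""
        for member in self.members:
            self.healthy[member] = probe(member, self.health_path)

    def pick(self) -> str:
        """Return the next healthy member in round-robin order."""
        for _ in range(len(self.members)):
            member = self.members[self._next % len(self.members)]
            self._next += 1
            if self.healthy.get(member, False):
                return member
        raise RuntimeError("no healthy members in pool")
```

If a new build is deployed to one server without the agreed health endpoint, the monitor marks that server down and it silently drops out of rotation, which is the kind of dev/ops mismatch the paragraph above warns about.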

Consider the number of “touch points” in the network and application network infrastructure that must be updated and/or configured to support an application deployment into a production environment:

- Firewalls

- Load balancers / application delivery controller

- Health monitoring

- Load balancing algorithm

- Failover

- Scheduled maintenance window rotations

- Application routing / switching

- Resource obfuscation

- Network routing

- Network layer security

- Application layer security

- Proxy-based policies

- Logging

- Identity and access management

- Access to applications by

  - user

  - device

  - location

  - combinations of the above

- Auditing and logging on all devices

- Routing tables (where applicable) on all devices

- VLAN configuration / security on all applicable devices

The list could go on much further, depending on the breadth and depth of infrastructure support in any given data center. It’s not a simple process at all, and the “checklist” for a deployment on the operational side of the table is as lengthy and complex as it is on the development side. That’s especially true in a dynamic or hybrid environment, where resources requiring integration may themselves be virtualized and/or dynamic. While the number of parameters needing configuration in a database, as mentioned by Sasha above, is indeed staggering, so too are the parameters and policies needing configuration in the network and application network infrastructure.
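One way to act on the “checklist” point is to treat the operational touch points as data that a deployment pipeline checks, just as it checks application configuration. A minimal sketch: the grouping of items by device below is a hypothetical example drawn loosely from the list above, and the set of completed items is assumed to come from whatever automation is in place.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical touch points grouped by device class, loosely drawn from the list above.
CHECKLIST: Dict[str, List[str]] = {
    "firewall": ["network layer security", "application layer security", "logging"],
    "load balancer": ["health monitoring", "load balancing algorithm",
                      "failover", "scheduled maintenance window rotations"],
    "identity and access management": ["access by user", "access by device",
                                       "access by location"],
    "all devices": ["auditing and logging", "routing tables", "VLAN configuration"],
}


def outstanding(checklist: Dict[str, List[str]],
                done: Set[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """(device, item) pairs not yet configured for this deployment."""
    return [(device, item)
            for device, items in checklist.items()
            for item in items
            if (device, item) not in done]


def ready_to_deploy(checklist: Dict[str, List[str]],
                    done: Set[Tuple[str, str]]) -> bool:
    """The release proceeds only when no infrastructure touch point is outstanding."""
    return not outstanding(checklist, done)
```

Keeping the infrastructure items in the same gate as the application's own deployment steps is one way to avoid the "faster silo" outcome: the release cannot be declared done while the network side is still pending.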

Without a holistic view of applications as just one part of the entire infrastructure, configurations may need to be unnecessarily changed during infrastructure service provisioning and infrastructure policies may not be appropriate to support the business and operational goals specific to the application being deployed.

DEVOPS or OPSDEV

Early on, Alistair Croll coined the term “web ops” for the concept of managing applications in conjunction with their supporting infrastructure. That term and concept eventually morphed into devops and has been adopted by many of the operational admins who must manage application deployments.

But it is becoming focused on supporting application lifecycles through ops with very little attention being paid to the other side of the coin, which is ops using dev to support infrastructure lifecycles.

In other words, the gap that drove the concept of automation and provisioning and integration across the infrastructure, across the network and application network infrastructure, still exists. What we’re doing, perhaps unconsciously, is simply enabling us to build the same silos that existed before a whole lot faster and more efficiently.

The application is still woefully ignorant of the network, and vice versa. And yet a highly virtualized, scalable architecture must necessarily include what are traditionally “network-hosted” services: load balancing, application switching, and even application access management. This is because, at some point in the lifecycle, integrating web and application services with their requisite delivery infrastructure manually becomes an impediment to both performance and economy of scale.

By 2015, tools and automation will eliminate 25 percent of labor hours associated with IT services.

As the IT services industry matures, it will increasingly mirror other industries, such as manufacturing, in transforming from a craftsmanship to a more industrialized model. Cloud computing will hasten the use of tools and automation in IT services as the new paradigm brings with it self-service, automated provisioning and metering, etc., to deliver industrialized services with the potential to transform the industry from a high-touch custom environment to one characterized by automated delivery of IT services. Productivity levels for service providers will increase, leading to reductions in their costs of delivery.

-- Gartner Reveals Top Predictions for IT Organizations and Users for 2011 and Beyond

Provisioning and metering must include more than just the applications and their immediate infrastructure; they must reach outside their traditional demesne and take hold of the network and application network infrastructure simply to sustain the savings achieved by automating much of the application lifecycle. The interdependence that exists between applications and “the network” must not only be recognized, but explored and better understood such that additional efficiencies in delivery can be achieved by applying devops to core data center infrastructure.

Otherwise, we risk building even taller silos in the data center, and what’s worse, we’ll be building them even faster and more efficiently than before.

Dan Kusnetzky asked Is cloud computing about productivity or something else? in a 3/2/2011 post to ZDNet’s Virtually Speaking blog:

From time to time, I read about a study that appears to be using a measurement that doesn’t make sense. When I see such a study, I have to wonder if the sponsors understood the basic concept.

I came across just such a study. Gary Kim recently published Cloud Computing Won’t Boost Productivity Much, Study Suggests, which mentions a study published by the U.K. Department for Business, Innovation and Skills. The conclusion of the study is that cloud computing is not going to contribute substantially to the productivity of small to medium size enterprises in the U.K.

While this is an interesting notion, cloud computing is a delivery and consumption model that allows organizations to access computing resources larger than they could otherwise purchase and more complex than their IT team could manage, and to deploy, tear down and then redeploy these resources very rapidly without having to acquire real estate, build buildings, obtain power and communication, etc. It is also about having access to enterprise-class applications and tools that might be more expensive than an organization would be able to acquire on its own.

Cloud computing is far more about agility, cost control and being able to do things previously impossible than about being able to do more of the same old thing.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• Gavin Clarke (@gavin_clarke) and Mary Jo Foley (@maryjofoley) wonder “where, oh, where is Microsoft's Windows Azure [Platform] Appliance” starting at 00:31:45 in their 00:44:11 Elop's choice: Microsoft and Nokia take a bruising MicroBite podcast for The Register of 3/2/2011:

Stephen Elop's decision to make Windows Phone the Nokia smartphone operating system of choice could be rationalized, even defended, right up until the point where Microsoft's new phone platform bricked Samsung Omnia 7 phones.

Suddenly, and without any real explanation, Windows Phone 7 couldn't be updated on 10 per cent of phones. Imagine if a Windows Update wouldn't update 10 per cent of the Windows PCs out there.

Now, there are questions over the operating system's reliability. And the incident highlights the hoops customers must jump through simply to update their phone's software.

Meanwhile, Microsoft is putting Internet Explorer 9 on the Mango release of Windows Phone later this year. That's right - IE 9, not something that's "IE-like". How will getting rid of Microsoft's creaky old browser for mobile change the browser market-share war? Will it mean Microsoft can shift attention from talking about JavaScript, HTML5 compliance, and privacy?

@00:31:45 And where, oh, where is Microsoft's Windows Azure [Platform A]ppliance, promised with such fanfare last summer? The thing was supposed to arrive by now, from Dell, Hewlett-Packard and Fujitsu. And could the device's future be threatened by [Satya Nadella,] the new executive Steve Ballmer picked to lead Microsoft's business group responsible for Azure?

Join Reg software editor Gavin Clarke and All-About-Microsoft blogger Mary-Jo Foley on the latest MicroBite as they assess the impact of the Microkia Samsung bricking, look at where IE is headed, and put the microscope on Microsoft's new Azure man.


Microsoft’s current Windows Azure Platform Appliance page’s “Who’s using the appliance?” section says:

Dell, eBay, Fujitsu and HP intend to deploy the appliance in their datacenters to offer new cloud services.

As Mary Jo noted in the podcast, eBay is still testing WAPA for rollout. See also the “Larry Dignan (@ldignan) posted on 2/18/2011 a video clip of Dave Cullinane, eBay’s Chief Information Security Officer, reaffirming eBay to build its own private cloud with Windows Azure” item in OakLeaf’s Windows Azure and Cloud Computing Posts for 2/17/2011+. Cullinane doesn’t mention WAPA by name.

• Derrick Harris reported Dell Cloud OEM Partner DynamicOps Gets $11M in a 2/28/2011 post to GigaOm’s Structure blog:

Burlington, Mass.-based startup DynamicOps has raised $11 million in Series B funding from Sierra Ventures, Next World Capital and investment bank Credit Suisse’s Next II venture group. As I reported in October, DynamicOps emerged as the commercial materialization of an internal virtualization management project within Credit Suisse in 2008, and it has since expanded into the private cloud computing space. By way of an OEM deal, DynamicOps’ Cloud Automation Manager software provides the self-service component of Dell’s Virtual Integrated System management suite. Given its already-solid foundation, the new capital could go a long way toward making DynamicOps a household name in the private-cloud space.

That DynamicOps has an existing customer base to which it can pitch its Cloud Automation Manager distinguishes it from most private-cloud startups that must start their sales efforts from Step 1. I noted in my earlier post that DynamicOps was able to build a solid customer base for its virtualization management software, Virtual Resource Manager, based in part on its already having been proven within Credit Suisse. As VP of Marketing Rich Bordeaux told me then, one customer currently manages 30,000 VMs and virtual desktops and is looking to have more than 60,000 within 18 months. Now, it has $11 million more “to fund global sales, marketing and development of its private cloud automation solutions.”

But the disclaimer that applies to all private-cloud efforts, be it Cloud.com, Nimbula, Abiquo, Eucalyptus or whoever, applies to DynamicOps as well: we’ve still yet to see any real uptake (publicly, at least) of these products beyond service providers and some proofs-of-concept within traditional businesses. The technology is generally innovative, and surveys regularly show relatively high interest in private clouds over public clouds, so it seems like mainstream adoption shouldn’t be too far out. It wouldn’t be too surprising to see DynamicOps be the company to break through (even beyond the users it garners through the Dell integration), but we’re still waiting for evidence that anybody can.


<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) asserted App Stores: From Mobile Platforms To VMs – Ripe For Abuse in a 3/2/2011 post:

This CNN article titled “Google pulls 21 apps in Android malware scare” describes an alarming trend in which malicious code is embedded in applications which are made available for download and use on mobile platforms:

Google has just pulled 21 popular free apps from the Android Market. According to the company, the apps are malware aimed at getting root access to the user’s device, gathering a wide range of available data, and downloading more code to it without the user’s knowledge.

Although Google has swiftly removed the apps after being notified (by the ever-vigilant “Android Police” bloggers), the apps in question have already been downloaded by at least 50,000 Android users.

The apps are particularly insidious because they look just like knockoff versions of already popular apps. For example, there’s an app called simply “Chess.” The user would download what he’d assume to be a chess game, only to be presented with a very different sort of app.

Wow, 50,000 downloads. Most of those folks are likely blissfully unaware they are owned.

In my Cloudifornication presentation, I highlighted that the same potential for abuse exists for “virtual appliances” which can be uploaded for public consumption to app stores and VM repositories such as those from VMware and Amazon Web Services.

The feasibility of this vector was deftly demonstrated shortly afterward by the guys at SensePost (Clobbering the Cloud, Black Hat), who uploaded a non-malicious “phone home” VM to AWS that was promptly downloaded and launched…

This is going to be a big problem in the mobile space and potentially just as impacting in cloud/virtual datacenters as people routinely download and put into production virtual machines/virtual appliances, the provenance and integrity of which are questionable. Who’s going to police these stores?

(Update: I loved Christian Reilly’s comment on Twitter regarding this: “Using a public AMI is the equivalent of sharing a syringe.”)

/Hoff


Related articles

- Google pulls 21 apps in Android malware scare (cnn.com)

- More than 50 Android apps found infected with rootkit malware (guardian.co.uk)
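Hoff's question about the provenance and integrity of downloaded virtual appliances has no complete technical answer, but one basic safeguard is to refuse to launch an image whose cryptographic digest does not match a value the publisher distributes through a separate, trusted channel. A minimal sketch using Python's `hashlib`; the function names are hypothetical, and a matching digest proves only that the image is the one the publisher listed, not that the publisher itself is trustworthy.

```python
import hashlib
from typing import BinaryIO


def sha256_of_stream(stream: BinaryIO, chunk_size: int = 1 << 20) -> str:
    """Hash in chunks so multi-gigabyte VM images never need to fit in memory."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verify_stream(stream: BinaryIO, expected_hex: str) -> bool:
    """True only if the stream's SHA-256 digest equals the publisher's published value."""
    return sha256_of_stream(stream) == expected_hex.strip().lower()


def verify_image(path: str, expected_hex: str) -> bool:
    """Check a downloaded image file before it is launched."""
    with open(path, "rb") as f:
        return verify_stream(f, expected_hex)
```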

The HPC in the Cloud blog reported Survey Shows Poor Performance of Cloud Applications Bears High Costs, Delays Cloud Adoption in a 3/2/2011 press release:

DETROIT, March 2, 2011 -- Compuware Corporation (Nasdaq: CPWR), the technology performance company, today announced the findings of a cloud performance survey conducted by Vanson Bourne. The survey of 677 businesses in North America and Europe examined the impact application performance has on cloud application strategy and deployments.

The survey reveals that the majority of organizations in both regions are well aware that poor performance of cloud applications has a direct impact on revenue. The survey shows that businesses in North America are losing on average almost $1 million per year because of the poor performance of their cloud-based applications. In Europe, the figure is more than $0.75 million. The survey also reveals that organizations are delaying their cloud deployments because of performance concerns and believe that service level agreements (SLAs) for cloud applications should be based on actual end-user experience and not just on service provider availability metrics.

Other key survey findings include:

* 58 percent of organizations in North America and 57 percent of organizations in Europe are slowing or hesitating on their adoption of cloud-based applications because of performance concerns.

* 94 percent of organizations in North America and 84 percent of organizations in Europe believe that SLAs for cloud applications have to be based on the actual end-user experience, not just service provider availability metrics.

"These survey results highlight the growing awareness that the ability of IT organizations to guarantee the performance of cloud applications is severely restricted, and more rigorous SLAs are required for issues such as internet connection and performance, end-user experience and other factors," said Richard Stone, Cloud Computing Solutions Manager at Compuware Corporation. "The ability to monitor and measure these new, business-oriented SLAs will be a critical necessity. Performance in the cloud requires an integrated application performance management solution that provides application owners with a single, end-to-end view of the entire delivery chain."

Download the survey whitepaper here: http://bit.ly/etPRlv.

Compuware delivers the market's only application performance management solution, Gomez and Vantage, that provides broad visibility and deep-dive resolution across the entire application delivery chain, spanning both the enterprise and the Internet. These unrivaled capabilities make Compuware the global standard for optimizing application performance.

Methodology

In June 2010, Compuware commissioned the independent market research company Vanson Bourne to conduct a study of IT directors in three European markets to gain a greater understanding of attitudes towards cloud computing in Europe. The research was conducted by telephone with a pre-qualified audience determined by Vanson Bourne based on Compuware's requirements. In conducting the survey, Vanson Bourne questioned 300 European IT directors in large enterprise organizations with more than 1,000 employees: 100 IT directors in the UK, 100 in Germany and 100 in France. The objective was to gain deeper insight into overall attitudes towards cloud computing performance and into the impact of cloud computing performance on their organizations.

Compuware asked the same questions in the North American market to compare the attitudes of business leaders in the two markets. The North American survey was conducted in October 2010 via e-mail to 377 organizations in the United States and Canada.


No significant articles today.

<Return to section navigation list>

Cloud Computing Events

• Randy Bias (@randybias) reported participation by CloudScaling personnel in his Cloud Connect 2011 post of 3/2/2011:

The annual Cloud Connect conference is next week (March 7-10). It’s quickly become one of the most well-produced events in our industry, and we’re honored to participate. In fact, I consider Cloud Connect to be the thought leadership event for cloud computing.

The 2011 event is the most ambitious yet in the diversity of topics and sheer size. Alistair Croll’s team has put together an excellent series of sessions and participants.

Four folks from our leadership team are participating in 12 panels plus a keynote address this year:

- Randy Bias, Co-Founder & CTO

- Andrew Shafer, Vice President of Engineering

- Francesco Paola, Vice President, Client and Partner Services

- Paul Guth, Vice President, Technical Operations

Below is a quick lineup of where we’re speaking and when. Come see us in Santa Clara at the Convention Center, and bring the tough questions: you know we like a good debate!

Monday, March 7, 2011

Cloudy Operations

Grand Ballroom E — 8:59 – 4:30

Andrew Shafer

Cloud Performance Summit: Tools and Techniques for Performance Optimization

Grand Ballroom H — 1:15 – 4:30

Paul Guth

Tuesday, March 8, 2011

Keynote

Theater — 9:45 – 9:55

Randy Bias

Open Source Built the Web – Now It’s Helping to Build the Cloud

Grand Ballroom G — 2:30 – 3:30

Randy Bias

How Does ROI Drive Architecture?

Grand Ballroom E — 3:45 – 4:30

Randy Bias

Platforms & Ecosystems: Birds of a Feather (BOF)

Grand Ballroom H — 6:00 – 7:30

Andrew Shafer

Wednesday, March 9, 2011

Private Cloud Conference

Virtualization on Demand vs. Private Cloud

Room 203 — 11:15 – 12:15

Randy Bias

Real Barriers and Solutions to Implementing Private Cloud

Room 203 — 1:15 – 2:15

Randy Bias and Francesco Paola

Implementing Private Cloud: Open Source vs Everyone Else

Room 203 — 2:30 – 3:30

Randy Bias

Hybrid Cloud Computing: Final Answer or Transitory Architecture?

Room 203 — 3:45 – 4:30

Randy Bias

Thursday, March 10, 2011

Moving from “Dev vs. Ops” to “DevOps”

Grand Ballroom E — 8:15 – 9:15

Andrew Shafer and Paul Guth

Performance and Monitoring

Grand Ballroom G — 9:30 – 10:30

Randy Bias

Ask the Experts: The DevOps Panel

Grand Ballroom E — 10:45 – 11:45

Andrew Shafer and Paul Guth

If you’ve not registered yet, do it today. We’ll see you in Santa Clara.

Randy, and to a lesser extent Andrew, are likely to be hoarse by Friday morning.

Jeff Wettlaufer reported Identity and Access coming to MMS 2011 in a 3/2/2011 post to Steve Wiseman’s blog:

Looking for Identity and Access content at MMS? Our teammate Brjann posted this over on his blog…

The Microsoft Management Summit in Las Vegas, March 21-25, will host breakout sessions and an Ask the Experts booth on Identity and Access for the first time. I’m very excited to be able to deliver four different sessions during the week that should give attendees a good overall view of the important role IDA plays in datacenter and cloud management, with BI05 focusing on System Center and private cloud and BI08 focusing more on public cloud SaaS and PaaS offerings.

The center of our Identity and Access solutions is of course Active Directory, so all sessions will look at extending that platform with integration into the cloud using technologies such as AD Federation Services in BI07 and Forefront Identity Manager in BI06.

BI05 Identity & Access and System Center: Better Together

System Center and IDA are already integrated and leverage Active Directory, but couldn’t you do more? This session talks about roles and identities in System Center products and how we can leverage IDA solutions, with demos of how we can use a tool such as Forefront Identity Manager in conjunction with the roles and access policies in System Center.

BI06 Technical Overview of Forefront Identity Manager (FIM)

Join us for a lap around Forefront Identity Manager going through architecture, deployment and business scenarios in a presentation filled with demos. This session should give you a good understanding of what FIM is and what it can do to put you in control of identities across different directories and applications.

BI07 Technical Overview of Active Directory Federation Services 2.0

Join us for a lap around Active Directory Federation Services 2.0 covering architecture, deployment and business scenarios for using AD FS to extend single sign-on from on-premises Active Directory to Office 365, Windows Azure and partners.

BI08 Identity & Access and Cloud: Better Together

Organizations have started using services such as Office 365 and Windows Azure but are worried about security and how to manage identities and provide access. This session goes through how Identity and Access from Microsoft with solutions such as Forefront Identity Manager, AD Federation Services, Windows Identity Foundation and Windows Azure AppFabric Access Control Services play together to enable organizations to access cloud applications and also enable access for their customers and partners.

Jeff is Senior Technical Product Manager, System Center, Management and Security Division.

See my Cloud Management/DevOps Sessions at Microsoft Management Summit 2011 of 2/28/2011 for a list of all cloud-related sessions at MMS 2011.

Patriek van Dorp (@pvandorp) announced a Developer Contest: Develop the Windows Azure User Group NL Website on 3/2/2011:

As the number of members (a staggering 180 at the time of this writing) exceeds all our expectations, we are becoming aware that we cannot do without a good website: a portal on which we can make announcements and keep track of upcoming events, handle registration for local events, share files like presentations and demos, collect suggestions and feedback, and collect information about speakers and so on.

The requirements for the portal are:

- It must run in Windows Azure of course.

- It must be manageable and dynamic.

- It must have an announcement section for announcing local events or webcasts.

- It must have a registration section for registering for these events.

- It must have a calendar for keeping track of upcoming events.

- It must have some kind of download section from which users can download presentations and/or demos.

- It must have a suggestions and feedback section.

Microsoft will sponsor two Windows Azure instances and a Storage Account to host the website in Windows Azure.

Using, for instance, Orchard or mojoPortal, this website could be up in no time!

Follow @wazugNL and tweet your suggestions. Send your e-mail address by Direct Message to exchange more information.

Patriek is a Senior Microsoft Technology Specialist at Sogeti in the Netherlands.

Patriek van Dorp (@pvandorp) also reported on 3/2/2011 a DevDays 2011 Pre-conference: Azure scheduled for 4/27/2011:

On the 27th of April, Microsoft, in association with Pluralsight, facilitates an in-depth Windows Azure training day and an online preparation session. This preparation session should get developers up to speed for the in-depth training day at the DevDays 2011 pre-conference. Attendees receive a free one-month subscription to all Pluralsight online Azure training courses.

For more information, check out the DevDays 2011 website.

Penton Media announced the Cloud Connections conference and exposition to be held 4/17 through 4/21 at the Bellagio hotel, Las Vegas, NV:

Whether you are just starting to evaluate or are fine-tuning your cloud strategy – this event is for you! It’s 4 days of technical sessions, networking opportunities, and bet-the-business market knowledge for business leaders, IT managers and directors, and developers to explore cloud computing services and products, deepen technical skills, and determine best-practices solutions for implementing private or public cloud infrastructure and services.

Cloud Connections brings together business decision makers, IT managers and directors, and developers to explore cloud computing services and products, deepen technical skills, and determine best-practices solutions for implementing private or public cloud infrastructure and services.

- Determine best-practices solutions for implementing private or public cloud infrastructure and services.

- Understand how to leverage and apply ideas and proven examples into your organization

- Learn how to maximize cloud performance and minimize costs

- Learn about identity federation - protocols, architecture and uses

- Compare security solutions for the cloud

- Network with your peers, IT pros, carriers and a wide range of cloud infrastructure, product and service vendors!

The conference is co-located with Penton’s Mobile Connections and Virtualization Connections conferences.

Penton has deployed a Cloud IT Pro Online sister Web site to its Windows IT Pro and Connected Planet Online (formerly TelephonyOnline.com) sites.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Joe Panettieri described How Cisco CTO Padmasree Warrior Got Her Cloud Game On in a 3/2/2011 post to the TalkinCloud blog:

When Padmasree Warrior joined Cisco Systems as chief technology officer a bit more than two years ago, CEO John Chambers had a special assignment for her. Chambers’ mandate to Warrior: Develop a cloud computing strategy. Fast forward to this week’s Cisco Partner Summit 2011 in New Orleans, and Warrior (pictured) sounds pretty confident about Cisco’s cloud progress.

During a press briefing that ended about ten minutes ago, Warrior described how Cisco started to develop a cloud strategy and ultimately launched the Cisco Cloud Partner Program this week. The story starts a bit more than two years ago, when Warrior formed a working group within Cisco. It included about 15 to 20 people who met on weekends and evenings to whiteboard viewpoints and begin to sketch some key ideas. The group explored potential emerging applications and the role of the network in the cloud.