Windows Azure and Cloud Computing Posts for 2/15/2010+

Windows Azure, SQL Azure Database and related cloud computing topics now appear in this weekly series.

• Updated 2/16/2010 2:30 PM PST: Updates for Tuesday

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Table and Queue Services

- SQL Azure Database (SADB)

- AppFabric: Access Control, Service Bus and Workflow

- Live Windows Azure Apps, Tools and Test Harnesses

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform was published on 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage,” here on 9/29/2009.

You can now download and save the following two online-only chapters in Microsoft Office Word 2003 *.doc format by FTP:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available from the book's Code Download page; these chapters will be updated in February 2010 to reflect the January 4, 2010 commercial release.

Azure Blob, Table and Queue Services

Nadezhda Lukyanova’s Register Azure Storage Account with CloudBerry Explorer post of 2/15/2010 asserts “In this post we will show you how to get Microsoft Azure project, create storage account and register the account:”

Microsoft Azure blob storage is an alternative cloud storage service that helps store an unlimited amount of binary data at low cost. As of February 1, 2010 the service is out of beta and you have to start paying for usage. One of the exciting thing[s] about Microsoft Azure (and I can’t help sharing it here) is that there is actually a so-called Introductory Special offer that enables you to try Windows Azure at no charge! It includes 500 MB of free storage and 10,000 free storage transactions per month.

In this post we will show you how to get Microsoft Azure project, create storage account and register the account with CloudBerry Explorer for Windows Azure. …

<Return to section navigation list>

SQL Azure Database (SADB, formerly SDS and SSDS)

• Steve Hale of the Microsoft SQL Server Native Client (SQLNCli) team posted Using SQL Server Client APIs with SQL Azure Version 1.0 on 2/12/2010:

This post describes some of the issues that the application developer should be aware of when writing ODBC, ADO.NET SQLClient, BCP, and PHP code that connects to SQL Azure. There is no intention here to replace the SQL Azure documentation available on MSDN (http://msdn.microsoft.com/en-us/library/ee336279.aspx). Rather, the intent here is to provide a quick discussion of the primary issues impacting applications ported to or written for SQL Azure vs. SQL Server. Developers should reference the MSDN documentation for more detailed description of SQL Azure functionality.

Using supported SQL Server client APIs (ODBC, BCP, ADO.NET SQLClient, PHP) with SQL Azure is relatively easy; however, SQL Azure, while very similar, is not SQL Server. Because of this there are some pitfalls that need to be avoided to have the best experience when writing an application that connects to SQL Azure. …

Steve continues with details for getting the most out of SQLNCli with SQL Azure.
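Steve's post is the place to look for the specific pitfalls. As one illustration, assuming that transient connection failures (connections the service closes or throttles) are among them, a client can wrap each database call in a retry loop with exponential backoff. This Python sketch is generic: `TransientError` and `with_retry` are hypothetical names, and a real client would map its driver's specific error codes onto `TransientError`.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a dropped or throttled SQL Azure connection."""


def with_retry(op: Callable[[], T], attempts: int = 4, base_delay: float = 0.5,
               sleep: Callable[[float], None] = time.sleep) -> T:
    """Call op(), retrying on TransientError with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return op()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller see the failure
            delay = base_delay * (2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, delay * 0.1))  # up to 10% jitter
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_query() -> str:
        # Simulated operation that fails twice before succeeding
        calls["n"] += 1
        if calls["n"] < 3:
            raise TransientError("connection closed by server")
        return "ok"

    print(with_retry(flaky_query, sleep=lambda seconds: None))  # prints "ok"
```

Injecting `sleep` keeps the helper testable without real delays; only errors classified as transient are retried, so genuine SQL errors still surface immediately.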

James Hamilton discusses how MySpace scales out 440 SQL Server instances hosting more than 1,000 databases to support 130 million unique monthly users in his Scaling at MySpace post of 2/15/2010:

MySpace makes the odd technology choice that I don’t fully understand. And, from a distance, there are times when I think I see opportunity to drop costs substantially. But, let’s ignore that, and tip our hat to MySpace for the incredible scale they are driving. It’s a great social networking site and you just can’t argue with the scale they are driving. Their traffic is monstrous and, consequently, it’s a very interesting site to understand in more detail.

Lubor Kollar of SQL Server just sent me this super interesting overview of the MySpace service. My notes follow and the original article is at: http://www.microsoft.com/casestudies/Case_Study_Detail.aspx?casestudyid=4000004532. …

I particularly like social networking sites like Facebook and MySpace because they are so difficult to implement. Unlike highly partitionable workloads like email, social networking sites work hard to find as many relationships, across as many dimensions, amongst as many users as possible. I refer to this as the hairball problem. There are no nice clean data partitions which makes social networking sites amongst the most interesting of the high scale internet properties. More articles on the hairball problem:

The combination of the hairball problem and extreme scale makes the largest social networking sites like MySpace some of the toughest on the planet to scale. Focusing on MySpace scale, it is prodigious:

- 130M unique monthly users

- 40% of the US population has MySpace accounts

- 300k new users each day

The MySpace Infrastructure:

- 3,000 Web Servers

- 800 cache servers

- 440 SQL Servers

Looking at the database tier in more detail:

- 440 SQL Server Systems hosting over 1,000 databases

- Each running on an HP ProLiant DL585

- 4 dual core AMD procs

- 64 GB RAM

- Storage tier: 1,100 disks on a distributed SAN (really!)

- 1PB of SQL Server hosted data
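The database-tier figures above invite some back-of-the-envelope arithmetic. This Python sketch derives per-server averages from the quoted numbers only; treating 1 PB as 1,000,000 decimal GB and assuming data and users are spread evenly across servers and disks are my simplifications, not details from the case study.

```python
SQL_SERVERS = 440
DATABASES = 1_000            # "over 1,000", so per-server figures are lower bounds
CORES_PER_SERVER = 4 * 2     # 4 dual-core AMD processors per DL585
RAM_GB_PER_SERVER = 64
SAN_DISKS = 1_100
DATA_GB = 1_000_000          # 1 PB, taken as a decimal petabyte (assumption)
MONTHLY_USERS = 130_000_000


def tier_summary() -> dict:
    """Even-spread averages derived from the quoted MySpace figures."""
    return {
        "databases_per_server": DATABASES / SQL_SERVERS,
        "total_cores": SQL_SERVERS * CORES_PER_SERVER,
        "total_ram_gb": SQL_SERVERS * RAM_GB_PER_SERVER,
        "data_gb_per_server": DATA_GB / SQL_SERVERS,
        "data_gb_per_disk": DATA_GB / SAN_DISKS,
        "users_per_server": MONTHLY_USERS / SQL_SERVERS,
    }


if __name__ == "__main__":
    for name, value in tier_summary().items():
        print(f"{name}: {value:,.1f}")
```

The results work out to roughly 2.3 databases, 2.3 TB of data and 295,000 monthly users per server, about 909 GB per SAN disk, and 3,520 cores with 28,160 GB of RAM across the tier.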

As an ex-member of the SQL Server development team, and perhaps less than completely unbiased, I’ve got to say that 440 database servers across a single cluster is a thing of beauty. [Emphasis added.]

More scaling stories: http://perspectives.mvdirona.com/2010/02/07/ScalingSecondLife.aspx.

Hats off to MySpace for delivering a reliable service, in high demand, with high availability. Very impressive.

If MySpace can solve the “hairball” problems with scaling out SQL Server, I wonder why SQL Azure can’t support databases larger than 10 GB.
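To put the 10 GB limit in perspective, here is a rough count of how many capped databases MySpace's 1 PB would occupy, plus a hypothetical hash-based mapping of users to databases. It's a sketch under simplifying assumptions (decimal units, no index or log overhead, partitioning by user ID); `databases_needed` and `shard_for` are illustrative names. Hashing users across databases doesn't by itself solve the hairball problem, because relationships between users on different databases still require cross-database queries.

```python
import hashlib
import math

SQL_AZURE_MAX_DB_GB = 10  # per-database cap cited in the text


def databases_needed(total_gb: float, cap_gb: float = SQL_AZURE_MAX_DB_GB) -> int:
    """Minimum number of capped databases needed to hold total_gb (no overhead)."""
    return math.ceil(total_gb / cap_gb)


def shard_for(user_id: str, shard_count: int) -> int:
    """Hypothetical stable mapping of a user to one of shard_count databases.

    SHA-256 is used instead of Python's built-in hash(), whose string
    hashing is randomized per process and so isn't stable across runs.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


if __name__ == "__main__":
    shards = databases_needed(1_000_000)  # 1 PB as 1,000,000 decimal GB
    print(shards)                         # 100000
    print(shard_for("user-42", shards))   # same index on every run
```

That's at least 100,000 separately managed databases, which suggests why the cap matters for hairball-style workloads.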

Carlos Femmer complains about the complexity of Transferring data from Sql Server 2008 R2 to Sql Azure in this 2/14/2010 post:

The current state of Sql Server 2008 R2 seems like it has a ways to go when dealing with Sql Azure. I tried several different ways of exporting data to load into Sql Azure and after several attempts, I have documented what I needed to move data over. Here is a quick and dirty way of moving data from Sql Server 2008 R2 to Sql Azure.

However, Carlos’ approach isn’t as quick as doing the same job with George Huey’s SQL Azure Migration Wizard, as I explain in my Using the SQL Azure Migration Wizard v3.1.3/3.1.4 with the AdventureWorksLT2008R2 Sample Database post of 1/23/2010.

<Return to section navigation list>

AppFabric: Access Control, Service Bus and Workflow

Thanigainathan’s Visual Studio 2010 RC first look and Windows Azure Tools post of 2/15/2010 describes installing VS 2010 RC and the latest release of Windows Azure Tools for Visual Studio:

With respect to Azure Development, there is a new set of tools released for this RC build as well. Rather it is a combined build for VS 2008 SP1 as well as VS 2010 RC. You can download the same from Windows Azure Tools for Microsoft Visual Studio 1.1 (February 2010)

Before this, you would want to remove the Windows Azure tools for Visual Studio 2010 Beta 2 as well as Windows Azure tools for Visual Studio 2008. In fact, the un-installation process for me was to remove the Windows Azure Tools for Visual Studio 2010 Beta 2 as well as the one for VS 2008.

Then, I removed VS 2010 Beta 2. This process was fairly simple, with the Visual Studio 2010 Beta 2 icon in Add/Remove programs removing all the installed programs that came as a part of the installation.

Post that, I installed VS 2010 RC and then installed the Windows Azure Tools for Microsoft Visual Studio 1.1 which is a single installer that puts the development templates for both the RC edition as well as VS 2008.

Sachin Prakash Sancheti’s ws2007HttpRelayBinding and Windows Azure Client post of 2/15/2010 to the Infosys blog begins:

Recently we have faced this issue of not being able to consume a WCF service which was exposed using ws2007HttpRelayBinding from Windows Azure Web Role as a client. We had registered a service on AppFabric Service Bus with ws2007HttpRelayBinding; below is the service registration code for the service.

Sachin continues with a detailed explanation of how he solved the problem.

<Return to section navigation list>

Live Windows Azure Apps, Tools and Test Harnesses

• The rumor on Twitter is that #Cerebrata will launch a new Azure Diagnostics Manager. Click here for a brief demonstration video.

• Tathagata Chakraborty explains why he abandoned ASP.NET MVC for classic ASP.NET in his Windows Azure, ASP.NET, and ASP.NET MVC post of 2/16/2010:

I had to slow down the frequency of posts cause I was busy working on a Windows Azure project (which is really not much more than a prototype right now). I have become quite a fan of Azure due to the ease with which one can get up and started with the platform. This, in spite of the fact that there is hardly any readable (and up-to-date) guide available for Azure.

One of the most difficult things for me was deciding whether to use ASP.NET or ASP.NET MVC to build the web role for the project. I don’t know either of these languages/frameworks. However, I know that ASP.NET MVC should be a better fit for those coming from other MVC-like platforms, for example, Ruby on Rails and Django. A few months back I had done a smallish project using Django and was therefore instantly attracted towards ASP.NET MVC.

Unfortunately, after some quick dives into ASP.NET MVC I realized that, without some background in ASP.NET (and C#), learning ASP.NET MVC could present some steep curves. So after creating a few sample projects in ASP.NET MVC, I finally decided to ditch all that and use ASP.NET instead for my first real Azure project. …

• Mohamed Mosallem’s Azure Reader Architecture post of 2/16/2010 describes his live Azure Reader project that I found to work with the OakLeaf Blog’s Atom 1.0 feed:

As I promised you before I will explain the Azure Reader application, a sample RSS reader based on the azure platform. In this post I will walk you through the application architecture and code.

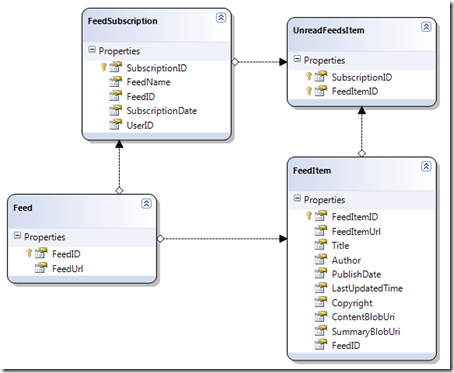

Let’s start by describing the data model which is fairly simple, we have a table for storing the Feeds (ID and URL), and we have a table FeedsSubscriptions for storing the users’ subscriptions to the feeds, feeds items are stored in the FeedsItems table where we store the different properties of the feed item like title, URL, author, publish time, etc., the actual content and summary of the feed item is not stored in the database directly instead the content is stored in windows azure blob storage and is referenced in the table using the ContentBlobUri and SummaryBlobUri fields.

The application has three roles, 1 Web role and two worker roles. …

Mohamed continues with a description of the Web and worker roles.
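The data model Mohamed describes can be sketched as plain Python dataclasses. Table and blob-URI field names follow his description; the column types, the `user_id` column in `FeedsSubscription`, and the `blob_uri` and `items_for_user` helpers are hypothetical. The design keeps table rows small: they hold only metadata and URIs, while the bulky content and summary live in blob storage.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class Feed:
    id: int
    url: str


@dataclass
class FeedsSubscription:
    user_id: str   # hypothetical: the post doesn't list this table's columns
    feed_id: int


@dataclass
class FeedsItem:
    feed_id: int
    title: str
    url: str
    author: str
    publish_time: datetime
    content_blob_uri: str  # ContentBlobUri: full text stored as a blob
    summary_blob_uri: str  # SummaryBlobUri: summary stored as a blob


def blob_uri(account: str, container: str, blob_name: str) -> str:
    """Public-endpoint URI form for an Azure blob."""
    return f"https://{account}.blob.core.windows.net/{container}/{blob_name}"


def items_for_user(user_id: str, subs: List[FeedsSubscription],
                   items: List[FeedsItem]) -> List[FeedsItem]:
    """Newest-first items from the feeds a user subscribes to."""
    feed_ids = {s.feed_id for s in subs if s.user_id == user_id}
    mine = [i for i in items if i.feed_id in feed_ids]
    return sorted(mine, key=lambda i: i.publish_time, reverse=True)
```

Rendering an item then means fetching its content blob by URI, so a worker role could refresh feeds without touching the rows a web role is reading.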

The Innov8Showcase team’s Monitoring Applications in Windows Azure post of 2/14/2010 reports:

Hedgehog’s Cloud Application Monitor was created to fill a gap in creating applications for the Azure Cloud.

There is no easy way to monitor applications running in the cloud, and no quickly accessible repository of historical data.

The Cloud Application Monitor downloads diagnostic information from the cloud and stores it in a local SQL server database.

Hedgehog has posted this application on Codeplex as a free download.

I commented earlier today that the Innov8Showcase post omitted a link to the CodePlex download, which I added above.
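The pattern the Innov8Showcase post describes, pulling diagnostic records out of the cloud and keeping a local history, can be sketched with Python's built-in `sqlite3` module standing in for the local SQL Server database. The record fields (`ts`, `role_instance`, `level`, `message`) and function names are assumptions for illustration, not Hedgehog's schema, and the download step itself is left out.

```python
import sqlite3
from typing import Iterable, List, Tuple

Record = Tuple[str, str, str, str]  # (ts ISO-8601, role_instance, level, message)


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the local diagnostics history table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS diagnostics (
               ts TEXT NOT NULL,
               role_instance TEXT NOT NULL,
               level TEXT NOT NULL,
               message TEXT NOT NULL,
               PRIMARY KEY (ts, role_instance, message))"""
    )
    return conn


def save_batch(conn: sqlite3.Connection, records: Iterable[Record]) -> None:
    """Append a downloaded batch; re-downloaded duplicates are ignored."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR IGNORE INTO diagnostics VALUES (?, ?, ?, ?)", records
        )


def errors_since(conn: sqlite3.Connection, since_iso: str) -> List[Record]:
    """Historical query: error-level records at or after a timestamp.

    Same-format ISO-8601 strings sort chronologically, so TEXT comparison works.
    """
    return conn.execute(
        "SELECT ts, role_instance, level, message FROM diagnostics "
        "WHERE level = 'Error' AND ts >= ? ORDER BY ts",
        (since_iso,),
    ).fetchall()
```

The composite primary key plus `INSERT OR IGNORE` lets a poller re-download overlapping windows without duplicating history.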

Enterra, Inc. describes creating the Enterra WebCam Viewer with Windows Azure, SQL Azure and Silverlight in its detailed How We Developed [a] Web Application on MS Azure post of 2/15/2010:

Today the Internet has a significant number of live web cameras available for everyone. Web cameras allow users to view streaming video in real time or get specific frames upon request. However, the cameras can’t store video for some time period. This is obvious, as computing resources (disk space on the server and processor time allotted for serving requests) are needed for storing video or photo collections. We decided to implement this functionality in our project Enterra WebCam Viewer.

Enterra WebCam Viewer is a web application with the following features:

- When user opens the application he/she should see a list of public cameras served by the application (that is cameras for which video is archived);

- When user goes to a certain camera, he/she should have an option to view video (or separate frames) for a specified time period.

The features described above are features for a user with “Guest” rights. Cameras can’t appear in the application by themselves. Someone should add them. Let users add them. For these purposes we have features for users registered in the system:

- New user registration, simple user account management (password change);

- User cameras management: camera creation, camera deletion, camera edit. …
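The core of Enterra's feature list, archiving frames per camera and retrieving them for a specified time period, reduces to a time-ordered index with range queries. This Python sketch is a hypothetical in-memory version (`CameraArchive`, `frames_between`, `public_cameras` and the frame-reference strings are illustrative names); Enterra's actual implementation uses Windows Azure and SQL Azure, whose storage details aren't reproduced here.

```python
import bisect
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class CameraArchive:
    camera_id: str
    is_public: bool = True
    _times: List[float] = field(default_factory=list)   # sorted capture times
    _frames: List[str] = field(default_factory=list)    # frame refs, same order

    def add_frame(self, timestamp: float, frame_ref: str) -> None:
        """Insert a frame while keeping both lists sorted by capture time."""
        i = bisect.bisect_right(self._times, timestamp)
        self._times.insert(i, timestamp)
        self._frames.insert(i, frame_ref)

    def frames_between(self, start: float, end: float) -> List[Tuple[float, str]]:
        """Frames captured in the closed interval [start, end]."""
        lo = bisect.bisect_left(self._times, start)
        hi = bisect.bisect_right(self._times, end)
        return list(zip(self._times[lo:hi], self._frames[lo:hi]))


def public_cameras(archives: List[CameraArchive]) -> List[str]:
    """What a "Guest" user sees: the IDs of public, archived cameras."""
    return [a.camera_id for a in archives if a.is_public]
```

Binary search keeps each range lookup logarithmic in the number of archived frames, which matters once a camera has been recording for weeks.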

Sanjay Bhatia explains why Izenda ported its reporting platform to Windows Azure in this 00:02:34 YouTube AzurePartner video segment of 2/15/2010:

Izenda builds the fastest reporting platform for the web. Izenda is excited about Windows Azure’s scalability. Windows Azure was the clear choice for Izenda due to its pricing strategy. Windows Azure allows instant deployment.

Sanjay is founder and CEO of Izenda, Atlanta, Georgia.

Scott MacFarlane discusses migration to Windows Azure for his sales tax automation service in this 00:02:28 YouTube AzurePartner video segment of 2/15/2010:

Avalara is a sales tax automation company. Cloud computing allows Avalara to be more scalable, reliable, and cost effective. Windows Azure is a natural progression for Avalara because they are a Microsoft shop.

Scott is CEO of Avalara, Bainbridge Island, WA. Click here to run a Google Video Search that returns 38 more of these entertaining video snippets.

<Return to section navigation list>

Windows Azure Infrastructure

• David Linthicum reports “We are shifting their IT resources, we are modernizing them, and even building new ones -- clearly, the data center is here to stay” in his The death of the data center has been greatly exaggerated – again post of 2/16/2010 to the InfoWorld Cloud Computing blog:

I was 19 years old and sitting in my 200-level computer science class when I first heard about the "death of the data center." Or, at that time, how minicomputers and the emerging presence of this thing called a microcomputer (what we now call a PC) were going to eliminate the need for centers that contain computing resources, my professor stated.

More recently, with the emerging interest in cloud computing, there has been a renewed call for the demise of data centers as we shift to a more public cloud-oriented or utility computing model.

I did not buy it then, and I'm not buying it now. Indeed, while data centers are changing significantly around the use of cloud computing, I suspect that we'll have many new data centers in the near term, before data centers actually normalize. …

• Lori MacVittie claims in her WILS: Layer 7 (Protocol) versus Layer 7 (Application) post to the F5 blog of 2/16/2010:

The problem with HTTP (okay, one of the problems with HTTP, happy now?) is that it resides at the top of the “stack” regardless of whether we identify the “stack” as based upon the TCP/IP stack or the OSI model stack. In either case, HTTP sits at the top like a king upon his throne. There’s nothing “higher” than the application in today’s networking models.

But like every good king, HTTP has a crown: the actual application data exchanged in the body of an HTTP transaction. In the good old days, when intermediaries (proxies) were only able to see the protocol portion of HTTP, i.e. its headers, this wasn’t a problem. When someone said “layer 7 switching” we understood it to mean the capability to make load balancing or routing decisions based on the HTTP headers. But then load balancers got even smarter and became “application aware” and suddenly “layer 7 switching” came to represent both load balancing/routing decisions based on HTTP headers or on its payload, i.e. the application data, a.k.a. “content based routing (CBR)” on the software side of the data center. …
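Lori's distinction can be made concrete with two toy routing functions: one that, like the original "layer 7 switching", decides using only HTTP headers, and one that also inspects the payload, i.e. content-based routing. This is a hypothetical Python sketch; the pool names and the JSON `priority` field are invented for illustration and don't reflect any F5 product configuration.

```python
import json
from typing import Dict, Mapping


def _header(headers: Mapping[str, str], name: str) -> str:
    """Case-insensitive header lookup (HTTP field names are case-insensitive)."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return ""


def route_by_headers(headers: Mapping[str, str], host_pools: Dict[str, str],
                     default: str) -> str:
    """Protocol-level layer 7: pick a pool from the Host header only."""
    # Strip any ":port" suffix; this toy version ignores IPv6 literals.
    host = _header(headers, "Host").split(":")[0].lower()
    return host_pools.get(host, default)


def route_by_content(headers: Mapping[str, str], body: bytes, default: str,
                     priority_pool: str) -> str:
    """Application-level layer 7: look inside a JSON payload."""
    content_type = _header(headers, "Content-Type").split(";")[0].strip().lower()
    if content_type != "application/json":
        return default
    try:
        payload = json.loads(body.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return default  # unparseable body: fall back rather than fail
    if isinstance(payload, dict) and payload.get("priority") == "high":
        return priority_pool
    return default
```

The second function has to buffer and parse the whole body before deciding, which is exactly the extra work that separates "application aware" intermediaries from header-only switching.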

• Eric Nelson reports that his UK MSDN Flash Podcast made it onto Zune.net in this 2/16/2010 post to his IUpdatable blog:

Great to see the MSDN Flash Podcast I host has made it onto Zune.net – even if I can’t access it myself as I’m in the UK :-(

However a US colleague pinged me about it and sent me the following. Looks rather grand :-)

The latest episode was on Azure – and I’m thinking about spinning off a second podcast show just on Azure.

• Ian Wilkes delivers Cloud platform choices: a developer's-eye view in this 2/15/2010 post to Ars Technica, which begins:

Cloud computing is one of the most hyped technology concepts in recent memory, and, like many buzzwords, the term "cloud" is overloaded and overused. A while back Ars ran an article attempting to clear some of the confusion by reviewing the cloud's hardware underpinnings and giving it a proper definition, and in this article I'll flesh out that picture on the software side by offering a brief tour of the cloud platform options available to development teams today. I'll also discuss these options' key strengths and weaknesses, and I'll conclude with some thoughts about the kinds of advances we can expect in the near term. In all, though, it's important to keep in mind that what's presented here is just a snapshot. The cloud is evolving very rapidly—critical features that seem to be missing today may be standard a year from now. …

The article covers Amazon Web Services, Rackspace, and others, but Ian classifies Windows Azure as Infrastructure as a Service (IaaS) rather than Platform as a Service (PaaS). This makes me wonder about the value of the remainder of his post.

Lori MacVittie prefaces her The Devil is in the Details post of 2/15/2010 with “Or more apropos, it’s in the complex and intimate relationship between applications and their infrastructure:”

What’s the difference between a highly virtualized corporate data center and a cloud computing environment? There are probably many, but the most important distinction – and the one that earns the latter a “cloud computing” tag – is certainly that the former lacks a comprehensive orchestration system and was likely not architected using a rapid, infrastructure inclusive, scalability strategy.

Mitch Garnaat, “The Elastician”, recently managed to sum up what should be every modern data center’s motto in a single, six-word tweet: “Scale is not just about servers.” In fact, I am hereby dubbing this “Garnaat’s Theorem”, as this fundamental data center truth has been established and proven more times than some of us would care to remember.

Mitch could not be more right. Scale is not just about servers, and for corporate data centers and cloud computing providers looking to realize the benefits of rapid elasticity and on-demand provisioning, scale simply must be one of the foundational premises upon which a dynamic data center is built. And that includes the infrastructure. …

Lori concludes:

…What should be clear, however, is that Garnaat’s Theorem holds true: scale is not just about servers. There are many, many more pieces of the architectural puzzle that go into scalability and it behooves data center architects to consider the impact of scaling approaches – up, out, virtual, physical – before jumping into an implementation.

This isn’t the first time I’ve touched upon this subject, but it’s a concept that needs to be reiterated – especially with so many pundits and analysts looking for the next big virtualization wave to crash onto the infrastructure beachhead. I’m here to tell you, though, that the devil is in the details. The architectural details.

William Vambenepe reminisces about the WS-* standards fiasco and asks Can Cloud standards be saved? from the complexity introduced by vendor control of software standards and disdain for input to the standards process from independent experts in this 2/15/2010 post:

One of the most frustrating aspects of how Web services standards shot themselves in the foot via unchecked complexity is that plenty of people were pointing out the problem as it happened. Mark Baker (to whom I noticed Don Box also paid tribute recently) is the poster child. I remember Tom Jordahl tirelessly arguing for keeping it simple in the WSDL working group. Amberpoint’s Fred Carter did it in WSDM (in the post announcing the recent Amberpoint acquisition, I mentioned that “their engineers brought to the [WSDM] group a unique level of experience and practical-mindedness” but I could have added “… which we, the large companies, mostly ignored.”)

The commonality between all these voices is that they didn’t come from the large companies. Instead they came from the “specialists” (independent contractors and representatives from small, specialized companies). Many of the WS-* debates were fought along alliance lines. Depending on the season it could be “IBM vs. Microsoft”, “IBM+Microsoft vs. Oracle”, “IBM+HP vs. Microsoft+Intel”, etc… They’d battle over one another’s proposal but tacitly agreed to brush off proposals from the smaller players. At least if they contained anything radically different from the content of the submission by the large companies. And simplicity is radical. …

I was present at the beginning of the WS-* standardization effort and hope that the cloud computing service industry can avoid repeating that fiasco.

Bernard Golden asserts “During the next two to five years, you'll see enormous conflict about the technical pros and cons of cloud computing. Three of cloud's key characteristics will create three IT revolutions, each with supporters and detractors” in his Cloud Computing Will Cause Three IT Revolutions article of 2/9/2010 for CIO.com:

Every revolution results in winners and losers — after the dust settles. During the revolution, chaos occurs as people attempt to discern if this is the real thing or just a minor rebellion. All parties put forward their positions, attempting to convince onlookers that theirs is the path forward. Meanwhile, established practices and institutions are disrupted and even overturned — perhaps temporarily or maybe permanently. Eventually, the results shake out and it becomes clear which viewpoint prevails and becomes the new established practice -- and in its turn becomes the incumbent, ripe for disruption. …

Bernie goes on to detail the Change in IT Operations and what groups will be winners and losers.

<Return to section navigation list>

Cloud Security and Governance

• Chris Hoff’s (aka @Beaker) Comments on the PwC/TSB Debate: The cloud/thin computing will fundamentally change the nature of cyber security… post begins:

I saw a very interesting post on LinkedIn with the title PwC/TSB Debate: The cloud/thin computing will fundamentally change the nature of cyber security…

PricewaterhouseCoopers are working with the Technology Strategy Board (part of BIS) on a high profile research project which aims to identify future technology and cyber security trends. These statements are forward looking and are intended to purely start a discussion around emerging/possible future trends. This is a great chance to be involved in an agenda setting piece of research. The findings will be released in the Spring at Infosec. We invite you to offer your thoughts…

The cloud/thin computing will fundamentally change the nature of cyber security…

The nature of cyber security threats will fundamentally change as the trend towards thin computing grows. Security updates can be managed instantly by the solution provider so every user has the latest security solution, the data leakage threat is reduced as data is stored centrally, systems can be scanned more efficiently and if Botnets capture end-point computers, the processing power captured is minimal. Furthermore, access to critical data can be centrally managed and as more email is centralised, malware can be identified and removed more easily. The key challenge will become identity management and ensuring users can only access their relevant files. The threat moves from the end-point to the centre.

What are your thoughts?

My response is simple.

Cloud Computing or “Thin Computing” as described above doesn’t change the “nature” of (gag) “cyber security” it simply changes its efficiency, investment focus, capital model and modality.

Chris backs up his response with a cogent argument.

• Gert Hansen of Astaro asks “The virtues of virtualisation and cloud computing will figure in most enterprise IT infrastructure discussions during 2010. But as the industry moves towards a new IT infrastructure play, what are the implications on IT security?” in his The impact on security of virtualisation and cloud computing post of 2/16/2010 to Out-Law.com:

Virtualisation has already proven its worth in delivering cost savings through server consolidation and better use of resources. Greater use of the technology across server infrastructures, in other areas of the IT stack, and at the desktop is widely anticipated.

The uptake of Software-as-a-Service applications such as salesforce.com, and the success of IT service outsourcing demonstrate how centralised remote computing approaches can also provide more efficient ways to deliver technology resources to users, helping cloud computing to gain greater buy-in from corporate decision-makers.

Out-Law.com claims to offer IT and e-commerce legal help from international law firm Pinsent Masons.

• Jonathan Feldman asserts “A well-crafted service-level agreement and exit strategy are essential as you engage with cloud computing providers” in his How To Protect Your Company In The Cloud post of 2/12/2010 to the latest InformationWeek online issue:

Business executives are reading about cloud computing and asking when their companies are going to get on board. CIOs must do three things to respond to those requests and to help business units take advantage of cloud computing without putting them at undue risk.

First, don't be dismissive of the cloud. Business units will simply bypass IT if it doesn't provide guidance. Second, advise business leaders on cloud risks and risk-mitigation strategies. Third, when the decision to use a cloud service is made, establish realistic and balanced service-level agreements. …

Jon continues with eight questions about the “three things.”

Reuven Cohen explains Why Cloud Computing is More Secure in this 2/15/2010 post:

In the midst of the 1990s economic bubble, Alan Greenspan once famously referred to all the excitement in the market as irrational exuberance. Similarly, in today's cloud computing market a lot of the discussions seem to be driven by a new set of irrational expectations. The expectation by some that cloud computing will solve all man's problems and by others the expectation that cloud computing is inherently flawed. Flawed by an endless list of problems, most notably that of security. Like most things in life, the reality is probably somewhere in the middle. So I thought I'd take a closer look at the unrestrained pessimism and sometimes irrationality found in the cloud security discussions.

To understand security, you must first understand the psychology of how [cloud] security itself is marketed and bought. It's marketing based on fear, uncertainty and most certainly doubt (FUD). Fear that your data will be unwittingly exposed, uncertainty of who you can trust and doubt that there are any truly secure remote environments. At first glance these are all logical, rational concerns; hosting your data in someone else's environment means that you are giving away partial control and oversight to some third party. This is a fact. So in the most basic sense if you want to micro-manage your data, you'll never have a more secure environment than your own data center, complete with biometric entry, gun-toting guards and trustworthy employees. But I think we all know that "your own" data center also suffers from its own issues. Is that guard with the gun actually trustworthy? (Among others)

Recently it occurred to me that the problem with cloud security is a cog[n]itative one. In a typical enterprise development environment security is mostly an afterthought, if a thought at all. The general consensus is it's behind our firewall, or our security team will look at it later, or it's just not my job. For all practical purposes most programmers just don't think about security. What's interesting about cloud computing is that all the FUD that's been spread has had an interesting consequence: programmers are actually now thinking about security before they start to develop & deploy their cloud applications, and cloud providers are going out of their way to provide increased security (Amazon's VPC for example). This is a major shift; pro-active security planning is something that as far as I can tell has never really happened before. Security is typically viewed as a sunk cost (sunk costs are retrospective past costs which have already been incurred and cannot be recovered). But the new reality is that cloud computing is in a lot of ways more secure simply because people are actually spending time looking at the potential problems beforehand. Some call it foresight, I call it completely and totally rational.

<Return to section navigation list>

Cloud Computing Events

• Shea Strickland of The Gold Coast .NET SIG announces in its Meeting, Thursday! post of 2/15/2010 that:

Steven Nagy is coming down from Brisbane to help us through: Windows Azure Web and Worker Roles, Windows Azure Storage, SQL Azure, .Net Service Bus, Developer Fabric and local debugging, deployment.

The meeting takes place on 2/18/2010 at Griffith University’s G03 Theatre 2, a new venue.

• Michele Bustamante will present a one-day class, Single Sign-on Claim based access, on 3/22/2010 at the Felix Konferansesenter, Oslo, Norway. The fee is 1,800 NKr, exclusive of VAT. Here’s the outline:

- Federated Identity Overview

- Architectural Scenarios

- Windows Identity Foundation (WIF) Overview

- WIF and Claims–Based Access Control

- Security Token Services

- Windows CardSpace

- Federation with the Access Control Service

The above is subject to my limited skills for translating Norwegian, which are even less than those for Swedish and Danish, despite having spent many pleasant weeks in those parts.

• Waggener*Edstrom invites the press to attend the event in its Microsoft to Host More Than 300 Government, Education Leaders at 8th Annual U.S. Public Sector CIO Summit press release of 2/16/2010:

The Microsoft U.S. Public Sector CIO Summit on Feb. 24–25 [at the Microsoft Conference Center, 16070 NE 36th Way, Bldg. 33, Redmond, WA 98052] will bring together more than 300 government and education leaders from around the country, as well as Microsoft Corp. executives, product managers and partner organizations. Throughout the event, Microsoft and its partners will discuss and demonstrate the latest technologies and preview emerging government and education solutions. Members of the press will have an opportunity to attend general sessions, ask questions during special one-on-one sessions and converse with customers between sessions.

Four of the summit’s nine sessions relate to Windows Azure:

- Virtualization and the Windows Azure Platform. Bob Muglia, president of the Server and Tools Business at Microsoft

- Cloud Computing Solutions. Ron Markezich, corporate vice president of Microsoft Online

- An Insider Look at the Windows Azure Platform. Yousef Khalidi, distinguished engineer for Windows Azure at Microsoft

- Next-Generation Datacenters. Rick Bakken, director of Business Development & Strategy, Global Foundation Services at Microsoft; and Christian Belady, lead principal infrastructure architect, Global Foundation Services at Microsoft

Microsoft has plenty of chutzpah holding a government meeting 2,324 miles from the Capital Beltway.

Patrick LeBlanc reports Technet and MSDN coming to Baton Rouge on 3/4/2010 in this 2/15/2010 post:

On March 4th, Microsoft will be hosting two half-day events in Baton Rouge, LA. These free live learning sessions will explore the latest technologies that you can apply immediately. Join your local event team to get the inside track on new technologies for IT professionals and take a tour of the latest .NET development tools.

Topics include:

- The Next Wave: Windows Azure

- What’s new in Visual Studio 2010

- Hyper-V: Tools to build the Ultimate Virtual Test Network

- What’s new in .NET Framework 4.0

- Automating Your Windows 7 Deployment with MDT 2010

- SharePoint Development using Visual Studio 2010

Use the following links to register:

- 8:30 am – 12:30 pm (Windows Azure and Windows 7) register

- 1:00 pm – 4:30 pm (VS 2010 and SharePoint Development) register

Cory Fowler will deliver presentations on Windows Azure and SQL Azure at the PrairieDevCon 2010 conference to be held in Regina, Saskatchewan, Canada on 6/2 to 6/3/2010. According to Cory’s Register Early and Save… post of 2/15/2010:

Later this year I’ll be heading to Saskatchewan to present on Windows Azure and SQL Azure at PrairieDevCon. If you live in Canada, or are willing to travel, PrairieDevCon has shaped up to be quite the event, with 50 sessions presented by 25 speakers (including myself) over two days.

I look forward to meeting the presenters and some new developers while I’m at the conference. Make sure you get your tickets early to save yourself some money on two days of learning.

Microsoft’s Brad Smith explains Fourth Amendment issues with cloud computing in a C-SPAN “The Communicators” interview titled “The Future of Cloud Computing,” which aired 2/13/2010 and is available on demand as a 00:30:50 YouTube video:

Brad Smith, Microsoft Corp. Senior V.P. & General Counsel, speaks to "The Communicators" about the future of "Cloud Computing." Program from Saturday, Feb. 13, 2010.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Geva Perry asks Rackspace: The Avis of Cloud Computing? and compares Rackspace’s and Amazon Web Services’ usage among the 500,000 leading web sites, as tracked by Guy Rosen, in this 2/15/2010 post:

Rackspace is in essence the #2 in the cloud computing business (well, at least when it comes to infrastructure-as-a-service). Guy Rosen, who writes the Jack-of-All-Clouds blog, publishes a monthly State of the Cloud blog post in which he tracks the usage of leading cloud providers among the 500,000 leading web sites. It gives a good sense of how these companies are faring.

It's a pretty tight race between Amazon and Rackspace -- with Rackspace having about 87.5% of the number of sites that Amazon does. But back when I met the two Rackspace execs in Las Vegas last year, their cloud business was at an early stage and they had recently made their first acquisitions in the space (Slicehost and JungleDisk). They hadn't even published their open API for what was then called Mosso (later renamed as Rackspace Cloud).

The Amazon Web Services Blog’s Webinar: Leverage the Cloud for High-Traffic, High-Profile Web Marketing Events post of 2/15/2010 announces:

On February 23, 2010, AWS, RightScale, and LTech will present a new webinar!

Titled Leverage the Cloud for High-Traffic, High-Profile Web Marketing Events, the webinar will show you how to protect your brand and your marketing spend by ensuring that your website or promotional site is performing optimally.

You'll see how a cloud-based deployment will give you the infrastructure needed to handle the massive and often unpredictable traffic brought on by a marketing campaign. You'll learn about AWS, RightScale's management offering, and LTech's new Brandscale service.

Registration is free and you can sign up now.