Windows Azure and Cloud Computing Posts for 9/9/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry (@WayneBerry) continued his series with Securing Your Connection String in Windows Azure: Part 3 of 9/9/2010:

This is the third part in a multi-part blog series about securing your connection string in Windows Azure. In the first blog post (found here) a technique was discussed for creating a public/private key pair, using the Windows Azure Certificate Store to store and decrypt the secure connection string. In the second blog post (found here) I showed how the Windows Azure administrator imported the private key to Windows Azure. In this blog post I will show how the SQL Azure administrator uses the public key to encrypt the connection string.

In this technique, there is a SQL Azure administrator role; he has access to the public key, the SQL Azure portal, and the SQL Azure administrator login and password. His job is to:

- Encrypt the connection string with the public key.

- Secure the SQL Azure Portal administrator login/password

- Restrict the SQL Azure login (which is not the administrator login) in the connection string.

Because the SQL Azure administrator has the public key, he can encrypt the connection string; he also knows the password to the production database. He has more access to SQL Azure than any other user in the example scenario.

Restricting the User

Before the connection string is encoded, the SQL Azure administrator needs to restrict the SQL Azure account in the connection string to reduce the attack surface and make the production database more secure. Don’t use the SQL Azure database administrative user account in your connection string. Instead, as part of security best practices, create another user that has just the permissions that the web role needs. If the web site doesn’t need to create tables, don’t allow the web user to create a table. If the web site is just reading data, make the user read-only. Restricting the web user will reduce the damage to your database if the connection string is compromised. Find out how to create a user in SQL Azure by reading this blog post. Restricting the SQL Azure user also restricts the Windows Azure administrator (this role is discussed in this blog post).
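As a minimal sketch of that restriction, the following Python helper generates the T-SQL a SQL Azure administrator might run to create a least-privilege web user. The login name, password, and database in the usage lines are hypothetical, and using the fixed db_datareader/db_datawriter roles is an assumption about what the web role needs; the linked blog post remains the reference procedure.

```python
from typing import List, Tuple


def quote_ident(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any closing brackets."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Quote a T-SQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def restricted_user_script(login: str, password: str, database: str,
                           read_only: bool = True) -> List[Tuple[str, str]]:
    """Return (database, statement) pairs that create a least-privilege web user.

    Each statement is meant to run while connected to the named database,
    because SQL Azure did not support switching databases with USE.
    """
    # A read-only web role only needs db_datareader; add db_datawriter if it must
    # insert, update, or delete rows. Neither role grants CREATE TABLE rights.
    roles = ["db_datareader"] if read_only else ["db_datareader", "db_datawriter"]
    steps = [
        # Server-level login, created in the master database.
        ("master", f"CREATE LOGIN {quote_ident(login)} "
                   f"WITH PASSWORD = {quote_literal(password)};"),
        # Database user in the application database, mapped to that login.
        (database, f"CREATE USER {quote_ident(login)} FROM LOGIN {quote_ident(login)};"),
    ]
    for role in roles:
        steps.append((database,
                      f"EXEC sp_addrolemember {quote_literal(role)}, {quote_literal(login)};"))
    return steps


# Hypothetical example values; substitute your own login, password, and database.
for db, sql in restricted_user_script("webuser", "Str0ng!Passw0rd", "aspnetdb"):
    print(f"-- run in {db}\n{sql}")
```

Keeping the web user out of db_owner and db_ddladmin is what limits the damage if the decrypted connection string ever leaks.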

Importing the Public Key

The first thing the SQL Azure administrator has to do is take the public key obtained from the Windows Azure administrator and import it into the certificate store on their local machine. The aspnet_regiis.exe tool, which encrypts the web.config file, uses the local certificate store.

- Click Start, type mmc in the Search programs and files box, and then press ENTER.

- On the File menu, click Add/Remove Snap-in.

- Under Available snap-ins, double-click Certificates.

- Select Computer account, and then click Next.

- Click Local computer, and then click Finish.

- Right-click the Personal store, point to All Tasks, and click Import. Browse to the .cer file (the public key obtained from the Windows Azure administrator) and import the certificate.

- After the certificate is imported, right-click it and choose Open to display the Certificate dialog. On the Details tab, scroll to the bottom and copy the Thumbprint property; you will need it later.
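MMC displays the thumbprint as space-separated hex pairs, and copying it from the dialog is commonly reported to add an invisible Unicode left-to-right mark at the start. A minimal Python sketch that cleans a copied value, plus an optional cross-check that computes the thumbprint as the SHA-1 hash of the DER-encoded certificate; the function names and the lowercase output (chosen to match the sample web.config in this post) are assumptions:

```python
import hashlib
import string

HEX_DIGITS = set(string.hexdigits)  # ASCII 0-9, a-f, A-F only


def normalize_thumbprint(copied: str) -> str:
    """Keep only hex digits from a thumbprint copied out of MMC, lowercased."""
    # Drops spaces and invisible characters such as U+200E (left-to-right mark).
    cleaned = "".join(ch for ch in copied if ch in HEX_DIGITS).lower()
    if len(cleaned) != 40:  # a SHA-1 thumbprint is 20 bytes = 40 hex digits
        raise ValueError(f"expected 40 hex digits, got {len(cleaned)}")
    return cleaned


def thumbprint_from_der(der_bytes: bytes) -> str:
    """Compute the thumbprint Windows shows: SHA-1 over the DER-encoded certificate."""
    return hashlib.sha1(der_bytes).hexdigest()


# Hypothetical usage with a DER-encoded .cer file:
# with open("azure-public.cer", "rb") as f:
#     assert thumbprint_from_der(f.read()) == normalize_thumbprint(copied_value)
```

If the .cer file was exported in Base-64 format rather than DER, convert it first (for example with the standard library's ssl.PEM_cert_to_DER_cert) before hashing.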

Downloading and Compiling the Provider

The developers could do this ahead of time and email the two installer files (setup.exe and the installer.msi) to the SQL Azure administrator; if they haven’t, the SQL Azure administrator will need to download and compile the provider. You will need Visual Studio 2005 or Visual Studio 2008 on your machine. Follow these steps:

- From the MSDN Code Gallery download the .zip with the source code.

- Save everything in the .zip file to your local machine.

- Find the PKCS12ProtectedConfigurationProvider.sln file and open it as a solution with Visual Studio.

- From the Build menu, choose Build Solution.

- In the Installer/bin/release directory there should be a setup.exe.

- Execute this setup.exe and install the provider.

The installer puts the Pkcs12CertProtectedConfiguratoinProvider.dll assembly into the Global Assembly Cache so that aspnet_regiis.exe can find it when you encrypt the web.config file.

Encrypting Web.Config

Now that you have the provider assembly in the Global Assembly Cache and the public certificate installed on your machine, you are ready to encrypt the connectionStrings section of the web.config file. Here is how:

- Get the web.config file from the developers

- If you are using source control, check out the web.config file (you will be modifying it).

- Add a connectionString similar to the one below in the Web.config file. This connection string should be similar to the one that comes from the SQL Azure Portal. However, it should contain the restricted user name and password.

<connectionStrings>
  <add name="SQLAzureConn" connectionString="Initial Catalog=aspnetdb;data source=.;uid=user;pwd=secretpassword" providerName="System.Data.SqlClient"/>
</connectionStrings>

- Add and configure the custom protected configuration provider. To do this, add the following <configProtectedData> section to the Web.config file in the web role. Note that the thumbprint should be set to the thumbprint value from the Certificate dialog in the Microsoft Management Console, with all the spaces removed.

<configProtectedData>
  <providers>
    <add name="CustomProvider" thumbprint="4badf1eea9666d95c1c046fde32008c5e3bf20d9" type="Pkcs12ProtectedConfigurationProvider.Pkcs12ProtectedConfigurationProvider, PKCS12ProtectedConfigurationProvider, Version=1.0.0.0, Culture=neutral, PublicKeyToken=34da007ac91f901d"/>
  </providers>
</configProtectedData>

- Run the following command from a Visual Studio command prompt to encrypt the connectionStrings section using the custom provider. Set the current directory in the Visual Studio command prompt to the folder containing the Web.config file.

aspnet_regiis -pef "connectionStrings" "." -prov "CustomProvider"

If the encryption is successful, you will see the following output:

Here is what is happening:

- aspnet_regiis.exe finds the web.config in the current directory and loads it.

- Using the -prov switch, it finds the named provider in the configProtectedData section and determines the fully qualified name of the assembly that contains the provider.

- aspnet_regiis.exe loads the assembly from the Global Assembly Cache and calls the provider's Initialize method, which checks that the provider entry in the web.config file has a thumbprint attribute.

- aspnet_regiis.exe then calls the Encrypt method of the Pkcs12CertProtectedConfiguratoinProvider.dll assembly, which loads the public certificate from the certificate store, using the thumbprint as the lookup key. Because of the -pef switch on the command line, it loads the connectionStrings section of the web.config file and encrypts it.

- Once the connection string section is encrypted, it is written back to the web.config like so:

<connectionStrings configProtectionProvider="CustomProvider">
  <EncryptedData Type="http://www.w3.org/2001/04/xmlenc#Element" xmlns="http://www.w3.org/2001/04/xmlenc#">
    <EncryptionMethod Algorithm="http://www.w3.org/2001/04/xmlenc#aes192-cbc" />
    <KeyInfo xmlns="http://www.w3.org/2000/09/xmldsig#">
      <EncryptedKey xmlns="http://www.w3.org/2001/04/xmlenc#">
        <EncryptionMethod Algorithm="http://www.w3.org/2001/04/xmlenc#rsa-1_5" />
        <KeyInfo xmlns="http://www.w3.org/2000/09/xmldsig#">
          <KeyName>rsaKey</KeyName>
        </KeyInfo>
        <CipherData>
          <CipherValue>aA4kyC0pNY8VFnPtLcC...=</CipherValue>
        </CipherData>
      </EncryptedKey>
    </KeyInfo>
    <CipherData>
      <CipherValue>6Fg9VWR5/...</CipherValue>
    </CipherData>
  </EncryptedData>
</connectionStrings>

- Check the web.config file back into source control, or give it back to the developers.
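Before the file goes back into source control, it can be worth confirming that the section really was encrypted. The encrypted output shown above follows the W3C XML Encryption layout: the section data is encrypted with AES-192-CBC, and that session key is in turn wrapped with the certificate's RSA public key (rsa-1_5). A minimal Python sketch using only the standard library's ElementTree, assuming the section sits directly under the <configuration> root; the function name inspect_protected_section is hypothetical, and it only inspects the XML, it does not decrypt anything:

```python
import xml.etree.ElementTree as ET

XENC = "{http://www.w3.org/2001/04/xmlenc#}"
DSIG = "{http://www.w3.org/2000/09/xmldsig#}"


def inspect_protected_section(config_xml: str, section: str = "connectionStrings") -> dict:
    """Report how a web.config section is protected; raise if it is still plaintext."""
    root = ET.fromstring(config_xml)
    node = root.find(section)
    if node is None:
        raise ValueError(f"<{section}> not found")
    provider = node.get("configProtectionProvider")
    encrypted = node.find(f"{XENC}EncryptedData")
    # Plaintext <add> children or a missing EncryptedData element mean -pef did not run.
    if provider is None or encrypted is None or node.findall("add"):
        raise ValueError(f"<{section}> is not encrypted")
    # Mirror the -prov lookup: find the named provider under configProtectedData.
    entry = root.find(f"configProtectedData/providers/add[@name='{provider}']")
    data_method = encrypted.find(f"{XENC}EncryptionMethod")
    key_method = encrypted.find(f"{DSIG}KeyInfo/{XENC}EncryptedKey/{XENC}EncryptionMethod")
    return {
        "provider": provider,
        "thumbprint": None if entry is None else entry.get("thumbprint"),
        "data_algorithm": None if data_method is None else data_method.get("Algorithm"),
        "key_algorithm": None if key_method is None else key_method.get("Algorithm"),
    }
```

Running it against the checked-out web.config (for example, inspect_protected_section(open("web.config").read())) should report CustomProvider, the thumbprint you configured, and the two algorithm URIs.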

Summary

In the next blog post I will discuss the role of the developer and the code they need to add to the web role project to get the encrypted connection string.

Nick Boyer interviews David Robinson on 9/9/2010 in a 00:05:41 Cloud & SQL Azure Tech Talk with David Robinson (NZ 2010) video:

Nick Bowyer interviews David Robinson about SQL Azure at Tech.Ed New Zealand 2010.

No significant articles today.


AppFabric: Access Control and Service Bus

Clemens Vasters (@clemensv) posted Windows Azure AppFabric Overview session from TechEd Australia 2010 on 9/8/2010:

In case you need a refresher or update about the things my team and I work on at Microsoft, go here for a very recent and very good presentation by my PM colleague Maggie Myslinska from TechEd Australia 2010 about Windows Azure AppFabric, with Service Bus demos and a demo of the new Access Control V2 CTP.

Steve Nagy posted TechEd 2010 Australia on 9/9/2010:

A couple of weeks ago I had the honor of kicking off the cloud track at TechEd 2010 Australia, where I delivered a level 300 “Lap Around the Windows Azure Platform”.

For those looking for my slides or the Service Bus demo I did, you can download them here (as zip files):

As has been my ‘thing’ of late, I took some photos of the audience prior to the start of the talk. I take no responsibility for any rude signs or funny faces.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Sanjeep J. Alur shows you how to Jumpstart an Enterprise to Windows Azure with Migration Assessment Tool (MAT) in this 9/9/2010 post:

Often when we think about embracing the Cloud for Enterprise assets, the most daunting question that comes up relates to the candidate application. From an Enterprise point of view, a lot of thought goes into choosing an off-premises, on-premises, or hybrid model. However, the most important decision of all is which kind of application to position for Windows Azure.

In addition to the hosting models above, one has to continually weigh the value that the entire opportunity brings to the table. The capabilities, and the value, clearly exist in the cloud; the onus is on the Enterprise to realize the benefits that Windows Azure offers. Bear in mind the business and technology challenges that have to be considered. While we all realize the benefits of Windows Azure in totality, one has to go through a detailed assessment and planning phase before taking action.

Some of the questions often asked are:

- Which applications are worth moving, or are ideal candidates to be moved, to the cloud?

- How many architectural and design changes does an application go through before moving to the cloud?

- What kind of effort is involved, and how long might it take to move to the cloud?

- How much money am I going to save?

None of these questions is easy to answer without a structured process or methodology. This is where the Migration Assessment Tool (MAT) comes to the rescue. MAT is a desktop-based tool that offers a structured approach to rapidly assessing the business and technical considerations for migration to Windows Azure. The tool takes a large amount of business and technical data about an application as input and helps Enterprises make strategic decisions about the move.

MAT generates a report from this assessment with clear recommendations on the impact of migration. The indicators come in the form of ‘Low’, ‘Medium’ or ‘High’ impact. Medium- and High-impact items typically require a level of effort that depends on the complexity of the existing solution/architecture.

MAT is available for partners (to download) [from the Assess and plan an application’s migration to Windows Azure course.] MAT can be self-branded & customized by a partner in extending assessment activities as a service offering.

MAT is a great tool to leverage when assessing Enterprise applications for migration to Windows Azure.

The Microsoft IT Showcase team produced an eight-page Microsoft IT Starts Migration of Microsoft.com to Windows Azure Platform white paper in 9/2010:

The Video Showcase site on Microsoft.com hosts thousands of videos and other rich media content that hundreds of thousands of customers worldwide view every day. Learn how Microsoft Information Technology (MSIT) quickly built and deployed the Social eXperience Platform (SXP) on the Windows Azure™ technology platform to enable social media capabilities across Microsoft.com.

Introduction

Microsoft has adopted a “we’re all in” strategy for the cloud. As part of this strategy, MSIT is migrating Microsoft.com to the cloud via Windows Azure. SXP is one of the first technologies that MSIT has built and deployed in the cloud by using Windows Azure. This article focuses on the decisions that the SXP team made when creating the Windows Azure-based service. The article discusses the many advantages that Windows Azure provides and also discusses lessons learned.

The Video Showcase Site on Microsoft.com

The Video Showcase site on Microsoft.com hosts more than 8000 videos that assist Microsoft customers in their search for information about products and services. The marketing agencies that Microsoft works with use the site as a video repository. The typical video is four to five minutes long. Customers view and learn from the video content and can comment on, rate, and share videos with their friends and colleagues on other social media channels and sites. The goal is to match customer intent with Video Showcase content and to create a sense of community and interest in Microsoft products.

The user experience for the site was developed with Microsoft® Silverlight® technology. The Video Showcase site previously used a third-party on-premises solution to manage comments and ratings, to filter out profanity and spam, and to do site moderation. This solution used blogs and blog posts to enable comments. Although the solution worked, it was hard to understand and explain. Maintenance costs were high and there were also issues related to upgrades, scalability, performance, and availability. Also, the business intelligence (BI) and information related to ratings and comments were either difficult to access or not available at all. For these reasons, MSIT developed SXP, which is essentially a platform as a service (PaaS).

To learn more about the Video Showcase site, visit http://www.microsoft.com/showcase/en/US/default.aspx.

The Social eXperience Platform

SXP is a multi-tenant Web service that enables social media capabilities across Microsoft.com. SXP’s first tenant is the Video Showcase site. Customers can use the SXP Windows Azure-based service to manage comments and ratings, and to filter out profanity and spam. SXP provides the ability to comment on or rate anything, including Web pages, news stories, videos, blog posts, and so on. As long as a unique ID can be assigned, users can comment on or rate the item. The SXP team manages comments and filters via the SXP Moderation Tool (built with Silverlight), which is also available via Windows Azure.
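The white paper doesn't describe SXP's storage design, but the "rate or comment on anything that has a unique ID" idea can be sketched as a store keyed by tenant and item ID. A hypothetical in-memory Python sketch; the class names, the (tenant, item_id) key, and the 1-to-5 rating scale are all assumptions for illustration, not SXP's actual implementation:

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ItemFeedback:
    """Comments and ratings collected for one item (video, page, post, ...)."""
    comments: List[str] = field(default_factory=list)
    ratings: List[int] = field(default_factory=list)

    def average_rating(self) -> Optional[float]:
        return sum(self.ratings) / len(self.ratings) if self.ratings else None


class FeedbackStore:
    """Hypothetical multi-tenant store: any item with a unique ID can be rated or commented on."""

    def __init__(self) -> None:
        # Keying on (tenant, item_id) keeps each tenant's items separate.
        self._items = defaultdict(ItemFeedback)

    def comment(self, tenant: str, item_id: str, text: str) -> None:
        self._items[(tenant, item_id)].comments.append(text)

    def rate(self, tenant: str, item_id: str, stars: int) -> None:
        if not 1 <= stars <= 5:  # assumed rating scale
            raise ValueError("rating must be between 1 and 5")
        self._items[(tenant, item_id)].ratings.append(stars)

    def summary(self, tenant: str, item_id: str) -> dict:
        # .get() avoids creating empty entries for items nobody has touched.
        fb = self._items.get((tenant, item_id), ItemFeedback())
        return {"comments": len(fb.comments), "average": fb.average_rating()}
```

Because the store only needs an opaque ID, the same code path serves videos, news stories, or blog posts from any tenant.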

SXP also provides a Really Simple Syndication (RSS) feed aggregation, grooming, and publication service to all Microsoft.com sites. This service enables marketing site managers to easily manage and subscribe to relevant content across both desktop computers and mobile devices.

The SXP service is a back-end service for comments and ratings; it is not the front-end Web server. The service does not host the videos (they are hosted on MSN® Video Web Services) and does not host the HTML that users see on the screen.

SXP Architecture

The SXP solution takes advantage of the best of both on-premises and cloud-based technologies. SXP runs on Windows Azure and the SQL Azure™ Database, but the Video Showcase Web servers are standard Microsoft.com servers running in Microsoft’s internal data center. This combination of technologies enabled the SXP team to quickly create a solution with a high level of interoperability that adheres to the security and governance requirements that are typical of a large enterprise.

Figure 1 shows the SXP architecture.

Figure 1. SXP architecture

…

Results

The service went live in April 2010. After 120 days of operation, the SXP team compared results with the previous third-party solution for the same calendar quarter. Table 1 summarizes the before and after statistics.

Table 1. Comparison of third-party solution to SXP solution over a 120-day period

| Focus area | Before | After |
|---|---|---|
| Availability | 99.1 percent | 99.98 percent |
| IT support costs | $15,000 per month | $1,050 per month (standard customer-facing pricing) |
| Planned downtime | 2 to 4 hours | 0 hours |
| Hardware provisioning time | 2 to 3 weeks for virtual machines; 5 to 6 weeks for physical servers | 30 minutes for server environment provisioning |

Availability. The table shows that migrating from the third-party product to SXP and Windows Azure dramatically increased service availability. Availability is measured at the transaction level. On most days, the service is available 100 percent of the time. In the month of July 2010, the service was available 99.999 percent of the time. In fact, Windows Azure running in the South Central data center has a similar availability profile to the Microsoft.com data center in Eastern Washington. This means that for a fraction of the cost, developers can comfortably deploy a Windows Azure application and get about the same availability worldwide as the Microsoft.com data center. The SXP team expects that the availability gains that Windows Azure makes possible will reduce downtime by more than 48 hours over a one-year period. …
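The downtime claim can be sanity-checked with simple arithmetic. A rough Python sketch, assuming the Table 1 availability figures hold over a full 8,760-hour year (the paper measured availability per transaction over 120 days, so this is an approximation, not the team's own calculation):

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years


def annual_downtime_hours(availability_percent: float) -> float:
    """Hours of unavailability per year implied by an availability percentage."""
    return HOURS_PER_YEAR * (100.0 - availability_percent) / 100.0


before = annual_downtime_hours(99.1)   # about 78.8 hours
after = annual_downtime_hours(99.98)   # about 1.8 hours
print(f"before: {before:.1f} h, after: {after:.1f} h, saved: {before - after:.1f} h")

# Support-cost change from Table 1, as a fraction of the old monthly cost.
cost_reduction = 1 - 1_050 / 15_000    # about 0.93, i.e. roughly 93 percent
```

Under these assumptions the gap is roughly 77 hours a year, which is consistent with the paper's more conservative "more than 48 hours" estimate.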

The team continues with additional details of cost savings and other benefits of substituting Windows Azure for the previous solution.

The Windows Azure Team posted a Real World Windows Azure: Interview with Bob West, Director of the Office of Reapportionment, Florida House of Representatives case study on 9/9/2010:

As part of the Real World Windows Azure series, we talked to Bob West, Director of the Office of Reapportionment for the Florida House of Representatives, about using the Windows Azure platform to help capture accurate U.S. Census statistics. Here's what he had to say:

MSDN: Tell us about the Florida House of Representatives and how you use technology to help serve your constituents.

West: The Florida House of Representatives is part of the Florida Legislature and has 120 members, each representing a political district in the state. We rely on technology to help us communicate with residents and to support several initiatives, one of which is the redistricting process, which, by law, must happen every 10 years after the census is taken.

MSDN: What were the biggest challenges that the Florida House of Representatives faced prior to implementing the Windows Azure platform?

West: We wanted to develop a web-based application to encourage residents to participate in the redistricting process, but after analyzing the infrastructure changes required to support the kind of application that we envisioned, we realized that it was too costly, topping U.S.$300,000 over a four-year period to host the application and data. We also took into consideration that we'd have to build an infrastructure to handle extremely high volumes of traffic during peak periods. Furthermore, we wanted to ensure that any technology solution we implemented could be used for multiple projects that could help us to better serve citizens.

MSDN: Can you describe the solution you built with Windows Azure to address your need for cost-effective scalability?

West: Prior to developing a redistricting application for Windows Azure, we developed a pilot service to evaluate the platform. MyFloridaCensus (http://www.myfloridacensus.gov/), which uses the Microsoft Silverlight 3 browser plug-in and is hosted in Windows Azure, uses Bing Maps and a Microsoft Geocoding web service, along with census data, to highlight areas that may have been missed by the U.S. Census Department. Citizens can use the Microsoft ASP.NET application to report whether or not they've been counted, and, in turn, the House works with the U.S. Census Bureau to count those citizens who were missed. We use a Windows Communication Foundation communication protocol to access two 10-gigabyte Microsoft SQL Azure databases to store census geography and data.

Green lines are land parcels, blue lines represent U.S. Census paths, and red areas indicate where some residents may have been missed by the U.S. Census.

MSDN: What makes your solution unique?

West: The technology stack, with its scalability and visually appetizing design, will create a direct line of dialogue between Floridians and Florida politicians on what is traditionally one of the most politically charged, but unfortunately ambiguous, issues for the average person. We've proven the Windows Azure platform as a scalable solution, and therefore we can reuse much of that code for our redistricting application. That said, because the redistricting application will require additional storage, we will use a 50-gigabyte SQL Azure database, and may consider supplementing our storage solution with Windows Azure Table storage. These features will support the new application that will allow citizens to participate in the redistricting process online by submitting designs for suggested district boundaries.

MSDN: What kinds of benefits are you realizing with Windows Azure?

West: Based on the information we gathered from MyFloridaCensus, we learned that of the 19,000 citizens who reported their census status, 2,250 households were initially missed by the U.S. Census Department. Each resident represents $1,500 in federal funds that we receive, so having accurate census data has a positive fiscal impact for our state. In addition, we can quickly scale up without the costs of an on-premises infrastructure. In fact, we estimate that we will avoid approximately $300,000 in infrastructure costs. However, the savings and federal funding are only part of the story. The big picture is that Floridians will be able to attain unprecedented levels of participation in defining their elected representation for the decade to follow.

- Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000007975

- To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Mary Jo Foley (@maryjofoley) reported “Microsoft is promising ‘Windows Azure integration’ with the coming release, but the Microsoft-hosted version of CRM 2011 won’t be running on Windows Azure” in her Microsoft to deliver public betas of CRM 2011 and app store in mid-September post of 9/9/2010 to ZDNet’s All About Microsoft blog:

Microsoft is signing up testers, as of September 9, who want to kick the tires of the company’s next-generation CRM software, service and app store.

The betas won’t be available until later this month (next week some time in the case of Dynamics CRM 2011, and before the end of the month in the case of the Dynamics Marketplace), Microsoft officials said. The sign-up site for the CRM 2011 beta is http://www.crm2011beta.com.

Update: Some testers aren’t having to wait and are getting access to the beta bits immediately, as of September 9. (Here’s a link to the on-premises CRM 2011 beta code from the Microsoft Download site.) A spokesperson said: “The team has started to roll out access especially [to] folks that had signed up earlier to be informed of the beta. Essentially how the process works is that once people sign up for the beta they get a confirmation saying that they will have details sent to their email address and looks like the team is already starting to respond/provide access.” But the official beta delivery timing is “by next week.”

The final version of the 2011 release of the CRM Online service — which users can opt to have hosted by Microsoft or Microsoft’s partners — is slated for before the end of 2010. The final version of the Dynamics CRM 2011 software is due in early 2011.

Microsoft has been testing privately its next CRM release, codenamed “CRM 5,” since earlier this year. The coming beta is the first public test build. The beta of the service will be available in eight languages and 36 markets when they launch next week.

The Dynamics Marketplace, which is Microsoft’s answer to Salesforce’s AppExchange store, will be a free way for customers to find hundreds of applications and services that build on top of Microsoft’s CRM/xRM platforms. Earlier this summer, Microsoft officials said the Marketplace would launch in September, but didn’t mention that it would launch as a beta.

CRM 2011 will add a native Microsoft Outlook Client, an Office-like Ribbon and more user personalization options. It also will provide guided process dialogs, inline data visualizations, performance and goal management capabilities and real-time dashboards, company officials said.

Microsoft is promising “Windows Azure integration” with the coming release, but the Microsoft-hosted version of CRM 2011 won’t be running on Windows Azure. (CRM Online is hosted in Microsoft datacenters, but won’t be hosted on Azure until some unspecified time in the future, execs have said.) Microsoft also is offering “contextual Microsoft SharePoint document repositories” with the coming version, but still has not made CRM a fully integrated component of its Microsoft-hosted Business Productivity Online Suite (BPOS) of services.

The Dynamics CRM 2011 software and service will be available in English, French, German, Hebrew, Italian, Japanese, Spanish, and Chinese. The beta of Microsoft Dynamics CRM 2011 on-premises software will be available worldwide, and the beta of Microsoft Dynamics CRM Online will be available in Austria, Australia, Brazil, Belgium, Canada, Colombia, Czech Republic, Chile, Denmark, Finland, France, Germany, Greece, Hong Kong, Hungary, India, Ireland, Israel, Italy, Japan, Luxembourg, Malaysia, Mexico, Netherlands, New Zealand, Norway, Poland, Portugal, Puerto Rico, Romania, Singapore, Spain, Sweden, Switzerland, the United Kingdom, and the United States.

Robert McNeill and Bill McNee coauthored an EU SaaS Temperatures Are Rising Research Report for Saugatuck Technology on 9/8/2010 (site registration required):

What is Happening?

SaaS demand continues to grow in Europe, despite the tough economic climate over the past two years. While a variety of established global SaaS brands have led the charge, European buyers have clearly sought out regional and pan-European providers with specific knowledge and offerings that address local market issues and/or regulatory challenges.

Further, European buyers are bucking the growing trend in the US toward buying direct from SaaS solution providers, and instead are maintaining their long-held preference to purchase and work with regional consultancies, VARs, SIs and other channel and deployment partners who have local resources and value-add. The move toward building custom cloud applications and the deployment of broader cloud platforms will no doubt only reinforce this preference.

The authors continue with the usual “Why is it Happening” and “Market Impact” topics, supporting their conclusions with a survey that had “more than 200 European responses.”

Don MacVittie (@dmacvittie) asserted “It is the nature of Internet communications that it will still be difficult to maintain information over the life of the session” as a preface to his How Developers Will Impact Cloud Expenses post of 9/8/2010:

We developers used to be obsessed with optimizations. Like a child with an Erector Set and a whole lot of spare parts, we always wanted to “make it better”. In our case, better was faster and using less memory/CPU resources. Given where development came from (a few kilobytes of memory, a much slower CPU, and non-optimizing compilers), this all made sense. But the rest of IT, and indeed the business, didn’t want to see us build our Erector Set higher or make our code more complex but more efficient; machines were speeding up at a relatively constant rate, and the need was no longer there.

Flash forward to today, and we have multiple cores running at hundreds of times the speed of the 286 and 386 families, memory that would have been called “infinite” or “unbelievable” in those days, compilers that optimize, the web server and networking layers in front of most apps, and everything from the bus to the hard disk running faster. You would think that the need to optimize was 100% behind us, right?

WRONG

Just as I am rediscovering Erector Sets and their new-new-newest competitors (like the great educational robot kits from OWI Robotics shown at right) with The Toddler, the cycle has completed, and developers are going to find themselves needing to recall how to optimize. Their optimizations will generally be in a slightly different vein than they were in the past (optimizing compilers do a “good enough” job at the things we used to worry about, like loops and counters), but it will be optimization just the same. Most cloud providers charge you in a variety of ways, including throughput, number of servers, amount of storage, and CPU utilization. The list goes on; depending upon your provider, some subset of these will be on your bill. Even if your cloud is internal-only, the constraint of having X resources is real, and the network is once again going to be the bottleneck. The ability to “spin up” another 100 or 1000 instances means your infrastructure (servers and network in particular) is going to have to work a lot harder to maintain performance.

The reason is simple. Cloud services charge by what you use. If your application is high-volume and you use more resources than are strictly necessary, the Cost of Cloud Over Time indicates that you will have to reduce your expenses one way or another, and optimizing your code to use fewer of the resources you’re paying for by the month is one of the obvious choices.

No, I’m not saying that we’ll be delving down into assembler or inlined routines again; that’s what the optimizing compiler and/or interpreter does for you. I am saying that unnecessary network connections, CPU-hungry routines that could be written more intelligently, and the bulk of what you send over the network in response to user queries will have room for improvement, and your organization will be motivated to take advantage of that room to squeeze extra dollars out of the IT budget.
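One developer-side way to trim the bulk sent in response to user queries is to serialize compactly and compress when the client allows it. The following is a minimal Python sketch, assuming an HTTP handler that returns JSON and already knows whether the request carried `Accept-Encoding: gzip`; the function name `encode_response` and the sample rows are hypothetical, not part of any framework.

```python
import gzip
import json


def encode_response(payload: dict, accepts_gzip: bool) -> tuple:
    """Serialize a JSON response body, gzip-compressing it if the client accepts gzip.

    Returns (body_bytes, headers). Compact separators drop the whitespace that
    json.dumps adds by default, so fewer bytes leave the server either way.
    """
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if accepts_gzip:
        body = gzip.compress(body)
        headers["Content-Encoding"] = "gzip"
    headers["Content-Length"] = str(len(body))
    return body, headers


if __name__ == "__main__":
    # Hypothetical query result: 500 similar rows, typical of a list view.
    rows = [{"id": i, "status": "active", "region": "us-east"} for i in range(500)]
    plain, _ = encode_response({"rows": rows}, accepts_gzip=False)
    packed, _ = encode_response({"rows": rows}, accepts_gzip=True)
    print(len(plain), "bytes uncompressed,", len(packed), "bytes gzipped")
```

Repetitive JSON like this compresses well; how much you save on billed outbound transfer depends on your own payloads.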

LOTS TO WORRY ABOUT, BUT NOT EVERYTHING

Storage will have similar issues, but I contend that developers have less control over the storage aspect, as application data will likely be only a small percentage of what is saved in unstructured formats. Databases, on the other hand, will require the services of DBAs again. Optimizing access speed (generally by rolling back from third normal form) and the amount of data being stored (generally by applying the strictest forms of normalization) will require experience and forethought. Most developers aren’t specialists in database access patterns, and databases are not yet smart enough to do this for you.

There are non-development ways to handle network traffic too, at least the bulk sent over the network, via tools like F5’s LTM and WOM that reduce the number of packets or compress their contents, reducing your overall throughput for billing purposes. But connections that don’t need to be made in the first place? Developers will have to ferret those out. Take the case where you do a DNS lookup (nslookup) on an IP address and that information is later needed in a different part of the code: rather than do another lookup, it will be worthwhile to take the time to pass the information around or (don’t tell anyone I suggested this) make it global to the application.
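A minimal Python sketch of the “look it up once, then pass it around” idea, assuming reverse lookups via the standard library’s `socket.gethostbyaddr`. The class name `ReverseDnsCache` is hypothetical. One shared instance, created at startup and handed to the code that needs it (or kept at module level), means each address costs at most one network round trip per process.

```python
import socket
from typing import Callable, Dict


class ReverseDnsCache:
    """Resolve each IP address once per process and reuse the answer.

    `resolver` defaults to socket.gethostbyaddr, which returns
    (hostname, aliaslist, ipaddrlist); any callable with that shape works.
    Failed lookups raise and are not cached, so they are retried next time.
    """

    def __init__(self, resolver: Callable[[str], tuple] = socket.gethostbyaddr) -> None:
        self._resolver = resolver
        self._names: Dict[str, str] = {}
        self.lookups = 0  # number of real (network) lookups performed

    def hostname(self, ip: str) -> str:
        if ip not in self._names:
            self.lookups += 1
            self._names[ip] = self._resolver(ip)[0]
        return self._names[ip]
```

This cache deliberately ignores DNS time-to-live values; for long-running processes you would want to expire entries.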

It is the nature of Internet communications that it will still be difficult to maintain information over the life of a session, but again, there are solutions, most involving the DB or client-side storage. Both increase your network usage, but one of the two is probably a necessary evil given the way the Internet grew up. Note that I said one of the two, not both: in the age of cloud, if you store session info in the DB and also send it back to the client, you’re paying double. The debate over which is better has been concluded and re-concluded more times than I can count; cloud only changes it in the sense that many cloud providers charge you for inbound and outbound network usage. If your cloud is internal, you probably only care if you are burying your Internet connection (something products like our WOM and EDGE Gateway are designed to help alleviate).
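To see why keeping session state in both places means paying double, here is a back-of-the-envelope sketch in Python. It assumes, as a simplification, that each store costs one full read and one full write of the session per request; whether DB traffic is actually billed depends on your provider (same-datacenter traffic is often free), and the 4 KB session and 10 million requests are hypothetical.

```python
from typing import Iterable

GIB = 2 ** 30  # bytes per GB, using binary gigabytes


def monthly_session_transfer_gb(session_bytes: int, requests_per_month: int,
                                stores: Iterable[str]) -> float:
    """Estimate GB per month moved only to carry session state.

    Simplifying assumption: every store holding the session ("db" or
    "client") costs one full read plus one full write of it per request.
    """
    chosen = set(stores)
    unknown = chosen - {"db", "client"}
    if unknown:
        raise ValueError(f"unknown store(s): {sorted(unknown)}")
    per_request_bytes = 2 * session_bytes * len(chosen)
    return per_request_bytes * requests_per_month / GIB


if __name__ == "__main__":
    one = monthly_session_transfer_gb(4096, 10_000_000, ["db"])
    both = monthly_session_transfer_gb(4096, 10_000_000, ["db", "client"])
    print(f"one store: {one:.1f} GB/month, both: {both:.1f} GB/month")
```

Under these assumptions one store moves roughly 76 GB a month and both move roughly 153 GB; multiply by your provider’s per-GB rate to put a price on it.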

So think about what you’re doing when you’re doing it, optimize as you code, but optimize with an eye to “is this going to cost money in the cloud”, because the simple optimizations of old are taken care of for you, and your monthly cloud bill could be smaller.

Meanwhile, I’m going to snag the dinosaur erector kit next time I’m at HobbyTown USA, just so it is around when The Toddler is old enough to use it. And no, I have no relationship with any of the toy vendors mentioned, other than The Toddler loves the T3 Robot pictured above.

<Return to section navigation list>

Visual Studio LightSwitch

Maureen O’Gara reported “TrackVia, the Denver cloud-based database outfit, has added a cloud database application development platform” in a preface to her Cloud Databases for Dummies post of 9/9/2010:

TrackVia, the Denver cloud-based database outfit, has added a cloud database application development platform that's supposed to make the cloud accessible to non-technical business users by letting them build their own business-critical database-driven applications instead of waiting around interminably for IT or some software developer to conjure something up that probably won't suit anyway.

It claims IT won't mind - and might even become corporate champions - because the scalable widgetry is secure, with reliable support and enterprise-class controls. Heck, it means less work for them and no upfront costs.

It's supposed to put a very simple user interface on top of a feature-rich relational database that can be securely accessed and shared over the web.

The four-year-old company, backed by Access Venture Partners, Flywheel Ventures and folks like Rackspace founder Lew Moorman, claims a thousand enterprise, small business and government customers around the world for its 4Nines online database, a competitor of Intuit's QuickBase.

Collectively, these customers represent more than a billion records, including a bunch that belong to Honda, which stumbled on TrackVia during a desperate Google search for an "online database easy" in 2008, when the bottom fell out of the car market and it needed to keep tabs on all the millions of dollars' worth of vehicles it was warehousing here, there and everywhere. Nothing else reportedly suited. Once it found TrackVia, Honda was up and running in a couple of days.

Twenty-four groups across Honda-Acura now use the stuff, according to TrackVia COO Chris Basham, who called it a testament to TrackVia's viral nature once it gets inside a company.

Other users include Brink's, ADP, Khosla Ventures, Liberty Mutual, Prudential, Shell, Subaru, Toyota, Bed Bath & Beyond, Dow, the United States Olympic Committee and US Cellular.

TrackVia revolves around an integrated "data canvas" that lets users view, analyze, manipulate and apply productivity tools to their records in real-time - without losing sight of the data they're working with. They can feed data from web forms; export data to Excel; search, filter and create customized views that include table, calendar, map and graph formats; import Word documents; display address data on a Google Map; blast out mass e-mails; create labels; time, user, and date-stamp every change; and synchronize with other third-party apps and databases.

There are no theoretical limits on the number of records or attached files, and all the data is backed up.

Aside from a 14-day free trial, subscriptions start at $99 a month for five users, 100,000 records and 1GB of storage with a basic set of features. Ten users cost $249 a month for 300,000 records, 4GB of storage and workgroup features. Five hundred users run $4,999 a month for a million records, 40GB of storage and professional features like a 99.5% SLA.

TrackVia employs a multi-channel approach appealing to resellers, infrastructure cloud providers, integrators and consultants and developers and ISVs looking to SaaS-enable or annuitize their solutions.

The company uses Rackspace for its cloud, [and is] meaning to add Amazon.

<Return to section navigation list>

Windows Azure Infrastructure

Roberto Bonini compared Windows Azure and Google App Engine usage costs in his Core Competencies and Cloud Computing post of 9/9/2010:

Wikipedia defines Core Competency as:

Core competencies are particular strengths relative to other organizations in the industry which provide the fundamental basis for the provision of added value. Core competencies are the collective learning in organizations, and involve how to coordinate diverse production skills and integrate multiple streams of technologies. It is communication, an involvement and a deep commitment to working across organizational boundaries.

So, what does this have to do with Cloud Computing?

I got thinking about different providers of cloud computing environments. If you abstract away the specific feature set of each provider what were the differences remaining that set these providers apart from each other.

Now, I actually starting thinking about this backwards. I asked myself why Microsoft Windows Azure couldn’t do a Google App Engine and offer free applications. I had to stop myself there and go off to Wikipedia and remind myself of the quotas that go along with an App Engine free application:

Hard limits

- Apps per developer: 10

- Time per request: 30 sec

- Blobstore size (total file size per app): 2 GB

- HTTP response size: 10 MB

- Datastore item size: 1 MB

- Application code size: 150 MB

Free quotas

- Emails per day: 2,000

- Bandwidth in per day: 1,000 MB

- Bandwidth out per day: 1,000 MB

- CPU time per day: 6.5 hours

- HTTP Requests per Day: 1,300,000*

- Datastore API calls per day: 10,000,000*

- Data stored: 1 GB

- URLFetch API calls per day: 657,084*
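To make those limits concrete, here is a small Python sketch that checks a day’s usage against the free daily quotas listed above. The values are the 2010 figures quoted in the post (Google has changed them since), the metric key names are hypothetical, and the hard limits and the 1 GB stored-data quota are left out because they are not per-day figures.

```python
# Free daily quotas as listed above (2010 values).
FREE_DAILY_QUOTAS = {
    "emails": 2_000,
    "bandwidth_in_mb": 1_000,
    "bandwidth_out_mb": 1_000,
    "cpu_hours": 6.5,
    "http_requests": 1_300_000,
    "datastore_api_calls": 10_000_000,
    "urlfetch_api_calls": 657_084,
}


def over_quota(daily_usage: dict) -> dict:
    """Return {metric: (used, limit)} for each metric above its free daily quota.

    Metrics not in FREE_DAILY_QUOTAS are ignored.
    """
    return {
        metric: (used, FREE_DAILY_QUOTAS[metric])
        for metric, used in daily_usage.items()
        if metric in FREE_DAILY_QUOTAS and used > FREE_DAILY_QUOTAS[metric]
    }


if __name__ == "__main__":
    # Hypothetical day for a small feed-reader app.
    today = {"http_requests": 250_000, "cpu_hours": 7.25, "bandwidth_out_mb": 640}
    print(over_quota(today))  # only cpu_hours exceeds its quota
```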

Now, the reason I even asked this question was that I got whacked with quite a bill for the original Windows Azure Feed Reader I wrote earlier this year. That was for my honours year university project, so I couldn’t really complain. But looking at those quotas from Google, I could have done that project many times over for free.

This got me thinking. Why does Google offer that and not Microsoft? Both of these companies are industry giants, and both have boatloads of CPU cycles.

Now, Google, besides doing its best not to be evil, benefits when you use the web more. And how do they encourage that? They go off and create Google App Engine. Then they allow the average dev to write the app they want to write and run it. For free. Seriously, how many websites run on App Engine’s free offering?

Second, Google is a Python shop. Every time someone writes a new library or comes up with a novel approach to something, Google benefits. As Python use increases, some of that code is going to be contributed right back into the Python open source project. Google benefits again. Python development is a Google core competency.

Finally, Google is much maligned for its approach to software development: throw stuff against the wall and see what sticks. The more devs it gives space to go crazy, the more apps are going to take off.

So, those are all Google’s core competencies:

- Encouraging web use

- Python

- See what sticks

And those are perfectly reflected in App Engine.

Let’s contrast this with Microsoft.

Microsoft caters to writing line of business applications. They don’t mess around. Their core competency, in other words, is other companies’ IT departments. Even when one looks outside the developer side of things, one sees that Microsoft Office and Windows are offered primarily to the enterprise customer. The consumer versions of said products aren’t worth the bits and bytes they take up on disk. Hence, Windows Azure is aimed squarely at companies who can pay for it, rather than enthusiasts.

Secondly, Windows Azure uses the .Net Framework, another uniquely Microsoft core competency. With it, it leverages the C# language. Now, it is true that .Net is not limited to Windows, nor is Windows Azure a C#-only affair. However, anything that runs on Windows Azure leverages the CLR and the DLR, two pieces of technology that make .Net tick.

Finally, and somewhat related, Microsoft has a huge install base of dedicated Visual Studio users. Microsoft has leveraged this by creating a comprehensive suite of Windows Azure Tools.

Hopefully you can see where I’m going with this. Giving stuff away for free for enthusiasts to use is not a Microsoft core competency. Even with Visual Studio Express, there are limits, clearly defined by what enterprises would need. You need to pay through the nose for those.

So Microsoft’s core competencies are:

- Line of Business devs

- .Net, C# and the CLR/DLR

- Visual Studio

Now, back to what started this thought exercise: Google App Engine’s free offering. As you can see, it’s a uniquely Google core competency, not a Microsoft one.

Now, what core competencies does Amazon display in Amazon Web Services?

Quite simply, Amazon doesn’t care who you are or what you want to do; they will provide you with a solid service at a very affordable price and sell you all the extra services you can handle. Amazon does the same thing with everything else, so why not cloud computing? Actually, AWS is brilliantly cheap. Really. This is Amazon’s one great core competency, and they excel at it.

So, back to what started this thought exercise: a free option. Because of its core competencies, Google is uniquely positioned to offer one. And thinking about it this way, the reason for Microsoft’s and Amazon’s lack of a similar offering becomes obvious.

Also, I mentioned the cost of Windows Azure.

Google App Engine and its free option mean that university lecturers are choosing to teach their classes using Python and App Engine rather than C# and Windows Azure.

Remember what a core competency is. Wikipedia defines Core Competency as:

Core competencies are particular strengths relative to other organizations in the industry which provide the fundamental basis for the provision of added value. Core competencies are the collective learning in organizations, and involve how to coordinate diverse production skills and integrate multiple streams of technologies. It is communication, an involvement and a deep commitment to working across organizational boundaries.

I guess the question is, which offering makes the most of its parent company’s core competencies? And is this a good thing?

General Chicken passed on 6 Scalability Lessons on 9/9/2010 to the High Scalability blog:

Jesper Söderlund not only put together a few interesting scalability patterns, he also came up with a few interesting scalability lessons:

- Lesson #1. Put Smarty compile and template caches on an active-active DRBD cluster with high load and your servers will DIE!

- Lesson #2. Don't use out-of-the-box configurations.

- Lesson #3. Single points of contention will eventually become a bottleneck.

- Lesson #4. Plan in advance.

- Lesson #5. Offload your databases as much as possible.

- Lesson #6. File systems matter and can run out of space / inodes.

For more details and explanations see the original post.
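Lesson #6 is easy to monitor for. A minimal Python sketch, assuming a Unix host, that uses the standard library’s `os.statvfs` to report free space and free inodes together, since a file system can run out of inodes while gigabytes of space remain. The function names and the 10% threshold are hypothetical choices.

```python
import os
from typing import Dict, List, Optional


def filesystem_headroom(path: str = "/") -> Dict[str, Optional[float]]:
    """Percent of free space and free inodes on the file system holding `path`.

    Uses os.statvfs (Unix only). f_bavail and f_favail count what an
    unprivileged process can still use. Some file systems report zero
    total inodes; in that case the inode figure is None.
    """
    st = os.statvfs(path)
    space = 100.0 * st.f_bavail / st.f_blocks if st.f_blocks else None
    inodes = 100.0 * st.f_favail / st.f_files if st.f_files else None
    return {"free_space_pct": space, "free_inodes_pct": inodes}


def headroom_warnings(path: str = "/", min_pct: float = 10.0) -> List[str]:
    """Names of the metrics for `path` that have dropped below `min_pct` percent free."""
    return [
        name for name, pct in filesystem_headroom(path).items()
        if pct is not None and pct < min_pct
    ]


if __name__ == "__main__":
    print(filesystem_headroom("/"), headroom_warnings("/"))
```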

Reuven Cohen posted Random Access Compute Capacity (RACC) on 9/9/2010:

Forgive me, for it's been a while since my last post. Between the latest addition to my family (little Finnegan) and some new products we have in the works at Enomaly, I haven't had much time to write.

One of the biggest issues I have when I hear people talking about developing data-intensive cloud applications is that they are stuck in a historical point of view. The consensus is that this is how we've always done it, so it must be done this way. The problem starts with the fact that many seem to look at cloud apps as an extension of how they've always developed apps in the past. A server is a server, and an application is a singular component connected to a finite set of resources, be it RAM, storage, network, I/O or compute. The trouble with this development point of view is the concept of linear deployment and scale. The typical cloud development pattern we think of is building applications that scale horizontally to meet the potential and often unknown demands of a given environment, rather than one that focuses on the metrics of time and cost. Today I'm going to suggest a more global, holistic view of application development and deployment: a view that looks at global computing in much the same way you would treat memory on a server, as a series of random, transient components.

Before I begin: this post is two parts theoretical and one part practical. It's not meant to solve the problem so much as to address alternative ways to think about the problems facing increasingly global, data-centric and time-sensitive applications.

When I think of cloud computing, I think of a large, seemingly infinite pool of computing resources available on demand, at any time, anywhere. I think of these resources as mostly untrusted, from a series of suppliers that may exist in countless regions around the world. Rather than focus on the limitations, I focus on the opportunities this new globalized computing world enables, in a world where data and its transformation into usable information will mean the difference between those who succeed and those who fail. It's a world where time is just as important as user performance, and where what counts is the ability to transform useless data into usable information. I believe those who accomplish this more efficiently will ultimately win over their competitors. Think Google vs. Yahoo.

For me it's all about time. When looking at hypervisors, I'm typically less interested in the raw performance of the VM than in the time it takes to provision a new VM. In a VM world, the time it takes to get a VM up and running is arguably just as important a metric as the performance of that VM after it's been provisioned. Yet for many in the virtualization world, this idea of provisioning time seems to be of little interest. If you treat a VM like a server, sure, 5 or 10 minutes to provision it is fine, as long as you intend to use it like a traditional server. But for those who need quick, ad hoc access to computing capacity, 10 minutes to deploy a server that may only be used for 10 minutes is a huge overhead. If I intend to use that VM for 10 minutes, then the ability to deploy it in a matter of seconds becomes just as important as the performance of the VM while operational.
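The arithmetic behind that overhead is simple enough to sketch in Python: the share of total elapsed time spent waiting for provisioning. The 30-second boot time is a hypothetical comparison point, not a measured figure.

```python
def provisioning_overhead(provision_seconds: float, use_seconds: float) -> float:
    """Fraction of total elapsed time spent waiting for a VM to be provisioned."""
    total = provision_seconds + use_seconds
    if provision_seconds < 0 or use_seconds < 0 or total == 0:
        raise ValueError("times must be non-negative and not both zero")
    return provision_seconds / total


if __name__ == "__main__":
    ten_minutes = 600
    print(provisioning_overhead(ten_minutes, ten_minutes))  # 10-minute boot, 10-minute job: 0.5
    print(round(provisioning_overhead(30, ten_minutes), 3))  # hypothetical 30-second boot: 0.048
```

Half of the elapsed time disappears into provisioning in the 10-minute case, versus under 5% when the VM is ready in seconds.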

I've started thinking about this need for quick, short-term computing resources as Random Access Compute Capacity. The idea of Random Access Capacity is not unlike the concept of cloud bursting, but with a twist: the capacity itself can come from any number of sources, trusted (in-house) or from a global pool of random cloud providers. The strength of the concept is in treating any and all providers as a nameless, faceless and possibly unsecured group of providers of raw, localized computing capability. By removing trust completely from the equation, you begin to view your application development methods differently. You see a provider as a means to accomplish something in smaller, anonymous, asynchronous pieces. (Asynchronous communication is a mediated form of communication in which the sender and receiver are not concurrently engaged in communication.) Rather than moving large macro data sets, you move micro pieces of data across a much larger federated pool of capacity providers (edge-based computing), transforming data closer to the end users of the information rather than centrally. Any single provider doesn't pose much of a threat, because each application workload is useless unless it is part of a completed set.
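A toy Python sketch of the split, scatter and reassemble pattern described above, assuming interchangeable providers that each apply the same transformation. Here local functions run in a thread pool stand in for anonymous remote providers; `chunk`, `scatter_gather` and the round-robin assignment are hypothetical illustrations, and a real system would add network transport, retries, and some way to verify results from untrusted providers (for example, sending the same piece to two providers and comparing).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

Provider = Callable[[Sequence], list]


def chunk(data: Sequence, size: int) -> List[Sequence]:
    """Split data into pieces of at most `size` items, preserving order."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [data[i:i + size] for i in range(0, len(data), size)]


def scatter_gather(data: Sequence, size: int, providers: List[Provider]) -> list:
    """Send small pieces to providers asynchronously, then reassemble them.

    Pieces are assigned round-robin, so each provider only sees its own
    fragments; the full result exists only once every piece has come back.
    """
    if not providers:
        raise ValueError("need at least one provider")
    pieces = chunk(data, size)
    with ThreadPoolExecutor(max_workers=len(providers)) as pool:
        futures = [
            pool.submit(providers[i % len(providers)], piece)
            for i, piece in enumerate(pieces)
        ]
        # Iterating futures in submission order restores the original order,
        # whichever provider happens to finish first.
        return [item for f in futures for item in f.result()]
```

For example, `scatter_gather(list(range(10)), 3, [square] * 3)` with `square = lambda p: [x * x for x in p]` returns the squares of 0 through 9 in order.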

Does this all sound eerily familiar? Well it should, it's basically just grid computing. The difference between grid computing of the past and today's cloud based approach is one of publicly accessible capacity. The rise of regional cloud providers means that there is today a much more extensive supply of compute capacity from just about every region of the world. Something that previously was limited to mostly the academic realms. It will be interesting to see what new applications and approaches emerge from this new world wide cloud of compute capacity.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

J. D. Meier reported Windows Azure Security Notes Posted to the Security TechCenter on 9/8/2010:

Our Windows Azure Security Notes are now available under the Highlights section on TechNet’s Security TechCenter.

It’s a collection of common application scenarios for Web applications, Web services, and data on Azure, and it’s a map of common threats, attacks, vulnerabilities, and countermeasures. It also explains the approach we used to understand the security impact of the various application models on Windows Azure.

You can use the examples from the application scenarios in the notes as a way to help you map out and model your application scenarios. Even just knowing how to whiteboard Windows Azure application scenarios can go a long way, especially in bridging the gap between application design and runtime deployment.

<Return to section navigation list>

Cloud Computing Events

Nick Bowyer interviews David Robinson on 9/9/2010 in a 00:05:41 Cloud & SQL Azure Tech Talk with David Robinson (NZ 2010) video:

Nick Bowyer interviews David Robinson about SQL Azure at Tech.Ed New Zealand 2010.

Repeated from the SQL Azure Database, Codename “Dallas” and OData section above.

Scott Klein announced on 9/9/2010 in an Azure Boot Camp in Calgary post that he will present at the Boot Camp on 10/2/2010 at Nexen Inc., 801-7th Avenue S.W., Calgary, AB, Canada T2P 3P7:

The last few months have been extremely busy. Herve and I have been heads down working furiously on the SQL Azure book. There is a light at the end of the tunnel; finally, we are wrapping up the final chapters this week. Along with that, we put on a SQL Saturday event, and continue to run two local SQL Server User Group[s] in the area.

However, as if we weren't busy enough, we have run and presented at several Azure Boot Camps around the area, and I have been asked to be the presenter at the Calgary Azure Boot Camp in October. The date for this FREE event is October 2nd and the location is here:

- Nexen Inc.

- 801-7th Avenue S.W.

- Calgary, AB, Canada T2P 3P7

I am very excited for the opportunity to go to Calgary, and I need to thank Noel Tan for not letting this drop. He has been on the ball with this and we should get a great turnout.

So, if you are in the area and want to come learn about Azure, come on by! Did I mention this event is FREE?

The location contradicts the one listed at http://windowsazurebootcamp.com/city/calgary.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

My (@rogerjenn) Amazon Starts Cloud Price War with Micro EC2 Linux Instances at $0.02/Hour ($0.007/Hour Spot) article of 9/9/2010 quotes Jeff Barr’s New Amazon EC2 Micro Instances - New, Low Cost Option for Low Throughput Applications post to the AWS blog of the same date and the most popular threads in the Windows Azure Feature Voting Forum:

Microsoft developers have clamored for lower priced Windows Azure compute instances since the Windows Azure Services Platform went into commercial service on 1/4/2010. Here are the two most-requested features on the Windows Azure Feature Voting Forum as of 9/9/2010:

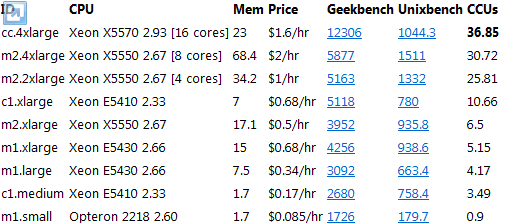

Following is a comparison of the monthly (30-day) prices of the least expensive AWS and Windows Azure compute instances:

A Windows Azure compute instance is four times more expensive than an EC2 Micro instance with standard pricing, and almost ten times more expensive compared with Amazon’s spot pricing.
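The monthly figures are just hourly rate × 24 × 30. A small Python sketch reproducing them from the hourly prices quoted in this post ($0.02 and $0.03 for EC2 Micro on Linux and Windows, $0.08 for Rackspace Cloud Servers for Windows). The Windows Azure Small instance rate of $0.12/hour is not stated in this excerpt; it is the published rate at the time and is included here as an assumption.

```python
HOURS_PER_30_DAYS = 24 * 30  # the 30-day month used in the comparison


def monthly_cost(hourly_rate: float, hours: int = HOURS_PER_30_DAYS) -> float:
    """Dollar cost of running one instance around the clock for `hours` hours."""
    return round(hourly_rate * hours, 2)


# Hourly rates (USD) for the smallest instances, September 2010.
HOURLY_RATES = {
    "EC2 Micro, Linux": 0.02,
    "EC2 Micro, Windows": 0.03,
    "Rackspace Cloud Servers for Windows, 1 GB": 0.08,
    "Windows Azure Small (assumed $0.12/hour)": 0.12,
}

if __name__ == "__main__":
    for name, rate in HOURLY_RATES.items():
        print(f"{name}: ${monthly_cost(rate):.2f} per 30 days")
    ratio = monthly_cost(0.12) / monthly_cost(0.03)
    print(f"Azure Small vs. EC2 Micro (Windows): {ratio:.1f}x")
```

This gives $14.40, $21.60, $57.60 and $86.40 per 30 days, and the 4.0x ratio matches the “four times” figure. With a 31-day month, `monthly_cost(0.02, 24 * 31)` is $14.88, consistent with the “under $15/month” figure for a Linux Micro instance.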

Another recent Windows-in-the-cloud pricing event: Mary Jo Foley (@maryjofoley) reported Rackspace fills out its Windows cloud story in a 9/8/2010 post to her All About Microsoft ZDNet blog:

“Pricing for the [Rackspace] Cloud Servers for Windows hosting service begins at $0.08 an hour for 1GB RAM and 40GB disk.”

When will we hear a response from Microsoft?

The CloudHarmony blog posted Benchmarking of EC2's new Cluster Compute Instance Type on 9/9/2010:

Two months ago Amazon Web Services released a new "Cluster Compute" EC2 instance type, the cc.4xlarge. This new instance type is targeted for High-Performance Computing (HPC) such as computationally intensive scientific applications. The major differences between this and other EC2 instance types are:

- Dual quad-core "Nehalem" X5570 2.93 GHz processors: compared with X5550 2.67 GHz processors for the next largest m2 instance types. Amazon states this CPU configuration provides 33.5 ECU (EC2 Compute Units) compared with 26 ECUs for their m2.4xlarge instance type (previously the largest instance type)

- Hardware-Assisted Virtualization (HVM): compared with paravirtualization used by other instance types

- Multi-node 10 Gbps clustering capabilities: instances can be deployed to separate "Placement Groups" wherein each such group has non-blocking, low latency 10 Gbps network connectivity

Previously, we published 5 blog posts on cloud server performance which did not include this new EC2 instance type (cc.4xlarge) including:

- What is an ECU? CPU Benchmarking in the Cloud

- Disk IO Benchmarking in the Cloud

- Cloud Server Benchmarking Part 3: Java, Ruby, Python and PHP

- Cloud Server Benchmarking Part 4: Memory IO

- Cloud Server Benchmarking Part 5: Encoding & Encryption

The purpose of this post is to highlight the new EC2 Cluster Compute instance type in the context of these benchmarks and how it performs relative to the other EC2 instance types and servers in other IaaS clouds. For specifics on how the benchmarks are conducted and scores calculated, review the previous blog posts linked above. The benchmarks were performed on an individual cc.4xlarge instance and measure performance of a single instance only. The most beneficial feature of this new instance type is the clustering capabilities via 10 Gbps non-blocking network, which is not highlighted in this post.

The new cluster compute instance type is currently only available in Amazon's US-East region. The benchmark results tables below show only EC2 instances from that same region. NOTE: Although the EC2 documentation states that the cluster compute instance is assigned 2 quad core processors (8 cores total), the processors' hyper-threading capabilities resulted in benchmarks reporting 16 total cores.

CPU Performance

As described in the original post, we calculated CPU performance using a metric we created called the CCU, which is based on Amazon's ECU. Amazon states that the new cluster compute instance type should provide 33.5 ECUs. This is fairly close to our calculated 36.85 ECUs. Overall, CPU performance was exceptionally good, exceeding the performance of every one of the 134 cloud servers in 28 IaaS clouds from the previous post except the Storm on Demand 48GB X5650 Westmere cloud server, which scored 42.87.

…

The post continues with charts of Disk IO Performance, Interpreted Programming Language Performance (Java, Python, Ruby, PHP), Memory IO Performance, and Encoding & Encryption Performance.

Conclusion

The new EC2 cluster compute instance type is an excellent performing cloud server. Performance exceeded that of most of the "bare metal" cloud servers we benchmarked previously. Combined with 10 Gbps non-blocking clustering capabilities, and on-demand deployment & hourly billing, this new instance type provides exceptional value and capabilities for HPC applications.

Doug Rehnstrom posted Save Even More with Amazon EC2’s Micro Instances to the Learning Tree site on 9/9/2010:

Yesterday I wrote about how we are going to save money using an open source CRM package called SugarCRM and Amazon’s EC2 cloud computing service. See the post: Saving Money Using the Cloud and Open Source Software.

The bottom line was that we were going to be able to implement a CRM system for only $700 per year. And we can have that system up and running in a couple hours.

This morning when I checked my email, there was a message from Amazon announcing their new “Micro Instances”. Below is a description of a Micro Instance pasted from Amazon’s Web site:

“Instances of this family provide a small amount of consistent CPU resources and allow you to burst CPU capacity when additional cycles are available. They are well suited for lower throughput applications and web sites that consume significant compute cycles periodically.” (See the link http://aws.amazon.com/ec2/#instance for more information.)

This description sounded an awful lot like the CRM system we are planning. So, I decided to set up a Micro Instance with SugarCRM installed and test it.

As per yesterday’s post it took me a couple hours to get the instance running and the software loaded. I tested it and it works just fine.

So now the system is not going to cost $700 per year. It’s less than $200 per year. (See the link http://aws.amazon.com/ec2/#pricing for more pricing information on EC2 instances.)

What’s even more amazing is I have the server up and running after only a couple hours.

With the introduction of Micro Instances the cost of using EC2 is comparable with that of a Web hosting service. However, we have complete control over our server within our virtual machine. We are not sharing the server with anyone else. Over time we can even scale our application up or down as needed by changing the instance size.

Lydia Leong rings in about Amazon introduces “micro instances” on EC2 on 9/9/2010:

Amazon has introduced a new type of EC2 instance, called a Micro Instance. These start at $0.02/hour for Linux and $0.03/hour for Windows, come with 613 MB of allocated RAM, a low allocation of CPU, and a limited ability to burst CPU. They have no local storage by default, requiring you to boot from EBS.

613 MB is not a lot of RAM, since operating systems can be RAM pigs if you don’t pay attention to what you’re running in your baseline OS image. My guess is that people who are using micro instances are likely to want to use a JeOS stack if possible. I’d be suggesting FastScale as the tool for producing slimmed-down stacks, except they got bought out some months ago, and wrapped in with EMC Ionix into VMware’s vCenter Configuration Manager; I don’t know if they’ve got anything that builds EC2 stacks any longer.

Amazon has suggested that micro instances can be used for small tasks — monitoring, cron jobs, DNS, and other such things. To me, though, smaller instances are perfect for a lot of enterprise applications. Tons of enterprise apps are “paperwork apps” — fill in a form, kick off some process, be able to report on it later. They get very little traffic, and consolidating the myriad tertiary low-volume applications is one of the things that often drives the most attractive virtualization consolidation ratios. (People are reluctant to run multiple apps on a single OS instance, especially on Windows, due to stability issues, so being able to give each app its own VM is a popular solution.) I read micro instances as being part of Amazon’s play towards being more attractive to the enterprise, since tiny tertiary apps are a major use case for initial migration to the cloud. Smaller instances are also potentially attractive to the test/dev use case, though somewhat less so, since more speed can mean more efficient developers (fewer compiling excuses).

This is very price-competitive with the low end of Rackspace’s Cloud Servers ($0.015/hour for 256 MB and $0.03/hour for 512 MB RAM, Linux only). Rackspace wins on pure ease of use, if you’re just someone who needs a single virtual server, but Amazon’s much broader feature set is likely to win over those who are looking for more than a VPS on steroids. GoGrid has no competitive offering in this range. Terremark can be competitive in this space due to their ability to oversubscribe and do bursting, making its cloud very suitable for smaller-scale enterprise apps. And VirtuStream can also offer smaller allocations tailored to small-scale enterprise apps. So Amazon’s by no means alone in this segment — but it’s a positive move that rounds out their cloud offerings.

James Hamilton reported Amazon EC2 for under $15/Month on 9/9/2010:

You can now run an EC2 instance 24x7 for under $15/month with the announcement last night of the Micro instance type. The Micro includes 613 MB of RAM and can burst for short periods up to 2 EC2 Compute Units (one EC2 Compute Unit equals a 1.0-1.2 GHz 2007 Opteron or 2007 Xeon processor). They are available in all EC2-supported regions and in 32- or 64-bit flavors.

The design point for Micro instances is to offer a small amount of consistent, always available CPU resources while supporting short bursts above this level. The instance type is well suited for lower throughput applications and web sites that consume significant compute cycles but only periodically.

Micro instances are available for Windows or Linux, with Linux at $0.02/hour and Windows at $0.03/hour. You can quickly start a Micro instance using the EC2 management console: EC2 Console.
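For those who prefer scripting to the console, here is a hedged sketch using the present-day AWS SDK for Python (boto3), which postdates this post. It uses `describe_images` and `run_instances`, and it checks that the AMI is EBS-backed, because, as noted above, Micro instances have no local storage and must boot from EBS. `t1.micro` is the API name of the original Micro type; it is a previous-generation type that may not be offered in every region today. The function name and the AMI ID in the usage comment are hypothetical.

```python
from typing import Any, Optional

MICRO_INSTANCE_TYPE = "t1.micro"  # EC2 API name of the original Micro instance type


def launch_micro_instance(ec2_client: Any, image_id: str,
                          key_name: Optional[str] = None) -> str:
    """Start one Micro instance and return its instance ID.

    `ec2_client` is expected to behave like boto3.client("ec2"). The AMI
    must be EBS-backed, so RootDeviceType is checked before launching.
    """
    images = ec2_client.describe_images(ImageIds=[image_id])["Images"]
    if not images or images[0].get("RootDeviceType") != "ebs":
        raise ValueError(f"{image_id} is not an EBS-backed AMI")
    params = {"ImageId": image_id, "InstanceType": MICRO_INSTANCE_TYPE,
              "MinCount": 1, "MaxCount": 1}
    if key_name is not None:
        params["KeyName"] = key_name
    response = ec2_client.run_instances(**params)
    return response["Instances"][0]["InstanceId"]


# Usage (requires boto3 and AWS credentials; the AMI ID is hypothetical):
# import boto3
# ec2 = boto3.client("ec2", region_name="us-east-1")
# print(launch_micro_instance(ec2, "ami-00000000"))
```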

- The announcement: Announcing Micro Instances for Amazon EC2

- More information: Amazon Elastic Compute Cloud (Amazon EC2)
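As a quick check on the “under $15/month” headline, the sketch below converts the hourly rates quoted in this post (Micro at $0.02 and $0.03 per hour, and Rackspace’s 256 MB and 512 MB Cloud Servers at $0.015 and $0.03 per hour) into a month of 24x7 running. It assumes a 31-day month (744 hours) as the worst case and ignores storage, bandwidth and any regional price differences. The function and constant names are illustrative.

```python
# Worst-case "month" for 24x7 running: 31 days x 24 hours = 744 hours.
HOURS_PER_31_DAY_MONTH = 24 * 31


def monthly_cost(hourly_rate: float, hours: int = HOURS_PER_31_DAY_MONTH) -> float:
    """Return the dollar cost of running one instance for `hours` hours."""
    return round(hourly_rate * hours, 2)


# Hourly rates as quoted in this post (September 2010); transfer and storage excluded.
RATES = {
    "EC2 Micro, Linux": 0.02,
    "EC2 Micro, Windows": 0.03,
    "Rackspace Cloud Servers 256 MB, Linux": 0.015,
    "Rackspace Cloud Servers 512 MB, Linux": 0.03,
}

if __name__ == "__main__":
    for name, rate in RATES.items():
        print(f"{name}: ${monthly_cost(rate):.2f}/month")
```

On these assumptions only the Linux Micro instance comes in under $15 ($14.88 for 744 hours). The Windows Micro instance works out to about $22.32, the same as Rackspace’s 512 MB server, while Rackspace’s 256 MB server is about $11.16.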

Dave Courbanou reported Cisco, Citrix Partner on HD Desktop Virtualization in this 9/9/2010 post to The VAR Guy blog:

Instead of competing with last week’s VMworld noise, Cisco and Citrix waited a few days to discuss their growing virtualization relationship. Specifically, Cisco and Citrix, who are no strangers to virtualization, are working on a new solution to provide high-definition virtual desktops and applications to all users in an enterprise, without breaking the bank. Can it be done? Read on for the details…

Cisco and Citrix say their solution’s priority is cost-effectiveness. Cisco’s new Desktop Virtualization solution with Citrix XenDesktop is designed to combine Cisco’s unified computing and Citrix’s desktop virtualization technologies in one happy package. Cisco says Citrix’s FlexCast and HDX technologies will be the tech behind the scenes.

Joint channel partners with both Cisco and Citrix can sell and make available this combined solution. Cisco and Citrix claim that their new partnership will help “accelerate the deployment of desktop virtualization with the best performance, user experience and cost savings” and offer a few highlights…

- Preconfigured Kits to Ease Deployment – Customized kits for XenDesktop user scenarios substantially ease operations by accelerating deployment times.

- Cisco Validated Design to Help Ensure Pretested Interoperability – To further simplify and accelerate customer implementations, a reference design architecture is now available based on extensive testing at scale conducted jointly by Cisco, Citrix and NetApp.

- Greater Density and Performance – Cisco UCS offers memory-extension technology that can deliver up to 60 percent greater virtual desktop density per server with no degradation in application performance.

- Any Device, Any Location – End users can access their corporate desktops and applications from any combination of PCs, Macs, laptops, thin clients, smartphones and tablets using the universal Citrix Receiver™ software client.

- Improved Security and Access Control – With centralization of desktop infrastructure, technologies like Cisco VN-Link and Citrix SmartAuditor help maintain data integrity and compliance.

If you want to sink your teeth into their enterprise offering, check out the details over here, and the press release here. Even though this is focused on making things ‘cost effective’, there are, conveniently, no price details. However, the press release does cite cost-saving components, energy efficiency and ease of scalability as the high points for saving cash. But we’ll let you, the reader, weigh in.

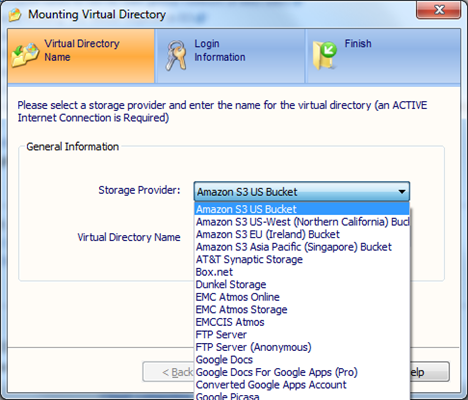

Jerry Huang describes Easy Desktop Access to Amazon S3 Bucket with the Gladinet Cloud Desktop in this 9/8/2010 post:

When people try to understand buckets for the first time, they think of them as top-level folders. That is partly right. You have your Amazon S3 account, and the first level of objects you see are these top-level containers: buckets.

As Amazon S3 grows in popularity, we need to take a closer look at buckets and understand their functionality beyond that of top-level “folders”.

A bucket name is part of a DNS host name, such as bucket.s3.amazonaws.com, so it has to be unique across all S3 buckets, and the host name resolves to the server that hosts the bucket. For this reason, we recommend that you name buckets according to DNS conventions, such as person.department.companyname. For example, the bucket name dev.gladinet becomes the DNS name dev.gladinet.s3.amazonaws.com, which uniquely identifies a development team’s shared bucket for Gladinet.
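The DNS-style naming advice can be checked mechanically. The following is a minimal sketch assuming a simplified version of S3’s DNS-compatible naming rules: 3 to 63 characters; dot-separated labels of lowercase letters, digits and hyphens that start and end with a letter or digit; and not formatted as an IPv4 address. The function names are hypothetical, and Amazon’s S3 documentation has the authoritative rule list. The host name is built in S3’s virtual-hosted style (bucket.s3.amazonaws.com) against the global endpoint; region-specific endpoints differ.

```python
import re

# One dot-separated label: lowercase letters, digits and hyphens,
# starting and ending with a letter or digit.
_LABEL = re.compile(r"[a-z0-9](?:[a-z0-9-]*[a-z0-9])?")
# Names that look like dotted-quad IPv4 addresses are not allowed.
_IPV4_LIKE = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def is_dns_compatible_bucket_name(name: str) -> bool:
    """Return True if `name` satisfies the simplified DNS-style rules."""
    if not 3 <= len(name) <= 63:
        return False
    if _IPV4_LIKE.fullmatch(name):
        return False
    # split(".") yields an empty label for leading, trailing or doubled dots,
    # and an empty label fails the label pattern.
    return all(_LABEL.fullmatch(label) for label in name.split("."))


def virtual_hosted_hostname(bucket: str) -> str:
    """Return the virtual-hosted-style host name on the global S3 endpoint."""
    if not is_dns_compatible_bucket_name(bucket):
        raise ValueError(f"not a DNS-compatible bucket name: {bucket!r}")
    return f"{bucket}.s3.amazonaws.com"


if __name__ == "__main__":
    print(virtual_hosted_hostname("dev.gladinet"))        # dev.gladinet.s3.amazonaws.com
    print(is_dns_compatible_bucket_name("Dev_Gladinet"))  # False
```

Rejecting a name up front, rather than letting the first request fail, keeps the person.department.companyname convention from silently producing a bucket that can’t be addressed by host name.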

For this reason, the Amazon S3 support in the new Gladinet Cloud Desktop has been changed to bucket-level support. You can mount an S3 bucket in Windows Explorer as a mapped drive for easy desktop access and backup.

First, use the Mount Virtual Directory wizard to pick the region that is best for you.

Next, fill in your Amazon S3 keys and the bucket name.

If you forget the bucket name, you can look it up in the AWS console.

Now your Amazon S3 Bucket is showing up in Windows Explorer, inside a mapped network drive.

Later, you can also set up a sync folder to sync a local folder to Amazon S3. All in all, it is fairly easy to access S3 with the bucket mounted as a network folder.

Stacey Higginbotham reported Digg Not Likely to Give Up on Cassandra in this 9/8/2010 post to the GigaOm blog:

Cassandra was a tragic figure in Greek myth. She could see the future and thus was able to foretell what was coming next (usually death and destruction). It’s no surprise that no one wanted her hanging around. It’s ironic that open-source NoSQL software of the same name has often found itself amid controversy. Today, Cassandra was blamed for scaling (and availability) problems at Digg, which led to the unconfirmed departure of Digg VP of Engineering John Quinn, who was a big champion of Cassandra at Digg.

This isn’t the first time Cassandra, which was created inside Facebook and later open-sourced, has taken a beating. Back in July, Twitter reversed its plans to move from MySQL to Cassandra for storing its tweets. Comments from Digg founder Kevin Rose as he tries to explain some problems on Digg’s new site aren’t helping Cassandra either. However, a call to Matt Pfeil, CEO of Riptano, an Austin, Texas-based startup, put things in perspective. Riptano is building its business providing service and eventually an easy-to-implement version of Cassandra for companies (see my video interview with Pfeil here.) Pfeil said that Riptano is working with Digg and noted that he would be “shocked” if Digg abandoned Cassandra.

When asked if the problems Digg has had with its upgrade stemmed from Cassandra, Pfeil said, “We’ve reached out to Digg to ID what those problems are. I don’t know the full extent of them, and am learning more from them about their situation. We know Cassandra can scale to levels that are equal to or greater than a Digg is putting on it and I have full faith in Cassandra, but there are these little knobs that need to be tuned and you have to know where they are.”

For Pfeil, this could be an opportunity, simply because helping find and turn “those little knobs” is what Riptano was formed to do. He said Riptano has been involved with Digg since around April, which was soon after Digg announced its plans to use Cassandra. While Digg may be able to blame Cassandra for some glitches, the database technology still seems to be on the upswing. Today, Quest, an enterprise software and database support company, decided to support Cassandra through a partnership with Riptano, and companies such as Cisco, Ooyala and Rackspace are also using it.

As Pfeil points out, Cassandra is still new, having been open-sourced in 2008. “Cassandra has come a long way, especially in the last year or so … there is a lot to be done before it is close to where it will compare in production environments to something like MySQL, but we’re getting close.” So maybe unlike the Greek prophetess, the database technology will be able to rehabilitate its reputation.

Related GigaOM Pro research (sub req’d): Report: NoSQL Databases – Providing Extreme Scale and Flexibility

Guy Rosen posted his State of the Cloud – September 2010 on 9/9/2010:

Summer’s over, September’s here, and that means it’s time for another monthly installment of the State of the Cloud report.

Snapshot for September 2010

Here are the results for this month.

This month Amazon regains the edge it lost last month, with solid 8% monthly growth. Rackspace, however, appears to take a hit this month, losing over 5% of the sites it hosts. Looks may be deceiving, though: there seems to have been a blip in the Quantcast data set used as input, which resulted in quite a number of sites dropping out of the top 500k completely. It’s not immediately clear why Rackspace would be hit more than others, but since most of these sites are now back in their former ranks, next month’s data should correct itself.

Trends

Coming up

It’s been a year since the groundbreaking Anatomy of an EC2 Resource ID research, which shed light on the volume of usage Amazon’s cloud is seeing. Later this month we’ll go back to see how much has changed in the past year. Stay tuned!

<Return to section navigation list>
